<!-- CATEGORIES -->
[[Category: gCube Spatial Data Infrastructure]]
<!-- CATEGORIES -->

{| align="right"
||__TOC__
|}

gCube Spatial Data Processing offers a rich array of data analytics methods via the OGC Web Processing Service (WPS).

== Overview ==

Geospatial Data Processing uses the OGC Web Processing Service (WPS) as a web interface that allows user processes to be deployed dynamically. The WPS chosen here is the [http://52north.org/communities/geoprocessing/wps/index.html 52° North WPS], which supports the development and deployment of user “algorithms”. It has been demonstrated that such algorithms can be developed to run on the powerful, distributed framework offered by [http://hadoop.apache.org/mapreduce/ Apache™ Hadoop™ MapReduce].

This is how '''''WPS-hadoop''''' was born.

== Key Features ==

WPS-hadoop offers a web interface through which external HTTP clients can access the algorithms. It uses the three kinds of request provided by the 52° North WPS:

* The '''GetCapabilities''' operation provides access to general information about a live WPS implementation. It also lists the operations and access methods that the implementation supports. The 52N WPS supports GetCapabilities via both HTTP GET and POST.
* The '''DescribeProcess''' operation allows WPS clients to request a full description of one or more processes that the service can execute. The description includes the input and output parameters and formats. It can be used to build a user interface automatically, to capture the parameter values needed to execute a process.
* The '''Execute''' operation allows WPS clients to run a specified process implemented by the server, using the input parameter values provided, and returns the output values produced. Inputs can be included directly in the Execute request or can reference web-accessible resources.
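Here is a minimal sketch of how an HTTP client could reach these three operations with KVP (HTTP GET) requests. The class name WpsKvpUrls and the endpoint passed to it are hypothetical. The parameter layout (Service, Version, Request, Identifier, and DataInputs as semicolon-separated key=value pairs) mirrors the KVP examples later on this page. Check it against your own server.

```java
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper that builds WPS 1.0.0 KVP (HTTP GET) URLs for the three
// operations. The endpoint is an assumption about your deployment, e.g.
// http://localhost:8080/wps/WebProcessingService
final class WpsKvpUrls {

    static String getCapabilities(String endpoint) {
        return endpoint + "?Service=WPS&Request=GetCapabilities";
    }

    static String describeProcess(String endpoint, String identifier) {
        return endpoint + "?Service=WPS&Version=1.0.0&Request=DescribeProcess&Identifier=" + identifier;
    }

    // DataInputs are written as key=value pairs separated by ';', as in the
    // Execute examples on this page. Values are used verbatim: a value that is
    // itself a URL may need to be encoded first.
    static String execute(String endpoint, String identifier, Map<String, String> dataInputs) {
        StringJoiner inputs = new StringJoiner(";");
        for (Map.Entry<String, String> e : dataInputs.entrySet()) {
            inputs.add(e.getKey() + "=" + e.getValue());
        }
        return endpoint + "?Request=Execute&Service=WPS&Version=1.0.0&Identifier="
                + identifier + "&DataInputs=" + inputs;
    }
}
```

Pass a LinkedHashMap when the order of the inputs in the URL matters.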

== Design ==

By extending the 52° North AbstractAlgorithm class, we created a new abstract class, HadoopAbstractAlgorithm. Its business logic, hidden from the developer, executes the process by creating a job for the Hadoop framework.

[[Image:blocks.png]]

=== Develop a custom process ===

A custom process class has to extend HadoopAbstractAlgorithm. This lets you specify:
* the Hadoop configuration parameters (e.g. from XML files);
* the Mapper and Reducer classes;
* the input paths and the output path;
* all the operations needed before running the process;
* the way the results are retrieved.

When using HadoopAbstractAlgorithm, you only need to implement the following methods:

* protected Class<? extends Mapper<?, ?, LongWritable, Text>> getMapper()
*: Returns the class to be used as the Mapper.
* protected Class<? extends Reducer<LongWritable, Text, ?, ?>> getReducer()
*: Returns the class to be used as the Reducer (if any).
* protected Path[] getInputPaths(Map<String, List<IData>> inputData)
*: Tells the business logic the exact input path(s) to pass to the Hadoop framework.
* protected String getOutputPath()
*: Tells the business logic the exact output path to pass to the Hadoop framework.
* protected Map buildResults()
*: Called by the business logic to build the output that the WPS expects.
* public void prepareToRun(Map<String, List<IData>> inputData)
*: Must contain all the operations to perform before running the Hadoop job (e.g. WPS input validation).
* protected JobConf getJobConf()
*: Lets the user specify all the configuration resources for the Hadoop framework (e.g. XML configuration files).

[[Image:HadoopAbstractAlgorithm.png]]
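The sketch below is a simplified, hypothetical model of how the hook methods above could fit together in a template-method design. It is not the HadoopAbstractAlgorithm source:
* plain strings stand in for the Hadoop and 52° North types (Mapper, Reducer, Path, IData, JobConf);
* getJobConf() is omitted;
* the job is only recorded, not submitted;
* the call order in run() is an assumption based on the method descriptions (prepareToRun before the job, buildResults after it).

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical, simplified model of the template-method flow described above.
// Strings stand in for Hadoop/52N types; this is NOT the real HadoopAbstractAlgorithm.
abstract class SketchHadoopAlgorithm {
    protected abstract String getMapper();
    protected abstract String getReducer(); // may return null: no reducer
    protected abstract String[] getInputPaths(Map<String, List<String>> inputData);
    protected abstract String getOutputPath();
    protected abstract Map<String, Object> buildResults();
    public abstract void prepareToRun(Map<String, List<String>> inputData);

    // Records the job description; a real implementation would configure
    // and submit a Hadoop job here instead.
    protected final List<String> submittedJob = new ArrayList<>();

    // Assumed order: prepare/validate, describe the job, "run" it, build outputs.
    public final Map<String, Object> run(Map<String, List<String>> inputData) {
        prepareToRun(inputData);
        submittedJob.add("mapper=" + getMapper());
        String reducer = getReducer();
        if (reducer != null) {
            submittedJob.add("reducer=" + reducer);
        }
        for (String in : getInputPaths(inputData)) {
            submittedJob.add("input=" + in);
        }
        submittedJob.add("output=" + getOutputPath());
        return buildResults();
    }
}

// Hypothetical concrete process, loosely modelled on the Bathymetry example.
final class DemoProcess extends SketchHadoopAlgorithm {
    private String coordinatesUrl;

    @Override protected String getMapper() { return "DemoMapper"; }
    @Override protected String getReducer() { return null; }
    @Override protected String[] getInputPaths(Map<String, List<String>> inputData) {
        return new String[] { coordinatesUrl };
    }
    @Override protected String getOutputPath() { return "/tmp/demo-output"; }
    @Override protected Map<String, Object> buildResults() {
        Map<String, Object> results = new LinkedHashMap<>();
        results.put("result", getOutputPath());
        return results;
    }
    // Input validation before the job: exactly one "coordinates" value.
    @Override public void prepareToRun(Map<String, List<String>> inputData) {
        List<String> coords = inputData.get("coordinates");
        if (coords == null || coords.size() != 1) {
            throw new IllegalArgumentException("exactly one 'coordinates' input is required");
        }
        coordinatesUrl = coords.get(0);
    }
}
```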

=== Deploy custom process ===

WPS-hadoop is deployed in a Tomcat container.

To deploy a newly developed process:

# Export the process as a JAR file.
# Copy the exported JAR into the WEB-INF/lib directory of the WPS web application.
# Restart Tomcat.
#:Next, register the newly created algorithm:
# Go to <nowiki>http://localhost:yourport/wps/</nowiki> (e.g. <nowiki>http://localhost:8080/wps/</nowiki>).
# Click on the 52n WPSAdmin console.
# Log in with:
#:* Username: wps
#:* Password: wps
#:The Web Admin Console lets you change the basic configuration of the WPS and upload processes.
# Click on Algorithm Repository --> Properties (the '+' sign).
# Click on the green '+' to register your process. Type ''Algorithm'' in the left field. In the right field, type the fully qualified class name of your class (i.e. package + class name, e.g. org.n52.wps.demo.ConvexHullDemo).
# Click on the save icon (the 'disk').
# Click on 'Save and Activate configuration' at the top left.
# Your new process is now available. Test it at <nowiki>http://localhost:yourport/wps/WebProcessingService?Request=GetCapabilities&Service=WPS</nowiki> or directly at <nowiki>http://localhost:yourport/wps/test.html</nowiki>.

N.B. Instead of step 1, you can first follow steps 4, 5 and 6, then click on Upload Process and select the .java file of your newly developed process. Then continue from step 2 onwards.
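The registration can also be checked programmatically. The sketch below assumes, as in the examples on this page, that a process is advertised under its fully qualified class name. It parses a WPS 1.0.0 GetCapabilities response with the JDK DOM parser and lists the ows:Identifier of each wps:Process. The class name CapabilitiesCheck is hypothetical, and fetching the document over HTTP is left out.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

// Lists the process identifiers advertised in a WPS 1.0.0 Capabilities document:
// the ows:Identifier of every wps:Process element.
final class CapabilitiesCheck {
    static final String WPS_NS = "http://www.opengis.net/wps/1.0.0";
    static final String OWS_NS = "http://www.opengis.net/ows/1.1";

    static List<String> processIdentifiers(String capabilitiesXml) {
        Document doc;
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // needed for the *NS lookups below
            doc = factory.newDocumentBuilder().parse(
                    new ByteArrayInputStream(capabilitiesXml.getBytes(StandardCharsets.UTF_8)));
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("not a parsable Capabilities document", e);
        }
        List<String> ids = new ArrayList<>();
        NodeList processes = doc.getElementsByTagNameNS(WPS_NS, "Process");
        for (int i = 0; i < processes.getLength(); i++) {
            Element process = (Element) processes.item(i);
            NodeList idNodes = process.getElementsByTagNameNS(OWS_NS, "Identifier");
            if (idNodes.getLength() > 0) {
                ids.add(idNodes.item(0).getTextContent().trim());
            }
        }
        return ids;
    }

    // True if the given class name is among the advertised process identifiers.
    static boolean isRegistered(String capabilitiesXml, String className) {
        return processIdentifiers(capabilitiesXml).contains(className);
    }
}
```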

= Some complete examples =

=== The Bathymetry Algorithm ===

This section describes in full how to use the WPS-hadoop library, using the example of retrieving bathymetry values from a netCDF file.
Given an input file containing a series of coordinate pairs, the algorithm generates a file of "z" (bathymetry) values, one for each x,y pair.

==== Class Diagram ====
[[Image:WPSClassDiagram.png|1500px]]

==== BathymetryAlgorithm.xml ====
This file must have exactly the same name as the corresponding .java file.

<source lang="xml">
<?xml version="1.0" encoding="UTF-8"?>
<wps:ProcessDescriptions xmlns:wps="http://www.opengis.net/wps/1.0.0" xmlns:ows="http://www.opengis.net/ows/1.1" xmlns:xlink="http://www.w3.org/1999/xlink"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.opengis.net/wps/1.0.0
    http://geoserver.itc.nl:8080/wps/schemas/wps/1.0.0/wpsDescribeProcess_response.xsd" xml:lang="en-US" service="WPS" version="1.0.0">
  <ProcessDescription wps:processVersion="1.0.0" storeSupported="true" statusSupported="false">
    <ows:Identifier>com.terradue.wps.BathymetryAlgorithm</ows:Identifier>
    <ows:Title>Bathymetry Algorithm</ows:Title>
    <ows:Abstract>by Hadoop</ows:Abstract>
    <ows:Metadata xlink:title="Bathymetry" />
    <DataInputs>
      <Input minOccurs="1" maxOccurs="1">
        <ows:Identifier>coordinates</ows:Identifier>
        <ows:Title>coordinatesFile</ows:Title>
        <ows:Abstract>URL to a file containing x,y parameters</ows:Abstract>
        <LiteralData>
          <ows:DataType ows:reference="xs:string"></ows:DataType>
          <ows:AnyValue/>
        </LiteralData>
      </Input>
      <Input minOccurs="1" maxOccurs="1">
        <ows:Identifier>InputFile</ows:Identifier>
        <ows:Title>InputFile</ows:Title>
        <ows:Abstract>URL to the netCDF file</ows:Abstract>
        <LiteralData>
          <ows:DataType ows:reference="xs:string"></ows:DataType>
          <ows:AnyValue/>
        </LiteralData>
      </Input>
    </DataInputs>
    <ProcessOutputs>
      <Output>
        <ows:Identifier>result</ows:Identifier>
        <ows:Title>result</ows:Title>
        <ows:Abstract>result</ows:Abstract>
        <LiteralOutput>
          <ows:DataType ows:reference="xs:string"/>
        </LiteralOutput>
      </Output>
    </ProcessOutputs>
  </ProcessDescription>
</wps:ProcessDescriptions>
</source>
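Since prepareToRun() is the place for WPS input validation, one option is to read the required inputs straight from this descriptor. The hypothetical helper below (ProcessDescriptionInputs is not part of WPS-hadoop) uses the JDK DOM parser. It maps each Input identifier to its minOccurs value and reports the required inputs missing from a request. It assumes, as in the file above, that the Input elements carry no namespace prefix. Treating a missing minOccurs as 1 is also an assumption.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

// Reads the <Input> declarations of a ProcessDescriptions file such as
// BathymetryAlgorithm.xml and reports required inputs missing from a request.
final class ProcessDescriptionInputs {
    static final String OWS_NS = "http://www.opengis.net/ows/1.1";

    // Maps each input identifier to its minOccurs (1 if the attribute is absent).
    static Map<String, Integer> minOccurs(String descriptionXml) {
        Document doc;
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            doc = factory.newDocumentBuilder().parse(
                    new ByteArrayInputStream(descriptionXml.getBytes(StandardCharsets.UTF_8)));
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("cannot parse process description", e);
        }
        Map<String, Integer> result = new LinkedHashMap<>();
        // "*" matches <Input> whatever its namespace (unqualified in these files).
        NodeList inputs = doc.getElementsByTagNameNS("*", "Input");
        for (int i = 0; i < inputs.getLength(); i++) {
            Element input = (Element) inputs.item(i);
            NodeList ids = input.getElementsByTagNameNS(OWS_NS, "Identifier");
            if (ids.getLength() == 0) {
                continue; // no identifier: skip this malformed Input
            }
            String min = input.getAttribute("minOccurs");
            result.put(ids.item(0).getTextContent().trim(), min.isEmpty() ? 1 : Integer.parseInt(min));
        }
        return result;
    }

    // Required inputs (minOccurs > 0) that are absent from `provided`.
    static List<String> missingRequired(String descriptionXml, Set<String> provided) {
        List<String> missing = new ArrayList<>();
        for (Map.Entry<String, Integer> e : minOccurs(descriptionXml).entrySet()) {
            if (e.getValue() > 0 && !provided.contains(e.getKey())) {
                missing.add(e.getKey());
            }
        }
        return missing;
    }
}
```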

==== Request examples ====

This is an example of how to request the execution of the BathymetryAlgorithm.

==== XML request example ====

<source lang="xml">
<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<wps:Execute service="WPS" version="1.0.0" xmlns:wps="http://www.opengis.net/wps/1.0.0" xmlns:ows="http://www.opengis.net/ows/1.1"
    xmlns:xlink="http://www.w3.org/1999/xlink" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.opengis.net/wps/1.0.0
    http://schemas.opengis.net/wps/1.0.0/wpsExecute_request.xsd">
  <ows:Identifier>com.terradue.wps_hadoop.examples.bathymetry.BathymetryAlgorithm</ows:Identifier>
  <wps:DataInputs>
    <wps:Input>
      <ows:Identifier>coordinates</ows:Identifier>
      <ows:Title>Coordinates file for Bathymetry</ows:Title>
      <wps:Data>
        <wps:LiteralData>http://t2-10-11-30-97.play.terradue.int:8888/wps/maps/coordinates</wps:LiteralData>
      </wps:Data>
    </wps:Input>
    <wps:Input>
      <ows:Identifier>InputFile</ows:Identifier>
      <ows:Title>netCDF file for Bathymetry</ows:Title>
      <wps:Data>
        <wps:LiteralData>http://opendap.deltares.nl/thredds/fileServer/opendap/deltares/delftdashboard/bathymetry/gebco_08/gebco08.nc</wps:LiteralData>
      </wps:Data>
    </wps:Input>
  </wps:DataInputs>
  <wps:ResponseForm>
    <wps:ResponseDocument storeExecuteResponse="false">
      <wps:Output asReference="false">
        <ows:Identifier>result</ows:Identifier>
      </wps:Output>
    </wps:ResponseDocument>
  </wps:ResponseForm>
</wps:Execute>
</source>

==== Input parameter description ====

'''coordinates''': URL of a file containing a series of x,y pairs (-180 < x < 180 and -90 < y < 90). Each line of the file is processed by a single mapper instance.

'''InputFile''': URL of the netCDF file from which the bathymetry values are read.

The coordinates file must have the following format:

<nowiki>
xa1 ya1 xa2 ya2 xa3 ya3 xa4 ya4 ... xan yan
xb1 yb1 xb2 yb2 xb3 yb3 xb4 yb4 ... xbm ybm
.
.
.
</nowiki>
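A mapper receives one line of the coordinates file at a time. The sketch below shows one way to turn such a line into x,y pairs and enforce the ranges given above. The class name is hypothetical. Treating any run of whitespace as the separator is an assumption based on the format shown.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper: parses one line of the coordinates file
// ("x1 y1 x2 y2 ...") into x,y pairs and checks the documented ranges.
final class CoordinateLineParser {

    static List<double[]> parse(String line) {
        String trimmed = line.trim();
        if (trimmed.isEmpty()) {
            return new ArrayList<>(); // blank line: nothing to process
        }
        String[] tokens = trimmed.split("\\s+");
        if (tokens.length % 2 != 0) {
            throw new IllegalArgumentException("odd number of values: expected x,y pairs");
        }
        List<double[]> pairs = new ArrayList<>();
        for (int i = 0; i < tokens.length; i += 2) {
            double x = Double.parseDouble(tokens[i]);
            double y = Double.parseDouble(tokens[i + 1]);
            // Strict bounds, as written in the parameter description.
            if (!(x > -180 && x < 180) || !(y > -90 && y < 90)) {
                throw new IllegalArgumentException("out of range: " + x + "," + y);
            }
            pairs.add(new double[] { x, y });
        }
        return pairs;
    }
}
```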

==== KVP (Key Value Pairs) request example ====
<nowiki>http://t2-10-11-30-97.play.terradue.int:8888/wps/WebProcessingService?Request=Execute&service=WPS&version=1.0.0&language=en-CA&Identifier=com.terradue.wps_hadoop.examples.bathymetry.BathymetryAlgorithm&DataInputs=coordinates=http://10.11.30.97:8888/wps/maps/coordinates;InputFile=http://opendap.deltares.nl/thredds/fileServer/opendap/deltares/delftdashboard/bathymetry/gebco_08/gebco08.nc</nowiki>

=== Resampler Algorithm ===

==== Class Diagram ====
[[Image:ResamplerAlgorithm.png|1500px]]

==== ResamplerAlgorithm.xml ====

<source lang="xml">
<?xml version="1.0" encoding="UTF-8"?>
<wps:ProcessDescriptions xmlns:wps="http://www.opengis.net/wps/1.0.0" xmlns:ows="http://www.opengis.net/ows/1.1" xmlns:xlink="http://www.w3.org/1999/xlink"
    xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.opengis.net/wps/1.0.0
    http://geoserver.itc.nl:8080/wps/schemas/wps/1.0.0/wpsDescribeProcess_response.xsd" xml:lang="en-US" service="WPS" version="1.0.0">
  <ProcessDescription wps:processVersion="1.0.0" storeSupported="true" statusSupported="false">
    <ows:Identifier>com.terradue.wps_hadoop.examples.resampler.ResamplerAlgorithm</ows:Identifier>
    <ows:Title>Resampler Algorithm</ows:Title>
    <ows:Abstract>by Hadoop</ows:Abstract>
    <ows:Metadata xlink:title="resampler" />
    <DataInputs>
      <Input minOccurs="1" maxOccurs="1">
        <ows:Identifier>wcs_url</ows:Identifier>
        <ows:Title>wcs_url</ows:Title>
        <ows:Abstract>wcs_url</ows:Abstract>
        <LiteralData>
          <ows:DataType ows:reference="xs:string"></ows:DataType>
          <ows:AnyValue/>
        </LiteralData>
      </Input>
      <Input minOccurs="1" maxOccurs="1">
        <ows:Identifier>resolution</ows:Identifier>
        <ows:Title>resolution</ows:Title>
        <ows:Abstract>resolution</ows:Abstract>
        <LiteralData>
          <ows:DataType ows:reference="xs:string"></ows:DataType>
          <ows:AnyValue/>
        </LiteralData>
      </Input>
    </DataInputs>
    <ProcessOutputs>
      <Output>
        <ows:Identifier>result</ows:Identifier>
        <ows:Title>result</ows:Title>
        <ows:Abstract>result</ows:Abstract>
        <LiteralOutput>
          <ows:DataType ows:reference="xs:string"/>
        </LiteralOutput>
      </Output>
    </ProcessOutputs>
  </ProcessDescription>
</wps:ProcessDescriptions>
</source>

==== Request examples ====

This is an example of how to request the execution of the ResamplerAlgorithm.

==== XML request example ====

<source lang="xml">
<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<wps:Execute service="WPS" version="1.0.0" xmlns:wps="http://www.opengis.net/wps/1.0.0" xmlns:ows="http://www.opengis.net/ows/1.1"
    xmlns:xlink="http://www.w3.org/1999/xlink" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.opengis.net/wps/1.0.0
    http://schemas.opengis.net/wps/1.0.0/wpsExecute_request.xsd">
  <ows:Identifier>com.terradue.wps_hadoop.examples.resampler.ResamplerAlgorithm</ows:Identifier>
  <wps:DataInputs>
    <wps:Input>
      <ows:Identifier>wcs_url</ows:Identifier>
      <ows:Title>WCS product's URL to be resampled</ows:Title>
      <wps:Data>
        <!-- '&' must be escaped as '&amp;' inside XML text -->
        <wps:LiteralData>http://t2-10-11-30-98.play.terradue.int:8080/thredds/wcs/maps/SST_MED_SST_L4_NRT_OBSERVATIONS_010_004_c_2011-11-03_2011-11-04.nc?service=WCS&amp;version=1.0.0&amp;request=GetCoverage&amp;COVERAGE=analysed_sst&amp;bbox=-18,20,36,45&amp;width=100&amp;height=100&amp;format=geotiff</wps:LiteralData>
      </wps:Data>
    </wps:Input>
    <wps:Input>
      <ows:Identifier>resolution</ows:Identifier>
      <ows:Title>Desired resolution of the resampled product (in degrees)</ows:Title>
      <wps:Data>
        <wps:LiteralData>0.01666923868312760</wps:LiteralData>
      </wps:Data>
    </wps:Input>
  </wps:DataInputs>
  <wps:ResponseForm>
    <wps:ResponseDocument storeExecuteResponse="false">
      <wps:Output asReference="false">
        <ows:Identifier>result</ows:Identifier>
      </wps:Output>
    </wps:ResponseDocument>
  </wps:ResponseForm>
</wps:Execute>
</source>

==== Input parameter description ====

'''wcs_url''': URL of a WCS to be queried to obtain a Coverage.

'''resolution''': the desired resolution, in degrees, of the downloaded Coverage.

==== KVP (Key Value Pairs) request example ====
<nowiki>http://t2-10-11-30-97.play.terradue.int:8888/wps/WebProcessingService?Request=Execute&service=WPS&version=1.0.0&language=en-CA&Identifier=com.terradue.wps_hadoop.examples.resampler.ResamplerAlgorithm&DataInputs=wcs_url=http://t2-10-11-30-98.play.terradue.int:8080/thredds/wcs/maps/SST_MED_SST_L4_NRT_OBSERVATIONS_010_004_c_2011-11-03_2011-11-04.nc?service=WCS&version=1.0.0&request=GetCoverage&COVERAGE=analysed_sst&bbox=-18,20,36,45&width=100&height=100&format=geotiff;resolution=0.01666923868312760</nowiki>

'''Before submitting this kind of request, you need to encode it as follows:'''

<nowiki>http://t2-10-11-30-97.play.terradue.int:8888/wps/WebProcessingService?Request=Execute&service=WPS&version=1.0.0&language=en-CA&Identifier=com.terradue.wps_hadoop.examples.resampler.ResamplerAlgorithm&DataInputs=wcs_url=http://t2-10-11-30-98.play.terradue.int:8080/thredds/wcs/maps/SST_MED_SST_L4_NRT_OBSERVATIONS_010_004_c_2011-11-03_2011-11-04.nc?service=WCS%25version=1.0.0%25request=GetCoverage%25COVERAGE=analysed_sst%25bbox=-18,20,36,45%25width=100%25height=100%25format=geotiff;resolution=0.01666923868312760</nowiki>

==== More information ====

To exploit the real potential of Hadoop, several requests should be sent to the WPS concurrently.
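Here is a minimal sketch of sending several requests at once with a thread pool. The class and method names are hypothetical. The function that performs each request is passed in, so the concurrency logic can be tried without a live WPS. The httpGet helper uses the JDK 11+ HttpClient and expects already-encoded KVP URLs.

```java
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.util.ArrayList;
import java.util.List;
import java.util.concurrent.CompletableFuture;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.function.Function;

// Sends several WPS requests concurrently, so that more than one Hadoop job
// can be in flight at the same time.
final class ConcurrentWpsRequests {

    // Applies `send` to every URL on a fixed-size pool and returns the
    // responses in the same order as `requestUrls`.
    static List<String> sendAll(List<String> requestUrls, Function<String, String> send, int threads) {
        ExecutorService pool = Executors.newFixedThreadPool(threads);
        try {
            List<CompletableFuture<String>> pending = new ArrayList<>();
            for (String url : requestUrls) {
                pending.add(CompletableFuture.supplyAsync(() -> send.apply(url), pool));
            }
            List<String> responses = new ArrayList<>();
            for (CompletableFuture<String> f : pending) {
                responses.add(f.join()); // blocks until this request has completed
            }
            return responses;
        } finally {
            pool.shutdown();
        }
    }

    // Plain HTTP GET for an (already encoded) KVP request URL.
    static String httpGet(HttpClient client, String url) {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        try {
            return client.send(request, HttpResponse.BodyHandlers.ofString()).body();
        } catch (IOException e) {
            throw new RuntimeException("request failed: " + url, e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new RuntimeException("interrupted: " + url, e);
        }
    }
}
```

Against a real server, a call could look like `sendAll(urls, u -> httpGet(client, u), 4)` with `client = HttpClient.newHttpClient()`. The pool size of 4 is arbitrary.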

=== Intersection Algorithm ===

==== Class Diagram ====
[[Image:IntersectionAlgorithm.png|500px]]

==== IntersectionHadoopAlgorithm.xml ====

<source lang="xml">
<?xml version="1.0" encoding="UTF-8"?>
<wps:ProcessDescriptions xmlns:wps="http://www.opengis.net/wps/1.0.0" xmlns:ows="http://www.opengis.net/ows/1.1" xmlns:xlink="http://www.w3.org/1999/xlink" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.opengis.net/wps/1.0.0
    http://geoserver.itc.nl:8080/wps/schemas/wps/1.0.0/wpsDescribeProcess_response.xsd" xml:lang="en-US" service="WPS" version="1.0.0">
  <ProcessDescription wps:processVersion="1.0.0" storeSupported="true" statusSupported="false">
    <ows:Identifier>com.terradue.wps_hadoop.examples.IntersectionAlgorithm</ows:Identifier>
    <ows:Title>Intersection Algorithm</ows:Title>
    <ows:Abstract>Calculate Intersection Feature</ows:Abstract>
    <ows:Metadata xlink:title="Intersection" />
    <DataInputs>
      <Input minOccurs="1" maxOccurs="1">
        <ows:Identifier>Polygon1</ows:Identifier>
        <ows:Title>Polygon1</ows:Title>
        <ows:Abstract>Polygon 1 URL</ows:Abstract>
        <LiteralData>
          <ows:DataType ows:reference="xs:string"></ows:DataType>
          <ows:AnyValue/>
        </LiteralData>
      </Input>
      <Input minOccurs="1" maxOccurs="1">
        <ows:Identifier>Polygon2</ows:Identifier>
        <ows:Title>Polygon2</ows:Title>
        <ows:Abstract>Polygon 2 URL</ows:Abstract>
        <LiteralData>
          <ows:DataType ows:reference="xs:string"></ows:DataType>
          <ows:AnyValue/>
        </LiteralData>
      </Input>
    </DataInputs>
    <ProcessOutputs>
      <Output>
        <ows:Identifier>result</ows:Identifier>
        <ows:Title>result</ows:Title>
        <ows:Abstract>result</ows:Abstract>
        <LiteralOutput>
          <ows:DataType ows:reference="xs:string"/>
        </LiteralOutput>
      </Output>
    </ProcessOutputs>
  </ProcessDescription>
</wps:ProcessDescriptions>
</source>

==== Request examples ====

This is an example of how to request the execution of the IntersectionHadoopAlgorithm.

==== XML request example ====

<source lang="xml">
<?xml version="1.0" encoding="UTF-8" standalone="yes"?>
<wps:Execute service="WPS" version="1.0.0" xmlns:wps="http://www.opengis.net/wps/1.0.0" xmlns:ows="http://www.opengis.net/ows/1.1"
    xmlns:xlink="http://www.w3.org/1999/xlink" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.opengis.net/wps/1.0.0
    http://schemas.opengis.net/wps/1.0.0/wpsExecute_request.xsd">
  <ows:Identifier>com.terradue.wps_hadoop.examples.intersection.IntersectionHadoopAlgorithm</ows:Identifier>
  <wps:DataInputs>
    <wps:Input>
      <ows:Identifier>Polygon1</ows:Identifier>
      <ows:Title>First polygon to be intersected</ows:Title>
      <wps:Data>
        <!-- '&' must be escaped as '&amp;' inside XML text -->
        <wps:LiteralData>http://www.fao.org/figis/geoserver/ows?service=WFS&amp;version=1.0.0&amp;request=GetFeature&amp;typeName=fifao:FAO_MAJOR&amp;CQL_FILTER=fifao:F_AREA=21</wps:LiteralData>
      </wps:Data>
    </wps:Input>
    <wps:Input>
      <ows:Identifier>Polygon2</ows:Identifier>
      <ows:Title>Second Polygon to be intersected</ows:Title>
      <wps:Data>
        <wps:LiteralData>http://geo.vliz.be/geoserver/Marbound/ows?service=WFS&amp;version=1.0.0&amp;request=GetFeature&amp;typeName=Marbound:eez&amp;CQL_FILTER%3DINTERSECTS%28Marbound%3Athe_geom%2CPOLYGON%28%28-82.410003662+34.830001831%2C-42+34.830001831%2C-42+78.166666031%2C-82.410003662+78.166666031%2C-82.410003662+34.830001831%29%29%29</wps:LiteralData>
      </wps:Data>
    </wps:Input>
  </wps:DataInputs>
  <wps:ResponseForm>
    <wps:ResponseDocument storeExecuteResponse="false">
      <wps:Output asReference="false">
        <ows:Identifier>result</ows:Identifier>
      </wps:Output>
    </wps:ResponseDocument>
  </wps:ResponseForm>
</wps:Execute>
</source>

==== Input parameter description ====

'''Polygon1''' and '''Polygon2''': URLs of WFS requests, each returning a feature that contains a valid geometry.

==== KVP (Key Value Pairs) request example ====
<nowiki>http://t2-10-11-30-97.play.terradue.int:8888/wps/WebProcessingService?Request=Execute&service=WPS&version=1.0.0&language=en-CA&Identifier=com.terradue.wps_hadoop.examples.intersection.IntersectionHadoopAlgorithm&DataInputs=Polygon1=http://www.fao.org/figis/geoserver/ows?service=WFS&version=1.0.0&request=GetFeature&typeName=fifao:FAO_MAJOR&CQL_FILTER=fifao:F_AREA=21;Polygon2=http://geo.vliz.be/geoserver/Marbound/ows?service=WFS&version=1.0.0&request=GetFeature&typeName=Marbound:eez&CQL_FILTER%3DINTERSECTS%28Marbound%3Athe_geom%2CPOLYGON%28%28-82.410003662+34.830001831%2C-42+34.830001831%2C-42+78.166666031%2C-82.410003662+78.166666031%2C-82.410003662+34.830001831%29%29%29</nowiki>

'''Before submitting this kind of request, you need to encode it as follows:'''

<nowiki>http://localhost:8888/wps/WebProcessingService?Request=Execute&service=WPS&version=1.0.0&language=en-CA&Identifier=com.terradue.wps_hadoop.examples.intersection.IntersectionHadoopAlgorithm&DataInputs=Polygon1=http%3A%2F%2Fwww.fao.org%2Ffigis%2Fgeoserver%2Fows%3Fservice%3DWFS%26version%3D1.0.0%26request%3DGetFeature%26typeName%3Dfifao%3AFAO_MAJOR%26CQL_FILTER%3Dfifao%3AF_AREA%3D21;Polygon2=http%3A%2F%2Fgeo.vliz.be%2Fgeoserver%2FMarbound%2Fows%3Fservice%3DWFS%26version%3D1.0.0%26request%3DGetFeature%26typeName%3DMarbound%3Aeez%26CQL_FILTER=INTERSECTS%28Marbound%3Athe_geom%2CPOLYGON%28%28-82.410003662+34.830001831%2C-42+34.830001831%2C-42+78.166666031%2C-82.410003662+78.166666031%2C-82.410003662+34.830001831%29%29%29</nowiki>
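The encoding of the Polygon1 value can be reproduced with the JDK's URLEncoder. The sketch below (DataInputsEncoder is a hypothetical name) percent-encodes each DataInputs value and joins the pairs with ';'. For the raw Polygon1 URL it yields the encoded value shown above.

It should be applied to raw, unencoded values. A value that is already partly percent-encoded, like the Polygon2 URL, would have its '%' signs encoded again. The Resampler example also uses a different scheme (%25 in place of '&'). Check which form your server expects.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.StringJoiner;

// Percent-encodes each DataInputs value (e.g. a nested WFS URL) so that its
// '&', '=', '?' and ':' characters are not read as part of the outer KVP
// request. Keys and the ';' separator are left unchanged.
final class DataInputsEncoder {

    static String encode(Map<String, String> dataInputs) {
        StringJoiner joined = new StringJoiner(";");
        for (Map.Entry<String, String> e : dataInputs.entrySet()) {
            // application/x-www-form-urlencoded rules: space -> '+', reserved chars -> %XX
            joined.add(e.getKey() + "=" + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8));
        }
        return joined.toString();
    }
}
```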

==== More information ====

This algorithm adapts the original IntersectionAlgorithm developed by 52° North to the WPS-hadoop framework. The template was shared and discussed with FAO (Emmanuel Blondel) so that the community can improve it according to its requirements (e.g. computing the area of the intersections).

=== TIFFUploader Algorithm ===

==== Overview ====

Another algorithm was developed to provide a visualization library built on a GeoServer WMS (Web Map Service). It uploads a netCDF file as a new workspace and each of its bands as a new layer.

==== Class Diagram ====
[[Image:TIFFUploaderAlgorithm.png|500px]]

==== Geotiff Uploader library ====

The TIFFUploader algorithm relies on the [http://www.gdal.org/ GDAL native library] through GeoTIFF Uploader, software developed by Terradue that uses the GDAL [http://gdal.org/java/ Java bindings].
GeoTIFF Uploader first downloads a netCDF file to the local filesystem and splits it into one GeoTIFF file per data band. It then uploads these files to the GeoServer instance as layers of a new workspace created from the netCDF file itself. Control then returns to the WPS-hadoop algorithm.

'''Release note:''' since GeoTIFF Uploader is based on the GDAL Java bindings, it must be delivered with the dependencies that match the architecture that will run the application. For this purpose, a separate Maven profile was created for each of the most popular architectures:

* linux-i386
* linux-x86_64
* mac-x86_64
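To choose which profile to pass to Maven with -P, a hypothetical helper like the one below could map a JVM's os.name and os.arch system properties onto the three profile names listed above. The mapping is an assumption, not part of the build. Any other platform (e.g. aarch64) returns null, since no listed profile covers it.

```java
import java.util.Locale;

// Hypothetical helper: suggests which of the listed Maven profiles
// (linux-i386, linux-x86_64, mac-x86_64) matches a given os.name / os.arch.
final class GdalProfileChooser {

    static String profileFor(String osName, String osArch) {
        String os = osName.toLowerCase(Locale.ROOT);
        String arch = osArch.toLowerCase(Locale.ROOT);
        // 64-bit Linux JVMs usually report "amd64", macOS JVMs "x86_64".
        boolean is64 = arch.equals("amd64") || arch.equals("x86_64");
        boolean is32 = arch.equals("x86") || arch.matches("i[3-6]86");
        if (os.startsWith("linux") && is64) return "linux-x86_64";
        if (os.startsWith("linux") && is32) return "linux-i386";
        if (os.startsWith("mac") && is64) return "mac-x86_64";
        return null; // no matching profile among the three listed
    }

    static String profileForThisJvm() {
        return profileFor(System.getProperty("os.name"), System.getProperty("os.arch"));
    }
}
```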

==== TIFFUploaderAlgorithm.xml ====

<source lang="xml">
<?xml version="1.0" encoding="UTF-8"?>
<wps:ProcessDescriptions xmlns:wps="http://www.opengis.net/wps/1.0.0" xmlns:ows="http://www.opengis.net/ows/1.1" xmlns:xlink="http://www.w3.org/1999/xlink" xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance" xsi:schemaLocation="http://www.opengis.net/wps/1.0.0
    http://geoserver.itc.nl:8080/wps/schemas/wps/1.0.0/wpsDescribeProcess_response.xsd" xml:lang="en-US" service="WPS" version="1.0.0">
  <ProcessDescription wps:processVersion="1.0.0" storeSupported="true" statusSupported="false">
    <ows:Identifier>com.terradue.wps.ResamplerAlgorithm</ows:Identifier>
    <ows:Title>TIFF Uploader Algorithm</ows:Title>
    <ows:Abstract>by Hadoop</ows:Abstract>
    <ows:Metadata xlink:title="TIFF Uploader" />
    <DataInputs>
      <Input minOccurs="1" maxOccurs="1">
        <ows:Identifier>InputFile</ows:Identifier>
        <ows:Title>Input File</ows:Title>
        <ows:Abstract>Input file containing references to netCDF resources</ows:Abstract>
        <LiteralData>
          <ows:DataType ows:reference="xs:string"></ows:DataType>
          <ows:AnyValue/>
        </LiteralData>
      </Input>
    </DataInputs>
    <ProcessOutputs>
      <Output>
        <ows:Identifier>result</ows:Identifier>
        <ows:Title>result</ows:Title>
        <ows:Abstract>result</ows:Abstract>
        <LiteralOutput>
          <ows:DataType ows:reference="xs:string"/>
        </LiteralOutput>
      </Output>
    </ProcessOutputs>
  </ProcessDescription>
</wps:ProcessDescriptions>
</source>

==== Input/Output description ====

The input for this WPS-hadoop algorithm, called '''InputFile''', is a file containing a list of URLs to netCDF files. These files are stored locally before being split into new GeoTIFF files, one per band in each input file.
Each line of the InputFile is processed by a separate mapper instance.
When all the processes have finished, a GetCapabilities request is sent to the GeoServer instance. Its response is used as the output (by reference) of the whole WPS process.

= The generic WPS-Hadoop Client =

| | == Overview == | | == Overview == |

| | + | Geospatial Data Processing takes advantage of the OGC Web Processing Service (WPS) as web interface. |

| | + | It is implemented by relying on the [[Data Mining Facilities | gCube platform for data analytics]]. |

| | | | |

| − | In order to better test both the present and future Algorithms, a simple Java WPS-Client was developed. It also will be very useful to allow external services/client to exploit WPS side of projects.

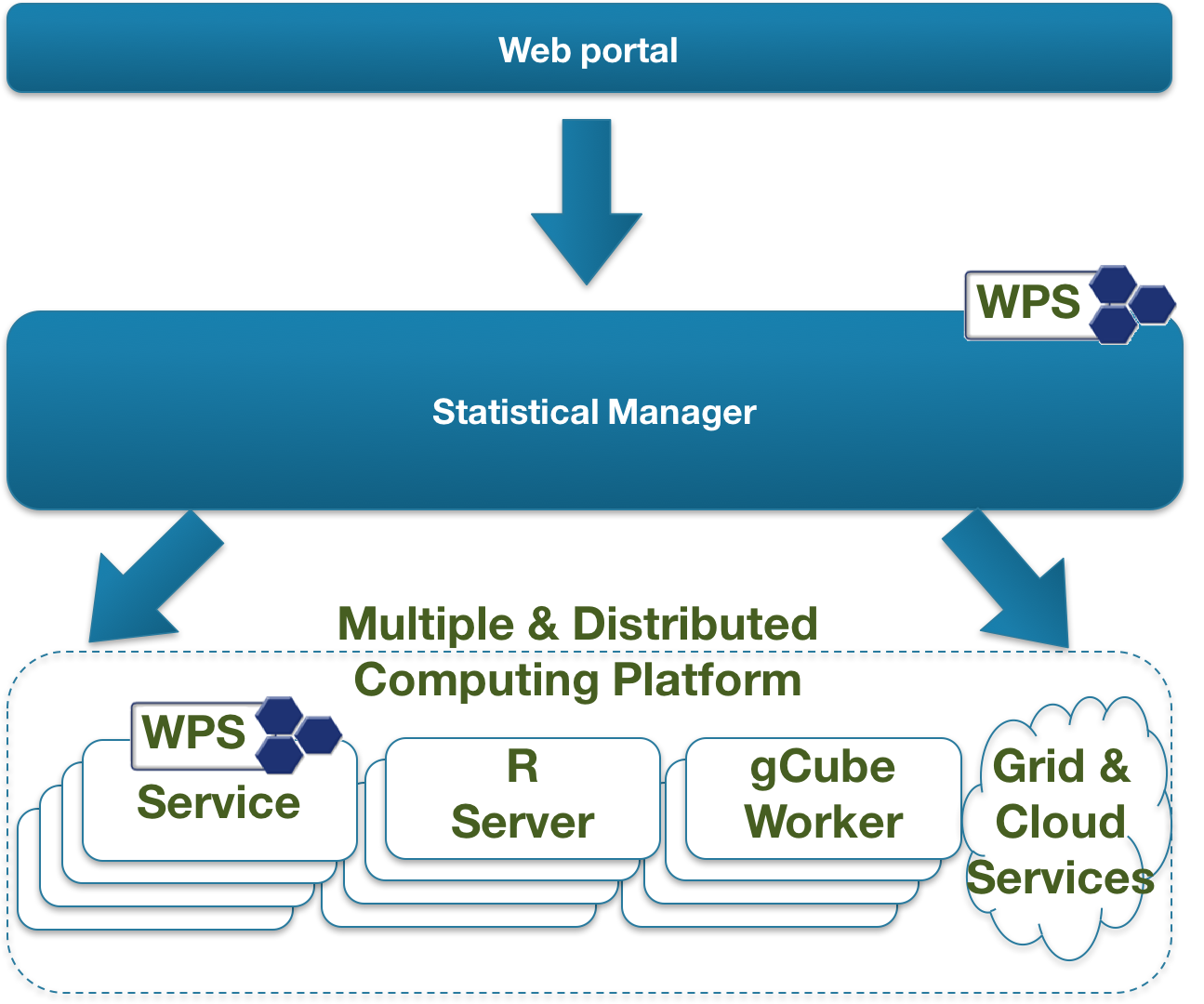

| + | [[File:Spatial_Data_Processing.png|400px|Overall Architecture]] |

| − | This client is based on the 52 North Java Client API, containing some convenient classes to interact with WPS.

| + | |

| − | In the package called "demo", you can find an example of use of this client with IntersectionAlgorithm.

| + | |

| | | | |

| − | Below in detail some snippets from this class.

| + | == Key Features == |

| − | | + | |

| − | == GetCapabilities == | + | |

| − | | + | |

| − | This section shows you how you can request a WPS Capabilities document and how to handle the response.

| + | |

| − | | + | |

| − | <source lang=java>

| + | |

| − | public CapabilitiesDocument requestGetCapabilities(String url)

| + | |

| − | throws WPSClientException {

| + | |

| − | | + | |

| − | WPSClientSession wpsClient = WPSClientSession.getInstance();

| + | |

| − | | + | |

| − | wpsClient.connect(url);

| + | |

| − | | + | |

| − | CapabilitiesDocument capabilities = wpsClient.getWPSCaps(url);

| + | |

| − | | + | |

| − | ProcessBriefType[] processList = capabilities.getCapabilities()

| + | |

| − | .getProcessOfferings().getProcessArray();

| + | |

| − | | + | |

| − | for (ProcessBriefType process : processList) {

| + | |

| − | System.out.println(process.getIdentifier().getStringValue());

| + | |

| − | }

| + | |

| − | return capabilities;

| + | |

| − | }

| + | |

| − | </source>

| + | |
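For readers without the 52°North client library on the classpath, the same GetCapabilities exchange can be sketched with the JDK alone. This is a minimal sketch, not part of the WPS-Hadoop client: it assumes the WPS 1.0.0 key-value-pair (KVP) encoding and the standard WPS 1.0.0 and OWS 1.1 namespaces, and the class and method names are hypothetical. It builds the request URL and extracts the process identifiers from a Capabilities document, mirroring the loop over `getProcessArray()` above.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

public class CapabilitiesSketch {
    static final String WPS_NS = "http://www.opengis.net/wps/1.0.0";
    static final String OWS_NS = "http://www.opengis.net/ows/1.1";

    // KVP-encoded GetCapabilities request (assumed WPS 1.0.0 encoding).
    public static String getCapabilitiesUrl(String endpoint) {
        return endpoint + "?service=WPS&request=GetCapabilities";
    }

    // Returns the identifier of every wps:Process listed under ProcessOfferings.
    public static List<String> processIds(String capabilitiesXml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // needed for the *NS lookups below
            Document doc = factory.newDocumentBuilder().parse(
                    new ByteArrayInputStream(capabilitiesXml.getBytes(StandardCharsets.UTF_8)));
            NodeList processes = doc.getElementsByTagNameNS(WPS_NS, "Process");
            List<String> ids = new ArrayList<>();
            for (int i = 0; i < processes.getLength(); i++) {
                Element process = (Element) processes.item(i);
                NodeList idNodes = process.getElementsByTagNameNS(OWS_NS, "Identifier");
                if (idNodes.getLength() > 0) {
                    ids.add(idNodes.item(0).getTextContent().trim());
                }
            }
            return ids;
        } catch (Exception e) {
            throw new IllegalArgumentException("Not a parsable Capabilities document", e);
        }
    }
}
```

Fetching `getCapabilitiesUrl(...)` with any HTTP client and passing the body to `processIds` should list the same identifiers that `requestGetCapabilities` prints, assuming the server answers with a WPS 1.0.0 document.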

| − | | + | |

| − | == DescribeProcess ==

| + | |

| − | | + | |

| − | This section shows how to request a WPS DescribeProcess document and how to handle the response.

| + | |

| − | | + | |

| − | <source lang=java>

| + | |

| − | public ProcessDescriptionType requestDescribeProcess(String url,

| + | |

| − | String processID) throws IOException {

| + | |

| − | | + | |

| − | WPSClientSession wpsClient = WPSClientSession.getInstance();

| + | |

| − | | + | |

| − | ProcessDescriptionType processDescription = wpsClient

| + | |

| − | .getProcessDescription(url, processID);

| + | |

| − | | + | |

| − | InputDescriptionType[] inputList = processDescription.getDataInputs()

| + | |

| − | .getInputArray();

| + | |

| − | | + | |

| − | for (InputDescriptionType input : inputList) {

| + | |

| − | System.out.println(input.getIdentifier().getStringValue());

| + | |

| − | }

| + | |

| − | return processDescription;

| + | |

| − | }

| + | |

| − | </source>

| + | |
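A DescribeProcess response can be inspected the same way with the JDK alone. The sketch below is hypothetical and assumes the WPS 1.0.0 KVP encoding (Java 10 or later for `URLEncoder.encode(String, Charset)`); it builds the request URL and maps each input identifier to its `minOccurs` value, the attribute that `executeProcess` uses to decide whether an input is mandatory. Elements are matched by local name only, because in WPS 1.0.0 responses elements such as `Input` are usually unqualified while `Identifier` is in the OWS namespace.

```java
import java.io.ByteArrayInputStream;
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;

public class DescribeProcessSketch {

    // KVP-encoded DescribeProcess request for one process (assumed WPS 1.0.0 encoding).
    public static String describeProcessUrl(String endpoint, String processId) {
        return endpoint + "?service=WPS&version=1.0.0&request=DescribeProcess&identifier="
                + URLEncoder.encode(processId, StandardCharsets.UTF_8);
    }

    // Maps each input identifier to its minOccurs (0 = optional), in document order.
    public static Map<String, Integer> inputs(String describeXml) {
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // makes getLocalName() available
            Document doc = factory.newDocumentBuilder().parse(
                    new ByteArrayInputStream(describeXml.getBytes(StandardCharsets.UTF_8)));
            Map<String, Integer> result = new LinkedHashMap<>();
            NodeList all = doc.getElementsByTagName("*"); // every element, any namespace
            for (int i = 0; i < all.getLength(); i++) {
                Element e = (Element) all.item(i);
                if (!"Input".equals(e.getLocalName())) {
                    continue;
                }
                String minOccurs = e.getAttribute("minOccurs");
                // Assumption: treat a missing minOccurs as mandatory (1).
                result.put(childText(e, "Identifier"),
                        minOccurs.isEmpty() ? 1 : Integer.parseInt(minOccurs));
            }
            return result;
        } catch (Exception ex) {
            throw new IllegalArgumentException("Not a parsable DescribeProcess document", ex);
        }
    }

    // Text of the first direct child element with the given local name, or null.
    private static String childText(Element parent, String localName) {
        for (Node n = parent.getFirstChild(); n != null; n = n.getNextSibling()) {
            if (n.getNodeType() == Node.ELEMENT_NODE && localName.equals(n.getLocalName())) {
                return n.getTextContent().trim();
            }
        }
        return null;
    }
}
```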

| − | | + | |

| − | == Execute ==

| + | |

| − | | + | |

| − | This section shows how to execute a WPS process and how to handle the response (in the case of IntersectionAlgorithm).

| + | |

| − | The execution is a bit more complicated than the GetCapabilities and DescribeProcess operations.

| + | |

| − | | + | |

| − | <source lang=java>

| + | |

| − | public ExecuteResponseAnalyser executeProcess(String url, String processID,

| + | |

| − | ProcessDescriptionType processDescription,

| + | |

| − | HashMap<String, Object> inputs) throws Exception {

| + | |

| − | ExecuteRequestBuilder executeBuilder = new ExecuteRequestBuilder(

| + | |

| − | processDescription);

| + | |

| − | | + | |

| − | for (InputDescriptionType input : processDescription.getDataInputs()

| + | |

| − | .getInputArray()) {

| + | |

| − | String inputName = input.getIdentifier().getStringValue();

| + | |

| − | Object inputValue = inputs.get(inputName);

| + | |

| − | if (input.getLiteralData() != null) {

| + | |

| − | if (inputValue instanceof String) {

| + | |

| − | executeBuilder.addLiteralData(inputName,

| + | |

| − | (String) inputValue);

| + | |

| − | }

| + | |

| − | } else if (input.getComplexData() != null) {

| + | |

| − | // Complexdata by value

| + | |

| − | if (inputValue instanceof FeatureCollection) {

| + | |

| − | IData data = new GTVectorDataBinding(

| + | |

| − | (FeatureCollection) inputValue);

| + | |

| − | executeBuilder

| + | |

| − | .addComplexData(

| + | |

| − | inputName,

| + | |

| − | data,

| + | |

| − | "http://schemas.opengis.net/gml/3.1.1/base/feature.xsd",

| + | |

| − | "UTF-8", "text/xml");

| + | |

| − | }

| + | |

| − | // Complexdata Reference

| + | |

| − | if (inputValue instanceof String) {

| + | |

| − | executeBuilder

| + | |

| − | .addComplexDataReference(

| + | |

| − | inputName,

| + | |

| − | (String) inputValue,

| + | |

| − | "http://schemas.opengis.net/gml/3.1.1/base/feature.xsd",

| + | |

| − | "UTF-8", "text/xml");

| + | |

| − | }

| + | |

| − | }

| + | |

| − | // Mandatory-input check, applied to literal and complex inputs alike

| + | |

| − | if (inputValue == null && input.getMinOccurs().intValue() > 0) {

| + | |

| − | throw new IOException("Property not set, but mandatory: "

| + | |

| − | + inputName);

| + | |

| − | }

| + | |

| − | }

| + | |

| − | executeBuilder.setMimeTypeForOutput("text/xml", "result");

| + | |

| − | executeBuilder.setSchemaForOutput(

| + | |

| − | "http://schemas.opengis.net/gml/3.1.1/base/feature.xsd",

| + | |

| − | "result");

| + | |

| − | ExecuteDocument execute = executeBuilder.getExecute();

| + | |

| − | execute.getExecute().setService("WPS");

| + | |

| − | WPSClientSession wpsClient = WPSClientSession.getInstance();

| + | |

| − | Object responseObject = wpsClient.execute(url, execute);

| + | |

| − | if (responseObject instanceof ExecuteResponseDocument) {

| + | |

| − | ExecuteResponseDocument response = (ExecuteResponseDocument) responseObject;

| + | |

| − | ExecuteResponseAnalyser analyser = new ExecuteResponseAnalyser(

| + | |

| − | execute, response, processDescription);

| + | |

| − | return analyser;

| + | |

| − | }

| + | |

| − | throw new Exception("Exception: " + responseObject.toString());

| + | |

| − | }

| + | |

| − | </source>

| + | |
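The same Execute request can also be expressed in the WPS 1.0.0 KVP (HTTP GET) encoding, which can be handy for quick tests from a browser. The sketch below is an illustration with hypothetical class and method names, assuming that encoding and Java 10 or later: it passes every input by reference with the `@xlink:href=` form of the `DataInputs` parameter (as the Java example does for Polygon1 and Polygon2), percent-encodes each referenced URL because it contains `&` and `=`, and reproduces the mandatory-input rule (minOccurs > 0) that `executeProcess` enforces. Whether a particular server accepts KVP Execute requests should be checked against its documentation.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.StringJoiner;

public class ExecuteKvpSketch {

    // Names of mandatory inputs (minOccurs > 0) without a value: the cases in
    // which executeProcess throws "Property not set, but mandatory".
    public static List<String> missingMandatory(Map<String, Integer> minOccurs,
                                                Map<String, String> values) {
        List<String> missing = new ArrayList<>();
        for (Map.Entry<String, Integer> e : minOccurs.entrySet()) {
            if (e.getValue() > 0 && values.get(e.getKey()) == null) {
                missing.add(e.getKey());
            }
        }
        return missing;
    }

    // KVP Execute request in which every input is passed by reference.
    public static String executeUrl(String endpoint, String processId,
                                    Map<String, String> references) {
        StringJoiner dataInputs = new StringJoiner(";"); // inputs are ';'-separated
        for (Map.Entry<String, String> e : references.entrySet()) {
            // The referenced URL contains '&' and '=', so it must be percent-encoded.
            dataInputs.add(e.getKey() + "=@xlink:href="
                    + URLEncoder.encode(e.getValue(), StandardCharsets.UTF_8));
        }
        return endpoint + "?service=WPS&version=1.0.0&request=Execute"
                + "&identifier=" + URLEncoder.encode(processId, StandardCharsets.UTF_8)
                + "&DataInputs=" + dataInputs;
    }
}
```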

| − | | + | |

| − | | + | |

| − | == Main method ==

| + | |

| − | | + | |

| − | Finally, this section shows how to initialize the input data and call the methods described above.

| + | |

| − | | + | |

| − | <source lang=java>

| + | |

| − | public void testExecute() {

| + | |

| − | //Define the wps URL to query

| + | |

| − | String wpsURL = "http://10.11.30.97:8888/wps/WebProcessingService";

| + | |

| − | //Define the ID (name of the class) of the Process to query

| + | |

| − | String processID = "com.terradue.wps_hadoop.examples.intersection.IntersectionHadoopAlgorithm";

| + | |

| − | | + | |

| − | try {

| + | |

| − | //Example of DescribeProcess request

| + | |

| − | ProcessDescriptionType describeProcessDocument = requestDescribeProcess(

| + | |

| − | wpsURL, processID);

| + | |

| − | System.out.println(describeProcessDocument);

| + | |

| − | } catch (IOException e) {

| + | |

| − | // Report a failed DescribeProcess request

| + | |

| − | e.printStackTrace();

| + | |

| − | }

| + | |

| − | try {

| + | |

| − | //GetCapabilities request

| + | |

| − | CapabilitiesDocument capabilitiesDocument = requestGetCapabilities(wpsURL);

| + | |

| − | //DescribeProcess request

| + | |

| − | ProcessDescriptionType describeProcessDocument = requestDescribeProcess(

| + | |

| − | wpsURL, processID);

| + | |

| − | // define inputs

| + | |

| − | HashMap<String, Object> inputs = new HashMap<String, Object>();

| + | |

| − | // input parameters

| + | |

| − | inputs.put(

| + | |

| − | "Polygon1",

| + | |

| − | "http://www.fao.org/figis/geoserver/ows?service=WFS&version=1.0.0&request=GetFeature&typeName=fifao:FAO_MAJOR&CQL_FILTER=fifao:F_AREA=21"); // complex data passed by reference (WFS GetFeature URL)

| + | |

| − | inputs.put(

| + | |

| − | "Polygon2",

| + | |

| − | "http://geo.vliz.be/geoserver/Marbound/ows?service=WFS&version=1.0.0&request=GetFeature&typeName=Marbound:eez&CQL_FILTER%3DINTERSECTS(Marbound%3Athe_geom%2CPOLYGON((-82.410003662+34.830001831%2C-42+34.830001831%2C-42+78.166666031%2C-82.410003662+78.166666031%2C-82.410003662+34.830001831)))");

| + | |

| − | //Execute the request

| + | |

| − | ExecuteResponseAnalyser analyser = executeProcess(wpsURL, processID,

| + | |

| − | describeProcessDocument, inputs);

| + | |

| − |

| + | |

| − | System.out.println(analyser.getRawResponseDocument().toString());

| + | |

| − | // If you are interested in the data part of the response...

| + | |

| − | IData data = (IData) analyser.getComplexDataByIndex(0,GTVectorDataBinding.class);

| + | |

| − | | + | |

| − | if (data == null) {

| + | |

| − | data = new LiteralStringBinding (analyser.getLiteralDataByIndex(0).getStringValue());

| + | |

| − | System.out.println("result " + data.getPayload());

| + | |

| − | }

| + | |

| − | | + | |

| − | } catch (WPSClientException e) {

| + | |

| − | e.printStackTrace();

| + | |

| − | } catch (IOException e) {

| + | |

| − | e.printStackTrace();

| + | |

| − | } catch (Exception e) {

| + | |

| − | e.printStackTrace();

| + | |

| − | }

| + | |

| − | }

| + | |

| − | | + | |

| − | </source>

| + | |

| − | | + | |

| − | == Client through Command Line interface ==

| + | |

| − | | + | |

| − | A more generic way to use this client is to invoke it from the command line, through the tool also provided in the package. This is the usage description of the tool:

| + | |

| | | | |

| − | <source lang = text>

| + | gCube Spatial Data Processing's distinguishing features include: |

| − | Usage: wpsclient [options]

| + | |

| − | Options:

| + | |

| − | -I, --INPUT WPS input parameter(s).

| + | |

| − | Syntax: -Ikey=value

| + | |

| − | Default: {}

| + | |

| − | -a, --asynchronous Set the execution as asynchronous.

| + | |

| − | Default: false

| + | |

| − | -c, --capabilities Display WPS GetCapabilities.

| + | |

| − | Default: false

| + | |

| − | -d, --describe Display DescribeProcess for a given process ID.

| + | |

| − | Default: false

| + | |

| − | -e, --execute Execute the given process ID with the eventually given

| + | |

| − | parameter(s).

| + | |

| − | Default: false

| + | |

| − | -f, --file Path to a file where to write the WPS output.

| + | |

| − | -h, --help Display help informations.

| + | |

| − | Default: false

| + | |

| − | -p, --process The process ID to be used.

| + | |

| − | -t, --time Set the polling interval, in case of asynchronous

| + | |

| − | execution, in milliseconds.

| + | |

| − | Default: 2000

| + | |

| − | -u, --url The WPS url (e.g.

| + | |

| − | "http://localhost:8080/wps/WebProcessingService").

| + | |

| − | </source>

| + | |
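The `-a` and `-t` options imply a polling loop: with asynchronous execution, the client re-checks the process status every `-t` milliseconds (default 2000). The sketch below is a hypothetical illustration of such a loop, not the actual wpsclient code; the status check is abstracted as a `BooleanSupplier`, which in a real client would presumably re-fetch the status document referenced by the WPS 1.0.0 `statusLocation` attribute, and the `maxAttempts` limit is an added assumption.

```java
import java.util.function.BooleanSupplier;

public class AsyncPollingSketch {

    // Checks isFinished every intervalMillis (the role of -t) and returns the
    // number of checks made; gives up after maxAttempts unsuccessful checks.
    public static int pollUntilDone(BooleanSupplier isFinished, long intervalMillis, int maxAttempts) {
        for (int attempt = 1; ; attempt++) {
            if (isFinished.getAsBoolean()) {
                return attempt;
            }
            if (attempt >= maxAttempts) {
                throw new IllegalStateException("Process not finished after " + attempt + " checks");
            }
            try {
                Thread.sleep(intervalMillis); // wait before the next status check
            } catch (InterruptedException e) {
                Thread.currentThread().interrupt(); // preserve the interrupt status
                throw new IllegalStateException("Polling interrupted", e);
            }
        }
    }
}
```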

| | | | |

| | + | ; WPS-based access to an open and extensible set of processes |

| | + | : all the processes hosted by the system are exposed via a RESTful protocol that enables clients to obtain the list of available processes (GetCapabilities), get the specification of every process (DescribeProcess), and execute a selected process (Execute); |

| | | | |

| − | Here is an example of how to use this binary:

| + | ; relying on a Hybrid and Distributed Computing Infrastructure; |

| | + | : every process can be designed to be executed on many diverse 'computing nodes' (e.g. R engines, Java); |

| | | | |

| − | <source lang = text>

| + | ; easy integration of user-defined processes; |

| − | bin/wpsclient -e -u http://localhost:8080/wps/WebProcessingService \

| + | : the system enables users to easily add their own algorithms to the set of those offered by the system, e.g. by [[Statistical Algorithms Importer]]; |

| − | -p com.terradue.wps_hadoop.examples.intersection.IntersectionAlgorithm \

| + | |

| − | "-IPolygon1=http://www.fao.org/figis/geoserver/ows?service=WFS&version=1.0.0&request=GetFeature&typeName=fifao:FAO_MAJOR&CQL_FILTER=fifao:F_AREA=21" \

| + | |

| − | "-IPolygon2=http://geo.vliz.be/geoserver/Marbound/ows?service=WFS&version=1.0.0&request=GetFeature&typeName=Marbound:eez&CQL_FILTER%3DINTERSECTS(Marbound%3Athe_geom%2CPOLYGON((-82.410003662+34.830001831%2C-42+34.830001831%2C-42+78.166666031%2C-82.410003662+78.166666031%2C-82.410003662+34.830001831)))"

| + | |

| | | | |

| − | </source>

| + | ; rich array of ready-to-use processes; |

| | + | : the system is equipped with a [[Statistical Manager Algorithms | large set of ready to use algorithms]]; |

| | | | |

| − | For an array input (whose values in WPS must share the same identifier), use ',' to separate the input values.

| + | ; open science support |

| | + | : the system automatically provides for process repeatability and provenance by recording a comprehensive research object on the [[Workspace]]; |

| | | | |

| − | Example:

| + | == Subsystems == |

| | | | |

| − | -IParam=param1,param2

| + | ;[[Statistical Manager|DataMiner / Statistical Manager]] |

| | + | : ... |
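The `-I` syntax, including the comma convention for array inputs described above, can be illustrated with a small parser. This is a hypothetical sketch, not the parser shipped with wpsclient: it only handles the short `-Ikey=value` form (not `--INPUT`), separates the key from the value at the first `=` because values are often URLs containing further `=` signs, and splits the value on every literal `,`.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

public class InputOptionSketch {

    // Collects "-Ikey=value" arguments into key -> list of values, in the
    // order the keys first appear; other arguments are ignored.
    public static Map<String, List<String>> parseInputs(String[] args) {
        Map<String, List<String>> inputs = new LinkedHashMap<>();
        for (String arg : args) {
            if (!arg.startsWith("-I")) {
                continue;
            }
            String body = arg.substring(2);
            int eq = body.indexOf('='); // first '=' only: URLs contain more
            if (eq <= 0) {
                throw new IllegalArgumentException("Expected -Ikey=value: " + arg);
            }
            String key = body.substring(0, eq);
            List<String> values = inputs.computeIfAbsent(key, k -> new ArrayList<>());
            for (String v : body.substring(eq + 1).split(",")) {
                values.add(v); // one entry per array element
            }
        }
        return inputs;
    }
}
```

One consequence of this convention is that a value containing a literal comma would be split in two; the Polygon2 URL in the example above is unaffected because its commas are percent-encoded as `%2C`.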

| | | | |

| − | = Supported processes =

| + | ;[[Ecological Modeling]] |

| | + | : ... |

| | | | |

| − | == Simple R example ==

| + | ;[[Signal Processing]] |

| | + | : ... |

| | | | |

| − | IRD has provided a simple R example to run under WPS-Hadoop. It is described in [[Simple_R_example]].

| + | ; [[Geospatial Data Mining]] |

| | + | : ... |

gCube Spatial Data Processing offers a rich array of data analytics methods via OGC Web Processing Service (WPS).

Geospatial Data Processing takes advantage of the OGC Web Processing Service (WPS) as its web interface.

It is implemented by relying on the gCube platform for data analytics.