The Tree Manager Library

The Tree Manager Library is a client library for the Tree Manager services. It can be used to read from and write into data sources that are accessible via those services.

The library is available in our Maven repositories with the following coordinates:

    <groupId>org.gcube.data.access</groupId>
    <artifactId>tree-manager-library</artifactId>
    <version>...</version>

Source code and documentation are also available in the repositories as secondary artefacts with classifiers sources and javadoc, respectively:

    <groupId>org.gcube.data.access</groupId>
    <artifactId>tree-manager-library</artifactId>
    <classifier>sources</classifier>
    <version>...</version>

    <groupId>org.gcube.data.access</groupId>
    <artifactId>tree-manager-library</artifactId>
    <classifier>javadoc</classifier>
    <version>...</version>

Concepts

As clients of the tree-manager-library we need to understand:

- what the Tree Manager services are, and what they can do for us;

- what data they may take and return;

- how to stage them and where to find them;

- which tools to use to work with them, in addition to the tree-manager-library.

We build this understanding in the rest of this Section, so that we can conceptualise our work ahead of implementation. We then illustrate how the tree-manager-library helps us with the implementation.

About the Services

The Tree Manager services let us access heterogeneous data sources in a uniform manner. They present us with a unifying view of the data as edge-labelled trees, and translate that view to the native data model and access APIs of individual sources. These translations are implemented as service plugins, which are defined independently from the services, either for specific data sources or for whole classes of similar sources. If there is a plugin for a given source, then that source is "pluggable" in the Tree Manager services.

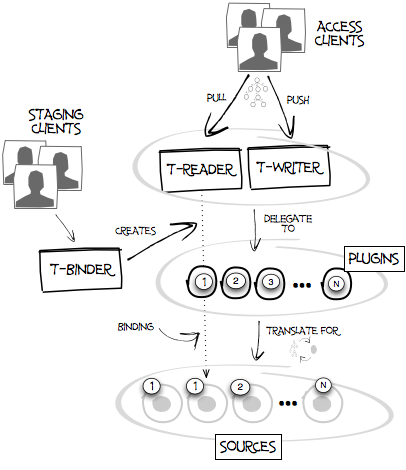
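To make the notion of an edge-labelled tree concrete, here is a minimal sketch in plain Java. It is only an illustration of the data model: the class `EdgeLabelledTreeSketch`, its `Node` type, and the sample record are hypothetical and are not the API of the Tree library, which we should use in real code.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Toy model of an edge-labelled tree. NOT the API of the Tree library.
final class EdgeLabelledTreeSketch {

    // A node is either a leaf carrying a value or an inner node with labelled edges.
    static final class Node {
        final String value; // non-null only for leaves
        final Map<String, List<Node>> edges = new LinkedHashMap<>();

        private Node(String value) { this.value = value; }

        static Node leaf(String value) { return new Node(value); }
        static Node inner() { return new Node(null); }

        // Adds a child under the given edge label; the same label may be used many times.
        Node add(String label, Node child) {
            edges.computeIfAbsent(label, k -> new ArrayList<>()).add(child);
            return this;
        }

        // Returns the children reached through edges with the given label.
        List<Node> children(String label) {
            return edges.getOrDefault(label, Collections.emptyList());
        }
    }

    // Builds a small tree for a made-up bibliographic record.
    static Node sampleRecord() {
        return Node.inner()
                .add("title", Node.leaf("On Trees"))
                .add("author", Node.inner().add("name", Node.leaf("A. Writer")))
                .add("author", Node.inner().add("name", Node.leaf("B. Reader")));
    }

    public static void main(String[] args) {
        // Navigate by edge labels: record -> author -> name.
        for (Node author : sampleRecord().children("author")) {
            System.out.println(author.children("name").get(0).value);
        }
    }
}
```

Whatever the native model of a source, a plugin presents its data in a shape of this kind: values sit in the leaves, and we reach them by following labelled edges.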

In more detail:

- the T-Reader and T-Writer services give us read and write APIs over pluggable sources. We may be able to access any such source through either or both, depending on the source itself, the capabilities of the associated plugin, or the intended access policy.

- the T-Binder service lets us connect T-Reader and/or T-Writer services to pluggable sources. Thus the service stages the others, creating read and/or write views over the sources.

We can then interact with the Tree Manager services in either of two roles. When we act as staging clients, we invoke the T-Binder service to create read and write views over pluggable sources, ahead of access. When we act as access clients, we invoke the T-Reader and the T-Writer to consume such views, i.e. pull data from them and push data towards them. Of course, we can play both roles within the same piece of code.

In summary, services, plugins, clients, and sources come together as follows:

Tree Types

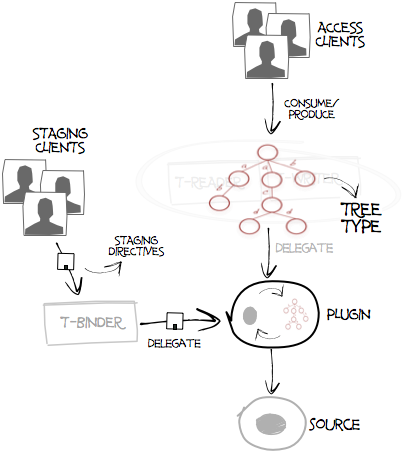

At first glance, clients and plugins have little to share. The previous picture makes it clear: clients sit in front of the services, plugins live behind them. On closer inspection, however, clients may have clear dependencies on plugins.

For access clients the dependency has to do with the "shape" of trees. T-Reader and T-Writer do not constrain what edges trees may have and what values may be in their leaves. In contrast, plugins typically translate the data model of data sources to a particular tree type. If we want to access those sources, we need to know those types. Often they are fully defined by the plugin, and in this case we will find them defined in their documentation. In other cases, plugins work with types defined by broader agreement. In these cases, we go wherever the agreement is documented to find the definition of the tree type.

For staging clients the dependency is even more explicit. When we ask the T-Binder to create read and write views over a source, we need to name what plugin is going to handle that source. This plugin may also allow us to configure how it is going to handle the source. These staging directives vary from plugin to plugin, and may be arbitrarily complex. The T-Binder will take them and dutifully pass them on to the target plugin. We learn about these directives in the documentation of the plugin. In most cases, the plugin will give us facilities to formulate directives, such as bean classes and facilities to serialise them on the wire. The facilities will ship in a shared library that we will then add to our dependencies. Again, the documentation of the plugin will provide the required coordinates.

Do we always depend on plugins? Not if we do not stage the services and can process trees of arbitrary types, in a word, if we are truly generic read clients. A good example here is a source explorer, which renders in some generic fashion the contents of any data source that can be accessed through the services. Another example is a generic indexer or transformer, which can be declaratively configured to work on any tree type. gCube in fact includes many clients that belong to this category. These clients use the Tree Manager services as the single data source they need to deal with.

In all the other cases, however, we need to know the plugins that are relevant to our task, as they define the data we will be accessing and/or how to make that data accessible in the first place. The following picture illustrates the point.

Suitable Endpoints

Abstractions aside, it's worth remembering that we do not interact with the services as such, which are unreachable software abstractions. Rather, we interact with addressable service endpoints, which come to life when we deploy and start the services. Deployment is largely back-end business, but as clients we should be aware of the following facts:

- T-Binder, T-Reader, and T-Writer are always deployed together, on one or more nodes.

- plugins are always deployed "near" the service endpoints, i.e. on the same nodes.

- plugins are deployed independently from each other. There is no requirement that a plugin be deployed wherever the services are.

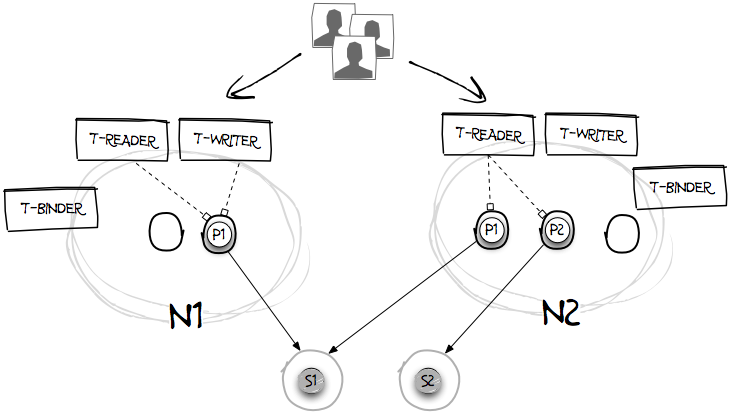

This makes for a wide range of deployment scenarios, where different scenarios address different requirements of access policy or quality of service. Consider for example the following scenario:

Here the services are deployed on two nodes, N1 and N2. There are different plugins on the two nodes, but one plugin, P1, is available on both. Using this plugin, the T-Binder at N1 has created read and write views over a source S1. Similarly, the T-Binder at N2 has created a read view over the same source. We may now read data from S1 using the T-Reader at either node. This redundancy helps avoid bottlenecks under load and service outages after partial failures.

Note, however, that we may only write data into S1 from the T-Writer at N1. Perhaps scalability and outages are less of a concern for write operations. At least they aren't yet; the T-Writer at N2 may be bound to S1 later, when and if those concerns arise.

We may also read from a different source S2, through the T-Reader at N2 and via a plugin P2 which is not available on N1, at least not yet. We may not write into S2, however, as there is no T-Writer around which lets us do that. Perhaps the source is truly read-only to remote clients. Perhaps it is the intended policy that we may not change the source through the Tree Manager services.

This scenario makes it clear that, at any given time:

- not all endpoints are equally suitable

  - if we want to write into S1 we have no business with what's on N2. Conversely, if we want to read from S2 we can ignore N1.

- not all endpoints are uniquely suitable

  - if we want to read from S1, either of the two nodes will do, and if we cannot work with one we can always try the other.

- the suitability of endpoints may vary over time

  - if a node holds no interest for us today for a given task, it may do so tomorrow. P2 may be deployed on N1, T-Readers and T-Writers for S2 may be staged on it, a T-Writer for S1 may be staged on N2, and so on.

So, service deployment may well be back-end business, but as clients we simply cannot ignore that the world out there is varied and in motion. Our first task is thus to locate suitable endpoints right when we need them, i.e. T-Binders with the right plugin, or T-Readers and T-Writers bound to the right sources.

We can find suitable endpoints by querying the Information Services. These services resolve queries against descriptions that the endpoints have previously published. T-Binders publish the list of plugins that are locally available on their nodes. T-Readers and T-Writers publish information about the data sources they are bound to.
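To see what resolving a query against published descriptions amounts to, we can replay the two-node scenario above in a small, self-contained sketch. Everything in it is hypothetical: `EndpointDiscoverySketch`, `Description`, and `find` are toy stand-ins, not the API of the Information Services or of the tree-manager-library, and the unnamed plugins that N1 holds besides P1 are omitted.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

// Toy model of endpoint discovery. All names here are hypothetical.
final class EndpointDiscoverySketch {

    enum Kind { BINDER, READER, WRITER }

    // What an endpoint publishes: plugin names for a T-Binder,
    // names of bound sources for a T-Reader or a T-Writer.
    static final class Description {
        final String node;
        final Kind kind;
        final List<String> names;

        Description(String node, Kind kind, String... names) {
            this.node = node;
            this.kind = kind;
            this.names = Arrays.asList(names);
        }
    }

    // The scenario from the text, expressed as published descriptions.
    static List<Description> scenario() {
        return Arrays.asList(
                new Description("N1", Kind.BINDER, "P1"),
                new Description("N1", Kind.READER, "S1"),
                new Description("N1", Kind.WRITER, "S1"),
                new Description("N2", Kind.BINDER, "P1", "P2"),
                new Description("N2", Kind.READER, "S1", "S2"),
                new Description("N2", Kind.WRITER)); // deployed, but bound to no source yet
    }

    // Returns the nodes whose endpoint of the given kind publishes the given name.
    static List<String> find(List<Description> published, Kind kind, String name) {
        List<String> nodes = new ArrayList<>();
        for (Description d : published) {
            if (d.kind == kind && d.names.contains(name)) {
                nodes.add(d.node);
            }
        }
        return nodes;
    }

    public static void main(String[] args) {
        List<Description> published = scenario();
        System.out.println("read S1:  " + find(published, Kind.READER, "S1")); // [N1, N2]
        System.out.println("write S1: " + find(published, Kind.WRITER, "S1")); // [N1]
        System.out.println("read S2:  " + find(published, Kind.READER, "S2")); // [N2]
        System.out.println("write S2: " + find(published, Kind.WRITER, "S2")); // []
    }
}
```

The results mirror the three observations above: some endpoints are unsuitable for a task, some tasks have more than one suitable endpoint, and the answers change as soon as the published descriptions do.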

The good news is that the tree-manager-library can do the heavy lifting for us. We do not need to know how to specify and submit actual queries, only declare the properties of suitable endpoints. The library takes over from there, acting as a client of the Information Services on our behalf.

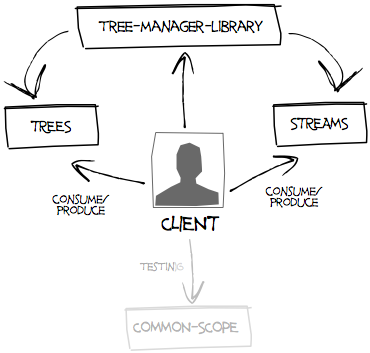

Additional Dependencies

We use the tree-manager-library to locate and invoke service endpoints, but we find in other libraries the tools to work with trees. We share these libraries with services, plugins, and in fact any other component which requires similar support. The tree-manager-library specifies them as dependencies and Maven brings them automatically onto our classpath:

- the Tree library: this library contains an object implementation of the tree model used by the services. We use it to:

  - construct, change, and inspect edge-labelled trees;

  - describe what trees or parts thereof we want to read from sources;

  - describe what trees or parts thereof we want to change in or remove from sources.

  In some cases, we may also use additional facilities, e.g. to obtain resolvable URIs for trees and tree nodes, or to generate synthetic trees for testing purposes.

- the Stream library: this library lets us work with data streams, which we encounter when we read or write many trees at once. We use it primarily for its facilities to produce and consume streams. In some cases, we may also use it to transform streams, handle their failures, publish them on the network, and more.

We need to be well familiar with the documentation of trees and streams before we can work with the tree-manager-library.

There is in fact a third library we may need to interact with, common-scope, which is also an indirect dependency of the tree-manager-library. If we have called gCube services before, then we know that the Tree Manager services are deployed to run in particular scopes, i.e. environments dedicated to specific groups of users. We can discover them and call them only when we work in the same scope, i.e. on behalf of the same groups of users. In production, it is likely that the scope in which we work is defined for us within the environment in which we run. In other environments, and surely during testing, we are responsible for setting the scope in which we work. Consult the documentation of common-scope to learn how to do that. In all cases, scope is a cross-cutting concern for our task, and at no point will we need to handle it in our client code or pass it explicitly to the APIs of the tree-manager-library.

Proxies

When we use the tree-manager-library, we interact with remote service endpoints through local proxies.

To obtain a proxy for a T-Reader endpoint we provide a query that identifies the source bound to the endpoint. In code:

    import static org.gcube.data.tml.proxies.TServiceFactory.*;
    ...
    StatefulQuery query = readSource().withName("...").build();
    TReader reader = reader().matching(query).build();

In words:

- we create proxy and query with static methods of the TServiceFactory class, a one-stop shop to obtain objects from the library. For added fluency, we start by importing the static methods of the factory.

- we invoke the method readSource() of the factory to get a query builder, and use the builder to characterise the T-Reader.

- we repeat the process to get a proxy. We invoke the reader() method to obtain a proxy builder, and use it to get a proxy configured with the query.

We assume here we know the name of the source. We could equally work with source identifiers:

    StatefulQuery query = readSource().withId("...").build();

In some cases, we already know the address of a suitable endpoint. Perhaps some other component has passed it on to us, or perhaps we are testing against specific endpoints. In these cases, we can pass the address to the proxy instead of a query:

    import static org.gcube.data.tml.proxies.TServiceFactory.*;
    ...
    W3CEndpointReference address = ...
    TReader reader = reader().at(address).build();

Note: javax.xml.ws.wsaddressing.W3CEndpointReference is the standard implementation of the WS-Addressing notion of endpoint reference, which we use to "address" endpoints of the Tree Manager services. If we want to create a W3CEndpointReference instance, we use a javax.xml.ws.wsaddressing.W3CEndpointReferenceBuilder.

We follow exactly the same pattern to obtain T-Writer proxies; we just change the static methods that kick off the constructions:

    import static org.gcube.data.tml.proxies.TServiceFactory.*;
    ...
    StatefulQuery query = writeSource().withName("...").build();
    TWriter writer = writer().matching(query).build();

Again, we can also configure the proxy for specific endpoints, if we already have their addresses:

    import static org.gcube.data.tml.proxies.TServiceFactory.*;
    ...
    W3CEndpointReference address = ...
    TWriter writer = writer().at(address).build();

Things do not change substantially for T-Binder proxies either, though this time we look for endpoints with the plugin that can handle some target source:

    import static org.gcube.data.tml.proxies.TServiceFactory.*;
    ...
    StatefulQuery query = plugin("...");
    TBinder binder = binder().matching(query).build();

We can also use known endpoints, though this time we can model them directly as URIs, URLs, or even (hostname,port) pairs, e.g.:

    import static org.gcube.data.tml.proxies.TServiceFactory.*;
    ...
    String hostname = ...
    int port = ...
    TBinder binder = binder().at(hostname,port).build();

Note: the reason for different address models for T-Readers/T-Writers and T-Binders lies in the technical nature of the services. A W3CEndpointReference to T-Readers and T-Writers encapsulates the identifier of the source that the endpoint should access on our behalf, as each endpoint may be connected to many. No such qualification is required for T-Binder endpoints. We can happily ignore this technicality as we go about accessing the services.

Mechanics aside, the thing to notice is that proxies do not submit our queries straight away. Proxy creation is a lightweight, purely local operation. When we first call one of their methods, the queries are translated into the lower-level form expected by the Information Services and sent away. As the results arrive, the proxies take the first endpoint found and translate the method invocation into a remote invocation for that endpoint. If this goes well, they cache the address of the endpoint and use it straight away at the next method invocation, which need not be of the same method.

If things go wrong, however, the proxies consider the nature of the problem. If it seems confined to the endpoint, they try to interact with the second endpoint they found. If there are no more endpoints to try out, or as soon as the problem is such as to discourage further tries, the proxies clear their cache and pass the problem on to us. If we somehow recover from it and continue to invoke their methods, the proxies re-submit the query and the process repeats.

Overall, proxies "stick" to endpoints as much as they can, minimising the costs of discovery, and try to exploit endpoint replication for increased resilience. Effectively, they behave like good clients on our behalf.
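The behaviour just described can be sketched in a few lines of plain Java. This is an illustration under stated assumptions, not the library's implementation: `StickyProxySketch`, `EndpointFailure`, and the `discoveries` counter are hypothetical names, discovery and remote calls are simulated with functions, and the sketch assumes that a failing cached endpoint is treated like the last candidate (the cache is cleared and the failure is passed on). It is also single-threaded and keeps a private cache, unlike the real proxies.

```java
import java.util.Arrays;
import java.util.Collections;
import java.util.HashSet;
import java.util.List;
import java.util.Set;
import java.util.function.Function;
import java.util.function.Supplier;

// Toy proxy: lazy discovery, sticky caching, failover. NOT the library's code.
final class StickyProxySketch {

    // A failure that seems confined to one endpoint, so another one is worth trying.
    static final class EndpointFailure extends RuntimeException {
        EndpointFailure(String message) { super(message); }
    }

    private final Supplier<List<String>> discovery;     // stands in for a query to the Information Services
    private final Function<String, String> remoteCall;  // stands in for a remote invocation at an address
    private String cached;                               // address of the last endpoint that worked
    int discoveries = 0;                                 // how many times the query was submitted

    StickyProxySketch(Supplier<List<String>> discovery, Function<String, String> remoteCall) {
        this.discovery = discovery;   // creating the proxy submits nothing
        this.remoteCall = remoteCall;
    }

    String invoke() {
        List<String> candidates;
        if (cached != null) {
            candidates = Collections.singletonList(cached); // stick to what worked before
        } else {
            discoveries++;
            candidates = discovery.get();                   // query only when we must
        }
        RuntimeException last = new IllegalStateException("no suitable endpoint found");
        for (String address : candidates) {
            try {
                String result = remoteCall.apply(address);
                cached = address;                           // remember the endpoint that answered
                return result;
            } catch (EndpointFailure e) {
                last = e;                                   // confined to this endpoint: try the next
            } catch (RuntimeException e) {
                cached = null;                              // anything else discourages further tries
                throw e;
            }
        }
        cached = null;                                      // out of endpoints: forget and report
        throw last;
    }

    public static void main(String[] args) {
        Set<String> down = new HashSet<>(Collections.singleton("N1"));
        StickyProxySketch proxy = new StickyProxySketch(
                () -> Arrays.asList("N1", "N2"),
                address -> {
                    if (down.contains(address)) throw new EndpointFailure(address + " is down");
                    return "tree from " + address;
                });
        System.out.println(proxy.invoke());                        // N1 fails, N2 answers
        System.out.println(proxy.invoke());                        // served by the cached N2
        System.out.println("discoveries: " + proxy.discoveries);   // discoveries: 1
    }
}
```

The second invocation costs no discovery at all, which is the point of "sticking" to an endpoint.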

Finally, note that we can keep a proxy around for as long as we need. We can create one, invoke one of its methods and then discard it. Or we can use a single proxy for our entire lifetime, if this makes our code simpler. Proxies share address caches with each other, so that any endpoint that one proxy puts in the cache can be used by any other proxy which is configured with the same query. In other words, creating multiple proxy instances does not raise additional discovery costs. Since proxies are immutable, we can also use them safely from multiple threads. Overall, we can treat proxies like plain beans, without worrying about issues of lifetime or thread safety.

Reading from Sources

Once we have a read proxy we can invoke its methods to pull data from the target source. We can look up trees and tree nodes from their identifiers, one at a time or many at once. Or we can discover trees that have given properties. Let us see how.

Read a Tree

Here's how we look up a tree with a given identifier:

    import org.gcube.data.trees.patterns.Patterns.*;
    TReader reader = ...
    Tree tree = reader.get("..some identifier...");

If all goes well, we get back a Tree which we can handle with the facilities of the Tree library, e.g. to navigate it according to its tree type.

If things go wrong in an unrecoverable manner we get an unchecked exception: a DiscoveryException if the proxy cannot find a suitable endpoint to talk to, or a generic ServiceException if talking fails in an unexpected way. We are free to deal with the problem where it is most convenient for us, not necessarily at the point of invocation. Things may also go wrong in expected ways, e.g. when the identifier does not identify a tree after all. In this case we get a checked exception, UnknownTreeException, which the compiler forces us to catch or declare at the point of invocation.
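The split between checked and unchecked failures shapes our code roughly as follows. The sketch is self-contained, so the types in it are stand-ins: the nested `Reader` interface, the two exception classes, and the use of `String` in place of a Tree are assumptions made for illustration, and only the exception names are taken from the text above.

```java
import java.util.Optional;

// Toy illustration of the error model. The nested types are stand-ins, NOT the library's classes.
final class ReadErrorHandlingSketch {

    // Expected failure: checked, so callers must deal with it.
    static class UnknownTreeException extends Exception {
        UnknownTreeException(String id) { super("unknown tree: " + id); }
    }

    // Unrecoverable failure: unchecked, so callers may let it propagate.
    static class DiscoveryException extends RuntimeException {
        DiscoveryException(String message) { super(message); }
    }

    // Stand-in for a read proxy; a String stands in for a Tree.
    interface Reader {
        String get(String id) throws UnknownTreeException;
    }

    // The expected failure is handled right here, at the point of invocation.
    static Optional<String> lookup(Reader reader, String id) {
        try {
            return Optional.of(reader.get(id));
        } catch (UnknownTreeException e) {
            return Optional.empty(); // a missing tree is a normal outcome for this caller
        }
        // DiscoveryException is deliberately not caught: it travels up the call stack.
    }

    public static void main(String[] args) {
        Reader reader = id -> {
            if (id.equals("t1")) return "tree t1";
            throw new UnknownTreeException(id);
        };
        System.out.println(lookup(reader, "t1").isPresent());   // true
        System.out.println(lookup(reader, "nope").isPresent()); // false

        Reader unreachable = id -> { throw new DiscoveryException("no suitable endpoint"); };
        try {
            lookup(unreachable, "t1");
        } catch (DiscoveryException e) {
            // Handled where it is convenient, e.g. once for a whole batch of lookups.
            System.out.println("cannot read right now: " + e.getMessage());
        }
    }
}
```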

If we are only interested in parts of the tree, we can use tree patterns to describe them:

    TReader reader = ...
    Pattern pattern = ...
    Tree tree = reader.get("..some identifier...",pattern);

What does not match the pattern is pruned off, i.e. it does not travel on the wire. The tree pattern language is rather flexible; we should get familiar with its documentation and try to avoid pulling data we do not need to work with.
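As a rough intuition for pruning, consider the following toy sketch. It does not use the tree pattern language of the Tree library, which is considerably richer: here a "pattern" is simply a tree of the edge labels we want to keep, each label is assumed to occur at most once per node, and all names (`PatternPruningSketch`, `Node`, `prune`) are hypothetical.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Toy pruning of a tree against a pattern of wanted labels. NOT the Tree library's pattern language.
final class PatternPruningSketch {

    // Simplified tree: at most one child per edge label; leaves carry a value.
    static final class Node {
        final String value;
        final Map<String, Node> children = new LinkedHashMap<>();

        Node(String value) { this.value = value; }

        Node with(String label, Node child) {
            children.put(label, child);
            return this;
        }
    }

    // Keeps only the edges named by the pattern; a pattern node without children keeps the whole subtree.
    static Node prune(Node tree, Node pattern) {
        if (pattern.children.isEmpty()) {
            return tree;
        }
        Node pruned = new Node(tree.value);
        for (Map.Entry<String, Node> wanted : pattern.children.entrySet()) {
            Node child = tree.children.get(wanted.getKey());
            if (child != null) {
                pruned.children.put(wanted.getKey(), prune(child, wanted.getValue()));
            }
        }
        return pruned;
    }

    // A made-up record with more data than we need.
    static Node sampleTree() {
        return new Node(null)
                .with("title", new Node("On Trees"))
                .with("abstract", new Node("A very long text..."))
                .with("author", new Node(null)
                        .with("name", new Node("A. Writer"))
                        .with("email", new Node("writer@example.org")));
    }

    // We only want the title and the author's name.
    static Node samplePattern() {
        return new Node(null)
                .with("title", new Node(null))
                .with("author", new Node(null).with("name", new Node(null)));
    }

    public static void main(String[] args) {
        Node pruned = prune(sampleTree(), samplePattern());
        System.out.println(pruned.children.keySet());                        // [title, author]
        System.out.println(pruned.children.get("author").children.keySet()); // [name]
    }
}
```

In the real services the pruning happens before the tree is sent, so the abstract and the email in this example would never be transferred.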