The Tree Manager Framework

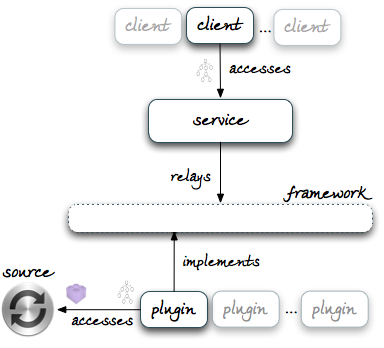

The Tree Manager service may be called to store or retrieve edge-labelled trees. Either way, the data is not necessarily stored locally to service endpoints, nor is it stored as trees. Instead, the data is most often held in remote data sources, is managed independently of the service, and is exposed by other access services in a variety of forms and through different APIs.

The service applies transformations from its own API and tree model to those of the underlying data sources. Transformations are implemented in plugins, i.e. libraries developed independently of the service that extend its capabilities at runtime. The service and its plugins interact through a protocol defined by a set of local interfaces which, collectively, define a framework for plugin development.

The framework is packaged and distributed as a stand-alone library, the tree-manager-framework, and serves as a dependency for both service and plugins.

The library and all its transitive dependencies are available in our Maven repositories. Plugins that are managed with Maven can resolve them with a single dependency declaration:

    <dependency>
      <groupId>org.gcube.data.access</groupId>
      <artifactId>tree-manager-framework</artifactId>
      <version>...</version>
      <scope>compile</scope>
    </dependency>

In what follows, we address plugin developers and describe the framework in detail, also illustrating design options and best practices for plugin development.


Overview

The service and its plugins interact to notify each other of the occurrence of certain events:

- the service observes events that relate to its clients, first and foremost their requests. These events translate into actions which plugins must perform on data sources;

- plugins may observe events that relate to data sources, first and foremost changes to their state. These events need to be reported to the service.

The framework defines the interfaces through which all the relevant events may be notified.

The most important events are client requests, which can be of one of the following types:

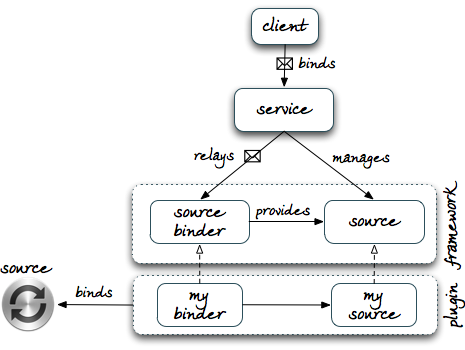

- bind request: a client asks the service to "connect" to one or more data sources. The client targets a specific plugin and includes in the request all the information that the plugin needs in order to establish the bindings. The service delivers the request to a SourceBinder provided by the plugin, and it expects back one Source instance for each bound source. The plugin configures the Sources with information extracted or derived from the request. Thereafter, the service manages the Sources on behalf of the plugin.

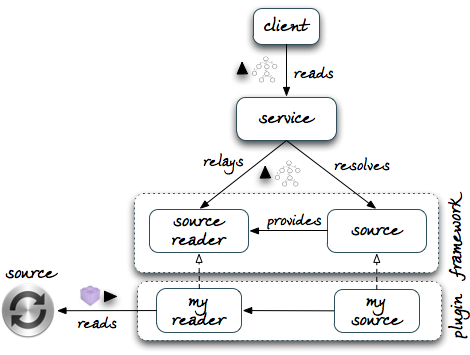

- read request: a client asks the service to retrieve trees from a data source that has been previously bound to some plugin. The client may not be aware of the plugin, having only discovered that the service can read data from the target source. The service identifies a corresponding Source from the request and then relays the request to a SourceReader associated with the Source, expecting trees back. It is the job of the reader to translate the request for the API of the data source, and to transform the results returned by the source into trees.

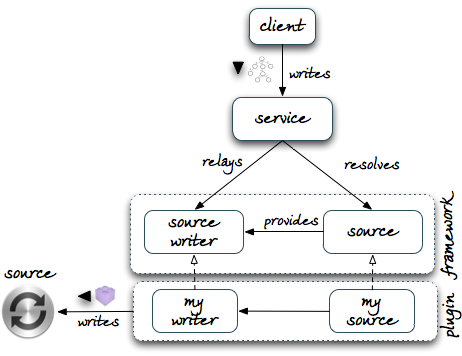

- write request: a client asks the service to add or update trees in a data source that has been previously bound to some plugin. The client knows about the plugin and what type of trees it expects. The service identifies a corresponding Source from the request and then relays the request to a SourceWriter associated with the Source. Again, it is the job of the writer to translate the request for the API of the target source, including transforming the input trees into the data structures that the source expects.

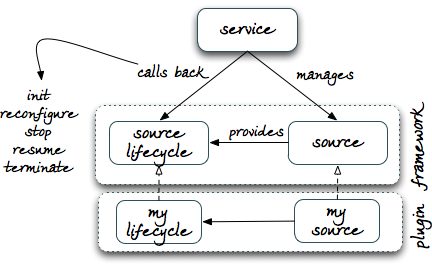

Besides relaying client requests, the service also notifies plugins of key events in the lifetime of their bindings. It does so by invoking event-specific callbacks of SourceLifecycles associated with Sources. As we shall see, lifetime events include the initialisation, reconfiguration, passivation, resumption, and termination of bindings.

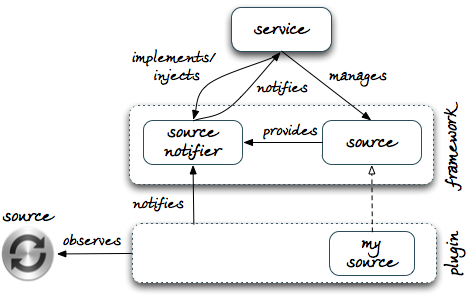

These are all the events that the service observes and passes on to plugins. Other events may be observed directly by plugins, including changes in the state of bound sources. These events are predefined SourceEvents, and plugins report them to the SourceNotifiers that the service itself associates with Sources. The service also registers its own SourceConsumers with the SourceNotifiers so as to receive event notifications.

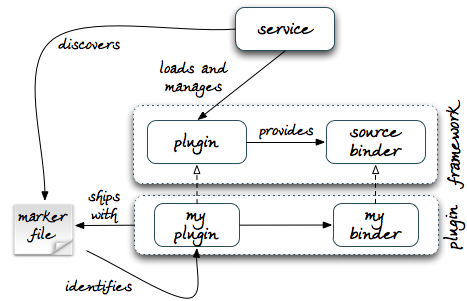

All the key components of a plugin are introduced to the service through an implementation of the Plugin interface. From it, the service obtains SourceBinders and, from the binders, bound Sources. From bound Sources, the service obtains SourceLifecycles, SourceReaders, and SourceWriters.

In addition, Plugin implementations expose descriptive information about plugins, which the service publishes in the infrastructure and uses in order to manage the plugins. For increased control over their own lifecycle, plugins may implement the PluginLifecycle interface, which extends Plugin with callbacks invoked by the service when it loads and unloads plugins.

To bootstrap the process of component discovery and find Plugin implementations, the service uses the standard ServiceLoader mechanism. Accordingly, plugins include a file META-INF/services/org.gcube.data.tmf.api.Plugin in their Jar distributions, where the file contains a single line with the qualified name of the Plugin or PluginLifecycle implementation which they provide.
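For illustration, here is a minimal sketch of ServiceLoader-based discovery using only the JDK. It declares a simplified stand-in Plugin interface (the real one is org.gcube.data.tmf.api.Plugin and has more methods), and it is not the service's actual code. The provider class name in the comments is hypothetical; note also that, for this stand-in, ServiceLoader would look for a file named after the stand-in's own binary name.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.ServiceLoader;

// Simplified stand-in for org.gcube.data.tmf.api.Plugin (the real interface has more methods).
interface Plugin {
    String name();
}

// On the plugin side, the Jar would contain a provider-configuration file named
//   META-INF/services/org.gcube.data.tmf.api.Plugin
// holding one line with the qualified name of the implementation, e.g.
//   org.example.tmf.ExamplePlugin      (hypothetical class name)
// The implementation needs a public no-argument constructor so that it can be instantiated.

final class PluginDiscovery {
    private PluginDiscovery() { }

    // Lists the names of all Plugin implementations declared on the loader's classpath.
    static List<String> discoverNames(ClassLoader loader) {
        List<String> names = new ArrayList<>();
        for (Plugin plugin : ServiceLoader.load(Plugin.class, loader)) {
            names.add(plugin.name());
        }
        return names;
    }
}
```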

Design Plan

The framework has been designed to support a wide range of plugins. The following questions characterise the design of a plugin and illustrate some key variations across designs:

- what sources can the plugin bind to?

  All plugins bind and access data sources, but their knowledge of the sources may vary. In particular:

  - a source-specific plugin targets a given data source, typically with a custom API and data model;

  - a source-generic plugin targets an open-ended class of data sources with the same API and data model, typically a standard.

- what type of trees does the plugin accept and/or return?

  All plugins transform data to trees and/or from trees, but the structure and intended semantics of those trees, i.e. their tree type, may vary substantially from plugin to plugin. In particular:

  - a type-specific plugin transforms a concrete data model into a corresponding tree type defined by the plugin or else through broader consensus. Effectively, the plugin uses the tree model of the service as a general-purpose carrier for the target data model. The tree-type may be s. Source-specific plugins are typically also type-specific.

  - a type-generic plugin transforms data to and from a model which is as general-purpose as the tree model. The tree type of the plugin may be entirely unconstrained, or it may be constrained at the point of binding to specific data sources. For example, a plugin may target generic XML or RDF repositories.

  - a multi-type plugin transforms a concrete data model into a number of tree types, based on directives included in bind requests. The plugin may embed the required transformations, or take a more dynamic approach and define a framework that can be extended by an unbounded number of transformers. The plugin may also use a single transformation but assign multiple types to its trees, from more generic types to more specific types.

- what requests does the plugin support?

  All plugins must accept at least one form of bind request, but a plugin may support many, so as to cater for different types of bindings or to support the reconfiguration of previous bindings. For example, most plugins:

  - bind a single source per request, though some may bind many sources with a single request;

  - support read requests but not write requests, typically because the bound sources are static or because they grant write access only to privileged clients. At least in principle, the converse may apply and a plugin may grant only write access to the sources. In general, a plugin may support one of the following access modes: read-only, write-only, or read-write.

- what functional and QoS limitations does the plugin have?

  Rarely will the API and tree model of the service prove functionally equivalent to those of bound sources. Even if a plugin restricts its support to a particular access mode, e.g. read-only, it may not be able to support all the requests associated with that mode, or to support them all efficiently. For example, the bound sources:

  - may offer no lookup API because they do not mandate or regulate the existence of identifiers in the data;

  - may offer no query API, or support (the equivalent of) only a subset of the filters that clients may specify in query requests;

  - may not allow the plugin to retrieve, add, or update many trees at once;

  - may not support updates at all, may not support partial updates, or may not support deletions.

  In some cases, the plugin may be able to compensate for differences, typically at the cost of reduced QoS. For example:

  - if the bound sources do not support lookups, the plugin may be configured in bind requests with queries that simulate them;

  - if the bound sources support only some filters, the plugin may apply additional ones on the data returned by the sources;

  - if the bound sources do not support partial updates, the plugin may first fetch the data and then update it locally.

  In other cases, for example when bound sources do not support deletions, the plugin has no other obvious option but to reject client requests.

Answering the questions above fixes some of the free variables in plugin design and helps to characterise it ahead of implementation. Collectively, the answers define a "profile" for the plugin and the presentation of this profile should have a central role in its documentation.

Implementation Plan

Moving from the design of a plugin to its implementation, our overview shows that the framework expects the following components:

- a Plugin implementation, which describes the plugin to the service;

- a SourceBinder implementation, which binds data sources from client requests;

- a Source implementation, which describes a bound source to the service;

- a SourceLifecycle implementation, which defines actions triggered at key events in the management of a bound source;

- a SourceReader implementation and/or a SourceWriter implementation, which provide read and write access over a bound source.

as well as:

- a classpath resource META-INF/services/org.gcube.data.tmf.api.Plugin, which allows the service to discover the plugin.

Many of the components above only give structure to the plugin, and most are straightforward to implement. As we shall see, the framework includes partial implementations of many interfaces that further simplify the development of the plugin, by reducing boilerplate code or providing overridable defaults.

With this support, the complexity of the plugin concentrates on the implementation of SourceReaders and/or SourceWriters, where it varies proportionally to the capabilities of data sources and the sophistication required in transforming their access APIs and data model.

In the rest of this guide we look at each interface defined by the framework in more detail, discussing the specifics of their methods and providing advice on how to implement them.

Plugin, PluginLifecycle, and Environment

The implementation of a plugin begins with the Plugin interface. The interface defines the following methods, which the service invokes to gather information about the plugin when it first loads it:

- String name(): returns the name of the plugin. The service publishes this information so that its clients may find the service endpoints where the plugin is available;

- String description(): returns a free-form description of the plugin. The service publishes this information so that it can be displayed to users through a range of interactive clients (e.g. monitoring tools);

- List<Property> properties(): returns triples of (property name, property value, property description). The service publishes this information so that it can be displayed to users through a range of interactive clients (e.g. monitoring tools). The implementation must return null or an empty list if it has no properties to publish;

- SourceBinder binder(): returns an implementation of the SourceBinder interface. The service invokes this method whenever it receives a bind request, as discussed below. Typically, SourceBinder implementations are stateless, and binder() is implemented so as to always return the same instance;

- List<String> requestSchemas(): returns the schemas of the bind requests that the plugin can process. The service publishes this information to show how clients can use the plugin to bind data sources. Some interactive clients within the system use the request schemas to generate forms for the interactive formulation of bind requests. The implementation may return null and document its expectations elsewhere. If it does not return null, it is free to use any schema language of choice, though XML Schema remains the recommended language. In the common case in which bind requests are bound to Java classes with JAXB, the plugin can generate schemas dynamically using JAXBContext.generateSchema();

- boolean isAnchored(): returns an indication of whether the plugin is anchored, i.e. stores data locally to service endpoints and does not access remote data sources. If the implementation returns true, the service inhibits its internal replication schemes for the plugin. If the plugin accesses remote data sources, the implementation must return false.
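The sketch below shows what a minimal Plugin implementation might look like for a plugin that accesses remote sources and publishes no request schema. Property, SourceBinder, and Plugin are simplified stand-ins declared locally so that the example compiles on its own (the real types may differ), and the name, description, and property values are hypothetical.

```java
import java.util.Collections;
import java.util.List;

// Simplified stand-ins for framework types; the real ones may declare more members.
interface SourceBinder { }

final class Property {
    final String name;
    final String value;
    final String description;

    Property(String name, String value, String description) {
        this.name = name;
        this.value = value;
        this.description = description;
    }
}

interface Plugin {
    String name();
    String description();
    List<Property> properties();
    SourceBinder binder();
    List<String> requestSchemas();
    boolean isAnchored();
}

// Hypothetical plugin for remote, read-only repositories.
class ExamplePlugin implements Plugin {
    // Binders are typically stateless, so a single shared instance suffices.
    private static final SourceBinder BINDER = new SourceBinder() { };

    public String name() { return "example-plugin"; }

    public String description() { return "Binds example repositories (illustrative only)."; }

    public List<Property> properties() {
        return Collections.singletonList(new Property("version", "1.0.0", "plugin version"));
    }

    public SourceBinder binder() { return BINDER; }

    // No published schema: the expected bind requests are documented elsewhere.
    public List<String> requestSchemas() { return null; }

    // The plugin accesses remote sources, so it must not be anchored.
    public boolean isAnchored() { return false; }
}
```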

As mentioned above, a plugin that needs more control over its own lifetime can implement PluginLifecycle, which extends Plugin with the following methods:

- void init(Environment): invoked by the service when the plugin is first loaded;

- void stop(Environment): invoked by the service when the plugin is unloaded.

For example, the plugin may implement init() to start up a DI container.

Environment is implemented by the service to encapsulate access to the environment in which the plugin is deployed or undeployed. At the time of writing, it serves solely as a sandbox over the location of the file system where the plugin is allowed to read and write. Accordingly, Environment exposes only the following method:

- File file(path): returns a File with a given path relative to the storage location of the plugin. The plugin may then use the File to create new files or read existing ones.
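To make the sandbox idea concrete, the following sketch shows how file(path) might resolve paths against the plugin's storage location. It is a hypothetical service-side implementation with a local stand-in Environment interface; rejecting paths that escape the storage location is an assumption about what a sandbox should do, not documented behaviour. A PluginLifecycle would typically receive such an Environment in init(Environment).

```java
import java.io.File;
import java.nio.file.Path;

// Stand-in for the framework's Environment; only file(path) is modelled.
interface Environment {
    File file(String path);
}

// Hypothetical implementation rooted at the plugin's storage location.
class SandboxEnvironment implements Environment {
    private final Path root;

    SandboxEnvironment(Path root) {
        this.root = root.toAbsolutePath().normalize();
    }

    @Override
    public File file(String path) {
        Path resolved = root.resolve(path).normalize();
        // Assumption: refuse paths that would leave the plugin's storage location.
        if (!resolved.startsWith(root)) {
            throw new IllegalArgumentException("path escapes plugin storage: " + path);
        }
        return resolved.toFile();
    }
}
```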

SourceBinder

When it needs to relay bind requests to a given plugin, the service accesses its Plugin implementation and asks it for a SourceBinder, as discussed above. It then invokes the single method of the binder:

List<Source> bind(Element)

The method returns one or more Sources, each of which represents a successful binding with a data source. The binding process is driven by the DOM element in input, which captures the request received by the service. It's up to the binder to inspect the request with some XML API, including data binding APIs such as JAXB, and act upon it. If the binder does not recognise the request, or else it finds it invalid, then the binder must throw an InvalidRequestException. The binder can throw a generic Exception for any other failure that occurs in the binding process, as the service will deal with it.
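A minimal sketch of a binder that inspects the request with the standard DOM API follows. It assumes a hypothetical request format (a bind root element listing endpoint children) and uses local stand-ins for Source and InvalidRequestException. In keeping with the advice below, bind() only identifies the sources and does not contact them.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

// Stand-ins for framework types; the real Source interface has many more methods.
class InvalidRequestException extends Exception {
    InvalidRequestException(String message) { super(message); }
}

class ExampleSource {
    final String endpoint;
    ExampleSource(String endpoint) { this.endpoint = endpoint; }
}

// Hypothetical binder for requests such as:
//   <bind><endpoint>http://host/a</endpoint><endpoint>http://host/b</endpoint></bind>
class ExampleBinder {
    List<ExampleSource> bind(Element request) throws InvalidRequestException {
        if (!"bind".equals(request.getTagName())) {
            throw new InvalidRequestException("unrecognised request: " + request.getTagName());
        }
        NodeList endpoints = request.getElementsByTagName("endpoint");
        if (endpoints.getLength() == 0) {
            throw new InvalidRequestException("the request names no endpoint");
        }
        List<ExampleSource> sources = new ArrayList<>();
        for (int i = 0; i < endpoints.getLength(); i++) {
            // Only identify the source here; contacting it belongs in SourceLifecycle.init().
            sources.add(new ExampleSource(endpoints.item(i).getTextContent().trim()));
        }
        return sources;
    }

    // Helper for trying the binder outside the service: parses XML text into a DOM element.
    static Element parse(String xml) throws Exception {
        return DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)))
                .getDocumentElement();
    }
}
```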

As to the binding process itself, note that:

- the process may vary significantly across plugins. For many, it may be as simple as extracting from the request the addresses of service endpoints that provide access to existing data sources. For others, it may require discovering such addresses through a registry. For yet others, it may be a complex process composed of a number of local and remote actions.

- in most cases, the binder will bind a single data source, hence return a single Source. In some cases, however, the binder may bind many data sources from a single request, hence return multiple Sources.

Finally, note that:

- the service discards the Sources returned by the binder that correspond to sources already bound through previous invocations of bind(). For this reason, the binder should avoid performing expensive work in bind(), e.g. engaging in network interactions. As we discuss below, the plugin should carry out this work in SourceLifecycle.init(), which the service invokes only for the Sources that it retains. The minimal amount of work that the binder should perform in bind() is really to identify data sources and build corresponding Sources.

- the service configures a number of objects on the Sources returned by the binder, including a SourceNotifier and an Environment. As these objects are not yet available in bind(), the binder must not access the file system or fire events. This is a corollary of the previous recommendation, i.e. avoid side effects in bind().

Source

Plugins implement the Source interface to provide the service with information about the data sources bound by the SourceBinder. The service publishes this information on behalf of the plugin, and clients may use it to discover sources available for read and/or write access.

Specifically, the interface defines the following methods:

- String id(): returns an identifier for the bound source;

- String name(): returns a descriptive name for the bound source;

- String description(): returns a brief description of the bound source;

- List<Property> properties(): returns properties for the bound source as triples (property name, property value, property description), all String-valued. These properties mirror the properties returned by Plugin.properties(), though they relate to a single source rather than the plugin. Implementations must return null or an empty list if they have no properties to publish;

- List<QName> types(): returns all the tree types produced and/or accepted by the plugin for the bound source;

- Calendar creationTime(): returns the time at which the bound source was created. Note that this is the creation time of the bound source, not of the Source instance. Implementations must return null if the plugin has no means to obtain this information;

- boolean isUser(): indicates whether the bound source is intended for general access. This is not a security option as such, and it does not imply any form of authorisation or query filtering. Rather, it is a marker that certain clients may use to exclude certain sources from their processes. Most plugins always bind user-level sources, hence systematically return true. If appropriate, the plugin can be designed to take hints from bind requests.

The methods above expose static information about bound sources, i.e. information that the plugin can set at binding time. Other properties are instead dynamic, in that the plugin may update them during the lifetime of the source binding. The service publishes these properties along with static properties, but it also allows clients to be notified of their changes. The plugin is responsible for observing and relaying these changes to the service, as we discuss below. The dynamic properties are:

- Calendar lastUpdate(): returns the time at which the bound source was last updated. Note that this is the last update time of the bound source, not of the Source instance. Implementations must return null if the plugin has no means to obtain this information;

- Long cardinality(): returns the number of elements in the bound source. Again, implementations must return null if the plugin has no means to obtain this information.

Besides descriptive information, the service obtains from Sources other plugin components which are logically associated with the bound source. The relevant methods are:

- SourceLifecycle lifecycle(): returns the SourceLifecycle associated with the bound source;

- SourceReader reader(): returns the SourceReader associated with the bound source. Implementations must return null if the plugin does not support read requests. Note that in this case the plugin must support write requests;

- SourceWriter writer(): returns the SourceWriter associated with the bound source. Implementations must return null if the plugin does not support write requests. Note that in this case the plugin must support read requests.
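Since reader() and writer() must not both return null, a plugin might check the resulting access mode in its unit tests with a helper along these lines. AccessMode, AccessModes, and the empty SourceReader/SourceWriter stand-ins are hypothetical and not part of the framework.

```java
// Empty stand-ins; the real interfaces declare the read and write methods described in this guide.
interface SourceReader { }

interface SourceWriter { }

enum AccessMode { READ_ONLY, WRITE_ONLY, READ_WRITE }

final class AccessModes {
    private AccessModes() { }

    // Derives the access mode of a bound source from the results of reader() and writer().
    static AccessMode of(SourceReader reader, SourceWriter writer) {
        if (reader == null && writer == null) {
            throw new IllegalStateException("a source must support reads, writes, or both");
        }
        if (reader == null) {
            return AccessMode.WRITE_ONLY;
        }
        if (writer == null) {
            return AccessMode.READ_ONLY;
        }
        return AccessMode.READ_WRITE;
    }
}
```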

Finally, note that:

- Sources may be passivated to disk by the service, as we discuss in more detail below. For this reason, Source is a Serializable interface, and implementations must honour this interface.

- The framework provides an AbstractSource class that partially implements the interface. Source implementations can and should extend it to avoid plenty of boilerplate code (state variables, accessor methods, default values, implementations of equals(), hashCode(), and toString(), shutdown hooks, correct serialisation, etc.). AbstractSource also simplifies the management of dynamic properties, in that it automatically fires a change event whenever the plugin changes the time of last update of Sources.
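The sketch below illustrates the mechanism attributed to AbstractSource: a serialisable source that fires SourceEvent.CHANGE when its time of last update changes, with the service-configured notifier kept out of the serialised state. It is not the framework's AbstractSource; SourceEvent and SourceNotifier are reduced local stand-ins, and failing fast when no notifier has been configured is an assumption of the sketch.

```java
import java.io.Serializable;
import java.util.Calendar;

// Reduced stand-ins for the framework's SourceEvent and SourceNotifier.
interface SourceEvent {
    SourceEvent CHANGE = new SourceEvent() {
        @Override
        public String toString() { return "CHANGE"; }
    };
}

interface SourceNotifier {
    void notify(SourceEvent event);
}

// Hypothetical base class, not the framework's AbstractSource.
abstract class ChangeFiringSource implements Serializable {
    private static final long serialVersionUID = 1L;

    private Calendar lastUpdate;                 // dynamic property, saved on passivation
    private transient SourceNotifier notifier;   // configured by the service after bind(), never serialised

    void setNotifier(SourceNotifier notifier) {
        this.notifier = notifier;
    }

    Calendar lastUpdate() {
        return lastUpdate;
    }

    void setLastUpdate(Calendar time) {
        if (notifier == null) {
            // Assumption: fail fast, as events cannot be fired before the service configures a notifier.
            throw new IllegalStateException("no notifier yet; update dynamic properties in SourceLifecycle.init()");
        }
        this.lastUpdate = time;
        notifier.notify(SourceEvent.CHANGE);
    }
}
```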

SourceLifecycle

Plugins implement the SourceLifecycle interface to be called back at key points in the lifetime of their source bindings.

The interface defines the following callbacks:

- void init(): the service calls this method on new bindings. As discussed above, a plugin can use this method to carry out expensive initialisation processes or produce side effects. If the plugin needs to engage in remote interactions or has some tasks to schedule, this is where it should do so. Failures thrown from this method fail bind requests.

- void reconfigure(Element): the service calls this method on existing bindings when clients attempt to rebind the same source. The plugin can use the bind request to reconfigure the existing binding. If the plugin does not support reconfiguration, the implementation must throw an InvalidRequestException. If reconfiguration is possible but fails, the implementation must throw a generic Exception.

- void stop(): the service calls this method when it is shutting down, or when it is passivating bindings to storage in order to release memory. If the plugin schedules some tasks, this is where it should stop them.

- void resume(): the service calls this method when it restarts after a previous shutdown, or when clients need a binding that was previously passivated by the service. If the plugin schedules some tasks, this is where it should restart them. If the binding cannot be resumed, the implementation must throw the failure so that the service can handle it.

- void terminate(): the service calls this method when clients no longer need access to the bound source. If the plugin has some resources to release, this is where it should do so, typically after invoking stop() to gracefully stop any scheduled tasks that may still be running.

Note that:

- plugins that need to implement only a subset of the callbacks above can extend LifecycleAdapter and override only the callbacks of interest.

- like Source, SourceLifecycle is a Serializable interface. Implementations must honour this interface.
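As a sketch of how the callbacks fit together, the following lifecycle schedules a periodic polling task in init() and resume(), cancels it in stop(), and reuses stop() from terminate(). SourceLifecycle and InvalidRequestException are local stand-ins whose signatures are assumptions, and the task only counts invocations where a real plugin would query its source. The executor is transient because, like Sources, lifecycles may be passivated.

```java
import java.io.Serializable;
import java.util.concurrent.Executors;
import java.util.concurrent.ScheduledExecutorService;
import java.util.concurrent.TimeUnit;
import java.util.concurrent.atomic.AtomicInteger;
import org.w3c.dom.Element;

// Stand-ins; the real SourceLifecycle and InvalidRequestException may declare different signatures.
class InvalidRequestException extends Exception {
    InvalidRequestException(String message) { super(message); }
}

interface SourceLifecycle extends Serializable {
    void init() throws Exception;
    void reconfigure(Element request) throws Exception;
    void stop();
    void resume() throws Exception;
    void terminate();
}

// Hypothetical lifecycle that polls the bound source while the binding is active.
class PollingLifecycle implements SourceLifecycle {
    private static final long serialVersionUID = 1L;

    final AtomicInteger polls = new AtomicInteger();         // serialisable state
    private transient ScheduledExecutorService scheduler;    // threads cannot be serialised

    @Override public void init() { start(); }                // side effects belong here, not in bind()

    @Override public void reconfigure(Element request) throws InvalidRequestException {
        throw new InvalidRequestException("this plugin does not support reconfiguration");
    }

    @Override public void stop() {                           // service shutdown or passivation
        if (scheduler != null) {
            scheduler.shutdownNow();
            scheduler = null;
        }
    }

    @Override public void resume() { start(); }              // service restart or reactivation

    @Override public void terminate() {
        stop();                                              // stop tasks before releasing other resources
    }

    boolean isRunning() { return scheduler != null; }

    private void start() {
        stop();                                              // never leak a previous scheduler
        scheduler = Executors.newSingleThreadScheduledExecutor();
        // A real plugin would query its source here and report changes to its SourceNotifier.
        scheduler.scheduleWithFixedDelay(polls::incrementAndGet, 0, 30, TimeUnit.SECONDS);
    }
}
```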

SourceEvent, SourceNotifier, and SourceConsumer

During its lifetime, a plugin may have the means to observe events that relate to data sources, typically through subscription or polling mechanisms exposed by the sources. In this case, the plugin may report these events to the SourceNotifier that the service configures on the Source at binding time.

The framework defines SourceEvent as a tagging interface for objects that represent events that relate to data sources and that only the plugin may observe. Two such events are pre-defined as constants of the interface:

- SourceEvent.CHANGE: this event occurs when the dynamic properties of a Source change, such as its cardinality or the time of its last update.

- SourceEvent.REMOVE: this event occurs when a bound source is no longer available. Note that this is different from the event that occurs when clients indicate that access to the source is no longer needed (cf. SourceLifecycle.terminate()).

If the plugin observes these events, it can report them to the service by invoking the following method of the SourceNotifier:

void notify(SourceEvent);

Note again that:

- when Sources extend AbstractSource, changing their time of last update automatically fires SourceEvent.CHANGE events. Unless there are other reasons to notify events to the service, the plugin may never have to invoke notify() explicitly.

- as already noted, the service configures SourceNotifiers on Sources only after these are returned by SourceBinder.bind(). Any attempt to notify events prior to that moment will fail. For this reason, if the plugin needs to change dynamic properties at binding time, it should do so in SourceLifecycle.init().

SourceNotifier has a second method that can be invoked to subscribe consumers for SourceEvent notifications:

void subscribe(SourceConsumer,SourceEvent...)

This method subscribes a SourceConsumer to one or more SourceEvents. Normally, plugins do not have to invoke it, as the service will subscribe its own SourceConsumers with the SourceNotifiers. In other words, the common flow of events is from the plugin to the service.

However, the plugin is free to make internal use of the available support for event subscription and notification. In particular, the plugin can define its own SourceEvents and implement and subscribe its own SourceConsumers. In this case, SourceConsumers must implement the single method:

void onEvent(SourceEvent...)

which is invoked by the SourceNotifier with one or more SourceEvents. Normally, the subscriber will receive single event notifications, but the first notification after subscription will carry the history of all the events previously notified by the SourceNotifier.
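The delivery contract described above, where a consumer's first notification also carries earlier events, can be sketched as follows. ReplayingNotifier is a hypothetical class with local stand-ins for SourceEvent and SourceConsumer, not the service's notifier; restricting the replayed history to the events a consumer subscribed to is an assumption of the sketch.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Local stand-ins for the framework's event types.
interface SourceEvent { }

interface SourceConsumer {
    void onEvent(SourceEvent... events);
}

// Hypothetical notifier: a consumer's first notification after subscribing also carries
// the earlier events it subscribed to; later notifications carry single events.
class ReplayingNotifier {
    private final List<SourceEvent> history = new ArrayList<>();
    private final Map<SourceConsumer, Set<SourceEvent>> interests = new LinkedHashMap<>();
    private final Set<SourceConsumer> awaitingHistory = new HashSet<>();

    synchronized void subscribe(SourceConsumer consumer, SourceEvent... events) {
        interests.put(consumer, new HashSet<>(Arrays.asList(events)));
        awaitingHistory.add(consumer);
    }

    synchronized void notify(SourceEvent event) {
        for (Map.Entry<SourceConsumer, Set<SourceEvent>> entry : interests.entrySet()) {
            Set<SourceEvent> wanted = entry.getValue();
            if (!wanted.contains(event)) {
                continue;
            }
            List<SourceEvent> batch = new ArrayList<>();
            if (awaitingHistory.remove(entry.getKey())) {
                // First delivery: replay the relevant history before the new event.
                for (SourceEvent past : history) {
                    if (wanted.contains(past)) {
                        batch.add(past);
                    }
                }
            }
            batch.add(event);
            entry.getKey().onEvent(batch.toArray(new SourceEvent[0]));
        }
        history.add(event);
    }
}
```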

Auxiliary APIs

All the previous interfaces provide a skeleton around the core functionality of the plugin, which is to transform the API and the tree model of the service to those of the bound sources. The task requires familiarity with three APIs defined outside the framework:

- the tree API: the plugin uses this API to construct and deconstruct the edge-labelled trees that it accepts in write requests and/or returns in read requests. The API offers a hierarchy of classes that model whole trees (Tree) as well as individual nodes (Node), fluent APIs to construct object graphs based on these classes, and various APIs to traverse them;

- the pattern API: the plugin uses this API to construct and deconstruct tree patterns, i.e. sets of constraints that clients use in read requests to characterise the trees of interest, both in terms of topology and leaf values. The API offers a hierarchy of patterns (Pattern), methods to fluently construct patterns, as well as methods to match trees against patterns (i.e. verify that the trees satisfy the constraints, cf. Pattern.match(Node)) and to prune trees with patterns (i.e. retain only the nodes that have been explicitly constrained, cf. Pattern.prune(Node)). The plugin must ensure that it returns trees that have been pruned with the patterns provided by clients;

- the stream API: the plugin uses this API to model the data streams that flow in and out of the plugin. Streams are used in read requests and write requests that take or return many data items at once, such as trees, tree identifiers, or even paths to tree nodes. The streams API models such data streams as instances of the Stream interface, a generalisation of the standard Java Iterator interface which reflects the remote nature of the data. Not all plugins need to implement stream-based operations from scratch, as the framework offers synthetic implementations for them. These implementations, however, are derived from those that work with one data item at a time, hence have very poor performance when the data source is remote. Plugins should use them only when native implementations are not an option because the bound sources do not offer any stream-based or paged bulk operation. When the sources do offer such operations, the plugin should feed their transformed outputs into Streams. In a few cases, the plugin may need advanced facilities provided by the streams API, such as fluent idioms to convert, pre-process or post-process data streams.
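To make matching and pruning concrete, the following toy model shows the two operations over a simplified edge-labelled tree. ToyNode and ToyPattern are hypothetical classes written for illustration only; they are not the tree and pattern APIs, whose classes and factory methods differ.

```java
import java.util.LinkedHashMap;
import java.util.Map;
import java.util.function.Predicate;

// Toy edge-labelled tree: a leaf carries a value; an inner node reaches its children
// through labelled edges (one child per label, a simplification of the real model).
final class ToyNode {
    final String value;                                     // non-null only for leaves
    final Map<String, ToyNode> children = new LinkedHashMap<>();

    private ToyNode(String value) { this.value = value; }

    static ToyNode leaf(String value) { return new ToyNode(value); }
    static ToyNode node() { return new ToyNode(null); }

    ToyNode with(String label, ToyNode child) {
        children.put(label, child);
        return this;
    }
}

// Toy pattern: constrains either the value of a leaf or some labelled children of a node.
final class ToyPattern {
    private final Predicate<String> leafTest;               // non-null for leaf constraints
    private final Map<String, ToyPattern> edges = new LinkedHashMap<>();

    private ToyPattern(Predicate<String> leafTest) { this.leafTest = leafTest; }

    static ToyPattern leaf(Predicate<String> test) { return new ToyPattern(test); }
    static ToyPattern node() { return new ToyPattern(null); }

    ToyPattern with(String label, ToyPattern child) {
        edges.put(label, child);
        return this;
    }

    // True if the node satisfies every constraint of the pattern.
    boolean match(ToyNode node) {
        if (leafTest != null) {
            return node.value != null && leafTest.test(node.value);
        }
        for (Map.Entry<String, ToyPattern> edge : edges.entrySet()) {
            ToyNode child = node.children.get(edge.getKey());
            if (child == null || !edge.getValue().match(child)) {
                return false;
            }
        }
        return true;
    }

    // Copy of a matching node that retains only the explicitly constrained nodes.
    ToyNode prune(ToyNode node) {
        if (leafTest != null) {
            return ToyNode.leaf(node.value);
        }
        ToyNode pruned = ToyNode.node();
        for (Map.Entry<String, ToyPattern> edge : edges.entrySet()) {
            pruned.with(edge.getKey(), edge.getValue().prune(node.children.get(edge.getKey())));
        }
        return pruned;
    }
}
```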

Documentation on working with trees, tree patterns, and streams is available elsewhere, and we do not replicate it here. The tree API and the pattern API are packaged together in a trees library available in our Maven repositories. The streams API is packaged in a streams library also available in the same repositories. If the plugin also uses Maven for build purposes, these libraries are already available in its classpath as indirect dependencies of the framework.

SourceReader

A plugin implements the SourceReader interface to process read requests. The interface defines the following methods for "tree lookup":

- Tree get(String,Pattern): returns a tree with a given identifier and pruned with a given Pattern. The reader must throw an UnknownTreeException if the identifier does not identify a tree in the source, and an InvalidTreeException if a tree can be identified but does not match the pattern. The reader should report any other failure to the service, i.e. rethrow it.

- Stream<Tree> get(Stream<String>,Pattern): returns trees with given identifiers and pruned with a given Pattern. The reader must throw a generic Exception if it cannot produce the stream at all. Otherwise, it must handle lookup failures for individual trees as get(String,Pattern) does, inserting them into the stream.
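The sketch below shows one way to structure get(String,Pattern) around a single-item lookup on the bound source. Trees are modelled as flat label/value maps, and TreePattern, RecordClient, LookupReader, and the two exception classes are local stand-ins for the framework and source APIs, not their real definitions.

```java
import java.io.IOException;
import java.util.HashMap;
import java.util.Map;

// Stand-ins: trees are flat label -> value maps, and TreePattern loosely mirrors the
// match/prune pair of the pattern API. None of these types belong to the framework.
interface TreePattern {
    boolean match(Map<String, String> tree);
    Map<String, String> prune(Map<String, String> tree);
}

class UnknownTreeException extends Exception {
    UnknownTreeException(String id) { super("no tree with identifier " + id); }
}

class InvalidTreeException extends Exception {
    InvalidTreeException(String id) { super("tree " + id + " does not match the pattern"); }
}

// Hypothetical client for the bound source's lookup API.
interface RecordClient {
    Map<String, String> fetch(String id) throws IOException;   // null if there is no such record
}

class LookupReader {
    private final RecordClient client;

    LookupReader(RecordClient client) { this.client = client; }

    Map<String, String> get(String id, TreePattern pattern) throws Exception {
        Map<String, String> row = client.fetch(id);             // other failures propagate to the service
        if (row == null) {
            throw new UnknownTreeException(id);
        }
        Map<String, String> tree = toTree(id, row);
        if (!pattern.match(tree)) {
            throw new InvalidTreeException(id);
        }
        return pattern.prune(tree);                              // clients only ever see pruned trees
    }

    // Transformation from the source's data model to trees (trivial here).
    private static Map<String, String> toTree(String id, Map<String, String> row) {
        Map<String, String> tree = new HashMap<>(row);
        tree.put("id", id);
        return tree;
    }
}
```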

In addition, a SourceReader implements the following "query" method:

- Stream<Tree> get(Pattern): returns trees pruned with a given Pattern. Again, the reader must throw a generic Exception if it cannot produce the stream at all, though it should simply leave out of the stream any tree that does not match the pattern.

Finally, a SourceReader implements lookup methods for individual tree nodes:

- Node getNode(Path): returns a node from the Path of node identifiers that connect it to the root of a tree. The reader must throw an UnknownPathException if the path does not identify a tree node.

- Stream<Node> getNodes(Stream<Path>): returns nodes from the Paths of node identifiers that connect them to the roots of trees. The reader must throw a generic Exception if it cannot produce the stream at all. Otherwise, it must handle lookup failures for individual paths as getNode(Path) does, inserting them into the stream.
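For getNode(Path), a reader that can materialise whole trees might simply walk the path downwards from the root. The sketch assumes that the first identifier in the path identifies the tree (its root) and models Path as a List<String>; IdNode, NodeLookup, and UnknownPathException are hypothetical stand-ins, not framework types.

```java
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Stand-in node: an identifier plus children indexed by their own identifiers.
final class IdNode {
    final String id;
    final Map<String, IdNode> children = new LinkedHashMap<>();

    IdNode(String id, IdNode... kids) {
        this.id = id;
        for (IdNode kid : kids) {
            children.put(kid.id, kid);
        }
    }
}

class UnknownPathException extends Exception {
    UnknownPathException(List<String> path) { super("no node at " + path); }
}

class NodeLookup {
    private final Map<String, IdNode> roots = new LinkedHashMap<>();   // trees by root identifier

    void add(IdNode root) { roots.put(root.id, root); }

    // Walks from the root named by the first identifier down through the remaining ones.
    IdNode getNode(List<String> path) throws UnknownPathException {
        if (path.isEmpty()) {
            throw new UnknownPathException(path);
        }
        IdNode current = roots.get(path.get(0));
        for (int i = 1; current != null && i < path.size(); i++) {
            current = current.children.get(path.get(i));
        }
        if (current == null) {
            throw new UnknownPathException(path);
        }
        return current;
    }
}
```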

Depending on the capabilities of the bound source, implementing some of the methods above may prove challenging or altogether impossible. For example, if the source offers only lookup capabilities, the reader may not be able to implement query methods. Note, however, that the reader is not forced to fully implement any of the methods above. In particular, it can:

- throw an UnsupportedOperationException for all requests to a given method, or

- throw an UnsupportedRequestException for certain requests to a given method.

When this is the case, the plugin should clearly report its limitations in its documentation.
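The two options can be contrasted in a small sketch of a reader over a source whose only API is a search by title. Queries are simplified to equality constraints and streams to Iterators; SearchOnlyReader and the checked UnsupportedRequestException stand-in are hypothetical.

```java
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.Map;

// Stand-in for the framework's UnsupportedRequestException, modelled as a checked exception.
class UnsupportedRequestException extends Exception {
    UnsupportedRequestException(String message) { super(message); }
}

// Hypothetical reader over a source whose only API is a search by title.
class SearchOnlyReader {

    Iterator<Map<String, String>> get(Map<String, String> query) throws UnsupportedRequestException {
        if (!query.containsKey("title")) {
            // Without a title the whole source would be scanned: reject this request only.
            throw new UnsupportedRequestException("queries must constrain 'title'");
        }
        return search(query.get("title"));
    }

    // The source offers nothing resembling node lookups: reject every request to the method.
    Iterator<Map<String, String>> getNodes(Iterator<List<String>> paths) {
        throw new UnsupportedOperationException("node lookups are not supported");
    }

    // Placeholder for a call to the source's search API.
    private Iterator<Map<String, String>> search(String title) {
        return Collections.singletonList(Collections.singletonMap("title", title)).iterator();
    }
}
```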

Similarly, the plugin is not forced to implement all methods from scratch. The framework defines a partial implementation of SourceReader, AbstractReader, which the plugin can extend to obtain default implementations of certain methods, including:

- a default implementation of

get(Stream<String>,Pattern); - a default implementation of

getNode(Path); - a default implementation of

getNodes(Stream<Path).

These defaults are derived from the implementation of get(String,Pattern) provided by the plugin. Note, however, that their performance is likely to be poor over remote sources, as get(String,Pattern) moves data one item at a time. For getNode(Path) the problem is marginal, but for stream-based methods the impact on performance is likely to be substantial. The default implementations should thus be considered surrogates for real implementations, and the plugin should override them whenever a more direct mapping onto the capabilities of the bound source exists.
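The sketch below shows how such defaults can be derived from a single lookup method. SketchAbstractReader is a hypothetical simplification, not the framework's AbstractReader. Trees are strings, patterns are omitted, and java.util.Iterator stands in for Stream. A failure for one identifier is modelled, as an assumption, by having next() throw it for that element while the stream remains usable. Each element costs one call to get(String), which is the source of the poor performance over remote sources.

```java
import java.util.Iterator;
import java.util.List;
import java.util.Map;
import java.util.NoSuchElementException;

// Hypothetical stand-in for UnknownTreeException (unchecked here for brevity).
class UnknownTreeException extends RuntimeException {
    UnknownTreeException(String id) { super("unknown tree " + id); }
}

// Defaults derived from one plugin-provided lookup (cf. get(String,Pattern)).
abstract class SketchAbstractReader {
    // The only method a subclass must implement.
    abstract String get(String id);

    // Default bulk lookup: one call to get(String) per identifier.
    // A failed lookup is thrown from next() for that element only, and the
    // stream stays usable for the remaining identifiers.
    Iterator<String> get(Iterator<String> ids) {
        return new Iterator<>() {
            @Override
            public boolean hasNext() { return ids.hasNext(); }

            @Override
            public String next() {
                if (!ids.hasNext()) {
                    throw new NoSuchElementException();
                }
                return get(ids.next()); // one round-trip per tree
            }
        };
    }
}

// A concrete reader over an in-memory map, standing in for a bound source.
final class MapReader extends SketchAbstractReader {
    private final Map<String, String> source;

    MapReader(Map<String, String> source) { this.source = source; }

    @Override
    String get(String id) {
        String tree = source.get(id);
        if (tree == null) {
            throw new UnknownTreeException(id);
        }
        return tree;
    }
}
```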

When the reader does implement the methods above natively, the following issues arise:

- applying patterns:

  - in some cases, the reader may be able to express patterns in terms of the querying/filtering capabilities of the bound source. Often, it may be able to do so only partially, i.e. by extracting from the patterns the subset of constraints that it can express. In this case, the reader would push this subset towards the source, transform the results into trees, and then prune the trees with the original pattern, so as to post-filter the data along the constraints that it could not express.

  - if the bound source offers no querying/filtering capabilities, the reader must apply the pattern only locally, on the unfiltered results returned by the source. Note that the performance of get(Pattern) in this scenario can be severely compromised if the bound source is remote, as the reader would effectively transfer the entire contents of the source over the network at each invocation of the method. The reader may then opt for not implementing this method at all, or for rejecting requests that use particularly 'inclusive' patterns (e.g. Patterns.tree() or Patterns.any(), which do not constrain trees at all).

- transforming data into trees: the reader is free to follow whatever approach and technologies seem most appropriate, as the framework neither constrains nor supports any particular choice. It is good design practice to move the transformations outside the reader, particularly when the plugin supports multiple tree types, but also to simplify unit testing. The transformations may even be moved outside the plugin altogether, into a separate library that can be reused in different contexts. For example, such a library may be reused in another plugin that binds sources through a different protocol but under the same data model. If the transformations work both ways (e.g. because the plugin supports write requests), they may also be reused on the client side, to convert tree types back to the original data models.

- streaming data: a reader that implements get(Pattern), or that overrides the surrogate implementations of stream-based methods inherited from AbstractReader, must implement the Stream interface over the bulk transfer mechanisms offered by the bound source. In the common case in which the source uses a paging mechanism, the plugin can provide a 'look-ahead' Stream implementation that fetches a new page of data in hasNext() whenever next() has fully traversed the previously fetched page. Other transfer mechanisms may require more custom solutions.
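The following sketch shows a look-ahead paging stream. PagedSource is a hypothetical paging API that returns page n (0-based), with an empty page signalling the end. java.util.Iterator stands in for the framework's Stream interface, and its hasNext() and next() play the roles described above.

```java
import java.util.Collections;
import java.util.Iterator;
import java.util.List;
import java.util.NoSuchElementException;

// Hypothetical paging API of a bound source: returns page n (0-based),
// or an empty list once all data has been returned.
interface PagedSource<T> {
    List<T> fetchPage(int n);
}

// Look-ahead stream: hasNext() fetches a new page only when next() has
// fully traversed the page fetched previously.
final class PagingStream<T> implements Iterator<T> {
    private final PagedSource<T> source;
    private Iterator<T> page = Collections.emptyIterator();
    private int nextPage = 0;
    private boolean exhausted = false;

    PagingStream(PagedSource<T> source) { this.source = source; }

    @Override
    public boolean hasNext() {
        while (!page.hasNext() && !exhausted) {
            List<T> fetched = source.fetchPage(nextPage++);
            if (fetched.isEmpty()) {
                exhausted = true; // no more data in the source
            } else {
                page = fetched.iterator();
            }
        }
        return page.hasNext();
    }

    @Override
    public T next() {
        if (!hasNext()) {
            throw new NoSuchElementException();
        }
        return page.next();
    }
}
```

Because pages are fetched lazily in hasNext(), a page is requested only when the client asks for more data. A client that stops early never pays for the remaining pages.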

In summary, the plugin can deliver a simple implementation of SourceReader by:

- implementing get(String,Pattern) and get(Pattern), and

- inheriting surrogate implementations of all the other methods from AbstractReader.

Alternatively, the plugin may be able to deliver a more performant implementation of SourceReader by:

- inheriting from AbstractReader, and

- overriding one or more surrogate implementations with native ones.

Of course, the plugin may be able to deliver native implementations of some methods and not others.

SourceWriter

A plugin implements the SourceWriter interface to process write requests. Writers are rarely implemented by plugins that bind to remote sources, which typically offer read-only interfaces. Writers may be implemented instead by plugins that bind to local sources, so as to turn the service endpoint into a storage service for structured data. The Tree Repository is a primary example of this type of plugin.

The SourceWriter interface defines the following methods to insert new data in the bound source:

- Tree add(Tree): inserts a tree in the bound source and returns the tree as it has been inserted in the source. With this signature, the method supports data sources with different insertion models:

  - If the data is annotated at the point of insertion with identifiers, timestamps, versions and similar metadata, the writer can return these annotations to the client.

  - If instead the data is unmodified at the point of insertion, the writer can return null to the client, so as to simulate a true "fire-and-forget" model and avoid unnecessary data transfers.

  - Fire-and-forget insertions may also be desirable under the first model, when clients have no use for the annotations added to the data at the point of insertion. The plugin may support these clients by allowing them to specify directives in the input tree itself (e.g. special attributes on root nodes). The writer would recognise the directives and return null to the clients.

  - Regardless of the insertion model of the bound source, input trees may be invalid for insertion. For example, they may lack required metadata, carry metadata they should not have (e.g. identifiers that should be assigned by the bound source), or be otherwise malformed with respect to insertion requirements. When this happens, the writer must throw an InvalidTreeException.

- Stream<Tree> add(Stream<Tree>): inserts trees in the bound source and returns the outcomes in the same order, where the outcomes are those that add(Tree) would return for each input tree. In particular, the writer must model failures for individual trees as add(Tree) would, inserting them into the stream. It must instead throw a generic Exception if it cannot produce the stream at all.
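The following sketch shows the insertion contract of add(Tree) for a hypothetical source that assigns identifiers at insertion time. Trees are modelled as the attribute maps of their root nodes. The directive:noReply attribute, the required title attribute and the unchecked InvalidTreeException are assumptions made for illustration, not conventions of the framework.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-in for InvalidTreeException (unchecked here for brevity).
class InvalidTreeException extends RuntimeException {
    InvalidTreeException(String message) { super(message); }
}

// A writer over an in-memory store that annotates trees with identifiers.
// Trees are modelled as the attribute maps of their root nodes.
final class AnnotatingWriter {
    // Hypothetical directive: clients set it to "true" to get no reply.
    static final String NO_REPLY = "directive:noReply";

    private final Map<String, Map<String, String>> store = new HashMap<>();
    private long lastId = 0;

    Map<String, String> add(Map<String, String> tree) {
        // Reject trees that are invalid for insertion.
        if (tree.containsKey("id")) {
            throw new InvalidTreeException("identifiers are assigned by the source");
        }
        if (!tree.containsKey("title")) {
            throw new InvalidTreeException("missing required attribute: title");
        }
        Map<String, String> stored = new HashMap<>(tree);
        boolean noReply = "true".equals(stored.remove(NO_REPLY)); // directives are not data
        stored.put("id", "t" + (++lastId));                        // annotation added at insertion
        store.put(stored.get("id"), stored);
        // Fire-and-forget: return nothing if the client asked for no reply.
        return noReply ? null : Map.copyOf(stored);
    }

    int size() { return store.size(); }
}
```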

The SourceWriter interface also defines the following methods to change data already in the bound source:

- Tree update(Tree): updates a given tree in the bound source and returns the tree as it has been updated in the source. As with insertions, the signature of the method supports sources with diverse update models:

  - If the bound source models updates in terms of replacement, the input tree may simply encode the new version of the data.

  - If instead the bound source supports in-place updates, the input tree may encode no more and no less than the exact changes to be applied to the existing data. The tree API supports in-place updates with the notion of a delta tree, i.e. a special tree that encodes the changes applied to a given tree over time. A delta tree contains only the nodes of the tree that have been added, modified, or deleted, each marked with a corresponding attribute. The API can also compute the delta tree between a tree and another tree that represents its evolution at a given point in time (cf. Node.delta()). Clients may thus compute the delta tree for a set of changes and invoke the service with it. The writer may parse the delta tree to effect the changes. More simply, it may revert to a replacement model of update: retrieve the data to be updated, transform it into a tree, and then use the tree API again to update it with the changes carried in the delta tree (cf. Node.update(Node)).

  - Under both models, the input tree can carry the directive to delete existing data rather than modify it.

  - In all cases, the plugin must document what its writers expect of the input tree. Note that the input tree must allow the writer to identify which data should be updated. If the target data cannot be identified (e.g. it no longer exists in the source), the writer must throw an UnknownTreeException. If the input tree does allow the writer to identify the target data but does not otherwise meet expectations, the writer must throw an InvalidTreeException.

- Stream<Tree> update(Stream<Tree>): updates given trees in the bound source and returns the outcomes in the same order, where the outcomes are those that update(Tree) would return for each input tree. In particular, the writer must model failures for individual trees as update(Tree) would, inserting them into the stream. It must instead throw a generic Exception if it cannot produce the stream at all.
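The following sketch reverts a delta tree to a replacement model of update. It does not reproduce the tree API's Node.update(Node). Trees are flat attribute maps, and a hypothetical Delta record carries the target identifier and only the changed attributes, each marked as added, modified, or deleted. The writer applies the changes to a copy of the stored tree, so a delta that fails validation leaves the stored data untouched.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical stand-ins for the framework's exceptions (unchecked for brevity).
class UnknownTreeException extends RuntimeException {
    UnknownTreeException(String id) { super("no tree with id " + id); }
}

class InvalidTreeException extends RuntimeException {
    InvalidTreeException(String message) { super(message); }
}

enum Kind { ADDED, MODIFIED, DELETED }

// One changed attribute of a delta tree (value is unused for DELETED).
record Change(Kind kind, String value) {}

// Hypothetical delta tree: the target identifier plus changed attributes only.
record Delta(String treeId, Map<String, Change> changes) {}

final class ReplacingWriter {
    private final Map<String, Map<String, String>> store = new HashMap<>();

    void put(String id, Map<String, String> tree) { store.put(id, new HashMap<>(tree)); }

    Map<String, String> get(String id) { return store.get(id); }

    // Replacement model: retrieve, apply the delta to a copy, store the copy.
    Map<String, String> update(Delta delta) {
        Map<String, String> current = store.get(delta.treeId());
        if (current == null) {
            throw new UnknownTreeException(delta.treeId()); // target cannot be identified
        }
        Map<String, String> updated = new HashMap<>(current);
        for (Map.Entry<String, Change> entry : delta.changes().entrySet()) {
            String attr = entry.getKey();
            Change change = entry.getValue();
            boolean present = updated.containsKey(attr);
            switch (change.kind()) {
                case ADDED -> {
                    if (present) throw new InvalidTreeException(attr + " already exists");
                    updated.put(attr, change.value());
                }
                case MODIFIED -> {
                    if (!present) throw new InvalidTreeException(attr + " does not exist");
                    updated.put(attr, change.value());
                }
                case DELETED -> {
                    if (!present) throw new InvalidTreeException(attr + " does not exist");
                    updated.remove(attr);
                }
            }
        }
        store.put(delta.treeId(), updated); // the old version is replaced
        return updated;
    }
}
```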