Difference between revisions of "Tabular Data Flow Manager"

Revision as of 18:31, 18 May 2012

The goal of this facility is to realize an integrated environment supporting the definition and management of workflows of tabular data. Each workflow consists of a number of tabular data processing steps, where each step is realized by an existing service offered by a gCube-based infrastructure.

In the following, the design rationale, key features, high-level architecture, as well as the deployment scenarios are described.

Overview

The goal of this service is to offer facilities for tabular data workflow creation, management and monitoring. A workflow can involve a number of data manipulation steps, each performed by a potentially different service, to produce the desired output. User-defined workflows can be scheduled for deferred execution, and the user is notified about workflow progress.

Design

Philosophy

Tabular Data Flow Manager offers a service for tabular data workflow creation, management and monitoring. The underlying idea is to decouple the logic needed to represent and execute workflows of tabular data processing from the individual steps, each of which takes care of part of the overall manipulation. This maximizes the exploitation and reuse of components that offer data manipulation facilities. Moreover, it makes it possible to 'codify' standard data manipulation processes (including domain-oriented ones) and execute them whenever data requiring such processing become available.
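The decoupling described above can be illustrated with a minimal sketch. It is not the service's actual API: `Step`, `FunctionStep`, `Workflow`, the `on_progress` callback and the two sample functions are hypothetical names introduced only for illustration, and local functions stand in for calls to remote services.

```python
from dataclasses import dataclass, field
from typing import Callable, Optional, Protocol

# Assumption: a table is modelled as a plain list of row dictionaries.
Table = list[dict[str, object]]


class Step(Protocol):
    """One processing step. In a real deployment a remote service would do the work."""

    name: str

    def run(self, table: Table) -> Table: ...


@dataclass
class FunctionStep:
    """Hypothetical step that wraps a local function in place of a service call."""

    name: str
    func: Callable[[Table], Table]

    def run(self, table: Table) -> Table:
        return self.func(table)


@dataclass
class Workflow:
    """Ordered list of steps. The workflow knows nothing about what each step does."""

    steps: list[Step] = field(default_factory=list)

    def execute(
        self,
        table: Table,
        on_progress: Optional[Callable[[str, int, int], None]] = None,
    ) -> Table:
        total = len(self.steps)
        for index, step in enumerate(self.steps, start=1):
            table = step.run(table)
            # Report progress after each step so a caller can monitor the run.
            if on_progress is not None:
                on_progress(step.name, index, total)
        return table


def drop_empty_rows(table: Table) -> Table:
    """Keep only rows that have at least one non-empty value."""
    return [row for row in table if any(v is not None for v in row.values())]


def add_row_id(table: Table) -> Table:
    """Add a 1-based identifier to every row."""
    return [{**row, "id": i} for i, row in enumerate(table, start=1)]


if __name__ == "__main__":
    workflow = Workflow(
        [FunctionStep("drop_empty", drop_empty_rows), FunctionStep("add_id", add_row_id)]
    )
    result = workflow.execute(
        [{"species": "cod"}, {"species": None}],
        on_progress=lambda name, done, total: print(f"{name}: {done}/{total}"),
    )
    print(result)
```

Because `Workflow` depends only on the `Step` interface, the same step can be reused in several workflows, and a standard process can be stored once as a list of steps and executed whenever suitable data arrive.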

Architecture

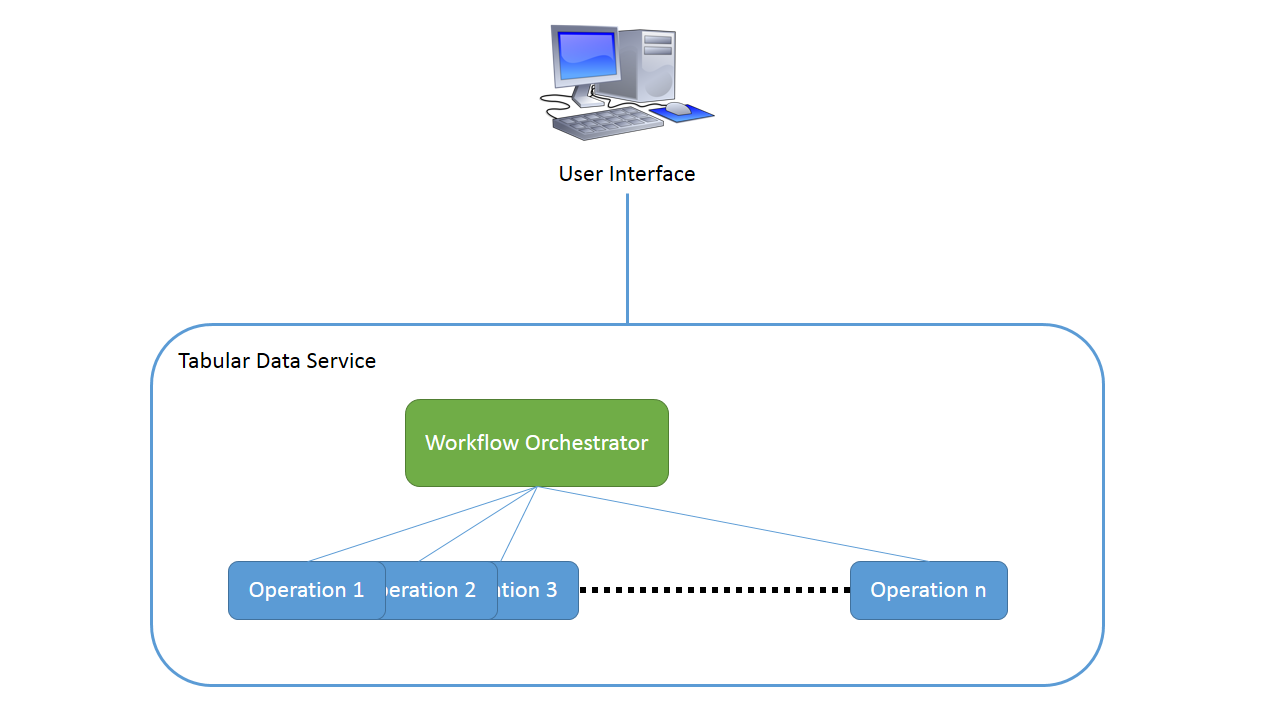

The subsystem comprises the following components:

- Tabular Data Flow Service: the core element of this functional area. It offers workflow creation, management and monitoring functions;

- Tabular Data Flow UI: the user interface of this functional area. It provides users with a web-based interface for creating, executing and monitoring workflows;

- Tabular Data Agent: a helper component that every service willing to offer tabular data manipulation functionality has to be equipped with.

The relationships between these components are shown in the architecture diagram above.

Deployment

The Service should be deployed on a single node, while an agent should be deployed alongside each service that wants to offer its functionality to the flow service. The User Interface can be deployed in the infrastructure portal.

Use Cases

Well suited Use Cases

This component fits well in all cases where it is necessary to manage a defined flow of data manipulation steps, where the steps are performed by potentially different service instances. An example is the data flow leading to the production of an enhanced version of a data set containing catch statistics: the data are initially massaged by the Time Series Service, which takes care of associating the proper Reference Data with them, and are then passed to the Occurrence Data Enrichment Service to be improved with additional data. Finally, the enriched dataset goes to the Statistical Service, with the goal of extracting certain features via data mining algorithms.
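The catch statistics flow can be sketched as three chained steps. This is only an illustration under stated assumptions: the three functions are local stand-ins for the Time Series Service, the Occurrence Data Enrichment Service and the Statistical Service, not their real interfaces; the lookup tables and column names are invented sample data; and a simple per-species total stands in for the data mining step.

```python
from collections import defaultdict
from functools import reduce

# Assumption: illustrative reference data and enrichment lookups, not real service output.
REFERENCE_SPECIES = {"COD": "Atlantic cod", "HER": "Atlantic herring"}
AREA_NAMES = {"27": "Northeast Atlantic"}


def associate_reference_data(rows):
    """Stand-in for the Time Series Service: resolve species codes against reference data."""
    return [
        {**row, "species_name": REFERENCE_SPECIES.get(row["species_code"], "unknown")}
        for row in rows
    ]


def enrich_with_area(rows):
    """Stand-in for the Occurrence Data Enrichment Service: add a readable area name."""
    return [{**row, "area_name": AREA_NAMES.get(row["area"], "unknown")} for row in rows]


def total_catch_per_species(rows):
    """Stand-in for the Statistical Service: aggregate tonnes per species."""
    totals = defaultdict(float)
    for row in rows:
        totals[row["species_name"]] += row["tonnes"]
    return dict(totals)


# The flow is just an ordered list of steps; each step consumes the previous step's output.
CATCH_FLOW = [associate_reference_data, enrich_with_area, total_catch_per_species]


def run_flow(data, steps):
    """Apply each step in order to the data."""
    return reduce(lambda current, step: step(current), steps, data)


if __name__ == "__main__":
    catches = [
        {"species_code": "COD", "area": "27", "tonnes": 10.0},
        {"species_code": "COD", "area": "27", "tonnes": 5.0},
        {"species_code": "HER", "area": "27", "tonnes": 2.5},
    ]
    print(run_flow(catches, CATCH_FLOW))
```

In an actual deployment each step would be executed by a different service instance through its agent, and the flow definition would be held by the flow service rather than in a local list.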