Difference between revisions of "Statistical Manager"


Revision as of 11:05, 9 May 2012

A cross-usage service developed in iMarine Work Package 10, aiming to provide users and services with tools for performing data mining operations. This document outlines the design rationale, key features, and high-level architecture, as well as the deployment options.

Overview

The goal of this service is to offer a single access point for performing data mining or statistical operations on heterogeneous data. Data can reside on the client side in the form of CSV files, be remotely hosted as SDMX documents, or be stored in a database.

The service takes such inputs and executes the requested operation by invoking the most suitable computational infrastructure, choosing among a set of available possibilities: executions can run on multi-core machines or on different computational infrastructures, such as D4Science itself or Windows Azure, among other options.

Algorithms are implemented as plug-ins, which makes it easy to inject and deploy new functionality.
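The plug-in idea can be illustrated with a minimal sketch, assuming a simple in-process registry. The names `ALGORITHMS`, `register`, and `run`, as well as the two toy algorithms, are hypothetical and are not taken from the Statistical Manager code base; the sketch only shows why adding an algorithm requires no change to the calling code.

```python
from typing import Callable, Dict, List

# Hypothetical registry: maps an algorithm name to the callable implementing it.
ALGORITHMS: Dict[str, Callable[[List[float]], float]] = {}


def register(name: str):
    """Decorator that adds a function to the registry under the given name."""
    def decorator(func: Callable[[List[float]], float]):
        ALGORITHMS[name] = func
        return func
    return decorator


@register("mean")
def mean(values: List[float]) -> float:
    # Toy algorithm: arithmetic mean of the input values.
    return sum(values) / len(values)


@register("range")
def value_range(values: List[float]) -> float:
    # Toy algorithm: difference between the largest and smallest value.
    return max(values) - min(values)


def run(name: str, values: List[float]) -> float:
    """Look up a plug-in by name and execute it; unknown names raise KeyError."""
    return ALGORITHMS[name](values)
```

In this sketch, a new algorithm becomes available to `run` as soon as its module is imported and the decorator has executed; the dispatcher itself is never edited.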

Design

Philosophy

The service represents a single endpoint for clients or services that want to perform complex operations without investigating the details of the implementation. The set of operations that can be performed is currently divided into:

- Generators

- Modelers

- Transducers

- Evaluators

Further details are available at the Ecological Modeling wiki page (https://gcube.wiki.gcube-system.org/gcube/index.php/Ecological_Modeling), where some experiments are shown along with explanations of the algorithms.
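One way to picture a single endpoint that groups operations into these four families is the sketch below. It is only an illustration under stated assumptions: the `Category` enum mirrors the list above, while the `Endpoint` class, its methods, and the toy operations `scale` and `mean_absolute_error` are hypothetical placeholders and do not describe the real service interface or what each family actually contains.

```python
from enum import Enum
from typing import Any, Callable, Dict, List, Tuple


class Category(Enum):
    # The four operation families named in the text.
    GENERATOR = "Generators"
    MODELER = "Modelers"
    TRANSDUCER = "Transducers"
    EVALUATOR = "Evaluators"


class Endpoint:
    """Hypothetical single entry point; clients never touch implementations."""

    def __init__(self) -> None:
        self._ops: Dict[Tuple[Category, str], Callable[..., Any]] = {}

    def add(self, category: Category, name: str, func: Callable[..., Any]) -> None:
        self._ops[(category, name)] = func

    def list_operations(self, category: Category) -> List[str]:
        # Names of all operations registered under one family, sorted.
        return sorted(n for (c, n) in self._ops if c is category)

    def execute(self, category: Category, name: str, *args: Any) -> Any:
        return self._ops[(category, name)](*args)


def build_demo_endpoint() -> Endpoint:
    """Populate an endpoint with placeholder operations (not real algorithms)."""
    ep = Endpoint()
    ep.add(Category.TRANSDUCER, "scale",
           lambda values, k: [v * k for v in values])
    ep.add(Category.EVALUATOR, "mean_absolute_error",
           lambda a, b: sum(abs(x - y) for x, y in zip(a, b)) / len(a))
    return ep
```

A client of such an endpoint would only need a family and an operation name, for example `build_demo_endpoint().execute(Category.TRANSDUCER, "scale", [1, 2], 2)`.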

Architecture

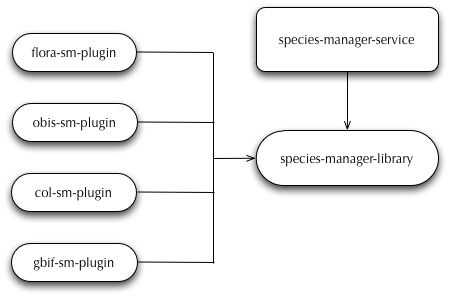

The subsystem comprises the following components:

- species-products-dicovery-service: a stateless Web Service that exposes read operations and implements them by delegation to dynamically deployable plugins for target repository sources within and outside the system;

- species-products-dicovery-library: a client library that implements a high-level facade to the remote APIs of the Species manager service;

- obis-spd-plugin: a plugin of the Species Products Discovery service that interacts with OBIS data source;

- gbif-spd-plugin: a plugin of the Species Products Discovery service that interacts with GBIF data source;

- catalogueoflife-spd-plugin: a plugin of the Species Products Discovery service that interacts with Catalogue of Life data source;

- flora-spd-plugin: a plugin of the Species Products Discovery service that interacts with Brazilian Flora data source;

- worms-spd-plugin: a plugin of the Species Products Discovery service that interacts with WoRMS data source;

- specieslink-spd-plugin: a plugin of the Species Products Discovery service that interacts with SpeciesLink data source.
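The delegation pattern described in this list, a stateless service that forwards each read operation to one plugin per repository, can be sketched as follows. This is an assumption-laden illustration: `SourcePlugin`, `InMemoryPlugin`, `DiscoveryService`, and the `search` method are hypothetical names, and the in-memory plugin merely stands in for code that would contact a remote source such as OBIS or GBIF.

```python
from typing import Dict, List, Protocol


class SourcePlugin(Protocol):
    """Interface each repository plugin is assumed to implement (hypothetical)."""
    name: str

    def search(self, scientific_name: str) -> List[str]:
        ...


class InMemoryPlugin:
    """Stand-in for a repository plugin; a real one would query a remote source."""

    def __init__(self, name: str, records: List[str]) -> None:
        self.name = name
        self.records = records

    def search(self, scientific_name: str) -> List[str]:
        # Case-insensitive substring match over the locally held records.
        needle = scientific_name.lower()
        return [r for r in self.records if needle in r.lower()]


class DiscoveryService:
    """Keeps no per-request state: every call is delegated to the plugins."""

    def __init__(self, plugins: List[SourcePlugin]) -> None:
        self._plugins = {p.name: p for p in plugins}

    def search(self, scientific_name: str) -> Dict[str, List[str]]:
        # Fan the query out to every plugin and key the results by source name.
        return {name: p.search(scientific_name)
                for name, p in self._plugins.items()}
```

Supporting another repository in this sketch means passing one more object with a `name` and a `search` method to `DiscoveryService`; the service code stays unchanged.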

A diagram of the relationships between these components is shown in the following figure:

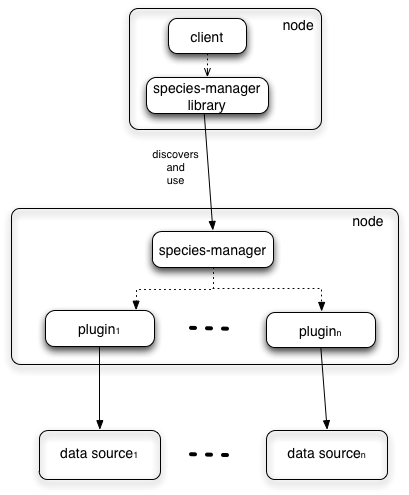

Deployment

All the components of the subsystem must be deployed together on a single node. The subsystem can be replicated on multiple hosts, but this does not guarantee a performance improvement, because the scalability of the system depends on the capacity of the external repositories contacted by the plugins. There are no temporal constraints on the co-deployment of services and plugins. Every plugin must be deployed on every instance of the service. The subsystem is lightweight: it does not need excessive memory or disk space.
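The rule that every plugin must be present on every instance of the service lends itself to a simple consistency check. The sketch below is hypothetical: the function `missing_plugins` and the host names in the usage example are invented for illustration, and how deployed plugins are actually discovered on a node is outside its scope.

```python
from typing import Dict, Set


def missing_plugins(required: Set[str],
                    instances: Dict[str, Set[str]]) -> Dict[str, Set[str]]:
    """Return, per instance, the required plugins that are not deployed there.

    Instances that already host every required plugin are left out, so an
    empty result means the deployment satisfies the rule.
    """
    gaps: Dict[str, Set[str]] = {}
    for host, deployed in instances.items():
        absent = required - deployed
        if absent:
            gaps[host] = absent
    return gaps
```

For example, with `required = {"obis-spd-plugin", "gbif-spd-plugin"}` and a hypothetical host `node-b` that hosts only `obis-spd-plugin`, the function reports `{"node-b": {"gbif-spd-plugin"}}`.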

Small deployment

Use Cases

Well suited Use Cases

The subsystem is particularly suited to supporting abstraction over biodiversity data. Any biodiversity repository can be easily integrated into this subsystem by developing a plugin.

Developing a plugin for the Species Manager service immediately extends the system's ability to discover new biodiversity data.