Data Transfer Scheduler

Data Transfer Scheduler Service

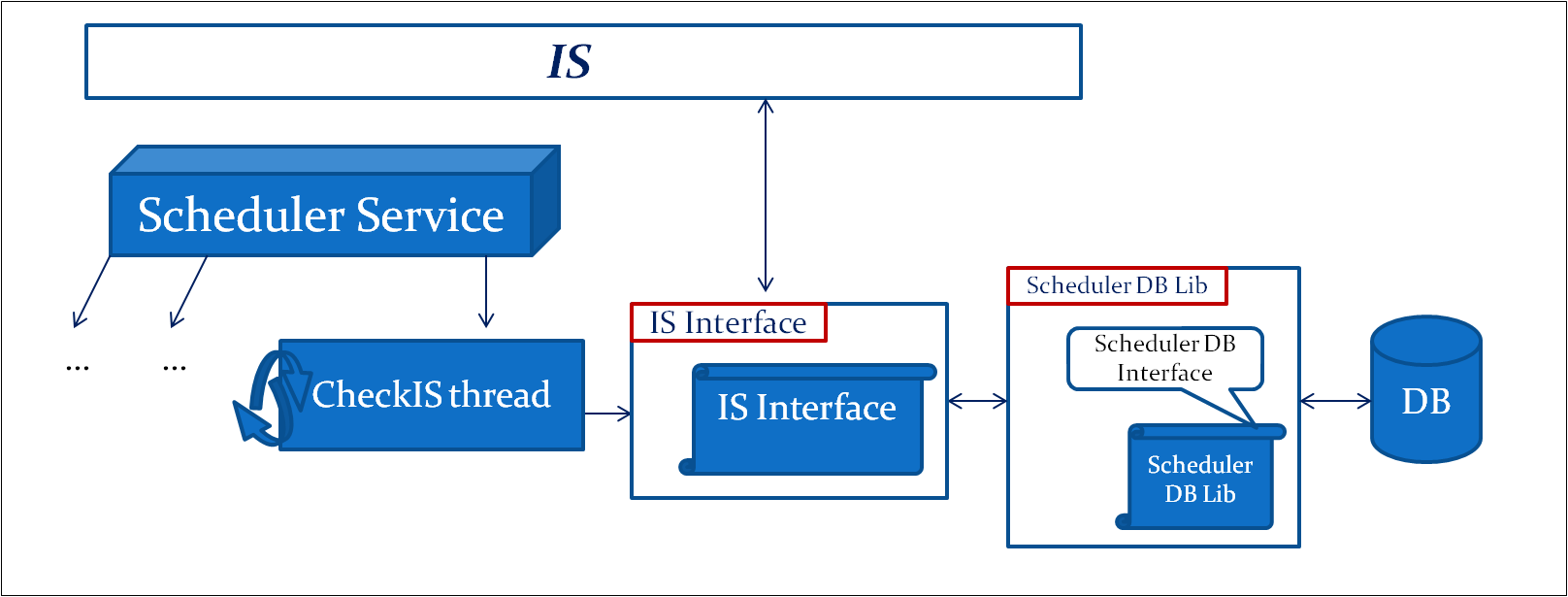

The Data Transfer Scheduler Service is responsible for scheduling transfers. It delegates the actual transfer logic to the Data Transfer Agents deployed on the infrastructure.

Architecture

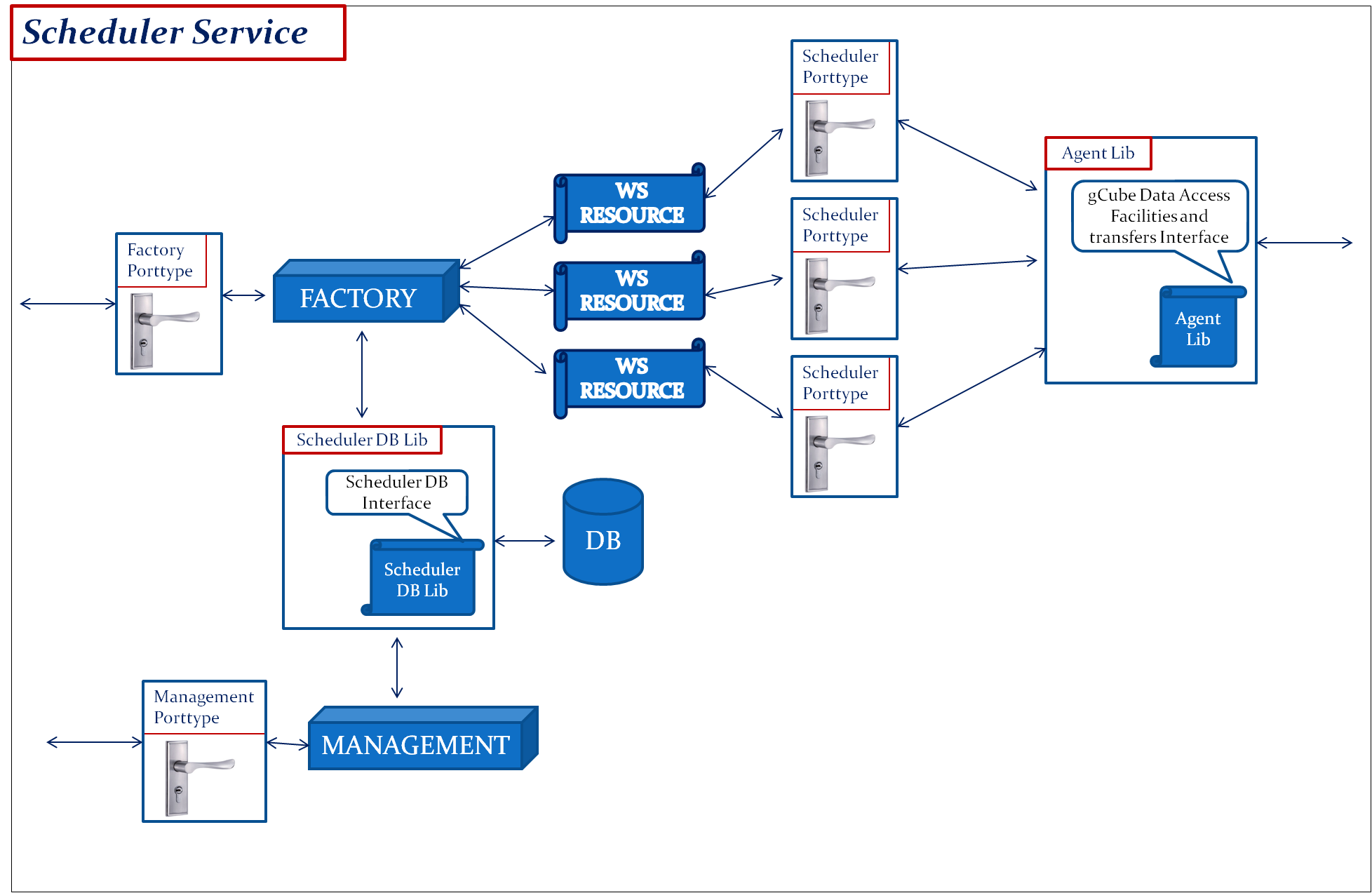

In detail, the main service consists of the scheduler service (a singleton) and the management service.

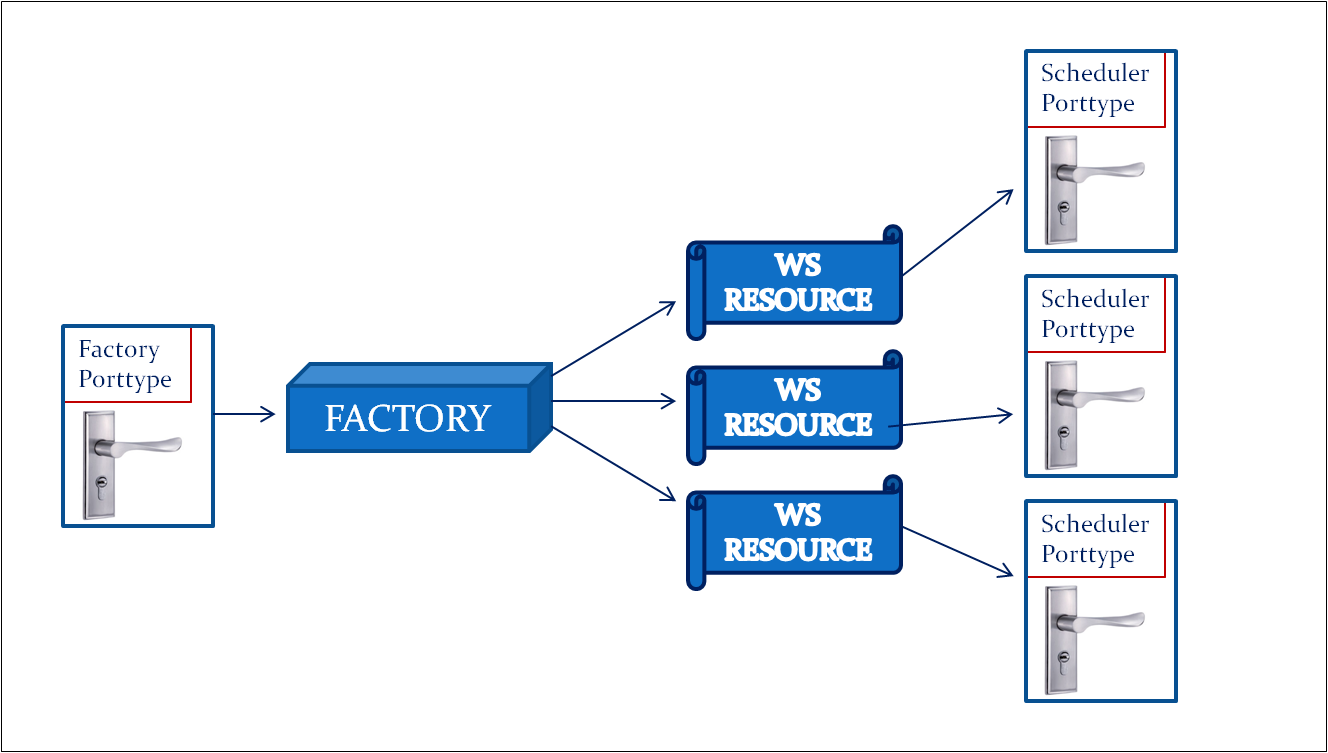

- The scheduler service has the factory porttype, through which the submitting API of the Data Transfer Scheduler Library accesses it. Every WS-Resource that the factory creates for a different transfer operation has one scheduler porttype.

factory porttype: This porttype checks whether the client has already been registered. If it has, the porttype simply returns the EndpointReferenceType of the client's resource; otherwise it generates a new key and creates a unique resource for the client. The operation is called checkIn; it takes a unique id (possibly a name) as input and returns an EndpointReferenceType.

scheduler porttype: This is the main porttype of the service, because it is where the client passes the scheduling information. Its storeInfoScheduler operation takes a SchedulerObj (which holds all the information for a scheduled transfer, such as source, destination and type of transfer) as input and returns nothing. This operation stores that information in the local DB.
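The check-in behaviour of the factory porttype can be illustrated with a minimal sketch. It is not the service's code: the class `CheckInRegistry` and its methods are hypothetical, and a plain `String` key stands in for the EndpointReferenceType so that the example stays self-contained.

```java
import java.util.Map;
import java.util.UUID;
import java.util.concurrent.ConcurrentHashMap;

/** Hypothetical stand-in for the check-in logic of the factory porttype. */
final class CheckInRegistry {
    // Maps a client id to the key of the resource created for that client.
    private final Map<String, String> resourceKeys = new ConcurrentHashMap<>();

    /**
     * Returns the resource key of the client, creating a new one on first contact.
     * Assumption: the real operation returns an EndpointReferenceType instead.
     */
    String checkIn(String clientId) {
        return resourceKeys.computeIfAbsent(clientId, id -> UUID.randomUUID().toString());
    }

    /** Tells whether the client has already checked in. */
    boolean isRegistered(String clientId) {
        return resourceKeys.containsKey(clientId);
    }
}
```

Calling `checkIn` twice with the same id yields the same key, while a different id yields a different one, which mirrors the "already registered or not" distinction made in the text.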

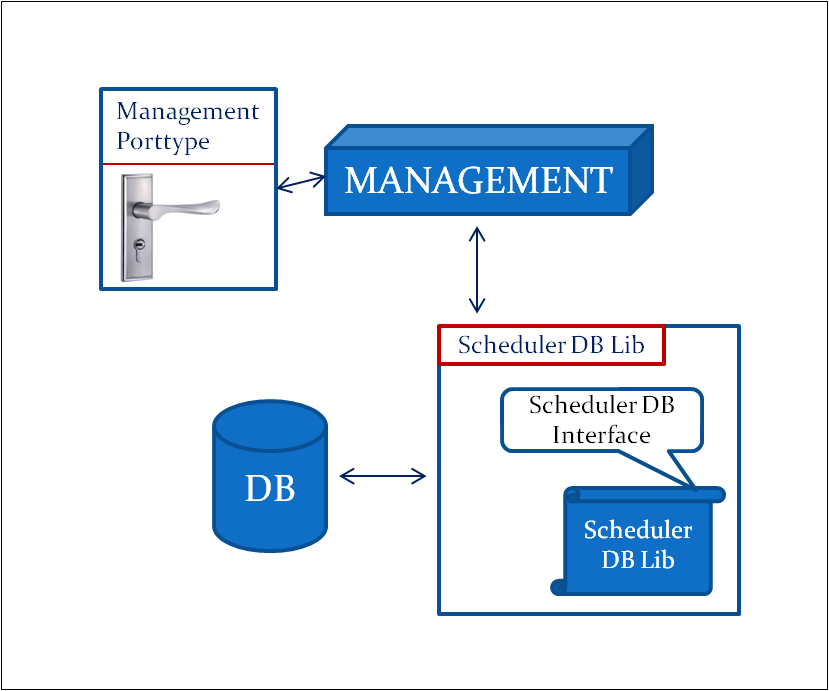

- The management service has the management porttype, through which the management API of the Data Transfer Scheduler Library can get and set information about the users, the scopes, the files and whatever else the client wants to manage.

Both the Scheduler and the Management services use the Data Transfer Scheduler Database Library to access the local DB, each for a different purpose. The first stores the details of the transfer scheduling; the second stores or gets information about the local GHN.

The following figure shows all the parts of the Data Transfer Scheduler Service:

Types of Transfer

The client can choose between the following transfer types:

- FileBasedTransfer

- TreeBasedTransfer

The fileTransfer covers transfers from Workspace/MongoDB/DataSource/Agent's node/URI to MongoDB/DataStorage/Agent's node.

The treeTransfer covers transfers from one tree collection to another.

Types of Schedule

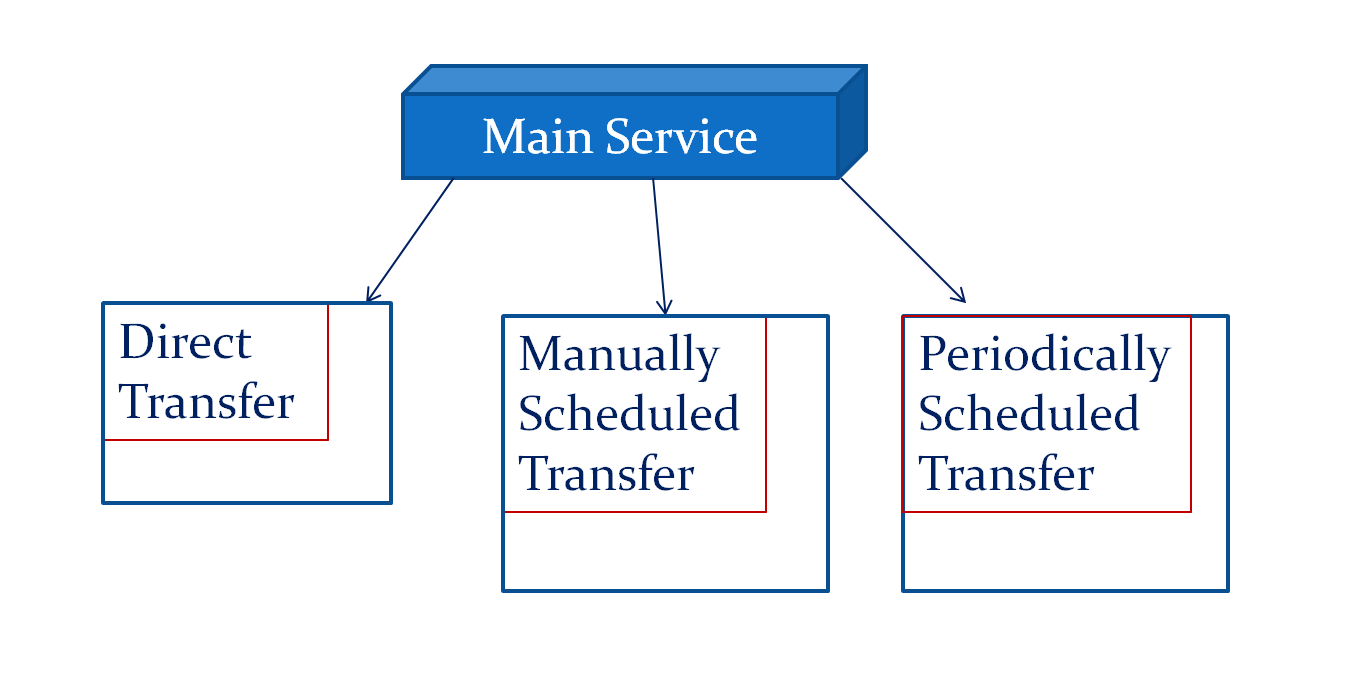

There are three types of schedule:

- Direct Transfer

- Manually Scheduled

- Periodically Scheduled

In a Direct Transfer the client simply submits a transfer without any schedule. The service performs the transfer immediately through the agent library.

In a Manually Scheduled Transfer the client submits a transfer together with the date on which it should start. The given date must be a single specific instant, and the transfer takes place only once.

Actual scheduling happens in the Periodically Scheduled Transfer, where the client sets the period at which the transfer should take place. There are six options: every minute, hour, day, week, month or year. For this type of schedule the client must also give the start date of the schedule. As in the manually scheduled transfer, this is a specific instant, and it marks the beginning of the scheduled transfer.
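As an illustration of how run times could be derived from a start date and one of the six periods, here is a small sketch built on `java.time`. The class `PeriodicSchedule`, the enum `Frequency` and both methods are hypothetical names chosen for this example; the service's own computation is not shown in this document and may differ.

```java
import java.time.LocalDateTime;

/** Hypothetical helper that derives run times for a periodic schedule. */
final class PeriodicSchedule {
    /** The six periods named in the text. */
    enum Frequency { MINUTE, HOUR, DAY, WEEK, MONTH, YEAR }

    /** Adds exactly one period to the given instant. */
    static LocalDateTime plusOnePeriod(LocalDateTime t, Frequency f) {
        switch (f) {
            case MINUTE: return t.plusMinutes(1);
            case HOUR:   return t.plusHours(1);
            case DAY:    return t.plusDays(1);
            case WEEK:   return t.plusWeeks(1);
            case MONTH:  return t.plusMonths(1);
            case YEAR:   return t.plusYears(1);
            default:     throw new IllegalArgumentException("unknown frequency: " + f);
        }
    }

    /** First run at or after 'now', stepping in whole periods from 'start'. */
    static LocalDateTime nextRun(LocalDateTime start, Frequency f, LocalDateTime now) {
        LocalDateTime next = start;
        while (next.isBefore(now)) {
            next = plusOnePeriod(next, f);
        }
        return next;
    }
}
```

With a start of 2024-01-01 10:00 and a daily period, `nextRun` evaluated at 2024-01-03 09:00 steps over the first two occurrences and returns 2024-01-03 10:00.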

Transfer Characteristics

- Each transfer can have more than one transfer object.

- The status of every transfer is updated at each stage of the transfer.

- The possible statuses are: STANDBY (the transfer has not started yet), ONGOING, CANCELED, FAILED, COMPLETED.

- Depending on its type of schedule, a transfer may or may not change its status again when it finishes. In particular, a periodically scheduled transfer must run at every occurrence defined by its schedule. Consequently, each time such a transfer ends with status COMPLETED or FAILED, its status is changed back to STANDBY because it needs to run again.
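The status rule for finished transfers can be written down as a small function. The sketch below is only an illustration: the class `TransferStatusRules`, the enum `ScheduleType` and the method `statusAfterRun` are hypothetical names, while the status values are the ones listed above.

```java
/** Hypothetical encoding of the status rule for finished transfers. */
final class TransferStatusRules {
    enum Status { STANDBY, ONGOING, CANCELED, FAILED, COMPLETED }

    enum ScheduleType { DIRECT, MANUAL, PERIODIC }

    /**
     * Status to store once a run has ended with 'outcome'.
     * A periodic transfer returns to STANDBY after COMPLETED or FAILED,
     * because it must run again; every other combination keeps the outcome.
     */
    static Status statusAfterRun(ScheduleType type, Status outcome) {
        boolean finished = outcome == Status.COMPLETED || outcome == Status.FAILED;
        if (type == ScheduleType.PERIODIC && finished) {
            return Status.STANDBY;
        }
        return outcome;
    }
}
```

Under this rule a periodic transfer that fails is queued again, whereas a manually scheduled transfer that fails stays FAILED.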

Main Service Operations

The main (stateful) scheduler service currently provides the following operations:

- storeInfoScheduler

- cancelScheduledTransfer

- monitorScheduledTransfer

- getScheduledTransferOutcomes

The first one is the major operation: it receives the string message, converts it to the appropriate objects and stores the transfer, including its schedule, in the DB. The return value is the transfer identifier in the Scheduler DB.

The second operation cancels a scheduled transfer. It first checks the type of schedule and the status of the transfer, and its behavior differs from case to case. For example, if the status is STANDBY it does not need to contact the Agent because the transfer has not started yet. In this case it just changes the transfer's status from STANDBY to CANCELED.

While a transfer is in progress, the thread responsible for it waits for the result of the transfer. More precisely, at that point the thread calls the monitor operation of the Agent Service and waits for its result. Monitoring is therefore built into the core of the service. If monitoring from outside is wanted, however, the client can pass the transfer identifier (received from the storeInfoScheduler operation) to monitorScheduledTransfer and receive the scheduler's monitoring result. This method always behaves the same way: it returns the status of the transfer as stored in the scheduler DB.

The last operation retrieves the outcomes of a specific transfer. If it is called before the transfer has started, a message saying so is returned as the result.

NOTE: None of the last three operations can be called if the transfer type is LocalFileBasedTransfer, because this is the only case in which the Scheduler and the Agent interact synchronously.

Internal Operations

Besides the operations above, the scheduler needs some others for its own work. These operations are internal and are not meant for interaction with clients.

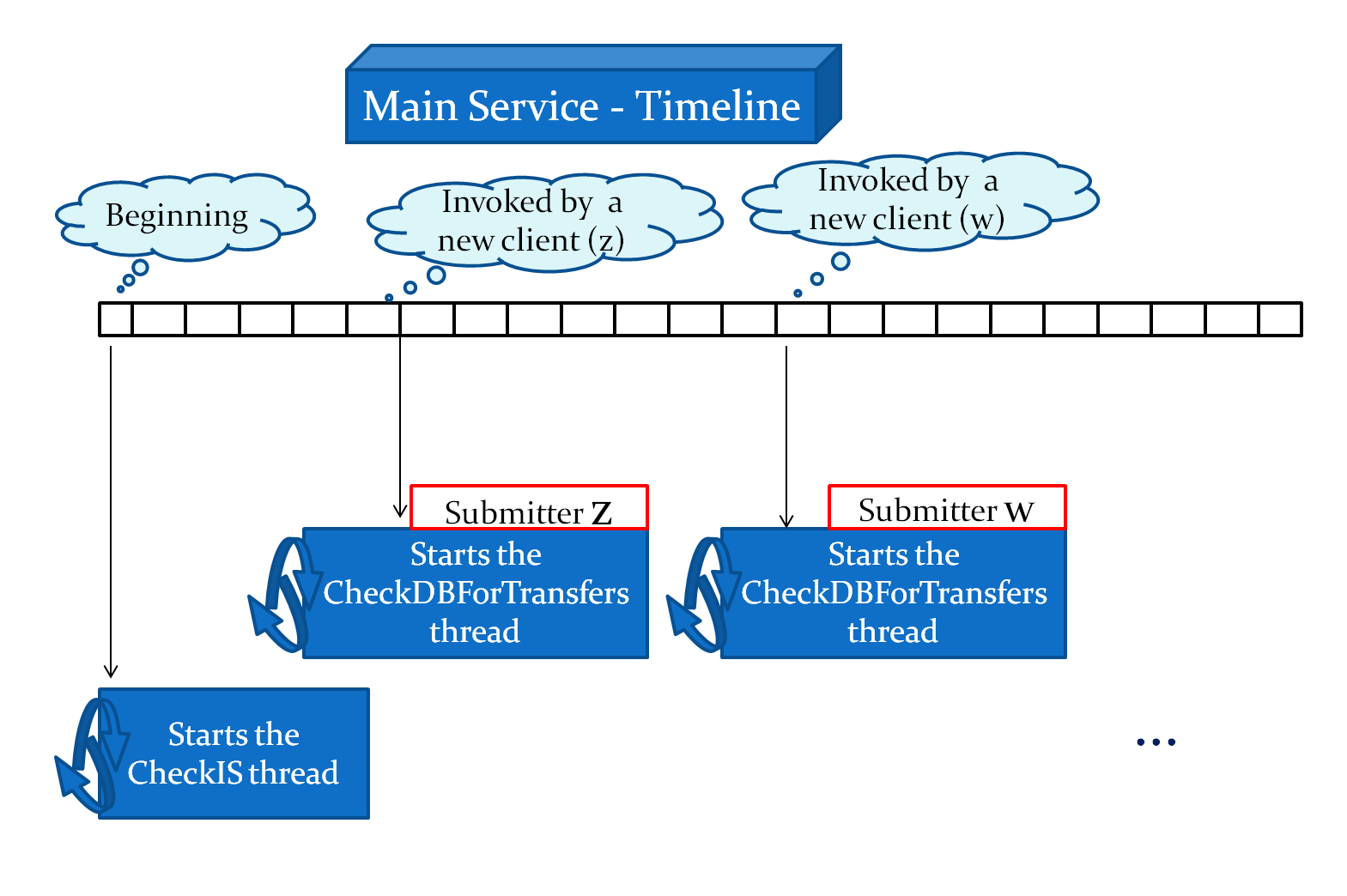

- CheckDBForTransfers

The first time a new client connects to the factory and creates its resource, the factory also starts a thread called CheckDBForTransfers. This thread checks the DB at a fixed time interval (given in the deploy-jndi-config file) for transfers from the same submitter that need to start. If a transfer needs to take place, CheckDBForTransfers starts another thread called TransferHandler and passes the current information to it so that it can manage that specific transfer.

In case of a very long time interval:

- If the client submits a direct transfer, the transfer takes place immediately and the time interval is not changed.

- When a manually scheduled transfer is submitted that is due before the thread's next check, the time interval is changed so that the transfer is picked up in time.

- When a periodically scheduled transfer is submitted whose period is shorter than the time interval, the time interval is replaced.

In case of a start date in the past:

- If the client submits a manually or periodically scheduled transfer whose start instant is in the past, the start instant is replaced with the present instant and the transfer starts immediately.
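The adjustment rules above can be sketched as three pure functions. This is an illustration under stated assumptions, not the service's code: the class `CheckIntervalRules` and its methods are hypothetical, and since the text does not say what the interval is replaced with for periodic transfers, the sketch assumes it is replaced by the transfer's own period.

```java
import java.time.Duration;
import java.time.Instant;

/** Hypothetical encoding of the check-interval rules of CheckDBForTransfers. */
final class CheckIntervalRules {
    /** A start instant in the past is replaced by the present instant. */
    static Instant effectiveStart(Instant requestedStart, Instant now) {
        return requestedStart.isBefore(now) ? now : requestedStart;
    }

    /**
     * Delay until the next DB check after a manually scheduled transfer is submitted.
     * The check is brought forward when the transfer is due before the regular check.
     */
    static Duration delayForManual(Duration untilNextCheck, Instant start, Instant now) {
        Duration untilStart = Duration.between(now, effectiveStart(start, now));
        return untilStart.compareTo(untilNextCheck) < 0 ? untilStart : untilNextCheck;
    }

    /**
     * Interval to use after a periodic transfer is submitted.
     * Assumption: a shorter period replaces the current interval.
     */
    static Duration intervalForPeriodic(Duration currentInterval, Duration period) {
        return period.compareTo(currentInterval) < 0 ? period : currentInterval;
    }
}
```

For example, with ten minutes left until the next regular check, a manual transfer due in two minutes shortens the delay to two minutes, while one due in an hour leaves it at ten.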

- CheckIS

The service needs to know which agents and storages exist in the infrastructure. For this reason the Information System (IS) has to be checked continuously and the DB updated accordingly. When the Scheduler Service starts, it launches a new thread named CheckIS for this purpose. The thread checks the IS at the interval given in the deploy-jndi-config file and updates the DB with the information from the IS.

Note: When the container starts, the check interval is very short for the first minute, so that all the necessary information is retrieved at the beginning; afterwards it takes the value given in the configuration file.
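The warm-up behaviour of CheckIS can be pictured as a function that picks the delay before the next check. The sketch is hypothetical: `WarmUpInterval` and `nextDelay` are illustrative names, and the five-second warm-up interval is an assumed value, since the text only says the interval is "very short" during the first minute.

```java
import java.time.Duration;

/** Hypothetical selection of the CheckIS delay during and after warm-up. */
final class WarmUpInterval {
    // Length of the warm-up phase, as stated in the text.
    static final Duration WARM_UP_WINDOW = Duration.ofMinutes(1);

    // Assumed value: the text does not give the short interval.
    static final Duration WARM_UP_INTERVAL = Duration.ofSeconds(5);

    /** Delay before the next IS check, given the time elapsed since container start. */
    static Duration nextDelay(Duration sinceStart, Duration configuredInterval) {
        return sinceStart.compareTo(WARM_UP_WINDOW) < 0 ? WARM_UP_INTERVAL : configuredInterval;
    }
}
```

Ten seconds after start the function returns the short interval; from the one-minute mark onwards it returns the configured one.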

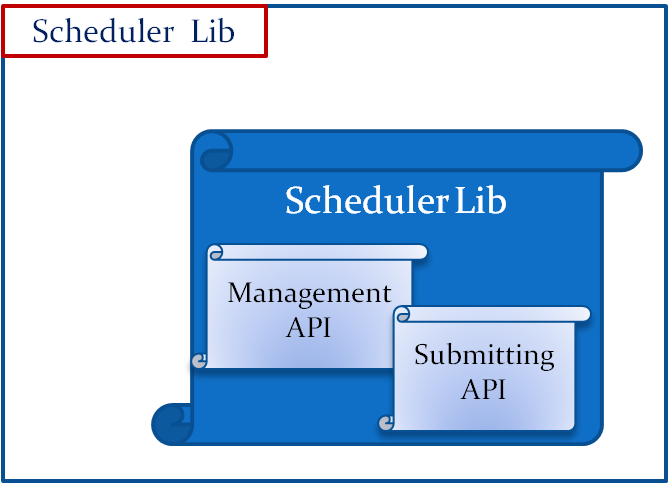

Data Transfer Scheduler Library

Architecture

The Data Transfer Scheduler Library is the client library implementing the API for data transfer scheduling. It consists of two separate APIs, one for management and one for scheduling.

- The management API is responsible for:

- Retrieving information about the transfers.

- Retrieving objects from the IS (agents, data sources etc.).

- Checking the existence of the above objects in the DB.

- Retrieving information regarding the agent statistics.

- The scheduling API is responsible for submitting one or several transfer operations, canceling a transfer, monitoring a transfer and getting the outcomes of a transfer.

The library implements asynchronous operations for data transfer scheduling.

As an initial overview of the API, the following simple code snippet invokes the method that retrieves information about the transfers.

    ScopeProvider.instance.set("/gcube/devsec");
    // Management Library
    ManagementLibrary managementLibrary = (ManagementLibrary) transferManagement()
            .at("node18.d.d4science.research-infrastructures.eu", 8081)
            .withTimeout(10, TimeUnit.SECONDS)
            .build();
    // getAllTransfersInfo
    String resourceName = "ALL";
    CallingManagementResult callingManagementResult = managementLibrary.getAllTransfersInfo(resourceName);
    if (callingManagementResult == null) {
        logger.debug("null result from service");
    } else if (callingManagementResult.getAllTheTransfersInDB() == null) {
        logger.debug("null transfer info from service");
    } else {
        List<TransferInfo> objs = callingManagementResult.getAllTheTransfersInDB();
        for (TransferInfo obj : objs) {
            logger.debug("TransferId=" + obj.getTransferId()
                    + " -- Status=" + obj.getStatus()
                    + " -- Submitter=" + obj.getSubmitter());
            if (obj.getTypeOfSchedule().isDirectedScheduled()) {
                logger.debug(" -- directedScheduled\n");
            } else if (obj.getTypeOfSchedule().getManuallyScheduled() != null) {
                Calendar calendarTmp = obj.getTypeOfSchedule().getManuallyScheduled().getCalendar();
                // Calendar.MONTH is zero-based, so add 1 for display.
                logger.debug(" -- manuallyScheduled for: "
                        + calendarTmp.get(Calendar.DAY_OF_MONTH) + "-"
                        + (calendarTmp.get(Calendar.MONTH) + 1) + "-"
                        + calendarTmp.get(Calendar.YEAR) + " (day-month-year) at "
                        + calendarTmp.get(Calendar.HOUR_OF_DAY) + ":"
                        + calendarTmp.get(Calendar.MINUTE) + " (hour-minute)\n");
            } else if (obj.getTypeOfSchedule().getPeriodicallyScheduled() != null) {
                logger.debug(" -- periodicallyScheduled with FrequencyType:"
                        + obj.getTypeOfSchedule().getPeriodicallyScheduled().getFrequency().getValue() + "\n");
            }
        }
    }

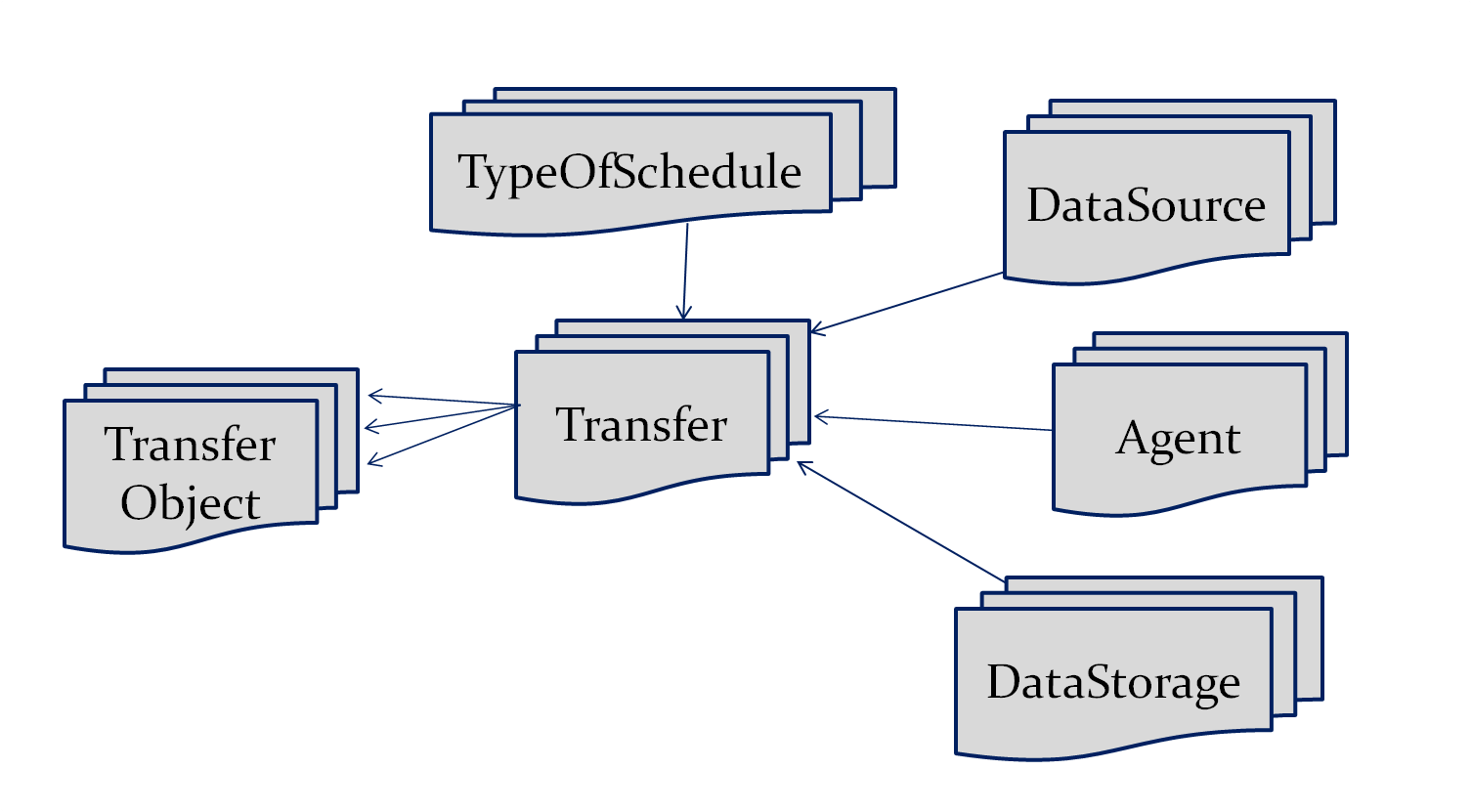

Data Transfer Scheduler Database Library

The Data Transfer Scheduler Database Library implements the API through which the Data Transfer Scheduler Service (either the scheduler or the management service) accesses the database. The main structure of the DB, without details, is shown in the following picture.

Each transfer entity is identified by a unique transfer id, which is different from the transfer id that the Agent Service may keep for the same transfer. Besides the unique id, it holds the following information:

- typeOfScheduleId (The id of the TypeOfSchedule entity)

- submitter (The one who submits the transfer)

- status (The status point)

- scope (the needed scope in case of calling agent service)

- agentId (The id of the Agent)

- transferType (either 'LocalFileBased', 'FileBased' or 'TreeBased')

- destinationFolder (the folder for storing the transferred objects)

- overwrite

- unzipFile

- sourceId (The id of the DataSource) - if having data source node

- storageId (The id of the DataStorage) - if having data storage node

- objectTrasferredIDs (The ids of the transfer objects that were transferred successfully)

- objectFailedIDs (The ids of the transfer objects that were not transferred because of a failure)

- transferError (The error that occurred in case of a failure)

As we can see, the transfer entity holds no information about the objects that need to be transferred. This is because each transfer object keeps the id of its transfer, and not the other way round.
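The direction of this reference can be shown with two small classes. The sketch is only illustrative: `TransferModel`, its nested classes and `objectsOf` are hypothetical names, and each class carries just enough fields to show that the transfer object points to the transfer rather than the reverse.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical in-memory model of the transfer / transfer-object relation. */
final class TransferModel {
    /** The transfer entity: it holds no list of its objects. */
    static final class Transfer {
        final String transferId;
        final String submitter;

        Transfer(String transferId, String submitter) {
            this.transferId = transferId;
            this.submitter = submitter;
        }
    }

    /** A transfer object: it keeps the id of the transfer it belongs to. */
    static final class TransferObject {
        final String objectId;
        final String transferId;

        TransferObject(String objectId, String transferId) {
            this.objectId = objectId;
            this.transferId = transferId;
        }
    }

    /** Finds the objects of a transfer by filtering on the back-reference. */
    static List<TransferObject> objectsOf(Transfer transfer, List<TransferObject> all) {
        List<TransferObject> result = new ArrayList<>();
        for (TransferObject candidate : all) {
            if (candidate.transferId.equals(transfer.transferId)) {
                result.add(candidate);
            }
        }
        return result;
    }
}
```

With this layout, adding an object to a transfer never touches the transfer record itself; the objects of a transfer are found by a lookup on the transfer id.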

Data Transfer Scheduler IS Library

The Data Transfer Scheduler IS Library implements the API through which the Data Transfer Scheduler Service retrieves the information it needs from the Information System and stores it in the Database. It also uses the DB interface to access the DB of the scheduler service.

The existing methods are:

- updateObjsInDB (Updates the information in the DB about the agents, the data sources or the data storages)

- getObjsFromIS (Retrieves the current information about the requested object (agent, source or storage) from the IS)

- checkIfObjExistsInIS_ByHostname (Checks whether the given hostname exists in the IS)

- checkIfObjExistsInIS_ById (Takes a simple id as a parameter and returns a Boolean value indicating whether the object (agent, source or storage) exists in the IS)

- checkIfObjExistsInDB_ById (The only difference from the previous one is that this method checks the DB instead of the IS)

- setObjToDB (Stores an object (agent, source or storage) in the DB)