Broker and Matchmaker

Contents

Broker and Matchmaker

The Broker and Matchmaker represents the subsystem promoting and supporting the optimal selection and usage of resources during the VRE deployment phase. In particular, it is invoked to select the most appropriate pool of gHNs to be used, among those available in the context of the VRE, during the deployment of the services needed to operate the VRE. Because of this, it interacts with:

- the Information System (IS) to be aware of the available gHNs as well as of their distinguishing features (e.g. the number of Running Instances currently hosted by it, the RAM the machine is equipped with) and

- with the Virtual Organisation Management (VO-Management) to act securely and filter out the gHNs that falls out of the operational context of the VRE. From an architectural point of view it mainly consists of a service implementing the matchmaking algorithm.

Reference architecture

The main task of the BM is the selection of the most suitable set of gHNs to deploy a specified set of packages. The matching process, implemented by the BM-Algorithm component, is based on the matchmaking algorithm. The process returns an association between packages to be deployed and gHNs, named deployment schema, trying to minimize the number of gHNs used. The deployment schema can then be used by the client (namely, the VREManager) to drive the deployment process.The matching process takes into account the current status of the infrastructure, i.e. the set of services already deployed on gHNs and the current state of the node. This service is able to query the gCube Information Service (IS) to obtain the current deployment status, as well as requirements of packages to be deployed (contained in ServiceProfiles and stored in the IS).

Resources and Properties

The Matchmaker service adopts a Factory Pattern to compute multiple deployment plans at once. Each BMResource publishes as WS-ResourceProperty the status of the planning, the timestamp of the planning request and the final deployment plan. The BMResources are not persisted. Different Matchmaker RIs can concurrently build deployment plan in the same gCube-based infrastructure. As a consequence of a deployment operation, the status of the infrastructure will be modified, for this reason the deployment scheme resulting from a subsequent matching request could be different.

To avoid race conditions among concurrent matching processes the Matchmaker Service has a controlled locking gHNs behaviour preventing two matching plans from concurrently run. When a deployment process originated from a deployment scheme has been completed, the Matchmaker Service proposes a deployment plan. This client (the VREManger) tries to deploy the desired packages according to the plan and notifies, at the end of this activity, the Matchmaker with a deployment report. At this stage BM-Service can release the locked GHNs .

Functions

The main functions supported by the Matchmaker Service (Factory and Service) are:

- makeDeploymentPlan(String xmlPackages) - which takes as input parameter the list of packages to be deployed. This packages list contains also solved dependencies tree, possibly grouped by node. The method invokes the makePlan method of the BMResource and publishes its result as WS-ResourceProperty;

- checkGHNLock(CheckGHNLockRequest) - which takes as input parameter CheckGHNLockRequest and allows checking if the BMResource, is planning to use a GHN;

- sendFeedbackReport(String xmlFeedback) - which takes as input parameter a VREManager report in xml format about the deployment of the proposal plan.

The public interface of the Broker and Matchmaker port-types can be found here.

Requesting a deployment plan

BM is in charge to build a deployment plan that minimize number of packages to be deployed in order to have a VRE up-and-running, reuse of already deployed packages, where possible and reduce the number of used gHNs.

A request to create a deployment plan is evaluated in the scope call and performed through the makeDeploymentPlan() operation. There is an xml file to to provide to invoke this operation. This file should contain two kind of information:

- the list of packages to be deployed organized per GHN

- the desired GHNs

By default, deployment plan takes into account gHNs of the call scope: VRE Manager can specify, if needed, a fine-grained set of gHNs. In particular a client can specify a global GHNList for all packages to be deployed or a local GHNList, for each GHN-grouped list of packages.

NOTE: Current implementation enforces the global GHNList element only, while the per-group GHNList element will be supported starting from the next release of the service.

Here an example of xml request file:

<GHN> <Package reuse="false"> <ServiceClass>ABE</ServiceClass> <ServiceName>Annotation</ServiceName> <ServiceVersion>1.00.00</ServiceVersion> <PackageName>Main</PackageName> <PackageVersion>1.00.00</PackageVersion> </Package> <Package reuse="false"> <ServiceClass>InformationSystem</ServiceClass> <ServiceName>IS-Notifier</ServiceName> <ServiceVersion>1.00.00</ServiceVersion> <PackageName>Notifier-service</PackageName> <PackageVersion>1.00.00</PackageVersion> </Package> <GHNList> <GHN>f57331e0-8be2-11dd-bd6a-e3813256862a</GHN> </GHNList> </GHN> <GHNList> <GHN>6a5c5d50-8950-11dd-889c-83ac6328d38a</GHN> <GHN>846a0730-88cc-11dd-94de-8b2d8231edf0</GHN> </GHNList>

For example, the previous file is produced by the VREManager API Requesting a deployment

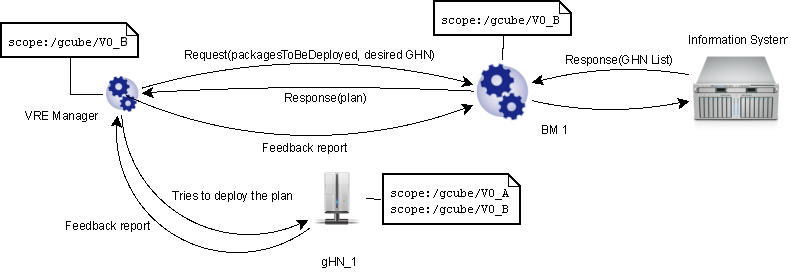

This request triggers a number of events generically simplified in the following image:

The makeDeploymentPlan operation to request a deployment plan can be invoked as follows:

import org.apache.axis.message.addressing.AttributedURI; import org.apache.axis.message.addressing.EndpointReferenceType; import org.apache.log4j.Level; import org.apache.log4j.spi.RootCategory; import org.gcube.brokermatchmaker.bmm.stubs.FactoryPortType; import org.gcube.brokermatchmaker.bmm.stubs.service.FactoryServiceAddressingLocator; import org.gcube.common.core.contexts.GCUBERemotePortTypeContext; import org.gcube.common.core.scope.GCUBEScope; import org.gcube.common.core.utils.logging.GCUBEClientLog; EndpointReferenceType factory_endpoint = new EndpointReferenceType(); factory_endpoint.setAddress(new Address("http://"+ <BMFactory hostname>+":"+ <BMPort port> +"/wsrf/services/gcube/brokermatchmaker/bmm/Factory")); FactoryPortType factoryPT = new FactoryServiceAddressingLocator().getFactoryPortTypePort(factory_endpoint); //we proxy the factory PT with the desired scope factoryPT = GCUBERemotePortTypeContext.getProxy(factoryPT, GCUBEScope.getScope("/gcube/devsec")); String newLine = System.getProperty("line.separator"); FileReader reader = new FileReader("/home/turli/workspace/D4S_TESTS/src/ListPackages.xml"); BufferedReader br = new BufferedReader(reader); StringBuilder sb = new StringBuilder(); for (String line = br.readLine(); line != null; line = br.readLine()) { sb.append(line).append(newLine); } EndpointReferenceType service_endpoint = factoryPT.makeDeploymentPlan(sb.toString());

The checkGHNLock operation is in charge to control if a GHN is involved in a deployment plan still in computation by another GHN. The checkGHNLock operation can be invoked as follows:

StatefulPortType statefulPT = new StatefulServiceAddressingLocator().getStatefulPortTypePort(service_endpoint); // then we have to proxy also the stateful PT... statefulPT = GCUBERemotePortTypeContext.getProxy(statefulPT, GCUBEScope.getScope("/gcube/devsec")); statefulPT.checkGHNLock(new CheckGHNLockRequest(ghns, timestamp)); // the list GHN ID you are interested in

Each WS-Resource in BM are labeled with a timestamp. This is used to implement a locking algorithm. During a checkGHNLock operation the current timestamp should be specified, to decide which concurrent BM can use that GHN.

Each BMResource accepts a feedback report (basically produced by VREManager) regarding the result of the proposed deployment plan. In particular, let's describe a typical scenario where a VREmanager asks for a deployment plan to a BM service. BM service produces this plan and propose it to VREManager that tries to deploy the packages requested according to the BM plan. During this step, the BM service keeps locked all the GHNs involved in the proposed plan thus no other BM can consider them in their plan. To unlock GHNs, BM service waits for a feedback report from VREManager.

Here an example of feedback report:

<GHN id="1"> <Package status="succeeded"> <ServiceClass>ABE</ServiceClass> <ServiceName>Annotation</ServiceName> <ServiceVersion>1.00.00</ServiceVersion> <PackageName>Main</PackageName> <PackageVersion>1.00.00</PackageVersion> </Package> </GHN> <GHN id="2"> <Package status="failed"> <ServiceClass>BMM</ServiceClass> <ServiceName>broker</ServiceName> <ServiceVersion>1.0.0</ServiceVersion> <PackageName>Main</PackageName> <PackageVersion>1.0.1</PackageVersion> </Package> </GHN>

where the plan for GHN with ID=1 has been successfully completed while VREManager had a problem to deploy a BMM service in GHN with ID=2.

Matching Algorithm

The matchmaking is a variant of a Bin Packing problem, a combinatorial NP-hard problem. In the Bin Packing a set of objects of different size must be packed into a finite number of bins of different capacities minimizing the number of bins used. The relationship between the Bin Packing and the matchmaking problem can be summarized as follow:

- Input description:

a. A set of n items with size: d1, d2, ..., dn; corresponding to packages to be deployed b. A set of m bins with capacity: c1, c2, ...,cm; corresponding to available GHNs with their features and current status

- Problem description:

c. Store all the items using the smallest number of bins (i.e. GHNs)

Since the Bin Packing problem is NP-hard, the optimal solution will requires exponential time to be found. A most efficient approach uses heuristics to find a sub-optimal solution in polynomial time. Analytical and empirical evaluations suggest that the best heuristic for this problem is the First-Fit Decreasing algorithm. The algorithm is organized in the following steps:

- Sort objects in decreasing order of size, from the biggest to the smallest

- Insert each object one by one into the first bin (e.g. GHN) that has room for it. If no bin has room for it, we must start another bin

First-fit decreasing can be implemented in O(n*logn + b*n) time, where b<=min(m,n) is the number of bins actually used, by doing a linear sweep through the bins on each insertion. If compared to the standard Bin Packing problem, the matchmaking algorithm has a number of additional constraints. First of all each package (item) has a set of requirements on the GHN hosting it. These requirements are of different types:

- Property requirements (e.g. OS type == Linux)

- Capacity requirements (e.g. CPU speed > 500MHz)

- Consumption requirements (e.g. disk space > 30MB)

- Software requirements (e.g. libX v2.4 has to be already installed)

Moreover, two types of dependency among couples of packages to be deployed can be specified. Supported types for relations among packages are:

- uses – this type of dependency implies that, after deployment, involved packages will establish some kind of interaction.

Although it is desirable to minimize network communication, the fulfilment of this constraint is not to be considered mandatory for a proposed deployment solution.

- same-host – this type of dependency implies that the two involved packages have to be deployed on the same GHN. Dependencies of this type are mandatory; a violation would lead to a faulty deployment scheme.

These dependencies and requirements can be defined in profiles of services and further refined in a matching request. Finally, in each request is also possible to define a list of favourite gHNs where to deploy the item defined previously. The deployment schema resulting from the matchmaking process must take into account these requirements and dependencies and ensure that:

- For each package, its requirements are satisfied by the identified GHN;

- Each GHN satisfies the aggregation of consumption requirements of software being hosted (e.g. the sum of disk space used by packages being installed on a GHN must not exceed the current available disk space for that GHN);

- As required by the ‘same-host’ constraints, packages are deployed on the same hosts;

- Minimize the number of GHN hosting packages bound by a ‘uses’ constraint.