Tabular Data Flow Manager

Latest revision as of 14:45, 21 November 2013

The goal of this facility is to realize an integrated environment supporting the definition and management of tabular data workflows. Each workflow consists of a number of tabular data processing steps, each realized by an existing service component conceptually offered by a gCube-based infrastructure.

The following sections describe the design rationale, key features, high-level architecture and deployment scenarios.

Overview

The goal of this service is to offer facilities for tabular data workflow management, execution and monitoring. A workflow can involve a number of data manipulation steps, each potentially performed by a different service component, to produce the desired output.

Key features

The subsystem provides:

- declarative approach: instead of describing the workflow as a set of transformation steps, the user provides a table template, i.e. a set of properties the target table should comply with;

- flexible and open workflow definition mechanism: the set of available workflow steps can be extended, widening the range of properties a template can express;

- user-friendly interface: the subsystem offers a graphical user interface where users can define table templates and actually run a workflow by applying a template to an imported table.
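To make the declarative approach concrete, the following Python sketch models a table template as a collection of column properties and checks a table against it, reporting which properties are violated instead of prescribing transformation steps. Everything here (the `ColumnRule` and `TableTemplate` classes, the `violations` function, the row-as-dict table model) is a hypothetical illustration, not the Tabular Data Service API.

```python
from dataclasses import dataclass, field

# Hypothetical data model (not the gCube Tabular Data API):
# a table is a list of rows, each row a dict mapping column name -> value.
Row = dict[str, object]


@dataclass(frozen=True)
class ColumnRule:
    """One declarative property that the target table should comply with."""
    name: str
    type_: type            # expected Python type of non-null values
    nullable: bool = True  # whether None is an acceptable value


@dataclass
class TableTemplate:
    """A template is just a collection of properties, not a list of steps."""
    rules: list[ColumnRule] = field(default_factory=list)


def violations(template: TableTemplate, table: list[Row]) -> list[str]:
    """Describe every template property the table does not satisfy."""
    problems = []
    for rule in template.rules:
        for i, row in enumerate(table):
            if rule.name not in row:
                problems.append(f"row {i}: missing column {rule.name!r}")
                continue
            value = row[rule.name]
            if value is None:
                if not rule.nullable:
                    problems.append(f"row {i}: {rule.name!r} is null")
            elif not isinstance(value, rule.type_):
                problems.append(f"row {i}: {rule.name!r} is not {rule.type_.__name__}")
    return problems
```

For example, with a template requiring a non-null string `species` column and a float `catch_kg` column (made-up names), the row `{"species": None, "catch_kg": "n/a"}` yields two violations, one per broken property. New kinds of property could be added alongside `ColumnRule` without changing how templates are written.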

Design

Philosophy

Tabular Data Flow Manager offers a service for tabular data workflow creation, management and monitoring. The underlying idea is to let a service client command multiple operations by providing a single table template. A table template is defined as a set of properties that the table resulting from the workflow should comply with. End users can create table templates through the UI and save them for later reuse. Applying a template to a target tabular data table materializes a set of workflow steps on the service, whose execution can be monitored remotely.

Each step is managed by a single software component, which can also be invoked on its own. This approach aims at maximizing the exploitation and reuse of components offering data manipulation facilities.
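The template life cycle described above (define once, save, reuse, materialize into remotely monitored steps) can be sketched as follows. The sketch assumes, purely for illustration, that a template maps each column to a list of operation names, that it is stored as JSON, and that each (column, operation) pair becomes one step whose status a client can poll; none of these names or formats come from the actual service.

```python
import json
from dataclasses import dataclass


@dataclass
class Step:
    """One materialized workflow step (hypothetical structure)."""
    operation: str
    column: str
    status: str = "pending"  # assumed life cycle: pending -> done / failed


def save_template(template: dict[str, list[str]]) -> str:
    """Serialize a template so it can be stored and reused later."""
    return json.dumps(template, sort_keys=True)


def load_template(data: str) -> dict[str, list[str]]:
    """Restore a previously saved template."""
    return json.loads(data)


def materialize(template: dict[str, list[str]]) -> list[Step]:
    """Turn each (column, operation) pair of the template into one step."""
    return [Step(op, col) for col, ops in template.items() for op in ops]


def progress(steps: list[Step]) -> str:
    """A status summary a remote client could poll while the workflow runs."""
    done = sum(1 for s in steps if s.status == "done")
    return f"{done}/{len(steps)} steps done"
```

A saved template such as `{"year": ["to_integer", "not_null"], "country": ["to_iso_code"]}` (hypothetical operation names) materializes into three steps, so after the first one completes `progress` reports `1/3 steps done`.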

Architecture

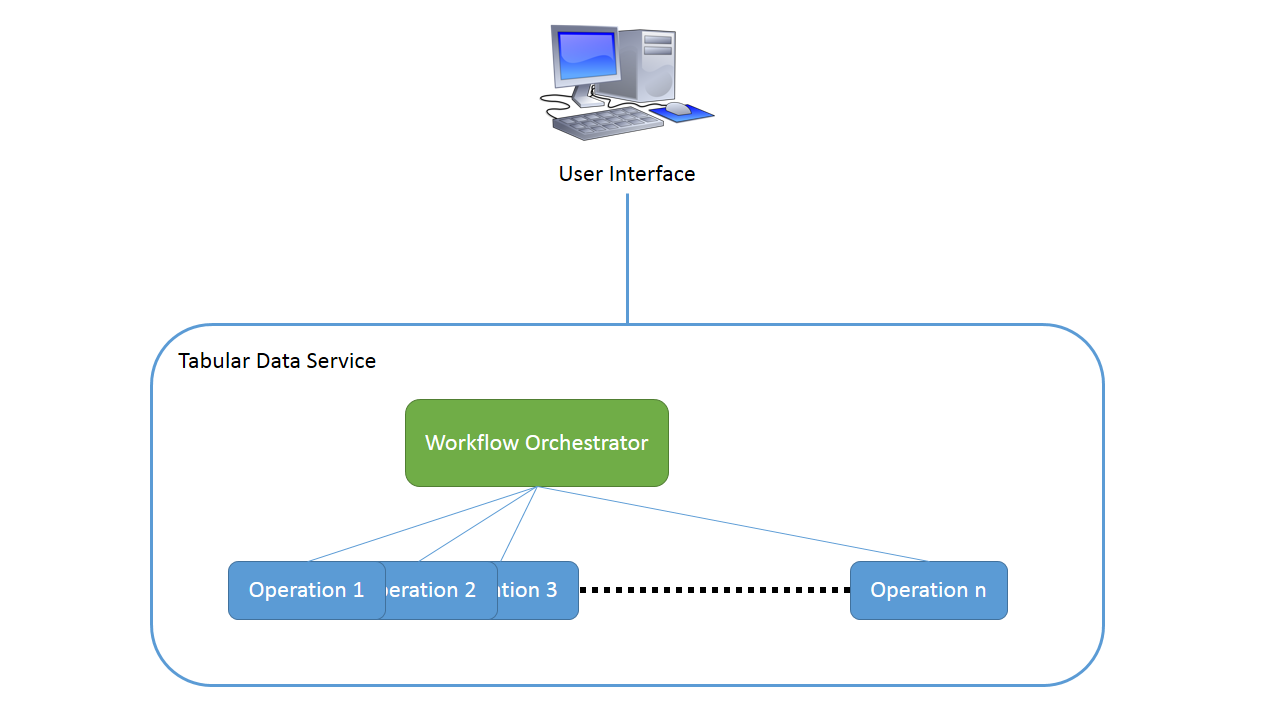

The subsystem comprises the following components:

- Flow Service: the subset of Tabular Data Service functionalities that supports workflow creation, management, execution and monitoring;

- Flow UI: the web-based user interface of this functional area, used to create, execute and monitor workflows;

- Workflow Orchestrator: a service component that unpacks a table template into a sequence of operations to be performed on a target table;

- Operation modules: a set of software modules, each managing a specific kind of operation (transformation, validation, import, export).
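The division of labour between the Workflow Orchestrator and the operation modules can be illustrated with a small registry-based sketch. The module names (`trim`, `not_null`), the `Table` type and the `orchestrate` function are hypothetical; the point is that each module is an ordinary function that can also be called on its own, while the orchestrator only unpacks the template and dispatches each step to the registered module.

```python
from typing import Callable

# Hypothetical table model: a list of rows, each a dict column -> value.
Row = dict[str, object]
Table = list[Row]
Operation = Callable[[Table, str], Table]


def trim_strings(table: Table, column: str) -> Table:
    """Transformation module: strip surrounding whitespace in one column."""
    result = []
    for row in table:
        value = row.get(column)
        result.append({**row, column: value.strip() if isinstance(value, str) else value})
    return result


def require_values(table: Table, column: str) -> Table:
    """Validation module (simplified): keep only rows where the column is set."""
    return [row for row in table if row.get(column) is not None]


# Registry of operation modules, keyed by a hypothetical operation name.
MODULES: dict[str, Operation] = {"trim": trim_strings, "not_null": require_values}


def orchestrate(template: dict[str, list[str]], table: Table) -> tuple[Table, list[str]]:
    """Unpack a template {column: [operations]} and run each step in order.

    Returns the resulting table plus a per-step log a monitoring UI could show.
    """
    log = []
    for column, operations in template.items():
        for name in operations:
            if name not in MODULES:
                raise ValueError(f"no operation module registered for {name!r}")
            table = MODULES[name](table, column)
            log.append(f"{name}({column}): rows={len(table)}")
    return table, log
```

Keeping modules behind a name-keyed registry is one way to make the set of workflow steps extensible: registering a new function adds a new operation without changing the orchestrator.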

A diagram of the relationships between these components is shown in the figure above.

Deployment

The Service should be deployed on a single node together with the operation modules. The User Interface can be deployed in the infrastructure portal together with the required client library.

Use Cases

Well-suited Use Cases

This component is well suited to all cases where a defined flow of data manipulation steps has to be managed. An example is a data flow that allows a user to curate uncurated data provided periodically by a data provider, apply a set of default transformation and validation procedures, and merge all the curated data chunks into a single table at the end of the process.
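A minimal sketch of this use case, assuming periodic deliveries arrive as lists of row dictionaries and that the default procedures are a country-code normalization (transformation) followed by a numeric check on a value column (validation). The column names and the `curate`, `merge` and `run_periodic_flow` functions are hypothetical, chosen only to show the curate-each-chunk-then-merge shape of the flow.

```python
# Hypothetical table model: a list of rows, each a dict column -> value.
Row = dict[str, object]
Table = list[Row]


def curate(chunk: Table) -> Table:
    """Apply assumed default procedures to one delivered chunk:
    transformation (upper-case, trimmed 'country') and validation
    (discard rows without a usable country or a numeric 'value')."""
    curated = []
    for row in chunk:
        country, value = row.get("country"), row.get("value")
        if isinstance(country, str) and country.strip() and isinstance(value, (int, float)):
            curated.append({"country": country.strip().upper(), "value": value})
    return curated


def merge(chunks: list[Table]) -> Table:
    """Concatenate the curated chunks into a single table, preserving order."""
    return [row for chunk in chunks for row in chunk]


def run_periodic_flow(deliveries: list[Table]) -> Table:
    """Curate each periodic delivery, then merge the results at the end."""
    return merge([curate(chunk) for chunk in deliveries])
```

Each delivery is curated independently, so a new chunk can be processed as soon as it arrives; only the final merge needs all curated chunks.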