Difference between revisions of "Index Management Framework"


| + | =Contextual Query Language Compliance= | ||

| + | The gCube Index Framework consists of the Index Service, which provides both FullText Index and Forward Index capabilities. All of them are able to answer [http://www.loc.gov/standards/sru/specs/cql.html CQL] queries. The CQL relations that each of them supports depend on the underlying technologies. The mechanisms for answering CQL queries, using the internal design and technologies, are described later for each case. The supported relations are: | ||

| + | |||

| + | * [[Index_Management_Framework#CQL_capabilities_implementation | Index Service]] : =, ==, within, >, >=, <=, adj, fuzzy, proximity | ||

| + | <!--* [[Index_Management_Framework#CQL_capabilities_implementation_2 | Geo-Spatial Index]] : geosearch | ||

| + | * [[Index_Management_Framework#CQL_capabilities_implementation_3 | Forward Index]] : --> | ||

| + | |||

| + | =Index Service= | ||

| + | The Index Service is responsible for providing quick full text data retrieval and forward index capabilities in the gCube environment. | ||

| + | |||

| + | Index Service exposes a REST API, thus it can be used by different general purpose libraries that support REST. | ||

| + | For example, the following HTTP GET call is used in order to query the index: | ||

| + | |||

| + | http://'''{host}'''/index-service-1.0.0-SNAPSHOT/'''{resourceID}'''/query?queryString=((e24f6285-46a2-4395-a402-99330b326fad = tuna) and (((gDocCollectionID == 8dc17a91-378a-4396-98db-469280911b2f)))) project ae58ca58-55b7-47d1-a877-da783a758302 | ||
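The query string in the call above contains spaces, parentheses and equals signs, so a client normally has to URL-encode it before sending the request. The following is a minimal sketch of how such a URL could be assembled in Java. The class and method names are hypothetical, the web application context name is copied from the example above and may differ per deployment, and whether the service expects exactly this form of encoding is an assumption.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;

class IndexQueryUrl {

    // Builds the GET URL for the query endpoint shown in the example above.
    // host, resourceId and cql are supplied by the caller.
    static String buildQueryUrl(String host, String resourceId, String cql) {
        // URLEncoder applies form encoding: spaces become '+',
        // reserved characters become %XX escapes.
        String encoded = URLEncoder.encode(cql, StandardCharsets.UTF_8);
        return "http://" + host + "/index-service-1.0.0-SNAPSHOT/"
                + resourceId + "/query?queryString=" + encoded;
    }

    public static void main(String[] args) {
        // Placeholder host, resource ID and field names, for illustration only.
        String cql = "(title = tuna) and (gDocCollectionID == colA)";
        System.out.println(buildQueryUrl("localhost:8080", "myResourceID", cql));
    }
}
```

The resulting string can be passed to any general purpose HTTP client.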

| + | |||

| + | The Index Service consists of a few components that are available in our Maven repositories with the following coordinates: | ||

| + | |||

| + | <source lang="xml"> | ||

| + | |||

| + | <!-- index service web app --> | ||

| + | <groupId>org.gcube.index</groupId> | ||

| + | <artifactId>index-service</artifactId> | ||

| + | <version>...</version> | ||

| + | |||

| + | |||

| + | <!-- index service commons library --> | ||

| + | <groupId>org.gcube.index</groupId> | ||

| + | <artifactId>index-service-commons</artifactId> | ||

| + | <version>...</version> | ||

| + | |||

| + | <!-- index service client library --> | ||

| + | <groupId>org.gcube.index</groupId> | ||

| + | <artifactId>index-service-client-library</artifactId> | ||

| + | <version>...</version> | ||

| + | |||

| + | <!-- helper common library --> | ||

| + | <groupId>org.gcube.index</groupId> | ||

| + | <artifactId>indexcommon</artifactId> | ||

| + | <version>...</version> | ||

| + | |||

| + | </source> | ||

| + | |||

| + | ==Implementation Overview== | ||

| + | ===Services=== | ||

| + | The new index is implemented through one service. It is implemented according to the Factory pattern: | ||

| + | *The '''Index Service''' represents an index node. It is used for managing, looking up and updating the node. It consolidates the 3 services that were used in the old Full Text Index. | ||

| + | |||

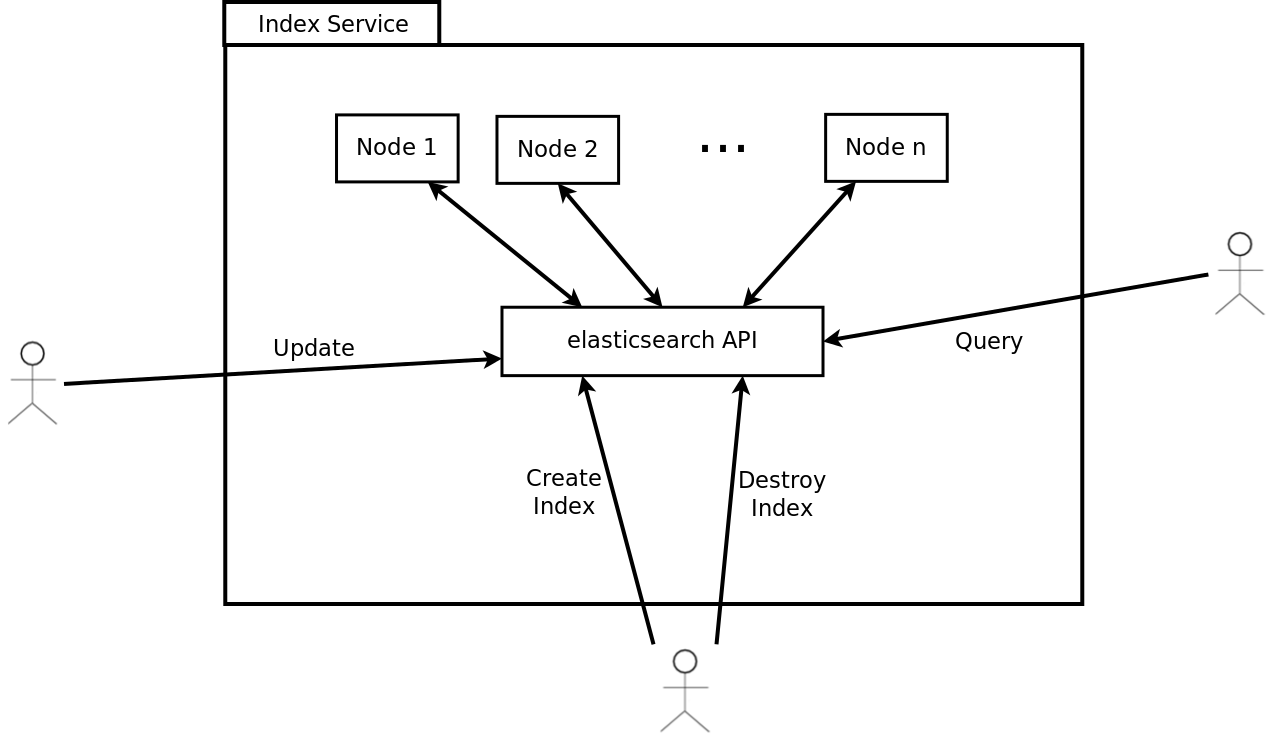

| + | The following illustration shows the information flow and responsibilities for the different services used to implement the Index Service: | ||

| + | |||

| + | [[File:FullTextIndexNodeService.png|frame|none|Generic Editor]] | ||

| + | |||

| + | It is actually a wrapper over ElasticSearch, and each IndexNode has a 1-1 relationship with an ElasticSearch Node. For this reason, creating multiple resources of the IndexNode service is discouraged; the best case is to have one resource (one node) in each container that makes up the cluster. | ||

| + | |||

| + | Clusters can be created in almost the same way that a group of lookups and updaters and a manager were created in the old Full Text Index (using the same indexID). Having multiple clusters within a scope is feasible but discouraged, because it is usually better to have one large cluster than multiple small clusters. | ||

| + | |||

| + | Clusters are distinguished by a clusterID, which is either the same as the indexID or the scope. The deployer of the service can choose between these two by setting the value of the ''defaultSameCluster'' variable in the ''deploy.properties'' file to true or false respectively. | ||

| + | |||

| + | Example | ||

| + | <pre> | ||

| + | defaultSameCluster=true | ||

| + | </pre> | ||

| + | or | ||

| + | |||

| + | <pre> | ||

| + | defaultSameCluster=false | ||

| + | </pre> | ||

| + | |||

| + | ''ElasticSearch'', which is the underlying technology of the new Index Service, allows the number of replicas and shards to be configured for each index. This is done by setting the variables ''noReplicas'' and ''noShards'' in the ''deploy.properties'' file. | ||

| + | |||

| + | Example: | ||

| + | <pre> | ||

| + | noReplicas=1 | ||

| + | noShards=2 | ||

| + | </pre> | ||

| + | |||

| + | |||

| + | '''Highlighting''' is supported by the new Full Text Index, as it was in the old Full Text Index. If highlighting is enabled, the index returns a snippet of the text that matches the query, taken from the presentable fields. This snippet is usually a concatenation of several matching fragments from those fields. The maximum size of each fragment, as well as the maximum number of fragments used to construct a snippet, can be configured by setting the variables ''maxFragmentSize'' and ''maxFragmentCnt'' in the ''deploy.properties'' file respectively: | ||

| + | |||

| + | Example: | ||

| + | <pre> | ||

| + | maxFragmentCnt=5 | ||

| + | maxFragmentSize=80 | ||

| + | </pre> | ||

| + | |||

| + | |||

| + | |||

| + | The folder where the index data are stored can be configured by setting the variable ''dataDir'' in the ''deploy.properties'' file (if the variable is not set, the default location is the folder from which the container runs). | ||

| + | |||

| + | Example : | ||

| + | <pre> | ||

| + | dataDir=./data | ||

| + | </pre> | ||

| + | |||

| + | To configure whether the Resource Registry is used (for translating field ids to field names), change the value of the variable ''useRRAdaptor'' in the ''deploy.properties'' file. | ||

| + | |||

| + | Example : | ||

| + | <pre> | ||

| + | useRRAdaptor=true | ||

| + | </pre> | ||

| + | |||

| + | |||

| + | Since the Index Service creates resources for each Index instance that is running (instead of running multiple Running Instances of the service), the folder where the instances will be persisted locally has to be set in the variable ''resourcesFoldername'' in the ''deploy.properties'' file. | ||

| + | |||

| + | Example : | ||

| + | <pre> | ||

| + | resourcesFoldername=./resources/index | ||

| + | </pre> | ||

| + | |||

| + | Finally, the hostname of the node, as well as the port and the scope that the node is running on, have to be set in the variables ''hostname'', ''port'' and ''scope'' in the ''deploy.properties'' file. | ||

| + | |||

| + | Example : | ||

| + | <pre> | ||

| + | hostname=dl015.madgik.di.uoa.gr | ||

| + | port=8080 | ||

| + | scope=/gcube/devNext | ||

| + | </pre> | ||
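The settings described in this section are plain key/value pairs, so they can be read with the standard `java.util.Properties` class. The sketch below is only an illustration of that, not the service's own loading code: the class and method names are hypothetical, and the cluster ID rule follows the wording of the text above (indexID when ''defaultSameCluster'' is true, scope otherwise).

```java
import java.io.IOException;
import java.io.StringReader;
import java.io.UncheckedIOException;
import java.util.Properties;

class DeployPropertiesSketch {

    // Parses properties text. A deployed service would instead load
    // deploy.properties from the classpath.
    static Properties parse(String text) {
        Properties props = new Properties();
        try {
            props.load(new StringReader(text));
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
        return props;
    }

    // Reads a required integer setting such as noShards or maxFragmentSize.
    static int requiredInt(Properties props, String key) {
        String value = props.getProperty(key);
        if (value == null) {
            throw new IllegalArgumentException("missing property: " + key);
        }
        return Integer.parseInt(value.trim());
    }

    // Cluster ID rule as the text reads: the indexID when
    // defaultSameCluster is true, the scope otherwise (assumption).
    static String clusterId(Properties props, String indexId) {
        boolean same = Boolean.parseBoolean(props.getProperty("defaultSameCluster"));
        return same ? indexId : props.getProperty("scope");
    }

    public static void main(String[] args) {
        Properties props = parse(String.join("\n",
                "defaultSameCluster=false",
                "noReplicas=1",
                "noShards=2",
                "hostname=dl015.madgik.di.uoa.gr",
                "port=8080",
                "scope=/gcube/devNext"));
        System.out.println("shards=" + requiredInt(props, "noShards"));
        System.out.println("clusterID=" + clusterId(props, "myIndexID"));
    }
}
```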

| + | |||

| + | ===CQL capabilities implementation=== | ||

| + | Full Text Index uses [http://lucene.apache.org/ Lucene] as its underlying technology. A CQL Index-Relation-Term triple has a straightforward transformation in lucene. This transformation is explained through the following examples: | ||

| + | |||

| + | {| border="1" | ||

| + | ! CQL triple !! explanation !! lucene equivalent | ||

| + | |- | ||

| + | ! title adj "sun is up" | ||

| + | | documents with this phrase in their title | ||

| + | | title:"sun is up" | ||

| + | |- | ||

| + | ! title fuzzy "invorvement" | ||

| + | | documents with words "similar" to invorvement in their title | ||

| + | | title:invorvement~ | ||

| + | |- | ||

| + | ! allIndexes = "italy" (documents have 2 fields; title and abstract) | ||

| + | | documents with the word italy in some of their fields | ||

| + | | title:italy OR abstract:italy | ||

| + | |- | ||

| + | ! title proximity "5 sun up" | ||

| + | | documents with the words sun, up inside an interval of 5 words in their title | ||

| + | | title:"sun up"~5 | ||

| + | |- | ||

| + | ! date within "2005 2008" | ||

| + | | documents with a date between 2005 and 2008 | ||

| + | | date:[2005 TO 2008] | ||

| + | |} | ||

| + | |||

| + | In a complete CQL query, the triples are connected with boolean operators. Lucene supports AND, OR, NOT(AND-NOT) connections between single criteria. Thus, in order to transform a complete CQL query to a lucene query, we first transform CQL triples and then we connect them with AND, OR, NOT equivalently. | ||
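The transformations in the table above can be sketched as simple string rewriting. The following Java example is a hypothetical illustration, not the service's actual translator: it covers only the relations shown in the table, assumes terms have already been stripped of their surrounding quotes, and does not escape Lucene special characters.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Locale;

class CqlToLuceneSketch {

    // Translates one CQL index-relation-term triple into a Lucene query string.
    static String toLucene(String index, String relation, String term) {
        switch (relation) {
            case "=":
                return index + ":" + term;
            case "adj":
                // phrase query
                return index + ":\"" + term + "\"";
            case "fuzzy":
                return index + ":" + term + "~";
            case "proximity": {
                // term has the form "<distance> <word> <word> ..."
                int space = term.indexOf(' ');
                String distance = term.substring(0, space);
                String words = term.substring(space + 1);
                return index + ":\"" + words + "\"~" + distance;
            }
            case "within": {
                // term has the form "<lower> <upper>"
                String[] bounds = term.split(" ");
                return index + ":[" + bounds[0] + " TO " + bounds[1] + "]";
            }
            default:
                throw new IllegalArgumentException("unsupported relation: " + relation);
        }
    }

    // Expands "allIndexes = term" into one clause per known field.
    static String expandAllIndexes(List<String> fields, String term) {
        List<String> clauses = new ArrayList<>();
        for (String field : fields) {
            clauses.add(toLucene(field, "=", term));
        }
        return String.join(" OR ", clauses);
    }

    // Connects two translated triples with a boolean operator (and, or, not).
    static String combine(String left, String operator, String right) {
        return "(" + left + ") " + operator.toUpperCase(Locale.ROOT) + " (" + right + ")";
    }

    public static void main(String[] args) {
        String a = toLucene("title", "adj", "sun is up");
        String b = toLucene("date", "within", "2005 2008");
        System.out.println(combine(a, "and", b));
    }
}
```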

| + | |||

| + | ===RowSet=== | ||

| + | The content to be fed into an Index must be served as a [[ResultSet Framework|ResultSet]] containing XML documents conforming to the ROWSET schema. This is a very simple schema, declaring that a document (ROW element) should contain any number of FIELD elements, each with a name attribute and the text to be indexed for that field. The following is a simple but valid ROWSET containing two documents: | ||

| + | <pre> | ||

| + | <ROWSET idxType="IndexTypeName" colID="colA" lang="en"> | ||

| + | <ROW> | ||

| + | <FIELD name="ObjectID">doc1</FIELD> | ||

| + | <FIELD name="title">How to create an Index</FIELD> | ||

| + | <FIELD name="contents">Just read the WIKI</FIELD> | ||

| + | </ROW> | ||

| + | <ROW> | ||

| + | <FIELD name="ObjectID">doc2</FIELD> | ||

| + | <FIELD name="title">How to create a Nation</FIELD> | ||

| + | <FIELD name="contents">Talk to the UN</FIELD> | ||

| + | <FIELD name="references">un.org</FIELD> | ||

| + | </ROW> | ||

| + | </ROWSET> | ||

| + | </pre> | ||

| + | |||

| + | The attributes idxType and colID are required and specify the Index Type that the Index must have been created with, and the collection ID of the documents under the <ROWSET> element. The lang attribute is optional, and specifies the language of the documents under the <ROWSET> element. Note that for each document a required field is the "ObjectID" field that specifies its unique identifier. | ||
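A ROWSET of this shape is easy to generate programmatically. The sketch below builds one as a string in Java. The class and method names are hypothetical, the XML escaping is an addition that the text above does not discuss, and wrapping the result in a ResultSet is out of scope here.

```java
import java.util.LinkedHashMap;
import java.util.Map;

class RowSetSketch {

    // Escapes the characters that are not allowed verbatim in XML text or attributes.
    static String escape(String text) {
        return text.replace("&", "&amp;")
                   .replace("<", "&lt;")
                   .replace(">", "&gt;")
                   .replace("\"", "&quot;");
    }

    // Renders one document as a ROW element; ObjectID is required for every document.
    static String row(Map<String, String> fields) {
        if (!fields.containsKey("ObjectID")) {
            throw new IllegalArgumentException("ObjectID field is required");
        }
        StringBuilder sb = new StringBuilder("  <ROW>\n");
        for (Map.Entry<String, String> e : fields.entrySet()) {
            sb.append("    <FIELD name=\"").append(escape(e.getKey())).append("\">")
              .append(escape(e.getValue())).append("</FIELD>\n");
        }
        return sb.append("  </ROW>\n").toString();
    }

    // Renders the ROWSET element; idxType and colID are required, lang may be null.
    static String rowSet(String idxType, String colId, String lang, String... rows) {
        StringBuilder sb = new StringBuilder("<ROWSET idxType=\"").append(escape(idxType))
                .append("\" colID=\"").append(escape(colId)).append("\"");
        if (lang != null) {
            sb.append(" lang=\"").append(escape(lang)).append("\"");
        }
        sb.append(">\n");
        for (String r : rows) {
            sb.append(r);
        }
        return sb.append("</ROWSET>").toString();
    }

    public static void main(String[] args) {
        Map<String, String> doc = new LinkedHashMap<>();
        doc.put("ObjectID", "doc1");
        doc.put("title", "How to create an Index");
        doc.put("contents", "Just read the WIKI");
        System.out.println(rowSet("IndexTypeName", "colA", "en", row(doc)));
    }
}
```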

| + | |||

| + | ===IndexType=== | ||

| + | How the different fields in the [[Full_Text_Index#RowSet|ROWSET]] should be handled by the Index, and how the different fields in an Index should be handled during a query, is specified through an IndexType; an XML document conforming to the IndexType schema. An IndexType contains a field list which contains all the fields which should be indexed and/or stored in order to be presented in the query results, along with a specification of how each of the fields should be handled. The following is a possible IndexType for the type of ROWSET shown above: | ||

| + | |||

| + | <source lang="xml"> | ||

| + | <index-type> | ||

| + | <field-list> | ||

| + | <field name="title"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <highlightable>yes</highlightable> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="contents"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="references"> | ||

| + | <index>yes</index> | ||

| + | <store>no</store> | ||

| + | <return>no</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <highlightable>no</highlightable> <!-- will not be included in the highlight snippet --> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="gDocCollectionID"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="gDocCollectionLang"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | </field-list> | ||

| + | </index-type> | ||

| + | </source> | ||

| + | |||

| + | Note that the fields "gDocCollectionID" and "gDocCollectionLang" are always required because, by default, all documents will have a collection ID and a language ("unknown" if no collection is specified). Fields present in the ROWSET but not in the IndexType will be skipped. The elements under each "field" element define how that field should be handled, and they should contain either "yes" or "no". The meaning of each of them is explained below: | ||

| + | |||

| + | *'''index''' | ||

| + | :specifies whether the specific field should be indexed or not (i.e. whether the index should look for hits within this field) | ||

| + | *'''store''' | ||

| + | :specifies whether the field should be stored in its original format to be returned in the results from a query. | ||

| + | *'''return''' | ||

| + | :specifies whether a stored field should be returned in the results from a query. A field must have been stored to be returned. (This element is not available in the currently deployed indices) | ||

| + | *'''highlightable''' | ||

| + | :specifies whether a returned field should be included or not in the highlight snippet. If not specified then every returned field will be included in the snippet. | ||

| + | *'''tokenize''' | ||

| + | :Not used | ||

| + | *'''sort''' | ||

| + | :Not used | ||

| + | *'''boost''' | ||

| + | :Not used | ||
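To make the flag semantics above concrete, the following Java sketch parses an IndexType document with the JDK's DOM parser and lists the fields that would take part in the highlight snippet: fields that are returned and whose ''highlightable'' element is either absent or "yes". The class and method names are hypothetical and the sketch assumes a flat field list without nested sub-fields.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

class IndexTypeSketch {

    // Parses the IndexType XML and returns all <field> elements in document order.
    static List<Element> fields(String indexTypeXml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(indexTypeXml.getBytes(StandardCharsets.UTF_8)));
            NodeList nodes = doc.getElementsByTagName("field");
            List<Element> result = new ArrayList<>();
            for (int i = 0; i < nodes.getLength(); i++) {
                result.add((Element) nodes.item(i));
            }
            return result;
        } catch (Exception e) {
            throw new IllegalArgumentException("invalid IndexType document", e);
        }
    }

    // Returns the text of the named child element, or null when it is absent.
    static String flag(Element field, String name) {
        NodeList nodes = field.getElementsByTagName(name);
        return nodes.getLength() == 0 ? null : nodes.item(0).getTextContent().trim();
    }

    // Names of the fields whose given flag is explicitly "yes".
    static List<String> fieldsWith(String indexTypeXml, String flagName) {
        List<String> names = new ArrayList<>();
        for (Element field : fields(indexTypeXml)) {
            if ("yes".equals(flag(field, flagName))) {
                names.add(field.getAttribute("name"));
            }
        }
        return names;
    }

    // Returned fields take part in the snippet unless highlightable is "no".
    static List<String> highlightableFields(String indexTypeXml) {
        List<String> names = new ArrayList<>();
        for (Element field : fields(indexTypeXml)) {
            String highlightable = flag(field, "highlightable");
            boolean returned = "yes".equals(flag(field, "return"));
            if (returned && (highlightable == null || "yes".equals(highlightable))) {
                names.add(field.getAttribute("name"));
            }
        }
        return names;
    }

    public static void main(String[] args) {
        String xml = "<index-type><field-list>"
                + "<field name=\"title\"><index>yes</index><store>yes</store><return>yes</return></field>"
                + "<field name=\"references\"><index>yes</index><store>no</store><return>no</return></field>"
                + "</field-list></index-type>";
        System.out.println("stored: " + fieldsWith(xml, "store"));
        System.out.println("highlightable: " + highlightableFields(xml));
    }
}
```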

| + | |||

| + | We currently have five standard index types, loosely based on the available metadata schemas. However any data can be indexed using each, as long as the RowSet follows the IndexType: | ||

| + | *index-type-default-1.0 (DublinCore) | ||

| + | *index-type-TEI-2.0 | ||

| + | *index-type-eiDB-1.0 | ||

| + | *index-type-iso-1.0 | ||

| + | *index-type-FT-1.0 | ||

| + | |||

| + | ===Query language=== | ||

| + | The Full Text Index receives CQL queries and transforms them into Lucene queries. Queries using wildcards will not return usable query statistics. | ||

| + | |||

| + | |||

| + | ==Deployment Instructions== | ||

| + | |||

| + | In order to deploy and run Index Service on a node we will need the following: | ||

| + | * index-service-''{version}''.war | ||

| + | * smartgears-distribution-''{version}''.tar.gz (to publish the running instance of the service on the IS and be discoverable) | ||

| + | ** see [http://gcube.wiki.gcube-system.org/gcube/index.php/SmartGears_gHN_Installation here] for installation | ||

| + | * an application server (such as Tomcat, JBoss, Jetty) | ||

| + | |||

| + | There are a few things that need to be configured in order for the service to be functional. All the service configuration is done in the file ''deploy.properties'' that comes within the service war. Typically, this file should be loaded in the classpath so that it can be read. The default location of this file (in the exploded war) is ''webapps/service/WEB-INF/classes''. | ||

| + | |||

| + | The hostname of the node, as well as the port and the scope that the node is running on, have to be set in the variables ''hostname'', ''port'' and ''scope'' in the ''deploy.properties'' file. | ||

| + | |||

| + | Example : | ||

| + | <pre> | ||

| + | hostname=dl015.madgik.di.uoa.gr | ||

| + | port=8080 | ||

| + | scope=/gcube/devNext | ||

| + | </pre> | ||

| + | |||

| + | Finally, [http://gcube.wiki.gcube-system.org/gcube/index.php/Resource_Registry Resource Registry] should be configured to not run in client mode. This is done in the ''deploy.properties'' by setting: | ||

| + | |||

| + | <pre> | ||

| + | clientMode=false | ||

| + | </pre> | ||

| + | |||

| + | '''NOTE''': the ''resourcesFoldername'' and ''dataDir'' properties have relative paths as their default values. In some cases these values may be resolved against the folder from which the container was started, so to avoid problems related to this behavior it is better to give these properties absolute paths as values. | ||

| + | |||

| + | ==Usage Example== | ||

| + | |||

| + | ===Create an Index Service Node, feed and query using the corresponding client library=== | ||

| + | |||

| + | The following example demonstrates the usage of the IndexFactoryClient and IndexClient. | ||

| + | Both are created according to the Builder pattern. | ||

| + | |||

| + | <source lang="java"> | ||

| + | |||

| + | final String scope = "/gcube/devNext"; | ||

| + | |||

| + | // create a factory client for the given scope (an endpoint can be provided as an extra filter) | ||

| + | IndexFactoryClient indexFactoryClient = new IndexFactoryClient.Builder().scope(scope).build(); | ||

| + | |||

| + | indexFactoryClient.createResource("myClusterID", scope); | ||

| + | |||

| + | // create a client for the same scope (endpoint, resourceID, collectionID, clusterID and indexID can be provided as extra filters) | ||

| + | IndexClient indexClient = new IndexClient.Builder().scope(scope).build(); | ||

| + | |||

| + | try { | ||

| + |     // locator and query are assumed to be defined by the caller | ||

| + |     indexClient.feedLocator(locator); | ||

| + |     indexClient.query(query); | ||

| + | } catch (IndexException e) { | ||

| + | // handle the exception | ||

| + | } | ||

| + | </source> | ||

| + | |||

| + | <!--=Full Text Index= | ||

| + | The Full Text Index is responsible for providing quick full text data retrieval capabilities in the gCube environment. | ||

| + | |||

| + | ==Implementation Overview== | ||

| + | ===Services=== | ||

| + | The full text index is implemented through three services. They are all implemented according to the Factory pattern: | ||

| + | *The '''FullTextIndexManagement Service''' represents an index manager. There is a one to one relationship between an Index and a Management instance, and their life-cycles are closely related; an Index is created by creating an instance (resource) of FullTextIndexManagement Service, and an index is removed by terminating the corresponding FullTextIndexManagement resource. The FullTextIndexManagement Service should be seen as an interface for managing the life-cycle and properties of an Index, but it is not responsible for feeding or querying its index. In addition, a FullTextIndexManagement Service resource does not store the content of its Index locally, but contains references to content stored in Content Management Service. | ||

| + | *The '''FullTextIndexUpdater Service''' is responsible for feeding an Index. One FullTextIndexUpdater Service resource can only update a single Index, but one Index can be updated by multiple FullTextIndexUpdater Service resources. Feeding is accomplished by instantiating a FullTextIndexUpdater Service resource with the EPR of the FullTextIndexManagement resource connected to the Index to update, and connecting the updater resource to a ResultSet containing the content to be fed to the Index. | ||

| + | *The '''FullTextIndexLookup Service''' is responsible for creating a local copy of an index, and exposing interfaces for querying and creating statistics for the index. One FullTextIndexLookup Service resource can only replicate and lookup a single instance, but one Index can be replicated by any number of FullTextIndexLookup Service resources. Updates to the Index will be propagated to all FullTextIndexLookup Service resources replicating that Index. | ||

| + | |||

| + | It is important to note that none of the three services have to reside on the same node; they are only connected through WebService calls, the [[IS-Notification | gCube notifications' framework]] and the [[ Content Manager (NEW) | gCube Content Management System]]. The following illustration shows the information flow and responsibilities for the different services used to implement the Full Text Index: | ||

| + | [[Image:GeneralIndexDesign.png|frame|none|Generic Editor]] | ||

| + | |||

| + | ===CQL capabilities implementation=== | ||

| + | Full Text Index uses [http://lucene.apache.org/ Lucene] as its underlying technology. A CQL Index-Relation-Term triple has a straightforward transformation in lucene. This transformation is explained through the following examples: | ||

| + | |||

| + | {| border="1" | ||

| + | ! CQL triple !! explanation !! lucene equivalent | ||

| + | |- | ||

| + | ! title adj "sun is up" | ||

| + | | documents with this phrase in their title | ||

| + | | title:"sun is up" | ||

| + | |- | ||

| + | ! title fuzzy "invorvement" | ||

| + | | documents with words "similar" to invorvement in their title | ||

| + | | title:invorvement~ | ||

| + | |- | ||

| + | ! allIndexes = "italy" (documents have 2 fields; title and abstract) | ||

| + | | documents with the word italy in some of their fields | ||

| + | | title:italy OR abstract:italy | ||

| + | |- | ||

| + | ! title proximity "5 sun up" | ||

| + | | documents with the words sun, up inside an interval of 5 words in their title | ||

| + | | title:"sun up"~5 | ||

| + | |- | ||

| + | ! date within "2005 2008" | ||

| + | | documents with a date between 2005 and 2008 | ||

| + | | date:[2005 TO 2008] | ||

| + | |} | ||

| + | |||

| + | In a complete CQL query, the triples are connected with boolean operators. Lucene supports AND, OR, NOT(AND-NOT) connections between single criteria. Thus, in order to transform a complete CQL query to a lucene query, we first transform CQL triples and then we connect them with AND, OR, NOT equivalently. | ||

| + | |||

| + | ===RowSet=== | ||

| + | The content to be fed into an Index must be served as a [[ResultSet Framework|ResultSet]] containing XML documents conforming to the ROWSET schema. This is a very simple schema, declaring that a document (ROW element) should contain any number of FIELD elements, each with a name attribute and the text to be indexed for that field. The following is a simple but valid ROWSET containing two documents: | ||

| + | <pre> | ||

| + | <ROWSET idxType="IndexTypeName" colID="colA" lang="en"> | ||

| + | <ROW> | ||

| + | <FIELD name="ObjectID">doc1</FIELD> | ||

| + | <FIELD name="title">How to create an Index</FIELD> | ||

| + | <FIELD name="contents">Just read the WIKI</FIELD> | ||

| + | </ROW> | ||

| + | <ROW> | ||

| + | <FIELD name="ObjectID">doc2</FIELD> | ||

| + | <FIELD name="title">How to create a Nation</FIELD> | ||

| + | <FIELD name="contents">Talk to the UN</FIELD> | ||

| + | <FIELD name="references">un.org</FIELD> | ||

| + | </ROW> | ||

| + | </ROWSET> | ||

| + | </pre> | ||

| + | |||

| + | The attributes idxType and colID are required and specify the Index Type that the Index must have been created with, and the collection ID of the documents under the <ROWSET> element. The lang attribute is optional, and specifies the language of the documents under the <ROWSET> element. Note that for each document a required field is the "ObjectID" field that specifies its unique identifier. | ||

| + | |||

| + | ===IndexType=== | ||

| + | How the different fields in the [[Full_Text_Index#RowSet|ROWSET]] should be handled by the Index, and how the different fields in an Index should be handled during a query, is specified through an IndexType; an XML document conforming to the IndexType schema. An IndexType contains a field list which contains all the fields which should be indexed and/or stored in order to be presented in the query results, along with a specification of how each of the fields should be handled. The following is a possible IndexType for the type of ROWSET shown above: | ||

| + | |||

| + | <pre> | ||

| + | <index-type> | ||

| + | <field-list> | ||

| + | <field name="title" lang="en"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="contents" lang="en"> | ||

| + | <index>yes</index> | ||

| + | <store>no</store> | ||

| + | <return>no</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="references" lang="en"> | ||

| + | <index>yes</index> | ||

| + | <store>no</store> | ||

| + | <return>no</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="gDocCollectionID"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="gDocCollectionLang"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | </field-list> | ||

| + | </index-type> | ||

| + | </pre> | ||

| + | |||

| + | Note that the fields "gDocCollectionID" and "gDocCollectionLang" are always required because, by default, all documents will have a collection ID and a language ("unknown" if no collection is specified). Fields present in the ROWSET but not in the IndexType will be skipped. The elements under each "field" element define how that field should be handled, and they should contain either "yes" or "no". The meaning of each of them is explained below: | ||

| + | |||

| + | *'''index''' | ||

| + | :specifies whether the specific field should be indexed or not (i.e. whether the index should look for hits within this field) | ||

| + | *'''store''' | ||

| + | :specifies whether the field should be stored in its original format to be returned in the results from a query. | ||

| + | *'''return''' | ||

| + | :specifies whether a stored field should be returned in the results from a query. A field must have been stored to be returned. (This element is not available in the currently deployed indices) | ||

| + | *'''tokenize''' | ||

| + | :specifies whether the field should be tokenized. Should usually contain "yes". | ||

| + | *'''sort''' | ||

| + | :Not used | ||

| + | *'''boost''' | ||

| + | :Not used | ||

| + | |||

| + | For more complex content types, one can also specify sub-fields as in the following example: | ||

| + | |||

| + | <index-type> | ||

| + | <field-list> | ||

| + | <field name="contents"> | ||

| + | <index>yes</index> | ||

| + | <store>no</store> | ||

| + | <return>no</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | |||

| + | <span style="color:green"><nowiki>// subfields of contents</nowiki></span> | ||

| + | <field name="title"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | |||

| + | <span style="color:green"><nowiki>// subfields of title which itself is a subfield of contents</nowiki></span> | ||

| + | <field name="bookTitle"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="chapterTitle"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | </field> | ||

| + | |||

| + | <field name="foreword"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="startChapter"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="endChapter"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | </field> | ||

| + | |||

| + | <span style="color:green"><nowiki>// not a subfield</nowiki></span> | ||

| + | <field name="references"> | ||

| + | <index>yes</index> | ||

| + | <store>no</store> | ||

| + | <return>no</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | |||

| + | </field-list> | ||

| + | </index-type> | ||

| + | |||

| + | |||

| + | Querying the field "contents" in an index using this IndexType would return hits in all its sub-fields, which is all fields except references. Querying the field "title" would return hits in both "bookTitle" and "chapterTitle" in addition to hits in the "title" field. Querying the field "startChapter" would only return hits from "startChapter", since this field does not contain any sub-fields. Please be aware that using sub-fields adds extra fields to the index, and therefore uses more disk space. | ||

| + | |||

| + | We currently have five standard index types, loosely based on the available metadata schemas. However any data can be indexed using each, as long as the RowSet follows the IndexType: | ||

| + | *index-type-default-1.0 (DublinCore) | ||

| + | *index-type-TEI-2.0 | ||

| + | *index-type-eiDB-1.0 | ||

| + | *index-type-iso-1.0 | ||

| + | *index-type-FT-1.0 | ||

| + | |||

| + | The IndexType of a FullTextIndexManagement Service resource can be changed as long as no FullTextIndexUpdater resources have connected to it. The reason for this limitation is that the processing of fields should be the same for all documents in an index; all documents in an index should be handled according to the same IndexType. | ||

| + | |||

| + | The IndexType of a FullTextIndexLookup Service resource is originally retrieved from the FullTextIndexManagement Service resource it is connected to. However, the "returned" property can be changed at any time in order to change which fields are returned. Keep in mind that only fields which have a "stored" attribute set to "yes" can have their "returned" field altered to return content. | ||

| + | |||

| + | ===Query language=== | ||

| + | The Full Text Index receives CQL queries and transforms them into Lucene queries. Queries using wildcards will not return usable query statistics. | ||

| + | |||

| + | ===Statistics=== | ||

| + | |||

| + | ===Linguistics=== | ||

| + | The linguistics component is used in the '''Full Text Index'''. | ||

| + | |||

| + | Two linguistics components are available; the '''language identifier module''', and the '''lemmatizer module'''. | ||

| + | |||

| + | The language identifier module is used during feeding in the FullTextBatchUpdater to identify the language in the documents. | ||

| + | The lemmatizer module is used by the FullTextLookup module during search operations to search for all possible forms (nouns and adjectives) of the search term. | ||

| + | |||

| + | The language identifier module has two real implementations (plugins) and a dummy plugin (doing nothing, returning always "nolang" when called). The lemmatizer module contains one real implementation (one plugin) (no suitable alternative was found to make a second plugin), and a dummy plugin (always returning an empty String ""). | ||

| + | |||

| + | Fast has provided proprietary technology for one of the language identifier modules (Fastlangid) and the lemmatizer module (Fastlemmatizer). The modules provided by Fast require a valid license to run. The license is a 32-character string. This string must be provided by Fast (contact Stefan Debald, stefan.debald@fast.no) and saved in the appropriate configuration file (see the Linguistics Licenses section). | ||

| + | |||

| + | The current license is valid until end of March 2008. | ||

| + | |||

| + | ====Plugin implementation==== | ||

| + | The classes implementing the plugin framework for the language identifier and the lemmatizer are in the SVN module common. The package is: | ||

| + | org/gcube/indexservice/common/linguistics/lemmatizerplugin | ||

| + | and | ||

| + | org/gcube/indexservice/common/linguistics/langidplugin | ||

| + | |||

| + | The class LanguageIdFactory loads an instance of the class LanguageIdPlugin. | ||

| + | The class LemmatizerFactory loads an instance of the class LemmatizerPlugin. | ||

| + | |||

| + | The language id plugins implement the class org.gcube.indexservice.common.linguistics.langidplugin.LanguageIdPlugin. | ||

| + | The lemmatizer plugins implement the class org.gcube.indexservice.common.linguistics.lemmatizerplugin.LemmatizerPlugin. | ||

| + | The factories use the method: | ||

| + | Class.forName(pluginName).newInstance(); | ||

| + | when loading the implementations. | ||

| + | The parameter pluginName is the fully qualified name (package plus class name) of the plugin class to be loaded and instantiated. | ||
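The loading step described above can be sketched as follows. This is a minimal illustration, not the actual LanguageIdFactory code: the interface <code>LangIdPluginSketch</code>, the dummy class and the factory name are hypothetical stand-ins, and the sketch uses <code>getDeclaredConstructor().newInstance()</code> because <code>Class.newInstance()</code> is deprecated in recent Java versions.

```java
// Hypothetical stand-in for LanguageIdPlugin; the real interface may differ.
interface LangIdPluginSketch {
    String identify(String text);
}

// Mirrors the documented dummy behaviour: always answers "nolang".
class DummyLangIdSketch implements LangIdPluginSketch {
    public String identify(String text) {
        return "nolang";
    }
}

class LangIdFactorySketch {
    // Loads the class named by pluginName (a fully qualified class name)
    // and instantiates it through its no-argument constructor.
    static LangIdPluginSketch load(String pluginName) {
        try {
            Class<?> cls = Class.forName(pluginName);
            Object instance = cls.getDeclaredConstructor().newInstance();
            return (LangIdPluginSketch) instance;
        } catch (ReflectiveOperationException | ClassCastException e) {
            // Unknown class, missing constructor, or a class that is not a plugin.
            throw new IllegalArgumentException("Cannot load plugin " + pluginName, e);
        }
    }
}
```

A caller would pass the class name chosen at resource creation time, for example <code>LangIdFactorySketch.load(DummyLangIdSketch.class.getName())</code>.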

| + | |||

| + | ====Language Identification==== | ||

| + | There are two real implementations of the language identification plugin available in addition to the dummy plugin that always returns "nolang". | ||

| + | |||

| + | The plugin implementations that can be selected when the FullTextBatchUpdaterResource is created: | ||

| + | |||

| + | org.gcube.indexservice.common.linguistics.jtextcat.JTextCatPlugin | ||

| + | |||

| + | org.gcube.indexservice.linguistics.fastplugin.FastLanguageIdPlugin | ||

| + | |||

| + | org.gcube.indexservice.common.linguistics.languageidplugin.DummyLangidPlugin | ||

| + | |||

| + | =====JTextCat===== | ||

| + | The JTextCat is maintained by http://textcat.sourceforge.net/. It is a lightweight text categorization tool written in Java. It implements the N-Gram-Based Text Categorization algorithm described here: | ||

| + | http://citeseer.ist.psu.edu/68861.html | ||

| + | It supports the languages: German, English, French, Spanish, Italian, Swedish, Polish, Dutch, Norwegian, Finnish, Albanian, Slovakian, Slovenian, Danish and Hungarian. | ||

| + | |||

| + | The JTextCat is loaded and accessed by the plugin: | ||

| + | org.gcube.indexservice.common.linguistics.jtextcat.JTextCatPlugin | ||

| + | |||

| + | The JTextCat needs no config or bigram files, since all the statistical data about the languages is contained in the package itself. | ||

| + | |||

| + | The JTextCat is delivered in the jar file: textcat-1.0.1.jar. | ||

| + | |||

| + | The license for the JTextCat: | ||

| + | http://www.gnu.org/copyleft/lesser.html | ||

| + | |||

| + | =====Fastlangid===== | ||

| + | The Fast language identification module is developed by Fast. It supports "all" languages used on the web. The tool is implemented in C++. The C++ code is loaded as a shared library object. | ||

| + | The Fast langid plugin interfaces with a Java wrapper that loads the shared library objects and calls the native C++ code. | ||

| + | The shared library objects are compiled on Linux RHE3 and RHE4. | ||

| + | |||

| + | The Java native interface is generated using Swig. | ||

| + | |||

| + | The Fast langid module is loaded by the plugin (using the LanguageIdFactory) | ||

| + | |||

| + | org.gcube.indexservice.linguistics.fastplugin.FastLanguageIdPlugin | ||

| + | |||

| + | The plugin loads the shared object library and, when init is called, instantiates the native C++ objects that identify the languages. | ||

| + | |||

| + | The Fastlangid is in the SVN module: | ||

| + | trunk/linguistics/fastlinguistics/fastlangid | ||

| + | |||

| + | The lib catalog contains one catalog for RHE3 and one catalog for RHE4 shared objects (.so). The etc catalog contains the config files. The license string is contained in the config file config.txt | ||

| + | |||

| + | The shared library object is called liblangid.so | ||

| + | |||

| + | The configuration files for the langid module are installed in $GLOBUS_LOCATION/etc/langid. | ||

| + | |||

| + | The org_gcube_indexservice_langid.jar contains the plugin FastLanguageIdPlugin (that is loaded by the LanguageIdFactory) and the Java native interface to the shared library object. | ||

| + | |||

| + | The shared library object liblangid.so is deployed in the $GLOBUS_LOCATION/lib catalogue. | ||

| + | |||

| + | The license for the Fastlangid plugin: | ||

| + | |||

| + | =====Language Identifier Usage===== | ||

| + | |||

| + | The language identifier is used by the Full Text Updater in the Full Text Index. | ||

| + | The plugin to use for an updater is decided when the resource is created, as a part of the create resource call. | ||

| + | (see Full Text Updater). The parameter is the package name of the implementation to be loaded and used to identify the language. | ||

| + | |||

| + | The language identification module and the lemmatizer module are loaded at runtime by using a factory that loads the implementation that is going to be used. | ||

| + | |||

| + | The fed documents may specify the language of each field in the document. If present, this specified language is used when indexing the document, and the language id module is not used. | ||

| + | If no language is specified in the document, and a language identification plugin is loaded, the FullTextIndexUpdater Service will try to identify the language of the field using the loaded plugin. | ||

| + | Since language is assigned at the Collections level in Diligent, all fields of all documents in a language aware collection should contain a "lang" attribute with the language of the collection. | ||
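The decision order described above (explicit field language first, then the loaded plugin) can be sketched as below. The class and method names are hypothetical, the plugin is modelled as a plain function, and returning "nolang" when no plugin is loaded is an assumption borrowed from the dummy plugin's behaviour rather than something the service is documented to do.

```java
import java.util.function.Function;

class FieldLanguageSketch {
    // explicitLang: value of the field's "lang" attribute, or null if absent.
    // identifier:   the loaded language id plugin, or null if none is loaded.
    static String resolve(String explicitLang, String fieldText,
                          Function<String, String> identifier) {
        if (explicitLang != null && !explicitLang.isEmpty()) {
            return explicitLang;               // language given in the document wins
        }
        if (identifier != null) {
            return identifier.apply(fieldText); // fall back to the plugin
        }
        return "nolang";                        // assumed fallback, see lead-in
    }
}
```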

| + | |||

| + | A language aware query can be performed on a per-query or per-term basis: | ||

| + | *the query "_querylang_en: car OR bus OR plane" will look for English occurrences of all the terms in the query. | ||

| + | *the queries "car OR _lang_en:bus OR plane" and "car OR _lang_en_title:bus OR plane" will only limit the terms "bus" and "title:bus" to English occurrences. (the version without a specified field will not work in the currently deployed indices) | ||

| + | *Since language is specified at a collection level, language aware queries should only be used for language neutral collections. | ||

| + | |||

| + | ==== Lemmatization ==== | ||

| + | There is one real implementation of the lemmatizer plugin available in addition to the dummy plugin that always returns "" (empty string). | ||

| + | |||

| + | The plugin implementation is selected when the FullTextLookupResource is created: | ||

| + | |||

| + | org.diligentproject.indexservice.linguistics.fastplugin.FastLemmatizerPlugin | ||

| + | |||

| + | org.diligentproject.indexservice.common.linguistics.languageidplugin.DummyLemmatizerPlugin | ||

| + | |||

| + | =====Fastlemmatizer===== | ||

| + | |||

| + | The Fast lemmatizer module is developed by Fast. The lemmatizer module depends on .aut files (config files) for the language to be lemmatized. Both expansion and reduction are supported, but only expansion is used. The terms (nouns and adjectives) in the query are expanded. | ||

| + | |||

| + | The lemmatizer is configured for the following languages: German, Italian, Portuguese, French, English, Spanish, Dutch, Norwegian. | ||

| + | To support more languages, additional .aut files must be loaded and the config file LemmatizationQueryExpansion.xml must be updated. | ||

| + | |||

| + | The lemmatizer is implemented in C++. The C++ code is loaded as a shared library object. The Fast lemmatizer plugin interfaces with a Java wrapper that loads the shared library objects and calls the native C++ code. The shared library objects are compiled on Linux RHE3 and RHE4. | ||

| + | |||

| + | The Java native interface is generated using Swig. | ||

| + | |||

| + | The Fast lemmatizer module is loaded by the plugin (using the LemmatizerFactory) | ||

| + | |||

| + | org.diligentproject.indexservice.linguistics.fastplugin.FastLemmatizerPlugin | ||

| + | |||

| + | The plugin loads the shared object library and, when init is called, instantiates the native C++ objects. | ||

| + | |||

| + | The Fastlemmatizer is in the SVN module: trunk/linguistics/fastlinguistics/fastlemmatizer | ||

| + | |||

| + | The lib catalog contains one catalog for RHE3 and one catalog for RHE4 shared objects (.so). The etc catalog contains the config files. The license string is contained in the config file LemmatizerConfigQueryExpansion.xml | ||

| + | The shared library object is called liblemmatizer.so | ||

| + | |||

| + | The configuration files for the lemmatizer module are installed in $GLOBUS_LOCATION/etc/lemmatizer. | ||

| + | |||

| + | The org_diligentproject_indexservice_lemmatizer.jar contains the plugin FastLemmatizerPlugin (that is loaded by the LemmatizerFactory) and the Java native interface to the shared library. | ||

| + | |||

| + | The shared library liblemmatizer.so is deployed in the $GLOBUS_LOCATION/lib catalogue. | ||

| + | |||

| + | The '''$GLOBUS_LOCATION/lib''' directory must therefore be included in the '''LD_LIBRARY_PATH''' environment variable. | ||

| + | |||

| + | ===== Fast lemmatizer configuration ===== | ||

| + | The LemmatizerConfigQueryExpansion.xml contains the paths to the .aut files that are loaded when a lemmatizer is instantiated. | ||

| + | |||

| + | <lemmas active="yes" parts_of_speech="NA">etc/lemmatizer/resources/dictionaries/lemmatization/en_NA_exp.aut</lemmas> | ||

| + | |||

| + | The path is relative to the environment variable GLOBUS_LOCATION. If this path is wrong, the Java virtual machine will core dump. | ||
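Because a wrong path crashes the JVM inside native code, it can be worth checking the configured paths from Java before the lemmatizer is instantiated. The following is only a sketch of such a guard: the class and its methods are hypothetical and not part of the lemmatizer plugin.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

class AutPathCheckSketch {
    // Resolves a path from the lemmatizer config against GLOBUS_LOCATION.
    static Path resolve(String globusLocation, String relativePath) {
        return Paths.get(globusLocation).resolve(relativePath).normalize();
    }

    // True only if the resolved .aut file exists as a readable regular file.
    static boolean isUsable(String globusLocation, String relativePath) {
        Path p = resolve(globusLocation, relativePath);
        return Files.isRegularFile(p) && Files.isReadable(p);
    }
}
```

A caller could, for instance, evaluate <code>AutPathCheckSketch.isUsable(System.getenv("GLOBUS_LOCATION"), "etc/lemmatizer/resources/dictionaries/lemmatization/en_NA_exp.aut")</code> and refuse to load the native library when it returns false.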

| + | |||

| + | The license for the Fastlemmatizer plugin: | ||

| + | |||

| + | ===== Fast lemmatizer logging ===== | ||

| + | The lemmatizer logs info, debug and error messages to the file "lemmatizer.txt" | ||

| + | |||

| + | ===== Lemmatization Usage ===== | ||

| + | The FullTextIndexLookup Service uses expansion during lemmatization: a word in a query is expanded into all known forms of the word. The language of the query must therefore be known in order to choose the words to expand the query with. Currently, the methods used to specify the language for a language aware query are also used to specify the language for the lemmatization process. A way of separating these two specifications (so that lemmatization can be performed without performing a language aware query) is planned. | ||

| + | |||

| + | ==== Linguistics Licenses ==== | ||

| + | The current license key for the fastlangid and fastlemmatizer is valid through March 2008. | ||

| + | |||

| + | If a new license is required please contact: Stefan.debald@fast.no to get a new license key. | ||

| + | |||

| + | The license must be installed both in the Fastlangid and the Fastlemmatizer module. | ||

| + | |||

| + | The fastlangid license is installed by updating the SVN text file: | ||

| + | '''linguistics/fastlinguistics/fastlangid/etc/langid/config.txt''' | ||

| + | |||

| + | Use a text editor and replace the 32 character license string with the new license string: | ||

| + | |||

| + | // The license key | ||

| + | // Contact stefand.debald@fast.no for new license key: | ||

| + | LICSTR=KILMDEPFKHNBNPCBAKONBCCBFLKPOEFG | ||

| + | |||

| + | A running system is updated by replacing the license string in the file: | ||

| + | '''$GLOBUS_LOCATION/etc/langid/config.txt''' | ||

| + | as described above. | ||

| + | |||

| + | The fastlemmatizer license is installed by updating the SVN text file: | ||

| + | |||

| + | '''linguistics/fastlinguistics/fastlemmatizer/etc/LemmatizationConfigQueryExpansion.xml''' | ||

| + | |||

| + | Use a text editor and replace the 32 character license string with the new license string: | ||

| + | <lemmatization default_mode="query_expansion" default_query_language="en" license="KILMDEPFKHNBNPCBAKONBCCBFLKPOEFG"> | ||

| + | |||

| + | The running system is updated by replacing the license string in the file: | ||

| + | '''$GLOBUS_LOCATION/etc/lemmatizer/LemmatizationConfigQueryExpansion.xml''' | ||

| + | |||

| + | ===Partitioning=== | ||

| + | In order to handle situations where an Index replication does not fit on a single node, partitioning has been implemented for the FullTextIndexLookup Service: when there is not enough space to perform an update/addition on the FullTextIndexLookup Service resource, a new resource is created to handle all the content which did not fit on the first resource. The partitioning is handled automatically and is transparent when performing a query; the possibility of enabling/disabling partitioning will be added in the future. In the deployed Indices, partitioning has been disabled due to problems with the creation of statistics. This is expected to be fixed shortly. | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | ==Usage Example== | ||

| + | ===Create a Management Resource=== | ||

| + | |||

| + | <span style="color:green">//Get the factory portType</span> | ||

| + | <nowiki>String managementFactoryURI = "http://some.domain.no:8080/wsrf/services/gcube/index/FullTextIndexManagementFactoryService";</nowiki> | ||

| + | FullTextIndexManagementFactoryServiceAddressingLocator managementFactoryLocator = new FullTextIndexManagementFactoryServiceAddressingLocator(); | ||

| + | |||

| + | managementFactoryEPR = new EndpointReferenceType(); | ||

| + | managementFactoryEPR.setAddress(new Address(managementFactoryURI)); | ||

| + | managementFactory = managementFactoryLocator | ||

| + | .getFullTextIndexManagementFactoryPortTypePort(managementFactoryEPR); | ||

| + | |||

| + | <span style="color:green">//Create generator resource and get endpoint reference of WS-Resource.</span> | ||

| + | org.gcube.indexservice.fulltextindexmanagement.stubs.CreateResource managementCreateArguments = | ||

| + | new org.gcube.indexservice.fulltextindexmanagement.stubs.CreateResource(); | ||

| + | managementCreateArguments.setIndexTypeName(indexType);<span style="color:green">//Optional (only needed if not provided in RS)</span> | ||

| + | managementCreateArguments.setIndexID(indexID);<span style="color:green">//Optional (should usually not be set, and the service will create the ID)</span> | ||

| + | managementCreateArguments.setCollectionID("myCollectionID"); | ||

| + | managementCreateArguments.setContentType("MetaData"); | ||

| + | |||

| + | org.gcube.indexservice.fulltextindexmanagement.stubs.CreateResourceResponse managementCreateResponse = | ||

| + | managementFactory.createResource(managementCreateArguments); | ||

| + | |||

| + | managementInstanceEPR = managementCreateResponse.getEndpointReference(); | ||

| + | String indexID = managementCreateResponse.getIndexID(); | ||

| + | |||

| + | ===Create an Updater Resource and start feeding=== | ||

| + | |||

| + | <span style="color:green">//Get the factory portType</span> | ||

| + | <nowiki>updaterFactoryURI = "http://some.domain.no:8080/wsrf/services/gcube/index/FullTextIndexUpdaterFactoryService";</nowiki> <span style="color:green">//could be on any node</span> | ||

| + | updaterFactoryEPR = new EndpointReferenceType(); | ||

| + | updaterFactoryEPR.setAddress(new Address(updaterFactoryURI)); | ||

| + | updaterFactory = updaterFactoryLocator | ||

| + | .getFullTextIndexUpdaterFactoryPortTypePort(updaterFactoryEPR); | ||

| + | |||

| + | |||

| + | <span style="color:green">//Create updater resource and get endpoint reference of WS-Resource</span> | ||

| + | org.gcube.indexservice.fulltextindexupdater.stubs.CreateResource updaterCreateArguments = | ||

| + | new org.gcube.indexservice.fulltextindexupdater.stubs.CreateResource(); | ||

| + | |||

| + | <span style="color:green">//Connect to the correct Index</span> | ||

| + | updaterCreateArguments.setMainIndexID(indexID); | ||

| + | |||

| + | <span style="color:green">//Now let's insert some data into the index... Firstly, get the updater EPR.</span> | ||

| + | org.gcube.indexservice.fulltextindexupdater.stubs.CreateResourceResponse updaterCreateResponse = updaterFactory | ||

| + | .createResource(updaterCreateArguments); | ||

| + | updaterInstanceEPR = updaterCreateResponse.getEndpointReference(); | ||

| + | |||

| + | |||

| + | <span style="color:green">//Get updater instance PortType</span> | ||

| + | updaterInstance = updaterInstanceLocator.getFullTextIndexUpdaterPortTypePort(updaterInstanceEPR); | ||

| + | |||

| + | |||

| + | <span style="color:green">//read the EPR of the ResultSet containing the ROWSETs to feed into the index </span> | ||

| + | BufferedReader in = new BufferedReader(new FileReader(eprFile)); | ||

| + | String line; | ||

| + | resultSetLocator = ""; | ||

| + | while((line = in.readLine())!=null){ | ||

| + | resultSetLocator += line; | ||

| + | } | ||

| + | |||

| + | <span style="color:green">//Tell the updater to start gathering data from the ResultSet</span> | ||

| + | updaterInstance.process(resultSetLocator); | ||

| + | |||

| + | ===Create a Lookup resource and perform a query=== | ||

| + | |||

| + | <span style="color:green">//Let's put it on another node for fun...</span> | ||

| + | <nowiki>lookupFactoryURI = "http://another.domain.no:8080/wsrf/services/gcube/index/FullTextIndexLookupFactoryService";</nowiki> | ||

| + | FullTextIndexLookupFactoryServiceAddressingLocator lookupFactoryLocator = new FullTextIndexLookupFactoryServiceAddressingLocator(); | ||

| + | EndpointReferenceType lookupFactoryEPR = null; | ||

| + | EndpointReferenceType lookupEPR = null; | ||

| + | FullTextIndexLookupFactoryPortType lookupFactory = null; | ||

| + | FullTextIndexLookupPortType lookupInstance = null; | ||

| + | |||

| + | <span style="color:green">//Get factory portType</span> | ||

| + | lookupFactoryEPR= new EndpointReferenceType(); | ||

| + | lookupFactoryEPR.setAddress(new Address(lookupFactoryURI)); | ||

| + | lookupFactory = lookupFactoryLocator.getFullTextIndexLookupFactoryPortTypePort(lookupFactoryEPR); | ||

| + | |||

| + | <span style="color:green">//Create resource and get endpoint reference of WS-Resource</span> | ||

| + | org.gcube.indexservice.fulltextindexlookup.stubs.CreateResource lookupCreateResourceArguments = | ||

| + | new org.gcube.indexservice.fulltextindexlookup.stubs.CreateResource(); | ||

| + | org.gcube.indexservice.fulltextindexlookup.stubs.CreateResourceResponse lookupCreateResponse = null; | ||

| + | |||

| + | lookupCreateResourceArguments.setMainIndexID(indexID); | ||

| + | lookupCreateResponse = lookupFactory.createResource( lookupCreateResourceArguments); | ||

| + | lookupEPR = lookupCreateResponse.getEndpointReference(); | ||

| + | |||

| + | <span style="color:green">//Get instance PortType</span> | ||

| + | lookupInstance = instanceLocator.getFullTextIndexLookupPortTypePort(lookupEPR); | ||

| + | |||

| + | <span style="color:green">//Perform a query</span> | ||

| + | String query = "good OR evil"; | ||

| + | String epr = lookupInstance.query(query); | ||

| + | |||

| + | <span style="color:green">//Print the results to screen. (refer to the [[ResultSet Framework]] page for a more detailed explanation)</span> | ||

| + | RSXMLReader reader=null; | ||

| + | ResultElementBase[] results; | ||

| + | |||

| + | try{ | ||

| + | <span style="color:green">//create a reader for the ResultSet we created</span> | ||

| + | reader = RSXMLReader.getRSXMLReader(new RSLocator(epr)); | ||

| + | |||

| + | <span style="color:green">//Print each part of the RS to std.out</span> | ||

| + | System.out.println("<Results>"); | ||

| + | do{ | ||

| + | System.out.println(" <Part>"); | ||

| + | if (reader.getNumberOfResults() > 0){ | ||

| + | results = reader.getResults(ResultElementGeneric.class); | ||

| + | for(int i = 0; i < results.length; i++ ){ | ||

| + | System.out.println(" "+results[i].toXML()); | ||

| + | } | ||

| + | } | ||

| + | System.out.println(" </Part>"); | ||

| + | if(!reader.getNextPart()){ | ||

| + | break; | ||

| + | } | ||

| + | } | ||

| + | while(true); | ||

| + | System.out.println("</Results>"); | ||

| + | } | ||

| + | catch(Exception e){ | ||

| + | e.printStackTrace(); | ||

| + | } | ||

| + | |||

| + | ===Getting statistics from a Lookup resource=== | ||

| + | |||

| + | String statsLocation = lookupInstance.createStatistics(new CreateStatistics()); | ||

| + | |||

| + | <span style="color:green">//Connect to a CMS Running Instance</span> | ||

| + | EndpointReferenceType cmsEPR = new EndpointReferenceType(); | ||

| + | <nowiki>cmsEPR.setAddress(new Address("http://swiss.domain.ch:8080/wsrf/services/gcube/contentmanagement/ContentManagementServiceService"));</nowiki> | ||

| + | ContentManagementServiceServiceAddressingLocator cmslocator = new ContentManagementServiceServiceAddressingLocator(); | ||

| + | cms = cmslocator.getContentManagementServicePortTypePort(cmsEPR); | ||

| + | |||

| + | <span style="color:green">//Retrieve the statistics file from CMS</span> | ||

| + | GetDocumentParameters getDocumentParams = new GetDocumentParameters(); | ||

| + | getDocumentParams.setDocumentID(statsLocation); | ||

| + | getDocumentParams.setTargetFileLocation(BasicInfoObjectDescription.RAW_CONTENT_IN_MESSAGE); | ||

| + | DocumentDescription description = cms.getDocument(getDocumentParams); | ||

| + | |||

| + | <span style="color:green">//Write the statistics file from memory to disk </span> | ||

| + | File downloadedFile = new File("Statistics.xml"); | ||

| + | DecompressingInputStream input = new DecompressingInputStream( | ||

| + | new BufferedInputStream(new ByteArrayInputStream(description.getRawContent()), 2048)); | ||

| + | BufferedOutputStream output = new BufferedOutputStream( new FileOutputStream(downloadedFile), 2048); | ||

| + | byte[] buffer = new byte[2048]; | ||

| + | int length; | ||

| + | while ( (length = input.read(buffer)) >= 0){ | ||

| + | output.write(buffer, 0, length); | ||

| + | } | ||

| + | input.close(); | ||

| + | output.close(); | ||

| + | --> | ||

| + | |||

| + | <!--=Geo-Spatial Index= | ||

| + | ==Implementation Overview== | ||

| + | ===Services=== | ||

| + | The geo index is implemented through three services, in the same manner as the full text index. They are all implemented according to the Factory pattern: | ||

| + | *The '''GeoIndexManagement Service''' represents an index manager. There is a one to one relationship between an Index and a Management instance, and their life-cycles are closely related; an Index is created by creating an instance (resource) of GeoIndexManagement Service, and an index is removed by terminating the corresponding GeoIndexManagement resource. The GeoIndexManagement Service should be seen as an interface for managing the life-cycle and properties of an Index, but it is not responsible for feeding or querying its index. In addition, a GeoIndexManagement Service resource does not store the content of its Index locally, but contains references to content stored in Content Management Service. | ||

| + | *The '''GeoIndexUpdater Service''' is responsible for feeding an Index. One GeoIndexUpdater Service resource can only update a single Index, but one Index can be updated by multiple GeoIndexUpdater Service resources. Feeding is accomplished by instantiating a GeoIndexUpdater Service resource with the EPR of the GeoIndexManagement resource connected to the Index to update, and connecting the updater resource to a ResultSet containing the content to be fed to the Index. | ||

| + | *The '''GeoIndexLookup Service''' is responsible for creating a local copy of an index, and exposing interfaces for querying and creating statistics for the index. One GeoIndexLookup Service resource can only replicate and lookup a single instance, but one Index can be replicated by any number of GeoIndexLookup Service resources. Updates to the Index will be propagated to all GeoIndexLookup Service resources replicating that Index. | ||

| + | |||

| + | It is important to note that none of the three services have to reside on the same node; they are only connected through WebService calls, the [[IS-Notification | gCube notifications' framework]] and the [[ Content Manager (NEW) | gCube Content Management System]]. The following illustration shows the information flow and responsibilities for the different services used to implement the Geo Index: | ||

| + | [[Image:GeneralIndexDesign.png|frame|none|Generic Editor]] | ||

| + | |||

| + | ===Underlying Technology=== | ||

| + | Geo-Spatial Index uses [http://geotools.org/ Geotools] as its underlying technology. The documents hosted in a Geo-Spatial Index Lookup belong to different collections and languages. For each language of each collection, the Geo-Spatial Index Lookup uses a separate R-tree to index the corresponding documents. As described in the following section, the CQL queries received by a Geo Index Lookup may refer to specific languages and collections. Through this design we aim at high performance for complicated queries that involve many collections and languages. | ||

| + | |||

| + | ===CQL capabilities implementation=== | ||

| + | Geo-Spatial Index Lookup supports one custom CQL relation. The "geosearch" relation has a number of modifiers, that specify the collection and language of the results, the inclusion type of the query, a refiner for filtering further the results and a ranker for computing a score for each result. All of the modifiers are optional. Let's see the following example in order to understand better the geosearch relation and its modifiers: | ||

| + | |||

| + | <pre> | ||

| + | geo geosearch/colID="colA"/lang="en"/inclusion="0"/ranker="rankerA false arg1 arg2"/refiner="refinerA arg1 arg2 arg3" "1 1 1 10 10 1 10 10" | ||

| + | </pre> | ||

| + | |||

| + | In this example the results will be the documents that belong to the collection with ID "colA", are in English and intersect with the polygon defined by the points (1,1), (1,10), (10,1), (10,10). These results will be filtered by the refiner with ID "refinerA", which takes "arg1 arg2 arg3" as arguments for the filtering operation, then ordered by "rankerA", which takes "arg1 arg2" as arguments for the ranking operation, and finally returned as the output of this simple CQL query. The "false" indication in the ranker modifier signifies that we do not want reverse ordering of the results (true signifies that the higher scores must be placed at the end). There are three inclusion types: 0 is "intersects", 1 is "contains" (documents that are contained in the specified polygon) and 2 is "inside" (documents that are inside the specified polygon). The next sections provide the details for the refiners and rankers. | ||

| + | |||

| + | A complete CQL query for a Geo-Spatial Index Lookup will contain many CQL geosearch triples, connected with AND, OR, NOT operators. The approach we follow in order to execute CQL queries, is to apply boolean algebra rules, and transform the initial query to an equivalent one. We aim at producing a query which is a union of operations that each refers to a single R-tree. Additionally we apply "cut-off" rules that eliminate parts of the initial query that have a zero number of results. Consider the following example of a "cut-off" rule: | ||

| + | |||

| + | <pre> | ||

| + | (geo geosearch/colID="colA"/inclusion="1" <P1>) AND (geo geosearch/colID="colA"/inclusion="1" <P2>) | ||

| + | </pre> | ||

| + | |||

| + | Here we want documents of collection "colA" that are contained in polygon P1 AND are also contained in polygon P2. Note that if the collections in the two criteria were different then we could eliminate this subquery, since it could not produce any result (each document belongs to one collection only). Since the two criteria specify the same collection, we must examine the relation of the two polygons. If the two polygons do not intersect then | ||

| + | there is no area in which the documents should be contained, so no document can satisfy the conjunction of the 2 criteria. This is depicted in the following figure: | ||

| + | |||

| + | [[Image:GeoInter.jpg|500px|frame|center|Intersection of polygons P1 and P2]] | ||
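The cut-off rule above can be sketched as follows. This is an illustration under simplifying assumptions, not the index's actual code: polygons are approximated by axis-aligned bounding boxes, and the class and method names are hypothetical. The check is conservative: if the bounding boxes do not intersect, the polygons cannot intersect either, so the subquery can safely be dropped; if the boxes do intersect, the subquery is kept.

```java
class CutOffSketch {
    // Axis-aligned bounding box standing in for a query polygon.
    static final class Box {
        final double minX, minY, maxX, maxY;

        Box(double x1, double y1, double x2, double y2) {
            minX = Math.min(x1, x2);
            maxX = Math.max(x1, x2);
            minY = Math.min(y1, y2);
            maxY = Math.max(y1, y2);
        }

        boolean intersects(Box other) {
            return minX <= other.maxX && other.minX <= maxX
                && minY <= other.maxY && other.minY <= maxY;
        }
    }

    // True when the conjunction of two "contained in" criteria
    // cannot produce any result and may be eliminated.
    static boolean canCutOff(String colA, Box a, String colB, Box b) {
        if (!colA.equals(colB)) {
            return true;            // a document belongs to one collection only
        }
        return !a.intersects(b);    // no common area to be contained in
    }
}
```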

| + | |||

| + | The transformation of an initial CQL query to a union of R-tree operations is depicted in the following figure: | ||

| + | |||

| + | [[Image:GeoXform.jpg|500px|frame|center|Geo-Spatial Index Lookup transformation of CQL queries]] | ||

| + | |||

| + | Each R-tree operation refers to a lookup operation for a given polygon on a single R-tree. The MergeSorter component implements the union of the individual R-tree operations, based on the scores of the results. Flow control is supported by the MergeSorter component in order to pause and synchronize the workers that execute the single R-tree operations, depending on the behavior of the client that reads the results. | ||
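The score-based union performed by a component like the MergeSorter can be sketched as a k-way merge. The code below is a hypothetical illustration, not the actual MergeSorter: it assumes each R-tree operation delivers its hits already sorted by descending score, and it leaves out the flow control (pausing and synchronizing workers) mentioned above.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.PriorityQueue;

class MergeSortSketch {
    // A scored result coming from a single R-tree operation.
    static final class Hit {
        final String id;
        final double score;

        Hit(String id, double score) {
            this.id = id;
            this.score = score;
        }
    }

    // Tracks the next unread hit of one input.
    private static final class Cursor {
        Hit head;
        final Iterator<Hit> rest;

        Cursor(Iterator<Hit> it) {
            rest = it;
            head = it.next();
        }
    }

    // Merges inputs that are each sorted by descending score
    // into a single list sorted by descending score.
    static List<Hit> merge(List<List<Hit>> sortedInputs) {
        PriorityQueue<Cursor> queue = new PriorityQueue<>(
            (a, b) -> Double.compare(b.head.score, a.head.score));
        for (List<Hit> input : sortedInputs) {
            Iterator<Hit> it = input.iterator();
            if (it.hasNext()) {
                queue.add(new Cursor(it));
            }
        }
        List<Hit> merged = new ArrayList<>();
        while (!queue.isEmpty()) {
            Cursor best = queue.poll();     // input whose head has the highest score
            merged.add(best.head);
            if (best.rest.hasNext()) {
                best.head = best.rest.next();
                queue.add(best);            // re-insert with its new head
            }
        }
        return merged;
    }
}
```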

| + | |||

| + | ===RowSet=== | ||

| + | The content to be fed into a Geo Index must be served as a [[ResultSet Framework|ResultSet]] containing XML documents conforming to the GeoROWSET schema. This is a very simple schema, declaring that an object (ROW element) should contain an id, start and end X coordinates (x1 is mandatory; x2 is set equal to x1 if not provided) as well as start and end Y coordinates (y1 is mandatory; y2 is set equal to y1 if not provided). In addition, a ROW may contain any number of FIELD elements, each with a name attribute and information to be stored and perhaps used for [[Index Management Framework#Refinement|refinement]] of a query or [[Index Management Framework#Ranking|ranking]] of results. In a similar fashion to fulltext indices, a row in a GeoROWSET may contain only a subset of the fields specified in the [[Index Management Framework#GeoIndexType|IndexType]]. The following is a simple but valid GeoROWSET containing two objects: | ||

| + | <pre> | ||

| + | <ROWSET colID="colA" lang="en"> | ||

| + | <ROW id="doc1" x1="4321" y1="1234"> | ||

| + | <FIELD name="StartTime">2001-05-27T14:35:25.523</FIELD> | ||

| + | <FIELD name="EndTime">2001-05-27T14:38:03.764</FIELD> | ||

| + | </ROW> | ||

| + | <ROW id="doc2" x1="1337" x2="4123" y1="1337" y2="6534"> | ||

| + | <FIELD name="StartTime">2001-06-27</FIELD> | ||

| + | <FIELD name="EndTime">2001-07-27</FIELD> | ||

| + | </ROW> | ||

| + | </ROWSET> | ||

| + | </pre> | ||

| + | |||

| + | The attributes colID and lang specify the collection ID and the language of the documents under the <ROWSET> element. The first one is required, while the second one is optional. | ||
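The coordinate defaulting rule of the GeoROWSET schema (x2 falls back to x1, y2 falls back to y1) can be sketched with the standard Java DOM parser. The class <code>GeoRowSketch</code> is hypothetical, and treating the coordinates as doubles is an assumption, since the numeric type is not stated here.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

class GeoRowSketch {
    final String id;
    final double x1, x2, y1, y2;

    GeoRowSketch(String id, double x1, double x2, double y1, double y2) {
        this.id = id;
        this.x1 = x1;
        this.x2 = x2;
        this.y1 = y1;
        this.y2 = y2;
    }

    // Reads one ROW element; x2 and y2 default to x1 and y1 when absent.
    static GeoRowSketch fromElement(Element row) {
        double x1 = Double.parseDouble(row.getAttribute("x1"));
        double y1 = Double.parseDouble(row.getAttribute("y1"));
        double x2 = row.hasAttribute("x2") ? Double.parseDouble(row.getAttribute("x2")) : x1;
        double y2 = row.hasAttribute("y2") ? Double.parseDouble(row.getAttribute("y2")) : y1;
        return new GeoRowSketch(row.getAttribute("id"), x1, x2, y1, y2);
    }

    // Parses all ROW elements of a GeoROWSET document given as a string.
    static List<GeoRowSketch> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            NodeList rows = doc.getElementsByTagName("ROW");
            List<GeoRowSketch> result = new ArrayList<>();
            for (int i = 0; i < rows.getLength(); i++) {
                result.add(fromElement((Element) rows.item(i)));
            }
            return result;
        } catch (Exception e) {
            throw new IllegalArgumentException("Not a parsable GeoROWSET", e);
        }
    }
}
```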

| + | |||

| + | ===GeoIndexType=== | ||

| + | Which fields may be present in the [[Index Management Framework#RowSet_2|RowSet]], and how these fields are to be handled by the Geo Index is specified through a GeoIndexType; an XML document conforming to the GeoIndexType schema. Which GeoIndexType to use for a specific GeoIndex instance, is specified by supplying a GeoIndexType ID during initialization of the GeoIndexManagement resource. A GeoIndexType contains a field list which contains all the fields which can be stored in order to be presented in the query results or used for refinement. The following is a possible IndexType for the type of ROWSET shown above: | ||

| + | |||

| + | <pre> | ||

| + | <index-type> | ||

| + | <field-list> | ||

| + | <field name="StartTime"> | ||

| + | <type>date</type> | ||

| + | <return>yes</return> | ||

| + | </field> | ||

| + | <field name="EndTime"> | ||

| + | <type>date</type> | ||

| + | <return>yes</return> | ||

| + | </field> | ||

| + | </field-list> | ||

| + | </index-type> | ||

| + | </pre> | ||

| + | |||

| + | Fields present in the ROWSET but not in the IndexType will be skipped. The two elements under each "field" element are used to define how that field should be handled. The meaning and expected content of each of them is explained below: | ||

| + | |||

| + | *'''type''' specifies the data type of the field. Accepted values are: | ||

| + | **SHORT - A number fitting into a Java "short" | ||

| + | **INT - A number fitting into a Java "int" | ||

| + | **LONG - A number fitting into a Java "long" | ||

| + | **DATE - A date in the format yyyy-MM-dd'T'HH:mm:ss.s where only yyyy is mandatory | ||

| + | **FLOAT - A decimal number fitting into a Java "float" | ||

| + | **DOUBLE - A decimal number fitting into a Java "double" | ||

| + | **STRING - A string with a maximum length of roughly 100 characters | ||

| + | *'''return''' specifies whether the field should be returned in the results from a query. "yes" and "no" are the only accepted values. | ||
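
As a rough illustration of the type list above, the following sketch converts a raw FIELD value into a Java object according to the declared type. It is not the GeoIndex implementation: the class FieldTypeSketch, its DataType enum and the convert() helper are hypothetical. Two assumptions are made: type names are matched case-insensitively (the example IndexType writes "date" in lower case), and dates are interpreted as UTC and turned into seconds from the Epoch, with missing date parts filled from defaults.

```java
import java.time.LocalDateTime;
import java.time.ZoneOffset;
import java.util.Locale;

public class FieldTypeSketch {

    /** Hypothetical mirror of the accepted type names. */
    public enum DataType { SHORT, INT, LONG, DATE, FLOAT, DOUBLE, STRING }

    // Defaults used to complete a partial date; only the year is mandatory.
    private static final String DATE_DEFAULTS = "0000-01-01T00:00:00";

    /** Converts a raw field value according to the declared type name. */
    public static Object convert(String typeName, String rawValue) {
        DataType type = DataType.valueOf(typeName.trim().toUpperCase(Locale.ROOT));
        String value = rawValue.trim();
        switch (type) {
            case SHORT:  return Short.valueOf(value);   // throws if out of range
            case INT:    return Integer.valueOf(value);
            case LONG:   return Long.valueOf(value);
            case FLOAT:  return Float.valueOf(value);
            case DOUBLE: return Double.valueOf(value);
            case DATE:   return toEpochSeconds(value);
            default:     return value;                  // STRING
        }
    }

    /** Completes a partial date such as "2001" or "2001-06-27" and converts it. */
    static long toEpochSeconds(String value) {
        String complete = value.length() < DATE_DEFAULTS.length()
                ? value + DATE_DEFAULTS.substring(value.length())
                : value;
        // Assumption: dates carry no zone information and are treated as UTC.
        return LocalDateTime.parse(complete).toEpochSecond(ZoneOffset.UTC);
    }
}
```

A value that does not fit the declared type (for instance 70000 for a SHORT field) is rejected with a NumberFormatException in this sketch; how the real index reacts to such values is not specified here.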

| + | |||

| + | ===Plugin Framework=== | ||

| + | As explained in the [[Geographical/Spatial Index#GeoIndexType|GeoIndexType]] section, which fields a GeoIndex instance should contain can be dynamically specified through a GeoIndexType provided during GeoIndexManagement initialization. However, since new GeoIndexTypes can be added at any time with any number of new fields, there is no way for the GeoIndex itself to know how to use the information in such fields in any meaningful manner when processing a query; a static generic algorithm for processing such information would drastically limit the usefulness of the information. In order to allow for dynamic introduction of field evaluation algorithms capable of handling the dynamic nature of IndexTypes, a plugin framework was introduced. The framework allows for the creation of GeoIndexType-specific evaluators handling ranking and refinement. | ||

| + | |||

| + | ====Ranking==== | ||

| + | The results of a query are sorted according to their rank, and their ranks are also returned to the caller. A RankEvaluator plugin is used to determine the rank of objects. It is provided with the query region, Object data, GeoIndexType and an optional set of plugin specific arguments, and is expected to use this information in order to return a meaningful rank of each object. | ||

| + | |||

| + | ====Refinement==== | ||

| + | The GeoIndex uses TwoStep processing in order to process a query. First, a very efficient filtering step finds all possible hits (along with some false hits) using the minimal bounding rectangle (MBR) of the query region. Then, a more costly refinement step uses additional object and query information in order to eliminate all the false hits. While the filtering step is handled internally in the index, the refinement step is handled by a refiner plugin. It is provided with the query region, Object data, GeoIndexType and an optional set of plugin specific arguments, and is expected to use this information in order to determine whether an object is within a query or not. | ||
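
The two steps can be sketched in plain Java as follows. This is only an illustration of the filter-then-refine idea, not the GeoIndex code: the class TwoStepSketch is hypothetical, objects are simplified to points (the real index stores rectangles in an R-Tree), and the refinement shown is a generic ray-casting point-in-polygon test rather than an actual Refiner plugin.

```java
import java.util.ArrayList;
import java.util.List;

public class TwoStepSketch {

    /** Filtering step: keep points inside the MBR of the query polygon. */
    public static List<double[]> filter(double[][] polygon, List<double[]> points) {
        double minX = Double.POSITIVE_INFINITY, minY = Double.POSITIVE_INFINITY;
        double maxX = Double.NEGATIVE_INFINITY, maxY = Double.NEGATIVE_INFINITY;
        for (double[] v : polygon) {
            minX = Math.min(minX, v[0]);
            maxX = Math.max(maxX, v[0]);
            minY = Math.min(minY, v[1]);
            maxY = Math.max(maxY, v[1]);
        }
        List<double[]> candidates = new ArrayList<>();
        for (double[] p : points) {
            if (p[0] >= minX && p[0] <= maxX && p[1] >= minY && p[1] <= maxY) {
                candidates.add(p); // may still be a false hit
            }
        }
        return candidates;
    }

    /** Refinement step: drop candidates that are not inside the polygon itself. */
    public static List<double[]> refine(double[][] polygon, List<double[]> candidates) {
        List<double[]> hits = new ArrayList<>();
        for (double[] p : candidates) {
            if (contains(polygon, p[0], p[1])) {
                hits.add(p);
            }
        }
        return hits;
    }

    /** Ray casting: count how many polygon edges a horizontal ray crosses. */
    static boolean contains(double[][] poly, double x, double y) {
        boolean inside = false;
        for (int i = 0, j = poly.length - 1; i < poly.length; j = i++) {
            double xi = poly[i][0], yi = poly[i][1];
            double xj = poly[j][0], yj = poly[j][1];
            boolean crosses = (yi > y) != (yj > y)
                    && x < (xj - xi) * (y - yi) / (yj - yi) + xi;
            if (crosses) {
                inside = !inside;
            }
        }
        return inside;
    }
}
```

For a triangular query region with corners (0,0), (10,0) and (0,10), the point (9,9) passes the cheap MBR filter but is removed by the refinement step, which is exactly the kind of false hit the second step exists to eliminate.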

| + | |||

| + | ====Creating a Rank Evaluator==== | ||

| + | A RankEvaluator plugin has to extend the abstract class org.gcube.indexservice.geo.ranking.RankEvaluator which contains three abstract methods: | ||

| + | |||

| + | *abstract public void initialize(String args[]) -- a method called during the initialization of the RankEvaluator plugin, providing the plugin with any arguments provided in the code. All arguments are given as Strings, and it's up to the plugin to parse each string into the datatype it needs. | ||

| + | *abstract public boolean isIndexTypeCompatible(GeoIndexType indexType) -- should be able to determine whether this plugin can be used by an index conforming to the GeoIndexType argument | ||

| + | *abstract public double rank(Object entry) -- the method that calculates the rank of an entry. | ||

| + | |||

| + | |||

| + | In addition, the RankEvaluator abstract class implements two other methods worth noting: | ||

| + | *final public void init(Polygon polygon, InclusionType containmentMethod, GeoIndexType indexType, String args[]) -- initializes the protected variables Polygon polygon, Envelope envelope, InclusionType containmentMethod and GeoIndexType indexType, before calling initialize() using the last argument. This means that all four protected variables are available in the initialize() method. | ||

| + | *protected Object getDataField(String field, Data data) -- a method used to retrieve the contents of a specific GeoIndexType field from an org.geotools.index.Data object conforming to the GeoIndexType used by the plugin. | ||

| + | |||

| + | |||

| + | Ok, simple enough... So let's create a RankEvaluator plugin. We'll assume that for a certain use case, entries which span a long period of time are of less interest than entries which span a short period of time. Since we're dealing with time spans, we'll assume that the data stored in the index will have a "StartTime" field and an "EndTime" field, in accordance with the [[Geographical/Spatial Index#GeoIndexType|GeoIndexType]] created earlier. | ||

| + | |||

| + | The first thing we need to do, is to create a class which extends RankEvaluator: | ||

| + | <pre> | ||

| + | package org.mojito.ranking; | ||

| + | import org.gcube.indexservice.geo.ranking.RankEvaluator; | ||

| + | |||

| + | public class SpanSizeRanker extends RankEvaluator{ | ||

| + | |||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | Next, we'll implement the isIndexTypeCompatible method. To do this, we need a way of determining whether the fields we need are present in the GeoIndexType argument. Luckily, GeoIndexType contains a method called ''containsField'' which expects the String name and the GeoIndexField.DataType (date, double, float, int, long, short or string) of the field in question as arguments. In addition, we'll implement the initialize() method, which we'll leave empty as the plugin we are creating doesn't need to handle any arguments. | ||

| + | <pre> | ||

| + | package org.mojito.ranking; | ||

| + | |||

| + | import org.gcube.indexservice.common.GeoIndexField; | ||

| + | import org.gcube.indexservice.common.GeoIndexType; | ||

| + | import org.gcube.indexservice.geo.ranking.RankEvaluator; | ||

| + | |||

| + | public class SpanSizeRanker extends RankEvaluator{ | ||

| + | public void initialize(String[] args) {} | ||

| + | |||

| + | public boolean isIndexTypeCompatible(GeoIndexType indexType) { | ||

| + | return indexType.containsField("StartTime", GeoIndexField.DataType.DATE) && | ||

| + | indexType.containsField("EndTime", GeoIndexField.DataType.DATE); | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | Last, but not least, we need to implement the rank() method. This is the method which calculates a rank for an entry, based on the query polygon, any extra arguments and the different fields of the entry. In our implementation, we'll simply calculate the time span and divide 1 by this number (plus one, to avoid division by zero) in order to get a quick and dirty rank. Keep in mind that this method is not called for all the entries resulting from the R-Tree filtering step, but only for a subset roughly fitting the resultset page size. This means that somewhat computationally heavy operations can be performed (if needed) without drastically lowering response time. Please also note how the getDataField() method is used in order to retrieve the evaluated fields from the entry data, and how the result is cast to ''Long'' (even though we are dealing with dates). The reason for this is that the GeoIndex internally represents a date as a long containing the number of seconds from the Epoch. If we wanted to evaluate the Minimal Bounding Rectangle (MBR) of an entry, we could access it through ''entry.getBounds()''. | ||

| + | <pre> | ||

| + | package org.mojito.ranking; | ||

| + | |||

| + | import org.gcube.indexservice.common.GeoIndexField; | ||

| + | import org.gcube.indexservice.common.GeoIndexType; | ||

| + | import org.gcube.indexservice.geo.ranking.RankEvaluator; | ||

| + | import org.geotools.index.Data; | ||

| + | import org.geotools.index.rtree.Entry; | ||

| + | |||

| + | |||

| + | public class SpanSizeRanker extends RankEvaluator{ | ||

| + | public void initialize(String[] args) {} | ||

| + | |||

| + | public boolean isIndexTypeCompatible(GeoIndexType indexType) { | ||

| + | return indexType.containsField("StartTime", GeoIndexField.DataType.DATE) && | ||

| + | indexType.containsField("EndTime", GeoIndexField.DataType.DATE); | ||

| + | } | ||

| + | |||

| + | public double rank(Object obj){ | ||

| + | Entry entry = (Entry)obj; | ||

| + | Data data = (Data)entry.getData(); | ||

| + | Long entryStartTime = (Long) this.getDataField("StartTime", data); | ||

| + | Long entryEndTime = (Long) this.getDataField("EndTime", data); | ||

| + | long spanSize = entryEndTime - entryStartTime; | ||

| + | |||

| + | return 1.0 / (spanSize + 1); // floating-point division; 1/(spanSize + 1) on longs would truncate to 0 | ||

| + | } | ||

| + | |||

| + | } | ||

| + | </pre> | ||

| + | |||

| + | |||

| + | And there we are! Our first working RankEvaluator plugin. | ||
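
One detail of the rank formula deserves a closer look: both operands of the division are integral, so in Java the quotient is only meaningful when the division is carried out in floating point. The following standalone sketch (the class RankArithmeticSketch is hypothetical and independent of the RankEvaluator API) contrasts the two variants for a span given as seconds from the Epoch.

```java
public class RankArithmeticSketch {

    /** Rank as intended: shorter spans yield values closer to 1. */
    public static double rank(long startSeconds, long endSeconds) {
        long spanSize = endSeconds - startSeconds;
        return 1.0 / (spanSize + 1); // floating-point division
    }

    /** Same formula with integer division: truncates toward zero. */
    public static double truncatedRank(long startSeconds, long endSeconds) {
        long spanSize = endSeconds - startSeconds;
        return 1 / (spanSize + 1); // long division, then widened to double
    }
}
```

With integer division every entry whose span is longer than zero seconds receives rank 0, so all such entries would tie; the floating-point variant keeps them ordered by span length.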

| + | |||

| + | ====Creating a Refiner==== | ||

| + | A Refiner plugin has to extend the abstract class org.diligentproject.indexservice.geo.refinement.Refiner which contains three abstract methods: | ||

| + | |||

| + | *abstract public void initialize(String args[]) -- a method called during the initialization of the Refiner plugin, providing the plugin with any arguments provided in the code. All arguments are given as Strings, and it's up to the plugin to parse each string into the datatype it needs. | ||

| + | *abstract public boolean isIndexTypeCompatible(GeoIndexType indexType) -- should be able to determine whether this plugin can be used by an index conforming to the GeoIndexType argument | ||

| + | *abstract public List<Entry> refine(List<Entry> entries); -- the method responsible for refining a list of results. | ||

| + | |||

| + | |||

| + | In addition, the Refiner abstract class implements two other methods worth noting: | ||