File-Based Access

Latest revision as of 12:30, 11 September 2013

Part of the Data Access and Storage Facilities, a cluster of components within the system focuses on standards-based and structured access to, and storage of, files of arbitrary size.

This document outlines their design rationale, key features, and high-level architecture, as well as the options for their deployment.


Overview

Access and storage of unstructured bytestreams, or files, can be provided through a standards-based, POSIX-like API which supports the organisation and operations normally associated with local file systems whilst offering scalable and fault-tolerant remote storage.

The API and remote storage are provided by a set of components, most notably a client library and a service based on a range of site-local back-ends, including MongoDB and Terrastore.

The library acts as a facade to the service and allows clients to download, upload, remove, add, and list files.

Files have owners and owners may define access rights to files, allowing private, public, or group-based access.

Through the use of metadata, the library supports a hierarchical organisation of the data on top of the flat storage provided by the service's back-ends.
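The idea of layering folders over flat storage can be illustrated with a minimal sketch. It is not the library's implementation: `FlatStore`, `Entry`, `put_file` and `list_dir` are hypothetical names, and a plain dictionary stands in for the back-end. Folders exist only as metadata records keyed by their full path.

```python
from dataclasses import dataclass


@dataclass
class Entry:
    """Metadata record kept for every object in the flat store (hypothetical)."""
    path: str      # full logical path, e.g. "/reports/2013/q1.csv"
    is_dir: bool   # True for a folder record, False for a file


class FlatStore:
    """Hypothetical flat store: one dictionary keyed by full path, no nesting."""

    def __init__(self):
        self._entries = {"/": Entry("/", True)}

    def put_file(self, path):
        # Create any missing ancestor folders as metadata-only records.
        parts = path.strip("/").split("/")
        for depth in range(1, len(parts)):
            folder = "/" + "/".join(parts[:depth])
            self._entries.setdefault(folder, Entry(folder, True))
        self._entries[path] = Entry(path, False)

    def list_dir(self, folder):
        # A direct child is an entry under the folder prefix with no further "/".
        prefix = folder.rstrip("/") + "/"
        children = []
        for path in self._entries:
            rest = path[len(prefix):]
            if path != "/" and path.startswith(prefix) and "/" not in rest:
                children.append(rest)
        return sorted(children)
```

Listing a folder is then a scan over the flat keys, so the back-end never needs to know that folders exist.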

Key features

The subsystem has the following features:

- structured file storage: clients can create folder hierarchies, where folders are encoded as file metadata and do not require direct support in the storage back-end;

- secure file storage: file access is checked against access rights set by file owners, including private, group, and public access rights;

- scalable file storage: files are stored in chunks and chunks are distributed across clusters of servers based on the workload of individual servers;

- fault-tolerant file storage: files are asynchronously replicated across the servers of a cluster for data recovery and redundancy.
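As an illustration of the owner-defined access rights, the following sketch shows one way a private, group, or public check could be expressed. The names `Access`, `FileMeta` and `can_read` are assumptions made for this example and are not taken from the library.

```python
from dataclasses import dataclass, field
from enum import Enum


class Access(Enum):
    PRIVATE = "private"
    GROUP = "group"
    PUBLIC = "public"


@dataclass
class FileMeta:
    """Hypothetical per-file metadata carrying the owner's access choice."""
    owner: str
    access: Access = Access.PRIVATE
    group: frozenset = field(default_factory=frozenset)  # users allowed under GROUP


def can_read(meta, user):
    """Return True if `user` may read the file described by `meta`."""
    if user == meta.owner:
        return True                   # owners always reach their own files
    if meta.access is Access.PUBLIC:
        return True                   # public files are open to everyone
    if meta.access is Access.GROUP:
        return user in meta.group     # group files are open to listed members
    return False                      # private files are owner-only
```

Keeping the rule in the metadata means the check can run in the library before any request reaches the storage back-end.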

Design

Philosophy

Navigating through folders on a remote storage system, downloading and uploading files, a familiar POSIX-like interface, and a scalable and fault-tolerant storage back-end: these are the key design goals for the subsystem. The library has been designed to present a unified interface that matches the generality of these goals and shields clients from the variety of File Storage Service back-ends. Its two layers, a core library and a wrapper library, allow the library to be used either in standalone mode or within the gCube framework.

Architecture

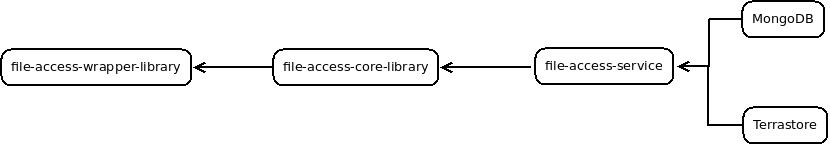

The library is divided into two layers: a core library and a wrapper library. The core library is for general-purpose use outside the gCube framework. The wrapper library is intended for use inside the gCube framework. The interaction between the two layers is what allows the library to be used within gCube: the wrapper library queries the IS to discover the server resources that the core library will then use. The core library interacts with a File Storage Service back-end, which is responsible for storing the data. Two kinds of File Storage Service are currently supported: Terrastore and MongoDB.
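The split between the two layers can be sketched as follows. This is only an illustration of the layering, not the actual API: `BackendConfig`, `CoreClient`, `WrapperClient` and the `discover` callable (which stands in for a query to the IS) are all hypothetical.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class BackendConfig:
    """Hypothetical description of the storage servers to use."""
    hosts: tuple
    backend_type: str  # e.g. "MongoDB" or "Terrastore"


class CoreClient:
    """Core layer: takes an explicit configuration and knows nothing of gCube."""

    def __init__(self, config):
        self.config = config

    def describe(self):
        return f"{self.config.backend_type} on {', '.join(self.config.hosts)}"


class WrapperClient:
    """Wrapper layer: obtains the configuration by discovery, then delegates."""

    def __init__(self, discover):
        # `discover` is an assumed callable that returns a BackendConfig.
        self.core = CoreClient(discover())

    def describe(self):
        return self.core.describe()
```

In standalone mode a caller builds `BackendConfig` by hand and uses `CoreClient` directly; inside the framework only the discovery step differs.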

File based access is provided by the following components:

- Core library: implements a high-level facade to the remote APIs of the File Storage Service. The core communicates directly with the File Storage Service that is responsible for storing data. This layer is responsible for splitting files into chunks when their size exceeds a certain threshold and for building metadata such as owner, object type (file or directory), and access permissions. It also issues the commands to the File Storage Service that build the folder tree from metadata, where needed.

- Wrapper library: the wrapper library for the gCube framework. Its task is to harvest the configuration resources made available in the gCube framework and pass them to the core library.

- File Storage Service: the service responsible for remote data storage. It is invoked by the core library and can be based on different technologies, such as MongoDB or Terrastore.
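The chunking and metadata responsibilities of the core library can be sketched as below. The threshold value, the function names and the metadata field names are assumptions chosen for the example; the source does not specify them.

```python
def split_into_chunks(data, chunk_size):
    """Split `data` into pieces of at most `chunk_size` bytes.

    Data no larger than the threshold is returned as a single chunk.
    """
    if chunk_size <= 0:
        raise ValueError("chunk_size must be positive")
    if len(data) <= chunk_size:
        return [data]
    return [data[i:i + chunk_size] for i in range(0, len(data), chunk_size)]


def build_metadata(name, owner, data, chunk_size, access="private"):
    """Build a hypothetical metadata record for a file about to be stored."""
    return {
        "name": name,
        "owner": owner,
        "type": "file",            # the other object type would be "directory"
        "access": access,
        "size": len(data),
        "chunks": len(split_into_chunks(data, chunk_size)),
    }
```

Concatenating the chunks in order restores the original bytes, which is what a download has to do on the way back.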

The following diagram illustrates the dependencies between the components of the subsystem:

Deployment

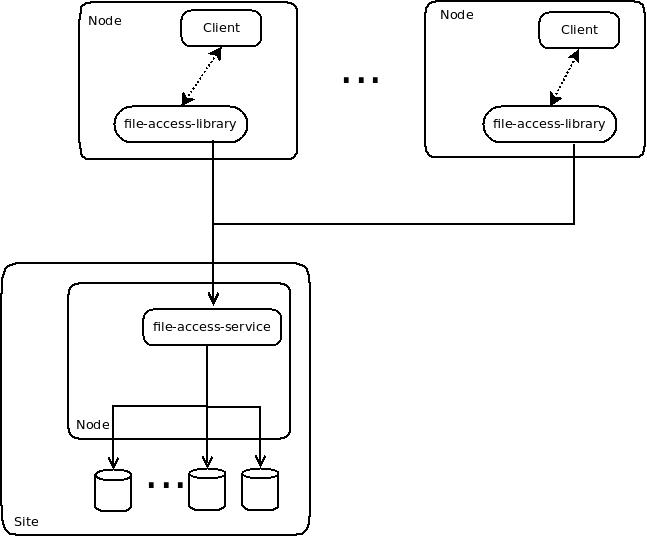

Deploying this library is mainly a matter of installing the File Storage Service. The service is installed statically and has no capability to scale dynamically with the request load, so it is very important to choose the installation that fits the expected needs. Adding servers dedicated to data storage not only increases storage capacity but also improves the balancing of data and therefore response time. On the other hand, if storage requirements are modest and the number of servers is large, resources are wasted because they remain underused.

Large Deployment

A large deployment consists of an installation of a cluster of servers dedicated to storage. Our current implementation uses a MongoDB File Storage Service. The current version is 2.0.1. The servers are organized into a MongoDB replica set cluster: replica sets are a form of asynchronous master/slave replication that adds automatic failover and automatic recovery of the cluster's member nodes. In a production situation, the replica set ensures high availability, automated failover, data redundancy and disaster recovery.
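A client usually reaches a replica set by listing several members in one MongoDB connection string and naming the set with the `replicaSet` option. The helper below only assembles such a string; the host names, the set name `rs0` and the function name are placeholders invented for the example, and the exact options accepted should be checked against the driver documentation for the MongoDB version in use.

```python
def replica_set_uri(hosts, replica_set, port=27017):
    """Build a MongoDB connection string that lists every replica set member.

    `hosts` and `replica_set` are illustrative; 27017 is MongoDB's default port.
    """
    if not hosts:
        raise ValueError("at least one host is required")
    members = ",".join(f"{host}:{port}" for host in hosts)
    return f"mongodb://{members}/?replicaSet={replica_set}"
```

Listing more than one member lets the client still find the set when one of the listed servers is down.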

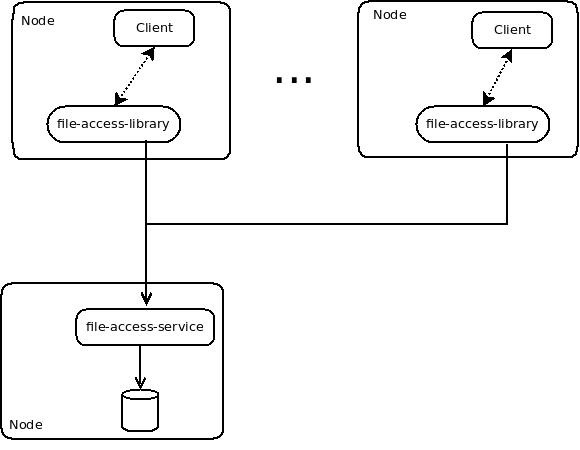

Small Deployment

A small deployment consists of an installation of a single server dedicated to storage. In this case the installation is very simple, but failover, data replication, and horizontal scalability are not guaranteed. Given all this, we do not think that a single-server deployment is the best way to get true durability. We think the right path to durability is replication across many nodes, which is why our current deployment is a large deployment.

Use Cases

Well suited use cases

The subsystem is particularly suited to sharing and storing a large number of files.

The core library can operate in standalone mode, without the wrapper library. This allows the library to be used in environments other than gCube.

In theory, files could even be shared across different environments, provided that the clients share login credentials and resources.

The library can handle large files without loss of performance, because files are managed in chunks.

Less well suited use cases

Scalability is achieved only through static deployments of larger clusters. If more resources are needed than those provided at deployment time, they can only be added statically.

The current installation, based on the MongoDB back-end, works well with small and large files. It suffers a performance loss on very small files, those smaller than 2 KB. MongoDB also incurs a little more space overhead than storing the files directly on the filesystem.