FHNManager Installation


Revision as of 17:03, 5 May 2016

The Federated Hosting Node Manager (FHNM) is the core part of the gCube-FedCloud integration.


Usage

Maven coordinates

The Maven artifact coordinates are:

```xml
<dependency>
  <groupId>org.gcube.resources.federation</groupId>
  <artifactId>fhn-manager-service</artifactId>
  <version>1.0.0-SNAPSHOT</version>
  <!-- a dependency on a WAR artifact is declared with <type>, not <packaging> -->
  <type>war</type>
</dependency>
```

Deployment

Deploy the service's WAR file into the SmartGears container (the Tomcat webapps folder).

Authentication

To use the Federated Cloud, a certificate must be registered to join one of the cloud's Virtual Organisations (VOs). When integrating with EGI, a VOMS proxy must be generated to obtain the authorization attributes embedded in the X.509 proxy certificates needed to access FedCloud sites.

Ideally, the VRE Manager should import the credentials of their own VRE directly from the gCube IS. The service already provides an interface for future integration with the IS, but currently it uses the VRE Manager's certificates. More precisely, it uses a second-level proxy of the manager's certificate: the first-level one is stored, encrypted, in the gCube Information System; the second-level one is generated by the service just before accessing FedCloud, is very short-lived, and is usually stored in the /tmp folder.

Most, if not all, gCube users do not own an X.509 certificate: they access the gCube portal with a username and password, and the use of external infrastructures is completely transparent to them. The gCube Information System will contain the .pem certificate associated with each VRE or Infrastructure Manager, and the service will use these certificates to create a VOMS proxy at runtime in order to interact with FedCloud. If no certificate is provided, no interaction with FedCloud is allowed.
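The short-lived proxy in /tmp normally follows the standard VOMS/Globus naming convention, which is why paths such as /tmp/x509up_u1004 appear in the configuration. The sketch below shows that lookup, assuming the common rule that the X509_USER_PROXY environment variable overrides the default /tmp/x509up_u<uid> location. ProxyLocator is a hypothetical helper, not part of the FHNManager code.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.Map;

// Hypothetical helper: locates a user's X.509 proxy following the usual VOMS/Globus convention.
final class ProxyLocator {

    private ProxyLocator() {}

    /**
     * Returns the expected location of the X.509 proxy certificate.
     *
     * @param env environment variables (System.getenv() in real code)
     * @param uid numeric Unix user ID of the account that generated the proxy
     */
    static Path resolve(Map<String, String> env, long uid) {
        // An explicit X509_USER_PROXY setting takes precedence over the default location
        String override = env.get("X509_USER_PROXY");
        if (override != null && !override.isBlank()) {
            return Path.of(override);
        }
        // Default location: /tmp/x509up_u<uid>
        return Path.of("/tmp", "x509up_u" + uid);
    }

    /** True if a proxy file is present at the resolved location. */
    static boolean proxyAvailable(Map<String, String> env, long uid) {
        return Files.isRegularFile(resolve(env, uid));
    }
}
```

With X509_USER_PROXY unset, `ProxyLocator.resolve(System.getenv(), 1004)` points to /tmp/x509up_u1004; since the proxy is short-lived, checking `proxyAvailable` just before contacting FedCloud avoids using a path whose file has not been generated yet.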

Details about installing the VOMS clients on your system are available at [How to use the rOCCI Client].

Configuration Steps

To configure the service correctly, follow the subsections below:

Target definition

First, configure the target cloud platforms. To do so, edit the vmproviders.yml file (available in the ../classes/ folder) and insert the provider data as follows (use a list to add multiple resources):

```yaml
---
credentials: {type: x509,
  encodedCredentails: /tmp/x509up_u1004}
endpoint: https://carach5.ics.muni.cz:11443/
id: 4-1
name: Cesnet-Metacloud
resourceTemplates:
nodeTemplates:
- refId: 3-1
---
```

The credentials specify:

- the path to the second-level proxy certificate

- the type (x509)

Other fields are related to:

- the endpoint (see [AppDb] for a list of sites exposing OCCI and supporting the gCube SmartExecutor Virtual Appliance)

- the provider ID

- the provider name

- the references to the node templates (defined in the nodetemplates.yml file)
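To make the fields above concrete, the following sketch maps one vmproviders.yml entry onto Java records (Java 16 or later). The record names, the check that the credential type is x509 and the requirement of an https endpoint are assumptions drawn from the example, not the actual FHNManager domain classes; the YAML key encodedCredentails is mirrored verbatim.

```java
import java.net.URI;
import java.util.List;

// Hypothetical view of the "credentials" block of a vmproviders.yml entry.
record Credentials(String type, String encodedCredentails) {}

// Hypothetical view of one vmproviders.yml entry; field names mirror the YAML keys.
record VmProvider(String id, String name, URI endpoint,
                  Credentials credentials, List<String> nodeTemplateRefIds) {

    VmProvider {
        // Only x509 credentials are described in this guide
        if (!"x509".equals(credentials.type())) {
            throw new IllegalArgumentException("unsupported credential type: " + credentials.type());
        }
        // Assumption: the OCCI endpoint is reached over https, as in the example
        if (!"https".equals(endpoint.getScheme())) {
            throw new IllegalArgumentException("expected an https endpoint: " + endpoint);
        }
        // Defensive, immutable copy of the node template references (the refId values)
        nodeTemplateRefIds = List.copyOf(nodeTemplateRefIds);
    }

    /** True if this provider references the given node template ID (e.g. "3-1"). */
    boolean offersNodeTemplate(String nodeTemplateId) {
        return nodeTemplateRefIds.contains(nodeTemplateId);
    }
}
```

For the Cesnet-Metacloud entry, `new VmProvider("4-1", "Cesnet-Metacloud", URI.create("https://carach5.ics.muni.cz:11443/"), new Credentials("x509", "/tmp/x509up_u1004"), List.of("3-1"))` offers node template 3-1 only.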

The resource templates describe the resources (memory, number of cores, etc.). In this case the field is left blank: the templates are currently retrieved directly from the fhn-occi-connector (and, as a GUI requirement, stored manually in the ResourceTemplates.yml file). A caching system may be considered in future releases. An example of the resource template representation is shown below:

```xml
<resourceTemplates>
  <resourceTemplate>
    <id>http://fedcloud.egi.eu/occi/compute/flavour/1.0#mem_small</id>
    <cores>1</cores>
    <memory>4294967296</memory>
    <name>Small Instance - 1 core and 4 GB RAM</name>
    <vmProvider refId="4-1"/>
  </resourceTemplate>
</resourceTemplates>
```
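The memory field is expressed in bytes (4294967296 bytes is 4 GiB, matching the "4 GB RAM" label). As a minimal sketch, assuming the XML has exactly the shape shown, the JDK's DOM parser can read such entries; ResourceTemplateInfo and ResourceTemplateReader are hypothetical names used only for illustration.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

// Hypothetical value object for one <resourceTemplate> element.
record ResourceTemplateInfo(String id, int cores, long memoryBytes, String name, String vmProviderRefId) {
    /** Memory in GiB (2^30 bytes). */
    double memoryGiB() {
        return memoryBytes / (1024.0 * 1024 * 1024);
    }
}

final class ResourceTemplateReader {
    private ResourceTemplateReader() {}

    static List<ResourceTemplateInfo> parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            // Exact tag-name match: <resourceTemplates> itself is not returned
            NodeList templates = doc.getElementsByTagName("resourceTemplate");
            List<ResourceTemplateInfo> result = new ArrayList<>();
            for (int i = 0; i < templates.getLength(); i++) {
                Element t = (Element) templates.item(i);
                Element provider = (Element) t.getElementsByTagName("vmProvider").item(0);
                result.add(new ResourceTemplateInfo(
                        text(t, "id"),
                        Integer.parseInt(text(t, "cores")),
                        Long.parseLong(text(t, "memory")),
                        text(t, "name"),
                        provider == null ? null : provider.getAttribute("refId")));
            }
            return result;
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("cannot parse resource templates", e);
        }
    }

    // Trimmed text content of the first descendant element with the given tag name
    private static String text(Element parent, String tag) {
        return parent.getElementsByTagName(tag).item(0).getTextContent().trim();
    }
}
```

Parsing the example yields one template with 1 core, 4.0 GiB of memory and vmProvider reference 4-1.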

Define OSTemplate

Node templates must be defined in the nodetemplates.yml file (available in the ../classes/ folder):

```yaml
---
id: 3-1
os: name
osVersion:
name:
description:
version:
diskSize:
script: https://appdb.egi.eu/storage/cs/vapp/15819120-7ee4-4b85-818a-d9bd755a61f0/devsec-init
osTemplateId: http://occi.carach5.ics.muni.cz/occi/infrastructure/os_tpl#uuid_gcubesmartexecutor_fedcloud_warg_139
serviceProfile:
  refId: 2-1
```

Fields of interest are the following:

- the Node template ID

- the URL of the contextualisation script used to instantiate a virtual machine that can be monitored by the D4Science infrastructure

- the ID of the OS template image, obtained from [AppDb] (here, only the SmartExecutor image is considered)

- the reference to service profile
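Both the osTemplateId above and the resource template IDs are OCCI category identifiers, which the OCCI Core specification defines as the concatenation of a scheme (a URI ending in '#') and a term. The sketch below splits such an identifier on its last '#'; OcciCategory is a hypothetical helper for illustration only.

```java
// Hypothetical helper: an OCCI category identifier is scheme + term,
// where the scheme is a URI that ends with '#'.
record OcciCategory(String scheme, String term) {

    static OcciCategory parse(String identifier) {
        int hash = identifier.lastIndexOf('#');
        if (hash < 0 || hash == identifier.length() - 1) {
            throw new IllegalArgumentException("not a scheme#term identifier: " + identifier);
        }
        // The scheme keeps its trailing '#'; the term is everything after it
        return new OcciCategory(identifier.substring(0, hash + 1), identifier.substring(hash + 1));
    }

    /** Rebuilds the full identifier (scheme followed by term). */
    String identifier() {
        return scheme + term;
    }
}
```

For the SmartExecutor image, the scheme is http://occi.carach5.ics.muni.cz/occi/infrastructure/os_tpl# and the term is uuid_gcubesmartexecutor_fedcloud_warg_139.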

Define Service Profile

According to the domain model, the service profile defines the software running on the instance. It must be defined in the serviceprofiles.yml file (available in the ../classes/ folder). An example of a service profile definition is shown below:

```yaml
---
deployedSoftware:
- refId: s2
description: gCube Smart Executor
creationDate: 06-Oct-2015
version: 1.2.0
id: 2-1
minRam: 4294967296
minCores: 1
suggestedRam: 8589934592
suggestedCores: 2
---
```

Edit properties file

Edit the service.properties file available in the WEB-INF/classes folder and replace the path in STORAGE_DIR: /home/"user_name"/fhnmanager with a path under the user's home directory (e.g., STORAGE_DIR: /home/ngalante/fhnmanager). This folder will hold the persisted nodes created through the service.

An example of nodes created by the service and stored in the nodes.yml file is shown below:

```yaml
---
!!org.gcube.resources.federation.fhnmanager.api.type.Node
hostname: hostname
id: 4-1@https://carach5.ics.muni.cz:11443/compute/68051
nodeTemplate: {refId: 3-1}
resourceTemplate: {refId: 'http://fedcloud.egi.eu/occi/compute/flavour/1.0#small'}
serviceProfile: {refId: 2-1}
status: waiting
vmProvider: {refId: 4-1}
workload: {allTimeAverageWorkload: 0.5, lastDayWorkload: 0.7, lastHourWorkload: 0.2,
  nowWorkload: 0.8}
---
!!org.gcube.resources.federation.fhnmanager.api.type.Node
hostname: stoor180.meta.zcu.cz
id: 4-1@https://carach5.ics.muni.cz:11443/compute/68102
nodeTemplate: {refId: 3-1}
resourceTemplate: {refId: 'http://fedcloud.egi.eu/occi/compute/flavour/1.0#small'}
serviceProfile: {refId: 2-1}
status: active
vmProvider: {refId: 4-1}
workload: {allTimeAverageWorkload: 0.5, lastDayWorkload: 0.7, lastHourWorkload: 0.2,
  nowWorkload: 0.8}
---
!!org.gcube.resources.federation.fhnmanager.api.type.Node
hostname: stoor154.meta.zcu.cz
id: 4-1@https://carach5.ics.muni.cz:11443/compute/68942
nodeTemplate: {refId: 3-1}
resourceTemplate: {refId: 'http://fedcloud.egi.eu/occi/compute/flavour/1.0#small'}
serviceProfile: {refId: 2-1}
status: suspended
vmProvider: {refId: 4-1}
#workload: null
```

Since nodes are created, started, stopped and deleted via the fhn-occi-connector, it can take some time before consistent information is available (e.g., the status attribute is initially set to "waiting" and only later becomes "active"). To address this, the service implements a synchronization mechanism between the data retrieved from the connector and the persistence currently stored locally by the service (in the future, this persistence will be stored directly in the gCube Information System).
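The synchronization idea can be sketched as a merge of node statuses keyed by node ID. This is only an illustration under an assumed policy (the connector's status wins for every node it reports; nodes it does not report keep their last persisted status); NodeStatusSync and reconcile are hypothetical names, not the service's actual implementation.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch of status reconciliation between local persistence and the connector.
final class NodeStatusSync {
    private NodeStatusSync() {}

    /**
     * @param persisted     node ID -> status currently stored by the service (e.g. from nodes.yml)
     * @param fromConnector node ID -> status just retrieved from the connector
     * @return a new map; the persisted map is left unchanged
     */
    static Map<String, String> reconcile(Map<String, String> persisted, Map<String, String> fromConnector) {
        // Copy so the caller's persisted view is not modified in place
        Map<String, String> merged = new LinkedHashMap<>(persisted);
        // Assumed policy: the connector is the source of truth for every node it reports;
        // nodes unknown locally are ignored here
        merged.replaceAll((nodeId, status) -> fromConnector.getOrDefault(nodeId, status));
        return merged;
    }
}
```

With this policy, a node persisted as "waiting" becomes "active" once the connector reports it as active, while a node the connector did not report keeps its stored status.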

Client installation

A REST client library has been developed to test the functionalities offered by the service.

Maven coordinates

```xml
<dependency>
  <groupId>org.gcube.resources.federation</groupId>
  <artifactId>fhn-manager-client</artifactId>
  <version>1.0.0-SNAPSHOT</version>
</dependency>
```

Client Testing

The REST API calls can be performed through the ClientTest.java class:

```java
// Set the scope of interest
ScopeProvider.instance.set("/gcube/devsec");

// Either build the client against an explicit endpoint (hostname and port are placeholders)...
FHNManager client = FHNManagerProxy.getService(
        new URL("http://" + hostname + ":" + port + "/fhn-manager-service/rest")).build();
// ...or build it without an explicit URL:
// FHNManagerClient client = FHNManagerProxy.getService().build();

// vmProviderId, serviceProfileId, resourceTemplateId and nodeId are placeholders to set beforehand
client.allServiceProfiles();
client.createNode(vmProviderId, serviceProfileId, resourceTemplateId);
client.findNodes(vmProviderId, serviceProfileId);
client.findResourceTemplate(vmProviderId);
client.findVMProviders(serviceProfileId);
client.getNodeById(nodeId);
client.getVMProviderbyId(vmProviderId);
client.startNode(nodeId);
client.stopNode(nodeId);
client.deleteNode(nodeId);
```

Alternatively, the functionalities (which will later be exposed through the GUI) can be tested directly from a browser by accessing:

```
http://hostname:port/fhn-manager-service/rest/serviceprofiles
http://hostname:port/fhn-manager-service/rest/nodes/create?vmProviderId=""&serviceProfileId=""&resourceTemplateId=""
http://hostname:port/fhn-manager-service/rest/nodes?vmProviderId=""&serviceProfileId=""
http://hostname:port/fhn-manager-service/rest/resourceTemplate?vmProviderid=""
http://hostname:port/fhn-manager-service/rest/vmproviders?serviceProfileId=""
http://hostname:port/fhn-manager-service/rest/nodes/nodeId
http://hostname:port/fhn-manager-service/rest/vmproviders/vmProviderid
http://hostname:port/fhn-manager-service/rest/start?NodeId=""
http://hostname:port/fhn-manager-service/rest/nodes/stop?NodeId=""
http://hostname:port/fhn-manager-service/rest/delete?NodeId=""
```
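Node IDs such as 4-1@https://carach5.ics.muni.cz:11443/compute/68051 contain '@', ':' and '/', so when typing or generating the query-string URLs above it is safer to percent-encode the values. The sketch below builds such URLs with the JDK's URLEncoder (Java 10 or later); FhnRestUrls is a hypothetical helper, and the assumption is that the service decodes query parameters in the usual way.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.stream.Collectors;

// Hypothetical helper that builds query-string URLs for the REST endpoints listed above.
final class FhnRestUrls {
    private final String baseUrl; // e.g. "http://hostname:port/fhn-manager-service/rest"

    FhnRestUrls(String baseUrl) {
        // Drop a trailing slash so paths can always be appended with "/"
        this.baseUrl = baseUrl.endsWith("/") ? baseUrl.substring(0, baseUrl.length() - 1) : baseUrl;
    }

    /** Appends the path and the form-encoded query parameters, in the map's iteration order. */
    String build(String path, Map<String, String> query) {
        String qs = query.entrySet().stream()
                .map(e -> enc(e.getKey()) + "=" + enc(e.getValue()))
                .collect(Collectors.joining("&"));
        return baseUrl + "/" + path + (qs.isEmpty() ? "" : "?" + qs);
    }

    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }
}
```

For example, building "nodes/stop" with NodeId set to the node ID above produces a query value of 4-1%40https%3A%2F%2Fcarach5.ics.muni.cz%3A11443%2Fcompute%2F68051. A LinkedHashMap keeps multi-parameter queries in a predictable order.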

An example of a running service is available at: http://fedcloud.res.eng.it/fhn-manager-service/rest/*

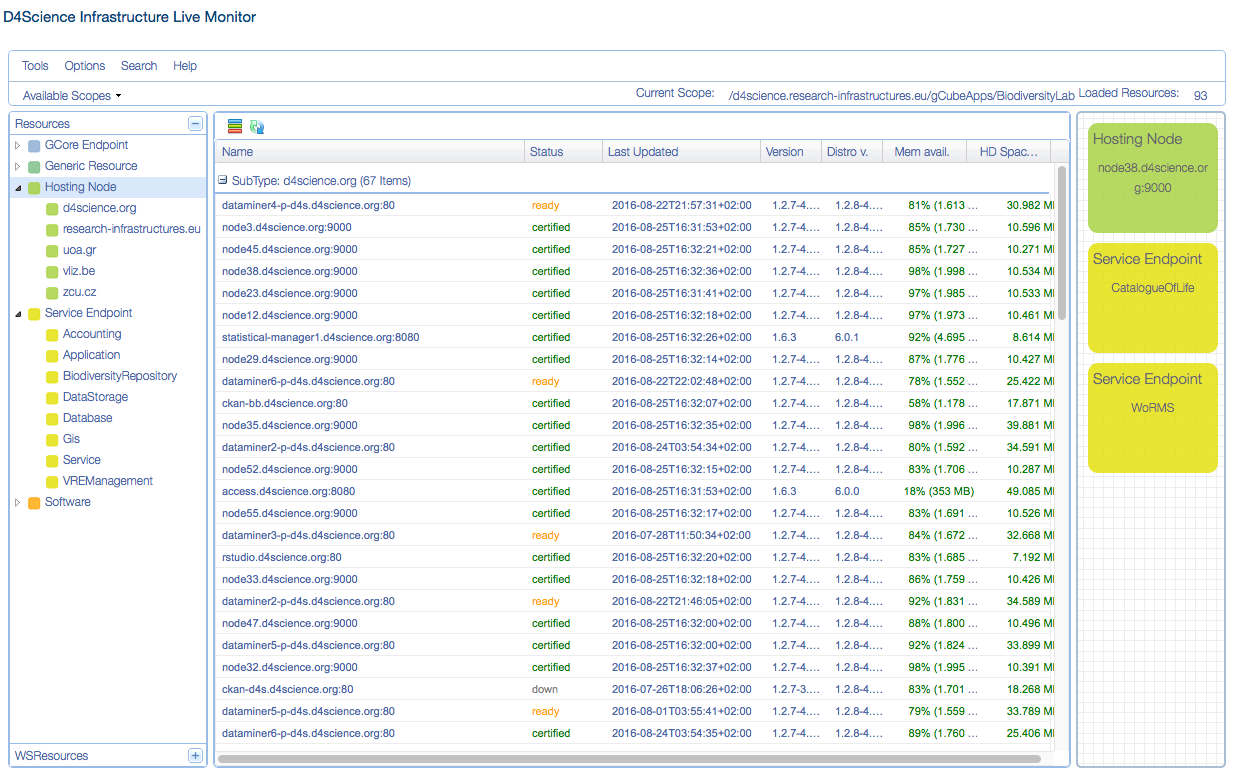

Nodes Monitoring

The created instances will appear in the D4Science monitoring system. The collected data are analysed to raise alarms and/or take countermeasures in case of problems with one or more resources.