Data Transfer 2


Overview

The implementation of reliable data transfer mechanisms between the nodes of a gCube-based Hybrid Data Infrastructure is one of the main objectives when dealing with large sets of multi-type datasets distributed across different repositories.

To promote the efficient and optimized consumption of these data resources, a number of components have been designed to meet these data transfer requirements.

This document outlines the design rationale, key features and high-level architecture of these components, as well as the options for their deployment and some use cases.

Key features

The components belonging to this class are responsible for:

- point-to-point transfer
  - direct transfer invocation to a gCube Node
- reliable data transfer between Infrastructure Data Sources and Data Storages
  - by exploiting the uniform access interfaces provided by gCube and standard transfer protocols
- automatic transfer optimization
  - by exploiting the best available transfer options between invoker and target nodes
- advanced and extensible post-transfer processing
  - plugin-oriented implementation to serve advanced use cases

Design

Philosophy

In a Hybrid Data e-Infrastructure, transferring data between nodes is a crucial feature. Given the heterogeneous nature of datasets, applications, network capabilities and restrictions, efficient data transfers can be problematic. Negotiating the most efficient available transfer method is essential to achieve global efficiency, while an extensible set of post-transfer operations lets applications serve their specific needs on top of the common ones. We wanted to provide a lightweight solution that could fit the evolving nature of the infrastructure and the needs of its communities.

Architecture

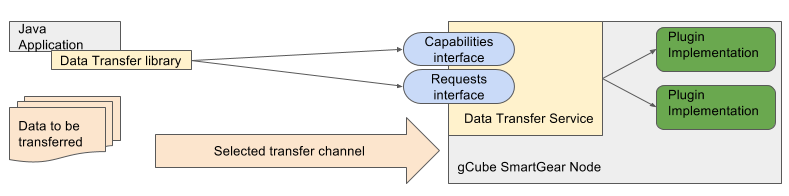

The architecture of Data Transfer follows a simple client-server model, implemented by data-transfer-library (client) and data-transfer-service (server) respectively.

The service is a simple REST server, implemented as a gCube SmartGear web application. It is designed around the plugin design pattern, so that it can be easily extended without introducing hard dependencies between the architecture's components. It also exposes interfaces to:

- get the node's capabilities in terms of available plugins and supported transfer methods;

- submit and monitor transfer requests.
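As an illustration of the first interface, the following minimal Java sketch shows how a node could assemble its capabilities from the transfer methods it supports and the plugins it has installed. All names (`TransferMethod` and its constants, `PluginDescriptor`, `NodeCapabilities`) are hypothetical and are not taken from the data-transfer-service API; the actual service exposes this information over REST.

```java
import java.util.Collections;
import java.util.EnumSet;
import java.util.List;
import java.util.Set;
import java.util.SortedMap;
import java.util.TreeMap;

// Hypothetical transfer methods; the names actually used by gCube may differ.
enum TransferMethod { LOCAL_FILE, HTTP_DOWNLOAD, FILE_UPLOAD }

// Hypothetical description of an installed post-transfer plugin.
final class PluginDescriptor {
    final String id;
    final String description;

    PluginDescriptor(String id, String description) {
        this.id = id;
        this.description = description;
    }
}

// Immutable answer to a hypothetical "get capabilities" request.
final class NodeCapabilities {
    private final Set<TransferMethod> methods;
    private final SortedMap<String, String> plugins; // plugin id -> description

    NodeCapabilities(Set<TransferMethod> methods, List<PluginDescriptor> installed) {
        // EnumSet.copyOf rejects an empty collection that is not an EnumSet, so handle that case.
        this.methods = Collections.unmodifiableSet(
                methods.isEmpty() ? EnumSet.noneOf(TransferMethod.class) : EnumSet.copyOf(methods));
        SortedMap<String, String> byId = new TreeMap<>();
        for (PluginDescriptor p : installed) {
            // Reject duplicate ids so that a request can address each plugin unambiguously.
            if (byId.putIfAbsent(p.id, p.description) != null) {
                throw new IllegalArgumentException("Duplicate plugin id: " + p.id);
            }
        }
        this.plugins = Collections.unmodifiableSortedMap(byId);
    }

    Set<TransferMethod> supportedMethods() { return methods; }

    SortedMap<String, String> availablePlugins() { return plugins; }

    boolean supports(TransferMethod method) { return methods.contains(method); }
}
```

Keeping the capabilities immutable means the same snapshot can be served to many clients without copying.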

The client is a Java library that:

- contacts the server to ask for its capabilities, in order to decide on the most efficient transfer method between the two sides;

- submits a transfer request through the selected transfer channel;

- monitors its status/outcome.
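The negotiation and monitoring steps performed by the client can be sketched as follows. This is a minimal illustration under stated assumptions, not the data-transfer-library implementation: the channel names, the client-side preference order and the `TransferStatus` states are all hypothetical. Negotiation picks the first channel in the client's preference list that the server also advertises; monitoring polls a status source until a terminal state is reached or a timeout expires.

```java
import java.util.List;
import java.util.Optional;
import java.util.Set;
import java.util.function.Supplier;

// Hypothetical transfer channels a node could advertise.
enum TransferChannel { LOCAL_FILE, HTTP_DOWNLOAD, FILE_UPLOAD }

// Hypothetical lifecycle of a submitted transfer request.
enum TransferStatus {
    QUEUED, RUNNING, SUCCESS, FAILED;

    boolean isTerminal() { return this == SUCCESS || this == FAILED; }
}

final class TransferClientSketch {

    // Returns the first client-preferred channel that the server also supports, if any.
    static Optional<TransferChannel> negotiate(List<TransferChannel> clientPreference,
                                               Set<TransferChannel> serverChannels) {
        for (TransferChannel c : clientPreference) {
            if (serverChannels.contains(c)) {
                return Optional.of(c);
            }
        }
        return Optional.empty();
    }

    // Polls the status source until a terminal state is seen or the timeout expires.
    static TransferStatus awaitOutcome(Supplier<TransferStatus> statusSource,
                                       long pollMillis, long timeoutMillis) throws InterruptedException {
        long deadline = System.nanoTime() + timeoutMillis * 1_000_000L;
        while (true) {
            TransferStatus current = statusSource.get();
            if (current.isTerminal()) {
                return current;
            }
            // Overflow-safe comparison of nanoTime values.
            if (System.nanoTime() - deadline >= 0) {
                throw new IllegalStateException("Transfer still " + current + " after timeout");
            }
            Thread.sleep(pollMillis);
        }
    }
}
```

The preference order is left entirely to the caller; an empty result means the two sides share no channel and the transfer cannot be attempted.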

Optionally, a transfer request can specify an ordered set of plugin invocations, which are executed after the transfer has been completed. These executions are monitored by the client as part of the atomic transfer process.

The picture above summarizes the described architecture.
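The ordered execution of post-transfer plugins can be illustrated with the Java sketch below. It assumes a hypothetical `PostOperation` contract and a hypothetical `RequestOutcome` report; it is not the service's actual plugin API. `run` is assumed to be called once the transfer itself has completed. Plugins run strictly in the order given by the request, and the first failure stops the chain and marks the whole request as failed, so the client sees a single outcome for the transfer and its post-operations. Plugins that already ran are not rolled back.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.Map;

// Hypothetical contract for a post-transfer operation.
interface PostOperation {
    String id();
    void execute(String transferredPath, Map<String, String> params) throws Exception;
}

// Hypothetical entry of a transfer request: which plugin to run, with which parameters.
final class PluginInvocation {
    final String pluginId;
    final Map<String, String> params;

    PluginInvocation(String pluginId, Map<String, String> params) {
        this.pluginId = pluginId;
        this.params = params;
    }
}

// Single outcome reported for all post-operations of a request.
final class RequestOutcome {
    final boolean success;
    final List<String> completedSteps; // ids of plugins that finished successfully
    final String error;                // null on success

    RequestOutcome(boolean success, List<String> completedSteps, String error) {
        this.success = success;
        this.completedSteps = Collections.unmodifiableList(completedSteps);
        this.error = error;
    }
}

final class PostTransferChain {
    private final Map<String, PostOperation> installed;

    PostTransferChain(Map<String, PostOperation> installed) {
        this.installed = installed;
    }

    // Runs the requested plugins in order; stops at the first unknown or failing plugin.
    RequestOutcome run(String transferredPath, List<PluginInvocation> invocations) {
        List<String> done = new ArrayList<>();
        for (PluginInvocation inv : invocations) {
            PostOperation op = installed.get(inv.pluginId);
            if (op == null) {
                return new RequestOutcome(false, done, "Unknown plugin: " + inv.pluginId);
            }
            try {
                op.execute(transferredPath, inv.params);
                done.add(inv.pluginId);
            } catch (Exception e) {
                return new RequestOutcome(false, done, inv.pluginId + " failed: " + e.getMessage());
            }
        }
        return new RequestOutcome(true, done, null);
    }
}
```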

Deployment

As described in the Architecture section, the Data Transfer suite is composed of three main components:

- data-transfer-library is a Java library that can be used by any other Java application, so it needs to be on the classpath of the application that uses it.

- data-transfer-service is a gCube SmartGear REST service, so it needs to be hosted in a SmartGear installation.

- plugin implementations are discovered at runtime by the service, thus they need to be deployed along with the data-transfer-service itself.
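Runtime discovery of plugins deployed next to the service can be sketched with the JDK's standard `java.util.ServiceLoader`. Whether data-transfer-service actually relies on `ServiceLoader` is an assumption made only for this example, and the `DiscoverablePlugin` interface and `PluginRegistry` class are hypothetical. With this mechanism, a plugin jar placed on the service's classpath lists its implementation class (which needs a public no-argument constructor) in a `META-INF/services/` file named after the interface, and the service picks it up without any compile-time dependency on the plugin.

```java
import java.util.Collections;
import java.util.Map;
import java.util.ServiceLoader;
import java.util.TreeMap;

// Hypothetical service-provider interface that every plugin jar would implement.
interface DiscoverablePlugin {
    String id();
}

final class PluginRegistry {
    private final Map<String, DiscoverablePlugin> byId;

    private PluginRegistry(Map<String, DiscoverablePlugin> byId) {
        this.byId = Collections.unmodifiableMap(byId);
    }

    // Builds a registry from any source of plugins, rejecting duplicate ids.
    static PluginRegistry of(Iterable<? extends DiscoverablePlugin> plugins) {
        Map<String, DiscoverablePlugin> found = new TreeMap<>();
        for (DiscoverablePlugin p : plugins) {
            if (found.putIfAbsent(p.id(), p) != null) {
                throw new IllegalStateException("Duplicate plugin id: " + p.id());
            }
        }
        return new PluginRegistry(found);
    }

    // Discovers implementations declared in META-INF/services files on the classpath.
    static PluginRegistry discover() {
        return of(ServiceLoader.load(DiscoverablePlugin.class));
    }

    Map<String, DiscoverablePlugin> plugins() { return byId; }
}
```

Separating `of` from `discover` keeps the registry testable without packaging real plugin jars.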

Large Deployment

Small Deployment

Use Cases

Well suited use cases

Data Transfer is designed mainly for point-to-point transfers, so it is best suited for moving datasets to where they need to be stored and processed. The automatic negotiation of the transfer method helps mostly in situations where the remote nodes sit in heterogeneous networks with their own restrictions (e.g. cloud computing). Plugins can help deploy and prepare data locally for storage technologies that have particular needs (e.g. Thredds).

Less well suited use cases

More advanced use cases, such as scheduled tasks and parallel transfers with strong requirements on data coherence between nodes, are beyond the scope of the current implementation and need to be managed by the user application.