Difference between revisions of "Data Transformation"

(→Programs) |

Luca.frosini (Talk | contribs) |

||

| (111 intermediate revisions by 5 users not shown) | |||

| Line 1: | Line 1: | ||

| − | == | + | == gCube Data Transformation Service == |

| + | |||

=== Introduction === | === Introduction === | ||

| + | The gCube Data Transformation service is responsible for transforming content and metadata among different formats and specifications. gDTS lies on top of Content and Metadata Management services. It interoperates with these components in order to retrieve information objects and store the transformed ones. Transformations can be performed offline and on demand on a single object or on a group of objects. | ||

| + | |||

| + | gDTS employs a variety of pluggable converters in order to transform digital objects between arbitrary content types, and takes advantage of extended information on the content types to achieve the selection of the appropriate conversion elements. | ||

| + | |||

| + | As already mentioned, the main functionality of the gCube Data Transformation Service is to convert digital objects from one content format to another. The conversions will be performed by transformation programs which either have been previously defined and stored or are composed on-the-fly during the transformation process. Every transformation program (except for those which are composed on-the-fly) is stored in the IS. | ||

| + | |||

| + | The gCube Data Transformation Service is expected to benefit many aspects of gCube. The presentation layer benefits from the production of alternative representations of multimedia documents. Generation of thumbnails, transformation of objects to specific formats required by some presentation applications, and projection of multimedia files with variable quality/bitrate are just some examples of useful transformations over multimedia documents. In addition, as a conversion tool for textual documents, it will offer online projection of documents in html format as well as other downloadable formats such as pdf or ps. The annotation UI can be implemented more straightforwardly on selected logical groups of content types (e.g. images) without caring about the details of the content and the support offered by the browsers. Finally, by utilizing the functionality of the Metadata Broker, homogenization of metadata with variable schemas can be achieved. | ||

| + | |||

| + | === Concepts === | ||

| + | |||

| + | ==== Content Type ==== | ||

| + | |||

| + | In gDTS the content type identification and notation conforms to the MIME type specification described in RFC 2045 and RFC 2046. This provides compliance with mainstream applications such as browsers, mail clients, etc. In this context, a document’s content type is defined by the media type, the subtype identifier plus a set of parameters, specified in an “attribute=value” notation. This extra information is exploited by data converters capable of interpreting it. | ||

| + | |||

| + | Some examples of content formats of information objects are: | ||

| + | |||

| + | - Mimetype=”image/png”, width=”500”, height=”500” denotes that the object’s format is the well-known Portable Network Graphics format and that the image’s width and height are 500 pixels. | ||

| + | |||

| + | - Mimetype=”text/xml”, schema=”dc”, language=”en”, denotes that the object is an xml document with schema Dublin Core and language English. | ||
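The two examples above can be modelled as a MIME type plus a map of parameters. The following is a minimal sketch under stated assumptions: the class `ContentTypeSketch` and its `parse` method are hypothetical and are not part of the gDTS API, and the textual form parsed here uses the semicolon-separated parameter syntax of RFC 2045 rather than the comma-separated notation used in the list above.

```java
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;

// Hypothetical illustration only: a content type as a MIME type plus attribute=value parameters.
final class ContentTypeSketch {
    final String mimeType;
    final Map<String, String> parameters;

    ContentTypeSketch(String mimeType, Map<String, String> parameters) {
        this.mimeType = mimeType;
        this.parameters = parameters;
    }

    // Parses text such as: image/png; width="500"; height="500"
    // Simplification: parameter values must not contain ';'.
    static ContentTypeSketch parse(String text) {
        String[] parts = text.split(";");
        String mime = parts[0].trim().toLowerCase(Locale.ROOT);
        Map<String, String> params = new LinkedHashMap<>();
        for (int i = 1; i < parts.length; i++) {
            String part = parts[i].trim();
            int eq = part.indexOf('=');
            if (eq <= 0) {
                continue; // skip malformed parameters in this sketch
            }
            String name = part.substring(0, eq).trim();
            String value = part.substring(eq + 1).trim();
            // Strip one pair of surrounding double quotes, if present.
            if (value.length() >= 2 && value.startsWith("\"") && value.endsWith("\"")) {
                value = value.substring(1, value.length() - 1);
            }
            params.put(name, value);
        }
        return new ContentTypeSketch(mime, params);
    }

    public static void main(String[] args) {
        ContentTypeSketch ct = parse("text/xml; schema=\"dc\"; language=\"en\"");
        System.out.println(ct.mimeType + " " + ct.parameters);
    }
}
```

Keeping the parameters in a map is what lets a converter look up only the attributes it understands (for example `width`) and ignore the rest.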

| + | |||

| + | ==== Transformation Units ==== | ||

| + | |||

| + | A transformation unit describes the way a program can be used in order to perform a transformation from one or more source content types to a target content type. The transformation unit determines the behaviour of each program by providing proper program parameters potentially drawn from the content type representation. Program parameters may contain string literals and/or ranges (via wildcards) in order to denote source content types. In addition, the wildcard ‘-’ can be used to force the presence of a program parameter in the content types set by the caller which uses this specific transformation unit. | ||

| + | Furthermore, transformation units may reference other transformation units and use them as “black-box” components in a transformation process. Thus, each transformation unit is identified by the pair (transformation program id, transformation unit id). | ||

| + | |||

| + | ==== Transformations' Graph ==== | ||

| − | + | Through transformation units, new content types and program capabilities are published to gDTS. These units compose a transformation graph, whose nodes and edges correspond to content types and transformation units respectively. During initialisation, the transformation graph is constructed locally from the published information stored in the IS and is updated periodically. Using this graph, gDTS can find a path of transformation units that transforms an object from its (source) content type to a target content type. |
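The path search described above can be sketched as a breadth-first search over content types. This is only an illustration under simplifying assumptions: content types are plain strings (the real graph also has to match parameters and wildcards), and the class `TransformationGraphSketch` with its `publish` and `findPath` methods is hypothetical, not the gDTS implementation.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.Deque;
import java.util.HashMap;
import java.util.LinkedList;
import java.util.List;
import java.util.Map;

// Hypothetical sketch: nodes are content types, edges are transformation units.
final class TransformationGraphSketch {
    static final class Edge {
        final String unitId;
        final String source;
        final String target;

        Edge(String unitId, String source, String target) {
            this.unitId = unitId;
            this.source = source;
            this.target = target;
        }
    }

    private final Map<String, List<Edge>> outgoing = new HashMap<>();

    // Registers a transformation unit that converts 'source' into 'target'.
    void publish(String unitId, String source, String target) {
        outgoing.computeIfAbsent(source, k -> new ArrayList<>())
                .add(new Edge(unitId, source, target));
    }

    // Breadth-first search. Returns the unit ids along a shortest path,
    // an empty list if source equals target, or null if no path exists.
    List<String> findPath(String source, String target) {
        Map<String, Edge> cameFrom = new HashMap<>();
        Deque<String> queue = new ArrayDeque<>();
        cameFrom.put(source, null);
        queue.add(source);
        while (!queue.isEmpty()) {
            String current = queue.poll();
            if (current.equals(target)) {
                LinkedList<String> path = new LinkedList<>();
                // Walk back along the recorded edges to the source.
                for (Edge e = cameFrom.get(current); e != null; e = cameFrom.get(e.source)) {
                    path.addFirst(e.unitId);
                }
                return path;
            }
            for (Edge e : outgoing.getOrDefault(current, Collections.emptyList())) {
                if (!cameFrom.containsKey(e.target)) {
                    cameFrom.put(e.target, e);
                    queue.add(e.target);
                }
            }
        }
        return null;
    }

    public static void main(String[] args) {
        TransformationGraphSketch graph = new TransformationGraphSketch();
        graph.publish("pdf2html", "application/pdf", "text/html");
        graph.publish("html2text", "text/html", "text/plain");
        System.out.println(graph.findPath("application/pdf", "text/plain"));
    }
}
```

Breadth-first search is used here because it returns a path with the fewest transformation units; whether gDTS applies the same selection criterion is not stated in this page.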

==== Transformation Programs ==== | ==== Transformation Programs ==== | ||

| − | + | A transformation program is an xml document describing one or more possible transformations from a source content type to a target content type. Each transformation program references at most one program and contains one or more transformation units for each possible transformation. Transformation programs are stored in the IS. |

| − | Each transformation program consists of: | + | Complex transformation processes are also described by ''transformation programs''. Each transformation program can reference other transformation programs and use them as “black-box” components in the transformation process it defines. Each transformation program consists of: |

* One or more data input definitions. Each one defines the schema, language and type (record, ResultSet or collection) of the data that must be mapped to the particular input. | * One or more data input definitions. Each one defines the schema, language and type (record, ResultSet or collection) of the data that must be mapped to the particular input. | ||

* One or more input variables. Each one of them is a placeholder for an additional string value which must be passed to the transformation program at run-time. | * One or more input variables. Each one of them is a placeholder for an additional string value which must be passed to the transformation program at run-time. | ||

| Line 15: | Line 42: | ||

'''Note''': The name of the input or output schema must be given in the format '''''SchemaName=SchemaURI''''', where SchemaName is the name of the schema and SchemaURI is the URI of its definition, e.g. '''<nowiki>DC=http://dublincore.org/schemas/xmls/simpledc20021212.xsd</nowiki>'''. | '''Note''': The name of the input or output schema must be given in the format '''''SchemaName=SchemaURI''''', where SchemaName is the name of the schema and SchemaURI is the URI of its definition, e.g. '''<nowiki>DC=http://dublincore.org/schemas/xmls/simpledc20021212.xsd</nowiki>'''. | ||

| + | |||

| + | Samples of transformation programs can be found on [https://gcube.wiki.gcube-system.org/gcube/index.php/Creating_Indices_at_the_VO_Level#DataTransformation_Programs Creating Indices at the VO Level] admin guide. | ||

==== Transformation Rules ==== | ==== Transformation Rules ==== | ||

| Line 41: | Line 70: | ||

A ''program'' (not to be confused with ''transformation program'') is the Java class which performs the actual transformation on the input data. A transformation rule is just an XML description of the interface (inputs and output) of a program. | A ''program'' (not to be confused with ''transformation program'') is the Java class which performs the actual transformation on the input data. A transformation rule is just an XML description of the interface (inputs and output) of a program. | ||

| − | + | Each program can define any number of methods, but when the transformation rule which references it is executed, the service will use reflection in order to locate the correct method to call based on the input and output types defined in the transformation rule that initiates the call to the program's transformation method. The execution process is the following: | |

| − | * A client invokes | + | * A client invokes DTS requesting the execution of a transformation program. |

* For each transformation rule found in the transformation program: | * For each transformation rule found in the transformation program: | ||

| − | ** | + | ** DTS reads the schema, language and type of the transformation rule's inputs, as well as the actual payloads given as inputs. The output format descriptor is also read. |

| − | ** Based on this information, | + | ** Based on this information, DTS constructs one or more DataSource objects and a DataSink object, which are wrapper classes around the transformation rule's input and output descriptors. |

** The program to be invoked for the transformation is read from the transformation rule. | ** The program to be invoked for the transformation is read from the transformation rule. | ||

| − | ** | + | ** A transformation plan is constructed which is passed to workflowDTSAdaptor in order to construct an execution plan implementing this transformation. |

| − | * | + | ** DTS uses reflection in order to locate the transformation method to be called inside the program. This is done through the input and output descriptors of the transformation rule. |
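The reflection step in the list above can be sketched as follows. This is a hedged illustration, not the DTS code: `ReflectionLookupSketch`, `DemoProgram`, `locate` and `invoke` are hypothetical names, and plain Java classes stand in for the input and output descriptors of a transformation rule.

```java
import java.lang.reflect.Method;
import java.util.Arrays;

// Hypothetical sketch: pick a method of a program class by its input and output types.
final class ReflectionLookupSketch {
    // Stand-in for a program that defines several transformation methods.
    static final class DemoProgram {
        public String transform(String input) {
            return input.trim();
        }

        public Integer transform(Integer input) {
            return input * 2;
        }
    }

    // Returns the first public method whose return type and parameter types match, or null.
    static Method locate(Class<?> program, Class<?> outputType, Class<?>... inputTypes) {
        for (Method m : program.getMethods()) {
            if (m.getReturnType().equals(outputType)
                    && Arrays.equals(m.getParameterTypes(), inputTypes)) {
                return m;
            }
        }
        return null;
    }

    // Locates the matching method and calls it on the given program instance.
    static Object invoke(Object program, Class<?> outputType, Object input) {
        Method m = locate(program.getClass(), outputType, input.getClass());
        if (m == null) {
            throw new IllegalArgumentException("no matching transformation method");
        }
        try {
            return m.invoke(program, input);
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("transformation method failed", e);
        }
    }

    public static void main(String[] args) {
        System.out.println(invoke(new DemoProgram(), Integer.class, 21));
    }
}
```

Matching on both parameter and return types is what allows a single program class to expose several transformation methods without any naming convention.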

| − | ** | + | |

| − | *** | + | Generally speaking, the main logic in a program will be something like this: |

| − | ** | + | * while (source.hasNext()) do the following: |

| − | * | + | ** sourceElement = source.getNext(); |

| + | ** (transform sourceElement to produce 'transformedPayload') | ||

| + | ** destElement = sink.getNewDataElement(sourceElement, transformedPayload); | ||

| + | ** sink.writeNext(destElement); | ||

| + | * sink.finishedWriting(); | ||
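The loop above can be written out in Java as a minimal sketch. The interfaces `Source` and `Sink` below are hypothetical stand-ins that only mirror the method names used in the pseudocode; the actual DataSource and DataSink classes of the Data Transformation Library may have different signatures. The transformation itself (upper-casing a string payload) is a placeholder.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;
import java.util.Locale;

// Hypothetical sketch of the main loop of a program.
final class ProgramLoopSketch {
    static final class Element {
        final String id;
        final String payload;

        Element(String id, String payload) {
            this.id = id;
            this.payload = payload;
        }
    }

    interface Source {
        boolean hasNext();
        Element getNext();
    }

    interface Sink {
        Element getNewDataElement(Element source, String transformedPayload);
        void writeNext(Element element);
        void finishedWriting();
    }

    // Reads every source element, transforms it and writes the result to the sink.
    static void transform(Source source, Sink sink) {
        while (source.hasNext()) {
            Element sourceElement = source.getNext();
            // Placeholder transformation: upper-case the payload.
            String transformedPayload = sourceElement.payload.toUpperCase(Locale.ROOT);
            Element destElement = sink.getNewDataElement(sourceElement, transformedPayload);
            sink.writeNext(destElement);
        }
        sink.finishedWriting();
    }

    // In-memory source used only to make the sketch runnable.
    static final class ListSource implements Source {
        private final Iterator<Element> it;

        ListSource(List<Element> elements) {
            this.it = elements.iterator();
        }

        public boolean hasNext() {
            return it.hasNext();
        }

        public Element getNext() {
            return it.next();
        }
    }

    // In-memory sink that keeps the id of the source element.
    static final class ListSink implements Sink {
        final List<Element> written = new ArrayList<>();
        boolean finished = false;

        public Element getNewDataElement(Element source, String transformedPayload) {
            return new Element(source.id, transformedPayload);
        }

        public void writeNext(Element element) {
            written.add(element);
        }

        public void finishedWriting() {
            finished = true;
        }
    }

    public static void main(String[] args) {
        List<Element> input = new ArrayList<>();
        input.add(new Element("1", "hello"));
        ListSink sink = new ListSink();
        transform(new ListSource(input), sink);
        System.out.println(sink.written.get(0).payload);
    }
}
```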

=== Implementation Overview === | === Implementation Overview === | ||

| − | The | + | The gCube Data Transformation Service primarily comprises four parts: the Data Transformation Service component, which implements the WS-Interface of the Service; the Data Transformation Library, which carries out the basic functionality of the Service, i.e. the selection of the appropriate conversion element and the execution of the transformation over the information objects; a set of data handlers, which are responsible for fetching and returning/storing the information objects; and finally the conversion elements (“Programs”) that perform the conversions. |

| − | + | ==== Data Transformation Service ==== | |

| − | + | The Data Transformation Service component implements the WS-Interface of the Service. Basically, it is the entry point for the services that the Data Transformation Library provides. Its main operation is to check the parameters of the invocation, instantiate the data handlers, invoke the appropriate method of the Data Transformation Library and inform clients of any possible faults. |

| − | + | A Data Transformation Service's RI operates successfully over multiple scopes by keeping any necessary information for each scope independently. | |

| − | === | + | ==== Data Transformation Library ==== |

| − | + | The core functionality of gDTS is implemented inside the Data Transformation Library. The Data Transformation Library contains various packages which are responsible for different features of gDTS. The basic class of the Data Transformation Library is DTSCore, which is the class responsible for orchestrating the rest of the components. |

| + | A DTSCore instance contains a transformations graph which is responsible to determine the transformation that will be performed (if the transformation program / unit is not explicitly set), as well as an information manager (IManager) which is the class responsible to fetch information about the transformation programs. The implementation of IManager that is currently used by the gDTS is the ISManager which fetches, publishes and queries transformation programs from the [[gCore Based Information System]]. | ||

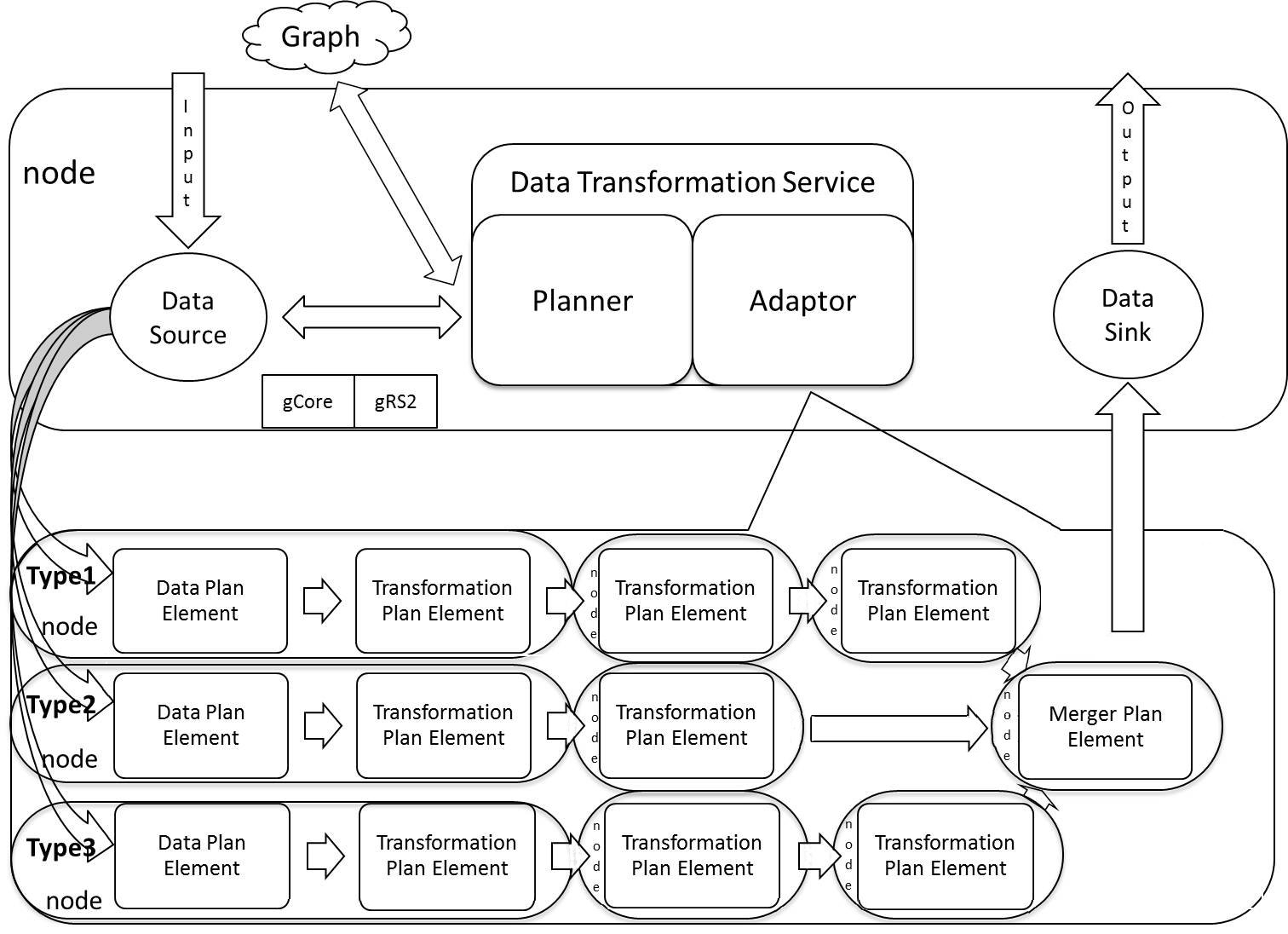

| − | + | The following diagram depicts the operations applied by Data Transformation Library on data elements when a request for a transformation is made and a transformation unit has not been explicitly set. | |

| − | + | [[Image:Data_transformation_deployment_large.jpg|thumb|none|756px|Data Transformation Operational Diagram]] | |

| − | + | For each data element, the Data Elements Broker reads its content type and, by utilizing the transformations graph, determines the proper Transformation Unit that is going to perform the transformation. Each transformation path, consisting of Transformation Units, is added to the transformation plan that will be passed to WorkflowDTSAdaptor. Then each object which has the same content type is also ignored. If another object comes from the Data Source which has a different content type, the Data Elements Broker uses the Transformations Graph again and a new Transformation plan is created. If the graph does not manage to find any applicable transformation unit for a data element, that element is ignored, as are the remaining elements which have the same content type. |
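One plausible reading of the paragraph above is that the graph is consulted once per distinct content type and that the outcome (a plan, or "unsupported") is remembered for later elements of the same type. The sketch below illustrates that reading only; `ElementsBrokerSketch`, `accept` and the `pathFinder` function are hypothetical and do not come from the gDTS code base.

```java
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;
import java.util.function.Function;

// Hypothetical sketch: one transformation plan per distinct content type.
final class ElementsBrokerSketch {
    // Assumed lookup: content type -> transformation unit ids, or null when no path exists.
    private final Function<String, List<String>> pathFinder;
    private final Map<String, List<String>> plans = new LinkedHashMap<>();
    private final Set<String> unsupported = new HashSet<>();
    int graphLookups = 0;

    ElementsBrokerSketch(Function<String, List<String>> pathFinder) {
        this.pathFinder = pathFinder;
    }

    // Returns true if the element is covered by a plan, false if it is ignored.
    boolean accept(String contentType) {
        if (plans.containsKey(contentType)) {
            return true; // a plan for this content type already exists
        }
        if (unsupported.contains(contentType)) {
            return false; // earlier lookup failed, ignore the element
        }
        graphLookups++;
        List<String> path = pathFinder.apply(contentType);
        if (path == null) {
            unsupported.add(contentType);
            return false;
        }
        plans.put(contentType, path);
        return true;
    }

    Map<String, List<String>> plans() {
        return plans;
    }

    public static void main(String[] args) {
        ElementsBrokerSketch broker = new ElementsBrokerSketch(
                type -> type.equals("application/pdf") ? List.of("pdf2text") : null);
        System.out.println(broker.accept("application/pdf"));
        System.out.println(broker.accept("video/mp4"));
    }
}
```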

| − | + | Apart from the core functionality, the Data Transformation Library also contains the interfaces of the [[Data_Transformation#Data_Transformation_Programs | Programs]] and [[Data_Transformation#Data_Transformation_Handlers | Data Handlers]], which have to be implemented by any program or data handler. Finally, the report and query packages contain classes for the reporting and querying functionalities respectively. |

| − | + | ==== WorkflowDTSAdaptor ==== | |

| − | + | WorkflowDTSAdaptor takes a transformation plan as input, provided by the [https://gcube.wiki.gcube-system.org/gcube/index.php/Data_Transformation#Data_Transformation_Library Data Transformation Library]. For each plan a new [https://gcube.wiki.gcube-system.org/gcube/index.php/Execution_Engine#Execution_Plan Execution Plan] is instantiated as a transformation chain, described by the transformation plan. In this way, a different transformation chain is created for every content type of data elements provided by the source. Then the transformation plan retrieves every data element of that particular content type and applies the transformation. A Data Bridge and Program instances are created and the data element is appended to the source Data Bridge of the Transformation Unit. |

| − | + | In the Transformation Unit then, the data elements are transformed one by one by the program and the result is appended into the target data bridge contained in the transformation unit. Then these objects are finally merged by the Data Source Merger which reads in parallel objects from all the transformation chains and appends them into the Data Sink. | |
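The merging step described above can be illustrated with a small concurrent sketch. It assumes that each transformation chain can be modelled as a list of already transformed elements and that the Data Sink is a thread-safe list; `DataSourceMergerSketch` and `merge` are hypothetical names, and the real Data Source Merger may work differently.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.List;
import java.util.concurrent.ExecutorService;
import java.util.concurrent.Executors;
import java.util.concurrent.TimeUnit;

// Hypothetical sketch: read several transformation chains in parallel into one sink.
final class DataSourceMergerSketch {
    // Returns a sink containing every element of every chain; ordering across chains is not guaranteed.
    static List<String> merge(List<List<String>> chains) {
        List<String> sink = Collections.synchronizedList(new ArrayList<>());
        ExecutorService pool = Executors.newFixedThreadPool(Math.max(1, chains.size()));
        for (List<String> chain : chains) {
            // One reader task per chain appends its elements to the shared sink.
            pool.submit(() -> {
                for (String element : chain) {
                    sink.add(element);
                }
            });
        }
        pool.shutdown();
        try {
            if (!pool.awaitTermination(10, TimeUnit.SECONDS)) {
                throw new IllegalStateException("merge timed out");
            }
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException("merge interrupted", e);
        }
        return sink;
    }

    public static void main(String[] args) {
        List<List<String>> chains = new ArrayList<>();
        chains.add(List.of("a1", "a2"));
        chains.add(List.of("b1"));
        System.out.println(merge(chains).size());
    }
}
```

Elements from one chain keep their relative order in the sink because each chain is read by a single task; elements from different chains may interleave.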

| − | + | ==== Data Transformation Handlers ==== | |

| − | The | + | The gDTS has to perform some procedures in order to fetch and store content. These procedures are totally independent of the basic functionality of the gDTS, which is to transform one or more objects into different content formats, and they must not affect it in any way. So whenever the gDTS is invoked, the caller-supplied data is automatically wrapped in a data source object. In a similar way, the output of the transformation is wrapped in a data sink object. The source and sink objects can then be used by the invoked Java program in order to read each source object sequentially and write its transformed counterpart to the destination. Thanks to the abstraction provided by the data sources and data sinks, data objects are processed homogeneously, no matter what the nature of the original source and destination is. |

| − | + | The clients identify the appropriate data handler by its name in the input/output type parameter contained in each transform method of gDTS. Then, the service loads dynamically the java class of the data handler that corresponds to this type. | |
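The dynamic loading of a data handler by its type name can be sketched with standard Java reflection. The registry, the `DataSource` interface and the two handler classes below are hypothetical stand-ins used to keep the example self-contained; only the input names `FTP` and `URIList` are taken from this page.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical sketch: resolve a handler class from the input type name and instantiate it.
final class DataHandlerLoaderSketch {
    interface DataSource {
        String describe();
    }

    static final class FtpSource implements DataSource {
        public String describe() {
            return "downloads content from an ftp server";
        }
    }

    static final class UriListSource implements DataSource {
        public String describe() {
            return "fetches content from a list of urls";
        }
    }

    // Assumed registry: input type name -> fully qualified class name.
    private static final Map<String, String> CLASS_NAMES = new HashMap<>();

    static {
        CLASS_NAMES.put("FTP", FtpSource.class.getName());
        CLASS_NAMES.put("URIList", UriListSource.class.getName());
    }

    // Loads the handler class by name at run-time and creates a new instance.
    static DataSource load(String inputType) {
        String className = CLASS_NAMES.get(inputType);
        if (className == null) {
            throw new IllegalArgumentException("unknown input type: " + inputType);
        }
        try {
            Class<?> handlerClass = Class.forName(className);
            return (DataSource) handlerClass.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("cannot load handler " + className, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(load("FTP").describe());
    }
}
```

Because the class is resolved by name only when a request arrives, new handlers can be added without changing the service code, provided they are on the classpath.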

| − | + | The available Data Handlers are: | |

| − | + | ===== Data Sources ===== | |

| + | <table border="1" > | ||

| + | <tr style="white-space: nowrap; text-align: left;"><th>Data Source Name</th><th>Input Name</th><th>Input Value</th><th>Input Parameters</th><th>Description</th></tr> | ||

| + | <tr><td>TMDataSource</td><td>TMDataSource</td><td>content collection id</td><td>NA</td><td>Fetches all the trees that belong to a tree collection.</td></tr> | ||

| + | <tr><td>RSBlobDataSource</td><td>RSBlob</td><td>result set locator</td><td>NA</td><td>Gets as input content of a result set with blob elements.</td></tr> | ||

| + | <tr><td>FTPDataSource</td><td>FTP</td><td>host name</td><td>username, password, directory, port</td><td>Downloads content from an ftp server.</td></tr> | ||

| + | <tr><td>URIListDataSource</td><td>URIList</td><td>url</td><td>NA</td><td>Fetches content from urls that are contained in a file whose location is set as input value.</td></tr> | ||

| + | </table> | ||

| − | + | ===== Data Sinks ===== | |

| − | + | <table border="1" > | |

| − | + | <tr style="white-space: nowrap; text-align: left;"><th>Data Sink Name</th><th>Output Name</th><th>Output Value</th><th>Output Parameters</th><th>Description</th></tr> | |

| − | + | <tr><td>RSBlobDataSink</td><td>RSBlob</td><td>NA</td><td>NA</td><td>Puts data into a result set with blob elements.</td></tr> | |

| − | + | <tr><td>RSXMLDataSink</td><td>RSXML</td><td>NA</td><td>NA</td><td>Puts (xml) data into a result set with xml elements.</td></tr> | |

| − | + | <tr><td>FTPDataSink</td><td>FTP</td><td>host name</td><td>username, password, port, directory</td><td>Stores objects in an ftp server.</td></tr> | |

| − | + | </table> | |

| − | + | ===== Data Bridges ===== | |

| − | + | <table border="1" > | |

| − | + | <tr style="white-space: nowrap; text-align: left;"><th>Data Bridge Name</th><th>Parameters</th><th>Description</th></tr> | |

| − | + | <tr><td>RSBlobDataBridge</td><td>NA</td><td>RSBlobDataBridge is used as a buffer of data elements. Utilizes RS in order to keep objects in the disk.</td></tr> | |

| − | + | <tr><td>REFDataBridge</td><td>flowControled = "true|false", limit</td><td>Keeps references to data elements. If flow control is enabled a maximum number of #limit data elements can exist in the bridge.</td></tr> | |

| − | + | <tr><td>FilterDataBridge</td><td>NA</td><td>Filters the contents of a Data Source by a content format.</td></tr> | |

| − | + | </table> | |
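The flow control of REFDataBridge listed in the table above can be illustrated with a bounded buffer. This is a sketch under assumptions: `RefBridgeSketch` is a hypothetical class, and it rejects an append when the limit is reached, whereas the real bridge may instead block the producer until space is available.

```java
import java.util.ArrayDeque;
import java.util.Deque;

// Hypothetical sketch: a bridge that holds references and optionally enforces a limit.
final class RefBridgeSketch {
    private final Deque<String> refs = new ArrayDeque<>();
    private final boolean flowControlled;
    private final int limit;

    RefBridgeSketch(boolean flowControlled, int limit) {
        this.flowControlled = flowControlled;
        this.limit = limit;
    }

    // Returns false when flow control is enabled and the bridge already holds 'limit' elements.
    synchronized boolean tryAppend(String ref) {
        if (flowControlled && refs.size() >= limit) {
            return false;
        }
        refs.addLast(ref);
        return true;
    }

    // Removes and returns the oldest reference, or null if the bridge is empty.
    synchronized String next() {
        return refs.pollFirst();
    }

    synchronized int size() {
        return refs.size();
    }

    public static void main(String[] args) {
        RefBridgeSketch bridge = new RefBridgeSketch(true, 2);
        System.out.println(bridge.tryAppend("a"));
        System.out.println(bridge.tryAppend("b"));
        System.out.println(bridge.tryAppend("c"));
    }
}
```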

| − | + | ==== Data Transformation Programs ==== | |

| − | + | The available transformations that the gDTS can use reside externally to the service, as separate Java classes called Programs (not to be confused with ‘Transformation Programs’). Each program is an independent, self-describing entity that encapsulates the logic of the transformation process it performs. The gDTS loads these required programs dynamically as the execution proceeds and supplies them with the input data that must be transformed. Since the loading is done at run-time, extending the gDTS transformation capabilities by adding programs is a trivial task. The new program has to be written as a java class and referenced in the classpath variable, so that it can be located when required. | |

| − | + | The gDTS provides helper functionality to simplify the creation of new programs. This functionality is exposed to the program author through a set of abstract java classes, which are included in the gCube Data Transformation Library. | |

| − | + | The available Program implementations are: | |

| − | + | <table border="1" > | |

| − | + | <tr style="white-space: nowrap; text-align: left;"><th>Name</th><th>Description</th></tr> | |

| − | </ | + | <tr><td>DocToTextTransformer</td><td>Extracts plain text from msword documents</td></tr> |

| + | <tr><td>ExcelToTextTransformer</td><td>Extracts plain text from ms-excel documents</td></tr> | ||

| + | <tr><td>FtsRowset_Transformer</td><td>Creates full text rowsets from xml documents</td></tr> | ||

| + | <tr><td>FwRowset_Transformer</td><td>Creates forward rowsets from xml documents</td></tr> | ||

| + | <tr><td>GeoRowset_Transformer</td><td>Creates geo rowsets from xml documents</td></tr> | ||

| + | <tr><td>ImageMagickWrapperTP</td><td>Currently able to convert images from and to any image type, create thumbnails and watermark images. Any other operation of the ImageMagick library can be incorporated</td></tr> | ||

| + | <tr><td>PDFToJPEGTransformer</td><td>Creates jpeg images from a page of a pdf document</td></tr> | ||

| + | <tr><td>PDFToTextHTMLTransformer</td><td>Converts a pdf document to html or text</td></tr> | ||

| + | <tr><td>PPTToTextTransformer</td><td>Extracts plain text from powerpoint documents</td></tr> | ||

| + | <tr><td>TextToFtsRowset_Transformer</td><td>Creates full text rowsets from plain text</td></tr> | ||

| + | <tr><td>XSLT_Transformer</td><td>Applies an xslt to an xml document</td></tr> | ||

| + | <tr><td>AggregateFTS_Transformer</td><td>Transform metadata documents coming from multiple metadata collections to a single FTS rowset</td></tr> | ||

| + | <tr><td>AggregateFWD_Transformer</td><td>Transform metadata documents coming from multiple metadata collections to a single FWD rowset</td></tr> | ||

| + | <tr><td>Zipper</td><td>Zips single or multi part files</td></tr> | ||

| + | <tr><td>GnuplotWrapperTP</td><td>Creates a plot described by the gnuplot script</td></tr> | ||

| + | <tr><td>GraphvizWrapperTP</td><td>Creates a graph using Graphviz library</td></tr> | ||

| + | </table> | ||

| + | |||

| + | === Client Library === | ||

| + | ==== Maven coordinates ==== | ||

| + | <dependency> | ||

| + | <groupId>org.gcube.data-transformation</groupId> | ||

| + | <artifactId>dts-client-library</artifactId> | ||

| + | <version>...</version> | ||

| + | </dependency> | ||

| − | ==== | + | ==== Creating full text rowsets from tree collection ==== |

| − | + | The first example demonstrates how to create full text rowsets from a tree collection. In the input field of the request, the input type is set to the content collection data source input type, ''TMDataSource'', and the input value to the tree collection id (see [[Data_Transformation#Data_Sources | Data Sources]]). The output field specifies that the result of the transformation will be appended to a result set which is created by the data sink and returned in the response (see [[Data_Transformation#Data_Sinks | Data Sinks]]). Finally, the example invokes ''transformDataWithTransformationUnit'', so the transformation program (''$FtsRowset_Transformer'') and the transformation unit are set explicitly in the request, and the input data is transformed to the target content type ''<nowiki>text/xml, schemaURI="http://ftrowset.xsd"</nowiki>''. The target content type is specified in the respective request parameter. |

<pre> | <pre> | ||

| − | + | import gr.uoa.di.madgik.grs.record.GenericRecord; | |

| + | import gr.uoa.di.madgik.grs.record.field.StringField; | ||

| + | import static org.gcube.data.streams.dsl.Streams.convert; | ||

| − | import java. | + | import java.net.URI; |

| + | import java.net.URISyntaxException; | ||

| + | import java.util.Arrays; | ||

| + | import java.util.concurrent.TimeUnit; | ||

| − | import org. | + | import org.gcube.common.clients.ClientRuntime; |

| − | import org. | + | import org.gcube.common.scope.api.ScopeProvider; |

| − | import org. | + | import org.gcube.data.streams.Stream; |

| − | import org. | + | import org.gcube.datatransformation.client.library.beans.Types.*; |

| − | import org. | + | import org.gcube.datatransformation.client.library.exceptions.DTSException; |

| − | import org. | + | import org.gcube.datatransformation.client.library.proxies.DTSCLProxyI; |

| − | import org. | + | import org.gcube.datatransformation.client.library.proxies.DataTransformationDSL; |

| − | public class | + | public class DTSClient_CreateFTRowsetFromContent { |

| − | public void | + | public static void main(String[] args) throws Exception { |

| − | + | String scope = args[0]; | |

| − | + | String id = args[1]; | |

| − | + | ScopeProvider.instance.set(scope); | |

| − | + | DTSCLProxyI proxyRandom = DataTransformationDSL.getDTSProxyBuilder().build(); | |

| − | + | TransformDataWithTransformationUnit request = new TransformDataWithTransformationUnit(); | |

| − | + | request.tpID = "$FtsRowset_Transformer"; | |

| − | + | request.transformationUnitID = "6"; | |

| − | + | /* INPUT */ | |

| − | + | Input input = new Input(); | |

| + | input.inputType = "TMDataSource"; | ||

| + | input.inputValue = id; | ||

| + | request.inputs = Arrays.asList(input); | ||

| − | + | /* OUTPUT */ | |

| + | request.output = new Output(); | ||

| + | request.output.outputType = "RS2"; | ||

| − | + | /* TARGET CONTENT TYPE */ | |

| − | + | request.targetContentType = new ContentType(); | |

| + | request.targetContentType.mimeType = "text/xml"; | ||

| + | Parameter param = new Parameter("schemaURI", "http://ftrowset.xsd"); | ||

| + | |||

| + | request.targetContentType.parameters = Arrays.asList(param); | ||

| − | + | /* PROGRAM PARAMETERS */ | |

| − | + | Parameter xsltParameter1 = new Parameter("xslt:1", "$BrokerXSLT_DwC_anylanguage_to_ftRowset_anylanguage"); | |

| − | + | Parameter xsltParameter2 = new Parameter("xslt:2", "$BrokerXSLT_Properties_anylanguage_to_ftRowset_anylanguage"); | |

| − | + | Parameter xsltParameter3 = new Parameter("xslt:3", "$BrokerXSLT_PROVENANCE_anylanguage_to_ftRowset_anylanguage"); | |

| − | + | Parameter xsltParameter4 = new Parameter("finalftsxslt", "$BrokerXSLT_wrapperFT"); | |

| − | + | ||

| − | + | Parameter indexTypeParameter = new Parameter("indexType", "ft_2.0"); | |

| − | + | request.tProgramUnboundParameters = Arrays.asList(xsltParameter1, xsltParameter2, xsltParameter3, xsltParameter4, indexTypeParameter); | |

| − | + | request.filterSources = false; | |

| − | + | request.createReport = false; | |

| − | + | TransformDataWithTransformationUnitResponse response = null; | |

try { | try { | ||

| − | + | response = proxyRandom.transformDataWithTransformationUnit(request); | |

| − | + | } catch (DTSException e) { | |

| − | + | e.printStackTrace(); |

} | } | ||

| + | String output = response.output; | ||

} | } | ||

} | } | ||

</pre> | </pre> | ||

| − | + | ==== Creating forward rowsets from tree collection ==== | |

| − | The | + | The second example demonstrates how to create forward rowsets from a tree collection. In the input field of the request, the input type is set to the content collection data source input type, ''TMDataSource'', and the input value to the tree collection id (see [[Data_Transformation#Data_Sources | Data Sources]]). The output field specifies that the result of the transformation will be appended to a result set which is created by the data sink and returned in the response (see [[Data_Transformation#Data_Sinks | Data Sinks]]). Finally, the example invokes ''transformDataWithTransformationUnit'', so the transformation program (''$FwRowset_Transformer'') and the transformation unit are set explicitly in the request, and the input data is transformed to the target content type ''<nowiki>text/xml, schemaURI="http://fwrowset.xsd"</nowiki>''. The target content type is specified in the respective request parameter. |

<pre> | <pre> | ||

| − | + | import java.net.URI; | |

| − | + | import java.net.URISyntaxException; | |

| − | + | import java.util.Arrays; | |

| − | + | import org.gcube.common.clients.ClientRuntime; | |

| + | import org.gcube.common.scope.api.ScopeProvider; | ||

| + | import org.gcube.datatransformation.client.library.beans.Types.*; | ||

| + | import org.gcube.datatransformation.client.library.exceptions.DTSException; | ||

| + | import org.gcube.datatransformation.client.library.proxies.DTSCLProxyI; | ||

| + | import org.gcube.datatransformation.client.library.proxies.DataTransformationDSL; | ||

| − | + | public class DTSClient_CreateFWRowsetFromContent { | |

| − | + | public static void main(String[] args) throws Exception { | |

| + | String scope = args[0]; | ||

| + | String id = args[1]; | ||

| + | ScopeProvider.instance.set(scope); | ||

| + | DTSCLProxyI proxyRandom = DataTransformationDSL.getDTSProxyBuilder().build(); | ||

| − | + | TransformDataWithTransformationUnit request = new TransformDataWithTransformationUnit(); | |

| + | request.tpID = "$FwRowset_Transformer"; | ||

| + | request.transformationUnitID = "1"; | ||

| − | + | /* INPUT */ | |

| − | + | Input input = new Input(); | |

| − | + | input.inputType = "TMDataSource"; | |

| − | + | input.inputValue = id; | |

| − | + | request.inputs = Arrays.asList(input); | |

| − | + | /* OUTPUT */ | |

| + | request.output = new Output(); | ||

| + | request.output.outputType = "RS2"; | ||

| − | + | /* TARGET CONTENT TYPE */ | |

| + | request.targetContentType = new ContentType(); | ||

| + | request.targetContentType.mimeType = "text/xml"; | ||

| + | Parameter param = new Parameter("schemaURI", "http://fwrowset.xsd"); | ||

| + | |||

| + | request.targetContentType.parameters = Arrays.asList(param); | ||

| − | + | /* PROGRAM PARAMETERS */ | |

| − | + | Parameter xsltParameter1 = new Parameter("xslt:1", "$BrokerXSLT_DwC_anylanguage_to_fwRowset_anylanguage"); | |

| − | + | Parameter xsltParameter2 = new Parameter("finalfwdxslt", "$BrokerXSLT_wrapperFWD"); | |

| − | + | ||

| − | + | request.tProgramUnboundParameters = Arrays.asList(xsltParameter1, xsltParameter2); | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | request.filterSources = false; | |

| − | + | request.createReport = false; | |

| − | === | + | TransformDataWithTransformationUnitResponse response = null; |

| + | try { | ||

| + | response = proxyRandom.transformDataWithTransformationUnit(request); | ||

| + | } catch (DTSException e) { | ||

| + | e.printStackTrace(); | ||

| + | } | ||

| + | String output = response.output; | ||

| + | } | ||

| + | } | ||

| + | </pre> | ||

Latest revision as of 14:01, 19 October 2016

gCube Data Transformation Service

Introduction

The gCube Data Transformation service is responsible for transforming content and metadata among different formats and specifications. gDTS lies on top of Content and Metadata Management services. It interoperates with these components in order to retrieve information objects and store the transformed ones. Transformations can be performed offline and on demand on a single object or on a group of objects.

gDTS employs a variety of pluggable converters in order to transform digital objects between arbitrary content types, and takes advantage of extended information on the content types to achieve the selection of the appropriate conversion elements.

As already mentioned, the main functionality of the gCube Data Transformation Service is to convert digital objects from one content format to another. The conversions will be performed by transformation programs which either have been previously defined and stored or are composed on-the-fly during the transformation process. Every transformation program (except for those which are composed on-the-fly) is stored in the IS.

The gCube Data Transformation Service is expected to benefit many aspects of gCube. The presentation layer benefits from the production of alternative representations of multimedia documents. Generation of thumbnails, transformation of objects to specific formats required by some presentation applications, and projection of multimedia files with variable quality/bitrate are just some examples of useful transformations over multimedia documents. In addition, as a conversion tool for textual documents, it will offer online projection of documents in HTML format as well as in other downloadable formats such as PDF or PS. The annotation UI can be implemented more straightforwardly on selected logical groups of content types (e.g. images) without caring about the details of the content and the support offered by the browsers. Finally, by utilizing the functionality of the Metadata Broker, homogenization of metadata with variable schemas can be achieved.

Concepts

Content Type

In gDTS the content type identification and notation conforms to the MIME type specification described in RFC 2045 and RFC 2046. This provides compliance with mainstream applications such as browsers, mail clients, etc. In this context, a document’s content type is defined by the media type, the subtype identifier plus a set of parameters, specified in an “attribute=value” notation. This extra information is exploited by data converters capable of interpreting it.

Some examples of content formats of information objects are:

- Mimetype=”image/png”, width=”500”, height=”500” denotes that the object’s format is the well-known Portable Network Graphics format and that the image’s width and height are both 500 pixels.

- Mimetype=”text/xml”, schema=”dc”, language=”en” denotes that the object is an XML document with the Dublin Core schema, written in English.
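To make the notation concrete, here is a minimal sketch of how such a content type could be held and parsed in Java. The `ContentTypeSketch` class and its `parse` helper are illustrative assumptions and are not part of the gDTS API. The parser is deliberately simplified: it splits on `;` and therefore does not handle quoted values that themselves contain a semicolon.

```java
import java.util.LinkedHashMap;
import java.util.Map;

public class ContentTypeSketch {

    /** Hypothetical value type: a MIME type plus its attribute=value parameters. */
    public static final class ContentType {
        public final String mimeType;
        public final Map<String, String> parameters;

        public ContentType(String mimeType, Map<String, String> parameters) {
            this.mimeType = mimeType;
            this.parameters = parameters;
        }
    }

    /** Parses text such as: image/png; width="500"; height="500" */
    public static ContentType parse(String text) {
        String[] parts = text.split(";");
        String mimeType = parts[0].trim().toLowerCase();
        Map<String, String> parameters = new LinkedHashMap<>();
        for (int i = 1; i < parts.length; i++) {
            String[] pair = parts[i].split("=", 2);
            if (pair.length != 2) {
                throw new IllegalArgumentException("Malformed parameter: " + parts[i]);
            }
            String value = pair[1].trim();
            // Strip optional surrounding double quotes.
            if (value.length() >= 2 && value.startsWith("\"") && value.endsWith("\"")) {
                value = value.substring(1, value.length() - 1);
            }
            parameters.put(pair[0].trim().toLowerCase(), value);
        }
        return new ContentType(mimeType, parameters);
    }

    public static void main(String[] args) {
        ContentType png = parse("image/png; width=\"500\"; height=\"500\"");
        System.out.println(png.mimeType + " " + png.parameters);
    }
}
```

A converter that understands the extra parameters can read them from the map, while one that only cares about the media type can ignore them.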

Transformation Units

A transformation unit describes the way a program can be used in order to perform a transformation from one or more source content types to a target content type. The transformation unit determines the behaviour of each program by providing proper program parameters, potentially drawn from the content type representation. Program parameters may contain string literals and/or ranges (via wildcards) in order to denote source content types. In addition, the wildcard ‘-’ can be used to force the presence of a program parameter in the content types set by the caller which uses this specific transformation unit. Furthermore, transformation units may reference other transformation units and use them as “black-box” components in a transformation process. Each transformation unit is identified by the pair (transformation program id, transformation unit id).

Transformations' Graph

Through transformation units, new content types and program capabilities are published to gDTS. These units compose a transformation graph whose nodes and edges correspond to content types and transformation units respectively. During initialisation, the transformation graph is constructed locally from the published information stored in the IS, and it is updated periodically. Using this graph, gDTS can find a path of transformation units that transforms an object from its (source) content type to a target content type.
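The path search can be illustrated with a small breadth-first search. This is only a sketch under simplifying assumptions: nodes are plain MIME type strings (real content types also carry parameters), the unit ids are made up, and the actual gDTS graph implementation may use a different search strategy.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Queue;
import java.util.Set;

public class TransformationGraphSketch {

    /** Edge: a transformation unit converting one content type into another. */
    private static final class Edge {
        final String unitId;
        final String target;

        Edge(String unitId, String target) {
            this.unitId = unitId;
            this.target = target;
        }
    }

    private final Map<String, List<Edge>> edges = new HashMap<>();

    public void addUnit(String unitId, String source, String target) {
        edges.computeIfAbsent(source, k -> new ArrayList<>()).add(new Edge(unitId, target));
    }

    /** Returns the unit ids along a shortest path, or an empty list if there is none. */
    public List<String> findPath(String source, String target) {
        Map<String, String> previousNode = new HashMap<>();
        Map<String, String> unitInto = new HashMap<>();
        Set<String> visited = new HashSet<>();
        Queue<String> queue = new ArrayDeque<>();
        visited.add(source);
        queue.add(source);
        while (!queue.isEmpty()) {
            String node = queue.remove();
            if (node.equals(target)) {
                break;
            }
            for (Edge e : edges.getOrDefault(node, Collections.emptyList())) {
                if (visited.add(e.target)) {
                    previousNode.put(e.target, node);
                    unitInto.put(e.target, e.unitId);
                    queue.add(e.target);
                }
            }
        }
        // Walk back from the target to the source, collecting the units used.
        List<String> path = new ArrayList<>();
        for (String node = target; unitInto.containsKey(node); node = previousNode.get(node)) {
            path.add(unitInto.get(node));
        }
        Collections.reverse(path);
        return path;
    }

    public static void main(String[] args) {
        TransformationGraphSketch graph = new TransformationGraphSketch();
        graph.addUnit("imageTP/1", "image/gif", "image/png");
        graph.addUnit("imageTP/2", "image/png", "image/jpeg");
        System.out.println(graph.findPath("image/gif", "image/jpeg"));
    }
}
```

Each returned id stands for one (transformation program id, transformation unit id) pair to apply in sequence.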

Transformation Programs

A transformation program is an XML document describing one or more possible transformations from a source content type to a target content type. Each transformation program references at most one program and contains one or more transformation units, one for each possible transformation. Transformation programs are stored in the IS.

Complex transformation processes are also described by transformation programs. Each transformation program can reference other transformation programs and use them as “black-box” components in the transformation process it defines. Each transformation program consists of:

- One or more data input definitions. Each one defines the schema, language and type (record, ResultSet or collection) of the data that must be mapped to the particular input.

- One or more input variables. Each of them is a placeholder for an additional string value which must be passed to the transformation program at run-time.

- Exactly one data output definition, which contains the output data type (record, ResultSet or collection), schema and language.

- One or more transformation rule definitions.

Note: The name of the input or output schema must be given in the format SchemaName=SchemaURI, where SchemaName is the name of the schema and SchemaURI is the URI of its definition, e.g. DC=http://dublincore.org/schemas/xmls/simpledc20021212.xsd.

Samples of transformation programs can be found in the Creating Indices at the VO Level admin guide.

Transformation Rules

Transformation rules are the building blocks of transformation programs. Each transformation program always contains at least one transformation rule. Transformation rules describe simple transformations and execute in the order in which they are defined inside the transformation program. Usually the output of a transformation rule is the input of the next one. So, a transformation program can be thought of as a chain of transformation rules which work together in order to perform the complex transformation defined by the whole transformation program.
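The chaining idea can be sketched in a few lines of Java. This is an analogy rather than the service's implementation: each rule is modelled as a hypothetical string-to-string function, whereas real rules operate on data sources and sinks.

```java
import java.util.Arrays;
import java.util.List;
import java.util.function.UnaryOperator;

public class RuleChainSketch {

    /** Applies the rules in definition order; each output feeds the next rule. */
    public static String runChain(List<UnaryOperator<String>> rules, String input) {
        String current = input;
        for (UnaryOperator<String> rule : rules) {
            current = rule.apply(current);
        }
        return current;
    }

    public static void main(String[] args) {
        // Three illustrative rules: trim, upper-case, wrap in an element.
        List<UnaryOperator<String>> rules = Arrays.asList(
                text -> text.trim(),
                text -> text.toUpperCase(),
                text -> "<record>" + text + "</record>");
        System.out.println(runChain(rules, "  dublin core  "));
    }
}
```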

Each transformation rule consists of:

- One or more data input definitions. Each definition contains the schema, language, type (record, ResultSet, collection or input variable) and data reference of the input it describes. Each one of these elements (except for the 'type' element) can be either a literal value, or a reference to another value defined inside the transformation program (using XPath syntax).

- Exactly one data output, which can be:

  - A definition that contains the output data type (record, ResultSet or collection), schema and language.

  - A reference to the transformation program’s output (using XPath syntax). This is the way to express that the output of this transformation rule will also be the output of the whole transformation program, so such a reference is only valid for the transformation program’s final rule.

- The name of the underlying program to execute in order to do the transformation, using standard 'packageName.className' syntax.

A transformation rule can also be a reference to another transformation program. This way, whole transformation programs can be used as parts of the execution of another transformation program. The reference can be made using the unique id of the transformation program being referenced and a set of value assignments to its data inputs and variables.

Note: The name of the input or output schema must be given in the format SchemaName=SchemaURI, where SchemaName is the name of the schema and SchemaURI is the URI of its definition, e.g. DC=http://dublincore.org/schemas/xmls/simpledc20021212.xsd.

Variable fields inside data input/output definitions

Inside the definition of data inputs and outputs of transformation programs and transformation rules, any field except for 'Type' can be declared as a variable field. Just like input variables, variable fields get their values by run-time assignments. In order to declare an element as a variable field of its parent element, one needs to include 'isVariable=true' in the element's definition. When the caller invokes a broker operation in order to transform some metadata, he/she can provide a set of value assignments to the input variables and variable fields of the transformation program definition.

However, the caller has access only to the variables of the whole transformation program, not to those of the internal transformation rules. Transformation rules can nevertheless contain variable fields in their input/output definitions. Since the caller cannot explicitly assign values to them, such variable fields must contain an XPath expression as their value, which points to another element inside the transformation program that contains the value to be assigned. These references are resolved when each transformation rule is executed, so if, for example, a variable field of a transformation rule's input definition points to a variable field of the previous transformation rule's output definition, it is guaranteed that the referenced element's value will be there at the time of execution of the second transformation rule. It is important to note that every XPath expression should specify an absolute location inside the document, which basically means it should start with '/'.
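As an illustration of how such a reference might be resolved, the sketch below uses the standard `javax.xml.xpath` API. The XML layout in `PROGRAM_XML` is a made-up assumption for demonstration purposes and does not reproduce the real transformation program schema.

```java
import java.io.StringReader;
import javax.xml.xpath.XPath;
import javax.xml.xpath.XPathExpressionException;
import javax.xml.xpath.XPathFactory;
import org.xml.sax.InputSource;

public class VariableFieldSketch {

    // Hypothetical document layout, for illustration only.
    public static final String PROGRAM_XML =
            "<TransformationProgram>"
          + "<Input><Language isVariable=\"true\">en</Language></Input>"
          + "<TransformationRule><Input>"
          + "<Language isVariable=\"true\">/TransformationProgram/Input/Language</Language>"
          + "</Input></TransformationRule>"
          + "</TransformationProgram>";

    /** Evaluates an absolute XPath reference against the program document. */
    public static String resolve(String xml, String reference) {
        if (!reference.startsWith("/")) {
            throw new IllegalArgumentException("Reference must be absolute: " + reference);
        }
        try {
            XPath xpath = XPathFactory.newInstance().newXPath();
            return xpath.evaluate(reference, new InputSource(new StringReader(xml)));
        } catch (XPathExpressionException e) {
            throw new IllegalArgumentException("Invalid XPath: " + reference, e);
        }
    }

    public static void main(String[] args) {
        // The rule's variable field holds a reference, not a value.
        String reference = resolve(PROGRAM_XML,
                "/TransformationProgram/TransformationRule/Input/Language");
        // Resolving that reference yields the value assigned at program level.
        System.out.println(resolve(PROGRAM_XML, reference));
    }
}
```

Rejecting references that do not start with '/' mirrors the requirement that every expression specifies an absolute location.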

There is a special case in which the language and schema fields of a transformation program's data input definition can have values assigned to them automatically, without requiring the caller to do so. This happens when the type of the particular data input is set to collection. In this case, the Metadata Broker Service automatically retrieves the format of the metadata collection identified by the ID given through the Reference field of the data input definition, and assigns the actual schema descriptor and language identifier of the collection to the respective variable fields of the data input definition. If any of these fields already contain values, these values are compared with the ones retrieved from the metadata collection's profile; if they differ, the execution of the transformation program stops and an exception is thrown by the Metadata Broker service. Note that the automatic value assignment works only on data inputs of transformation programs and NOT on data inputs of individual transformation rules.

Programs

A program (not to be confused with a transformation program) is the Java class which performs the actual transformation on the input data. A transformation rule is just an XML description of the interface (inputs and output) of a program.

Each program can define any number of methods, but when the transformation rule which references it is executed, the service will use reflection in order to locate the correct method to call based on the input and output types defined in the transformation rule that initiates the call to the program's transformation method. The execution process is the following:

- A client invokes DTS requesting the execution of a transformation program.

- For each transformation rule found in the transformation program:

  - DTS reads the schema, language and type of the transformation rule's inputs, as well as the actual payloads given as inputs. The output format descriptor is also read.

  - Based on this information, DTS constructs one or more DataSource objects and a DataSink object, which are wrapper classes around the transformation rule's input and output descriptors.

  - The program to be invoked for the transformation is read from the transformation rule.

  - A transformation plan is constructed and passed to workflowDTSAdaptor in order to construct an execution plan implementing this transformation.

  - DTS uses reflection in order to locate the transformation method to be called inside the program. This is done through the input and output descriptors of the transformation rule.
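The reflection step can be sketched as follows. The `DataSource` and `DataSink` interfaces, the `UpperCaseProgram` class and the matching rule (first public method whose two parameters accept the given source and sink types) are assumptions made for this illustration; the actual lookup performed by DTS may differ.

```java
import java.lang.reflect.Method;

public class ReflectionLookupSketch {

    // Hypothetical stand-ins for the library's source and sink wrappers.
    public interface DataSource {}
    public interface DataSink {}

    /** Hypothetical program with one transformation method and one unrelated method. */
    public static class UpperCaseProgram {
        public boolean called;

        public void transform(DataSource source, DataSink sink) {
            called = true;
        }

        public void describe() {}
    }

    /** Finds a public method whose two parameters accept the given source and sink types. */
    public static Method findTransformMethod(Class<?> program, Class<?> sourceType, Class<?> sinkType) {
        for (Method method : program.getMethods()) {
            Class<?>[] params = method.getParameterTypes();
            if (params.length == 2
                    && params[0].isAssignableFrom(sourceType)
                    && params[1].isAssignableFrom(sinkType)) {
                return method;
            }
        }
        throw new IllegalArgumentException("No matching method in " + program.getName());
    }

    public static void main(String[] args) throws Exception {
        Method method = findTransformMethod(UpperCaseProgram.class, DataSource.class, DataSink.class);
        UpperCaseProgram program = new UpperCaseProgram();
        // Invoke the located method with anonymous stub arguments.
        method.invoke(program, new DataSource() {}, new DataSink() {});
        System.out.println(method.getName() + " called: " + program.called);
    }
}
```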

Generally speaking, the main logic in a program will be something like this:

- while (source.hasNext()) do the following:

  - sourceElement = source.getNext();

  - (transform sourceElement to produce 'transformedPayload')

  - destElement = sink.getNewDataElement(sourceElement, transformedPayload);

  - sink.writeNext(destElement);

- sink.finishedWriting();
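The outline above can be turned into a runnable sketch. The method names follow the outline, but the interfaces, the in-memory `ListSource` and `ListSink` classes and the upper-casing transformation are assumptions made for this example and are not the library's real types.

```java
import java.util.ArrayList;
import java.util.Iterator;
import java.util.List;

public class ProgramLoopSketch {

    /** Hypothetical data element: an id plus a string payload. */
    public static final class DataElement {
        public final String id;
        public final String payload;

        public DataElement(String id, String payload) {
            this.id = id;
            this.payload = payload;
        }
    }

    public interface DataSource {
        boolean hasNext();
        DataElement getNext();
    }

    public interface DataSink {
        DataElement getNewDataElement(DataElement source, String transformedPayload);
        void writeNext(DataElement element);
        void finishedWriting();
    }

    /** In-memory source backed by a list. */
    public static final class ListSource implements DataSource {
        private final Iterator<DataElement> iterator;

        public ListSource(List<DataElement> elements) {
            this.iterator = elements.iterator();
        }

        public boolean hasNext() { return iterator.hasNext(); }
        public DataElement getNext() { return iterator.next(); }
    }

    /** In-memory sink that records what was written. */
    public static final class ListSink implements DataSink {
        public final List<DataElement> written = new ArrayList<>();
        public boolean finished;

        public DataElement getNewDataElement(DataElement source, String transformedPayload) {
            return new DataElement(source.id, transformedPayload);
        }

        public void writeNext(DataElement element) { written.add(element); }
        public void finishedWriting() { finished = true; }
    }

    /** The main loop: read, transform, write, then signal completion. */
    public static void transform(DataSource source, DataSink sink) {
        while (source.hasNext()) {
            DataElement sourceElement = source.getNext();
            String transformedPayload = sourceElement.payload.toUpperCase();
            DataElement destElement = sink.getNewDataElement(sourceElement, transformedPayload);
            sink.writeNext(destElement);
        }
        sink.finishedWriting();
    }

    public static void main(String[] args) {
        List<DataElement> input = new ArrayList<>();
        input.add(new DataElement("1", "hello"));
        ListSink sink = new ListSink();
        transform(new ListSource(input), sink);
        System.out.println(sink.written.get(0).payload + " finished=" + sink.finished);
    }
}
```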

Implementation Overview

The gCube Data Transformation Service primarily consists of four parts: the Data Transformation Service component, which implements the WS-Interface of the Service; the Data Transformation Library, which carries out the basic functionality of the Service, i.e. the selection of the appropriate conversion element and the execution of the transformation over the information objects; a set of data handlers, which are responsible for fetching and returning/storing the information objects; and finally the conversion elements (“Programs”) that perform the conversions.

Data Transformation Service

The Data Transformation Service component implements the WS-Interface of the Service. Basically, it is the entry point to the functionality that the Data Transformation Library provides. Its main operation is to check the parameters of the invocation, instantiate the data handlers, invoke the appropriate method of the Data Transformation Library and inform clients of any faults.

A Data Transformation Service's RI operates successfully over multiple scopes by keeping any necessary information for each scope independently.

Data Transformation Library

The core functionality of gDTS is implemented inside the Data Transformation Library. The library contains various packages which are responsible for different features of gDTS. The basic class of the Data Transformation Library is DTSCore, which orchestrates the rest of the components. A DTSCore instance contains a transformations graph, which determines the transformation that will be performed (if the transformation program / unit is not explicitly set), as well as an information manager (IManager), which fetches information about the transformation programs. The implementation of IManager currently used by gDTS is ISManager, which fetches, publishes and queries transformation programs from the gCore-based Information System.

The following diagram depicts the operations applied by Data Transformation Library on data elements when a request for a transformation is made and a transformation unit has not been explicitly set.

For each data element, the Data Elements Broker reads its content type and, by utilizing the transformations graph, determines the proper Transformation Unit that is going to perform the transformation. Each transformation path, consisting of Transformation Units, is added to the transformation plan that will be passed to WorkflowDTSAdaptor. Each subsequent object which has the same content type is then ignored. If another object with a different content type comes from the Data Source, the Data Elements Broker uses the Transformations Graph again and a new transformation plan is created. If the graph does not manage to find any applicable transformation unit for a data element, that element is simply ignored, as are the remaining elements with the same content type.

Apart from the core functionality, the Data Transformation Library also contains the interfaces of the Programs and Data Handlers, which any program or data handler implementation has to implement. Finally, the report and query packages contain classes for the reporting and querying functionalities respectively.

WorkflowDTSAdaptor

WorkflowDTSAdaptor takes as input a transformation plan provided by the Data Transformation Library. For each plan, a new Execution Plan is instantiated as the transformation chain described by the transformation plan. In this way, a different transformation chain is created for every content type of the data elements provided by the source. The transformation plan then retrieves every data element of that particular content type and applies the transformation. Data Bridge and Program instances are created, and the data element is appended to the source Data Bridge of the Transformation Unit.

In the Transformation Unit, the data elements are then transformed one by one by the program and the result is appended to the target data bridge contained in the transformation unit. These objects are finally merged by the Data Source Merger, which reads objects in parallel from all the transformation chains and appends them to the Data Sink.

Data Transformation Handlers

The gDTS has to perform some procedures in order to fetch and store content. These procedures are totally independent of the basic functionality of the gDTS, which is to transform one or more objects into different content formats, and they must not affect it in any way. So whenever the gDTS is invoked, the caller-supplied data is automatically wrapped in a data source object. In a similar way, the output of the transformation is wrapped in a data sink object. The source and sink objects can then be used by the invoked Java program in order to read each source object sequentially and write its transformed counterpart to the destination. Because of the abstraction provided by the data sources and data sinks, this processing of data objects is done homogeneously, no matter what the nature of the original source and destination is.

Clients identify the appropriate data handler by its name in the input/output type parameter contained in each transform method of gDTS. The service then dynamically loads the Java class of the data handler that corresponds to this type.
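A minimal sketch of such name-based dynamic loading is shown below. The registry map, the stub handler classes and the `load` method are hypothetical; only the general technique (`Class.forName` followed by reflective instantiation) is standard Java, and how gDTS actually maps type names to classes is not specified here.

```java
import java.util.HashMap;
import java.util.Map;

public class DataHandlerLoaderSketch {

    public interface DataSource {
        String describe();
    }

    // Stub handlers standing in for real data source implementations.
    public static class TMDataSourceStub implements DataSource {
        public String describe() { return "tree collection source"; }
    }

    public static class FTPDataSourceStub implements DataSource {
        public String describe() { return "ftp source"; }
    }

    // Hypothetical registry: input type name -> handler class name.
    private static final Map<String, String> HANDLERS = new HashMap<>();
    static {
        HANDLERS.put("TMDataSource", TMDataSourceStub.class.getName());
        HANDLERS.put("FTP", FTPDataSourceStub.class.getName());
    }

    /** Loads and instantiates the handler class registered for the given input type. */
    public static DataSource load(String inputType) {
        String className = HANDLERS.get(inputType);
        if (className == null) {
            throw new IllegalArgumentException("Unknown input type: " + inputType);
        }
        try {
            Class<?> handlerClass = Class.forName(className);
            return (DataSource) handlerClass.getDeclaredConstructor().newInstance();
        } catch (ReflectiveOperationException e) {
            throw new IllegalStateException("Cannot load handler " + className, e);
        }
    }

    public static void main(String[] args) {
        System.out.println(load("TMDataSource").describe());
    }
}
```

Because the class is resolved by name at run-time, a new handler only needs to be on the classpath and registered under its type name.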

The available Data Handlers are:

Data Sources

| Data Source Name | Input Name | Input Value | Input Parameters | Description |
|---|---|---|---|---|
| TMDataSource | TMDataSource | content collection id | NA | Fetches all the trees that belong to a tree collection. |
| RSBlobDataSource | RSBlob | result set locator | NA | Takes as input the content of a result set with blob elements. |
| FTPDataSource | FTP | host name | username, password, directory, port | Downloads content from an FTP server. |
| URIListDataSource | URIList | url | NA | Fetches content from the URLs contained in a file whose location is set as the input value. |

Data Sinks

| Data Sink Name | Output Name | Output Value | Output Parameters | Description |
|---|---|---|---|---|
| RSBlobDataSink | RSBlob | NA | NA | Puts data into a result set with blob elements. |
| RSXMLDataSink | RSXML | NA | NA | Puts (xml) data into a result set with xml elements. |
| FTPDataSink | FTP | host name | username, password, port, directory | Stores objects in an ftp server. |

Data Bridges

| Data Bridge Name | Parameters | Description |
|---|---|---|
| RSBlobDataBridge | NA | Used as a buffer of data elements. Utilizes RS in order to keep objects on disk. |
| REFDataBridge | flowControled = "true\|false", limit | Keeps references to data elements. If flow control is enabled, at most #limit data elements can exist in the bridge at any time. |
| FilterDataBridge | NA | Filters the contents of a Data Source by content format. |
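The flow-control behaviour described for REFDataBridge can be approximated with a bounded queue. This is a sketch under assumptions and not the bridge's real implementation: the class name and methods are invented, and it rejects an element when the limit is reached, whereas a real bridge might instead block the producer until space is available.

```java
import java.util.concurrent.ArrayBlockingQueue;
import java.util.concurrent.BlockingQueue;
import java.util.concurrent.LinkedBlockingQueue;

public class BoundedBridgeSketch {

    private final BlockingQueue<String> references;

    /** With flow control the buffer holds at most 'limit' references; otherwise it is unbounded. */
    public BoundedBridgeSketch(boolean flowControlled, int limit) {
        if (flowControlled) {
            this.references = new ArrayBlockingQueue<String>(limit);
        } else {
            this.references = new LinkedBlockingQueue<String>();
        }
    }

    /** Tries to append a reference; returns false if the limit has been reached. */
    public boolean append(String reference) {
        return references.offer(reference);
    }

    /** Removes and returns the oldest reference, or null if the bridge is empty. */
    public String next() {
        return references.poll();
    }

    public int size() {
        return references.size();
    }

    public static void main(String[] args) {
        BoundedBridgeSketch bridge = new BoundedBridgeSketch(true, 2);
        System.out.println(bridge.append("ref-1"));
        System.out.println(bridge.append("ref-2"));
        // The third append is refused because two elements are already buffered.
        System.out.println(bridge.append("ref-3"));
    }
}
```

Bounding the buffer keeps a fast producer from outrunning a slow consumer.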

Data Transformation Programs

The available transformations that the gDTS can use reside externally to the service, as separate Java classes called Programs (not to be confused with ‘Transformation Programs’). Each program is an independent, self-describing entity that encapsulates the logic of the transformation process it performs. The gDTS loads these required programs dynamically as the execution proceeds and supplies them with the input data that must be transformed. Since the loading is done at run-time, extending the gDTS transformation capabilities by adding programs is a trivial task. The new program has to be written as a java class and referenced in the classpath variable, so that it can be located when required.

The gDTS provides helper functionality to simplify the creation of new programs. This functionality is exposed to the program author through a set of abstract java classes, which are included in the gCube Data Transformation Library.

The available Program implementations are:

| Name | Description |
|---|---|
| DocToTextTransformer | Extracts plain text from MS Word documents |
| ExcelToTextTransformer | Extracts plain text from MS Excel documents |
| FtsRowset_Transformer | Creates full text rowsets from XML documents |
| FwRowset_Transformer | Creates forward rowsets from XML documents |
| GeoRowset_Transformer | Creates geo rowsets from XML documents |
| ImageMagickWrapperTP | Currently able to convert images to any image type, create thumbnails and watermark images. Any other operation of the ImageMagick library can be incorporated |
| PDFToJPEGTransformer | Creates JPEG images from a page of a PDF document |
| PDFToTextHTMLTransformer | Converts a PDF document to HTML or text |
| PPTToTextTransformer | Extracts plain text from PowerPoint documents |
| TextToFtsRowset_Transformer | Creates full text rowsets from plain text |
| XSLT_Transformer | Applies an XSLT to an XML document |
| AggregateFTS_Transformer | Transforms metadata documents coming from multiple metadata collections into a single FTS rowset |
| AggregateFWD_Transformer | Transforms metadata documents coming from multiple metadata collections into a single FWD rowset |
| Zipper | Zips single or multi-part files |
| GnuplotWrapperTP | Creates a plot described by a gnuplot script |
| GraphvizWrapperTP | Creates a graph using the Graphviz library |

Client Library

Maven coordinates

<dependency>
  <groupId>org.gcube.data-transformation</groupId>
  <artifactId>dts-client-library</artifactId>
  <version>...</version>
</dependency>

Creating full text rowsets from tree collection

The first example demonstrates how to create full text rowsets from a tree collection. In the input field of the request, the input type is set to the content collection data source type, TMDataSource, and the input value to the tree collection id (see Data Sources). The output field specifies that the result of the transformation will be appended to a result set which is created by the data sink and returned in the response (see Data Sinks). Finally, the example invokes transformDataWithTransformationUnit, which is given the transformation program and transformation unit to use explicitly; by contrast, the transformData procedure of DTS is able to identify by itself the appropriate transformation units for transforming the input data to the target content type. Here the target content type is text/xml, schemaURI="http://ftrowset.xsd", and it is specified in the respective request parameter.

import java.util.Arrays;

import org.gcube.common.scope.api.ScopeProvider;
import org.gcube.datatransformation.client.library.beans.Types.*;
import org.gcube.datatransformation.client.library.exceptions.DTSException;
import org.gcube.datatransformation.client.library.proxies.DTSCLProxyI;
import org.gcube.datatransformation.client.library.proxies.DataTransformationDSL;

public class DTSClient_CreateFTRowsetFromContent {

    public static void main(String[] args) throws Exception {
        String scope = args[0]; // gCube scope in which the service is looked up
        String id = args[1];    // id of the tree collection to transform

        ScopeProvider.instance.set(scope);
        DTSCLProxyI proxyRandom = DataTransformationDSL.getDTSProxyBuilder().build();

        // Explicitly select the transformation program and unit to run.
        TransformDataWithTransformationUnit request = new TransformDataWithTransformationUnit();
        request.tpID = "$FtsRowset_Transformer";
        request.transformationUnitID = "6";

        /* INPUT */
        Input input = new Input();
        input.inputType = "TMDataSource";
        input.inputValue = id;
        request.inputs = Arrays.asList(input);

        /* OUTPUT */
        request.output = new Output();
        request.output.outputType = "RS2";

        /* TARGET CONTENT TYPE */
        request.targetContentType = new ContentType();
        request.targetContentType.mimeType = "text/xml";
        Parameter param = new Parameter("schemaURI", "http://ftrowset.xsd");
        request.targetContentType.parameters = Arrays.asList(param);

        /* PROGRAM PARAMETERS */
        Parameter xsltParameter1 = new Parameter("xslt:1", "$BrokerXSLT_DwC_anylanguage_to_ftRowset_anylanguage");
        Parameter xsltParameter2 = new Parameter("xslt:2", "$BrokerXSLT_Properties_anylanguage_to_ftRowset_anylanguage");
        Parameter xsltParameter3 = new Parameter("xslt:3", "$BrokerXSLT_PROVENANCE_anylanguage_to_ftRowset_anylanguage");
        Parameter xsltParameter4 = new Parameter("finalftsxslt", "$BrokerXSLT_wrapperFT");
        Parameter indexTypeParameter = new Parameter("indexType", "ft_2.0");
        request.tProgramUnboundParameters = Arrays.asList(xsltParameter1, xsltParameter2, xsltParameter3, xsltParameter4, indexTypeParameter);

        request.filterSources = false;
        request.createReport = false;

        TransformDataWithTransformationUnitResponse response = null;
        try {
            response = proxyRandom.transformDataWithTransformationUnit(request);
        } catch (DTSException e) {
            e.printStackTrace();
            return; // there is no response to read if the call failed
        }

        // Output returned in the response for the requested data sink.
        String output = response.output;
    }
}

Creating forward rowsets from tree collection

This example demonstrates how to create forward rowsets from a tree collection. In the input field of the request, the input type is set to the content collection data source type, TMDataSource, and the input value to the tree collection id (see Data Sources). The output field specifies that the result of the transformation will be appended to a result set which is created by the data sink and returned in the response (see Data Sinks). Finally, the example invokes transformDataWithTransformationUnit, which is given the transformation program and transformation unit to use explicitly; by contrast, the transformData procedure of DTS is able to identify by itself the appropriate transformation units for transforming the input data to the target content type. Here the target content type is text/xml, schemaURI="http://fwrowset.xsd", and it is specified in the respective request parameter.

import java.net.URI;

import java.net.URISyntaxException;

import java.util.Arrays;

import org.gcube.common.clients.ClientRuntime;

import org.gcube.common.scope.api.ScopeProvider;

import org.gcube.datatransformation.client.library.beans.Types.*;

import org.gcube.datatransformation.client.library.exceptions.DTSException;

import org.gcube.datatransformation.client.library.proxies.DTSCLProxyI;

import org.gcube.datatransformation.client.library.proxies.DataTransformationDSL;

public class DTSClient_CreateFWRowsetFromContent {

public static void main(String[] args) throws Exception {

String scope = args[0];

String id = args[1];

ScopeProvider.instance.set(scope);

DTSCLProxyI proxyRandom = DataTransformationDSL.getDTSProxyBuilder().build();

TransformDataWithTransformationUnit request = new TransformDataWithTransformationUnit();

request.tpID = "$FwRowset_Transformer";

request.transformationUnitID = "1";

/* INPUT */

Input input = new Input();

input.inputType = "TMDataSource";

input.inputValue = id;

request.inputs = Arrays.asList(input);

/* OUTPUT */

request.output = new Output();

request.output.outputType = "RS2";

/* TARGET CONTENT TYPE */

request.targetContentType = new ContentType();

request.targetContentType.mimeType = "text/xml";

Parameter param = new Parameter("schemaURI", "http://fwrowset.xsd");

request.targetContentType.parameters = Arrays.asList(param);

/* PROGRAM PARAMETERS */

Parameter xsltParameter1 = new Parameter("xslt:1", "$BrokerXSLT_DwC_anylanguage_to_fwRowset_anylanguage");

Parameter xsltParameter2 = new Parameter("finalfwdxslt", "$BrokerXSLT_wrapperFWD");

request.tProgramUnboundParameters = Arrays.asList(xsltParameter1, xsltParameter2);

request.filterSources = false;

request.createReport = false;

TransformDataWithTransformationUnitResponse response = null;

try {

response = proxyRandom.transformDataWithTransformationUnit(request);

} catch (DTSException e) {

e.printStackTrace();

return; // response is still null here, so stop instead of dereferencing it below

}

String output = response.output;

}

}

Finding applicable transformation units

This example demonstrates how to search for transformation units that can perform a transformation from a source content type to a target content type. Here we look for one or more transformation units that can transform a GIF image to JPEG format.

import org.gcube.common.clients.ClientRuntime;

import org.gcube.common.scope.api.ScopeProvider;

import org.gcube.datatransformation.client.library.beans.Types.*;

import org.gcube.datatransformation.client.library.exceptions.DTSException;

import org.gcube.datatransformation.client.library.proxies.DTSCLProxyI;

import org.gcube.datatransformation.client.library.proxies.DataTransformationDSL;

public class FindApplicableTransformationUnitsClient {

public static void main(String[] args) throws Exception {

ScopeProvider.instance.set(args[0]);

DTSCLProxyI proxyRandom = DataTransformationDSL.getDTSProxyBuilder().build();

FindApplicableTransformationUnits request = new FindApplicableTransformationUnits();

request.sourceContentType = new ContentType();

request.sourceContentType.mimeType = "image/gif";

request.targetContentType = new ContentType();

request.targetContentType.mimeType = "image/jpeg";

request.createAndPublishCompositeTP = false;

FindApplicableTransformationUnitsResponse output = null;

try {

output = proxyRandom.findApplicableTransformationUnits(request);

} catch (DTSException e) {

e.printStackTrace();

return; // output is still null here, so stop instead of iterating over it below

}

for(TPAndTransformationUnit tr : output.TPAndTransformationUnitIDs) {

System.out.println(tr.transformationProgramID + " " + tr.transformationUnitID);

}

}

}
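
To make the idea of an "applicable" transformation unit more concrete, the following is a minimal, self-contained sketch of matching units by source and target mime type. It does not use the DTS client library and is not how DTS is implemented: the Unit class, the sampleRegistry contents and the ids "tp-images" and "tp-docs" are hypothetical names introduced only for illustration, and the sketch ignores content type parameters and composite transformation programs.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.List;

public class ApplicableUnitsSketch {

    // Hypothetical stand-in for a transformation unit; not a DTS type.
    static final class Unit {
        final String programId;
        final String unitId;
        final String sourceMime;
        final String targetMime;

        Unit(String programId, String unitId, String sourceMime, String targetMime) {
            this.programId = programId;
            this.unitId = unitId;
            this.sourceMime = sourceMime;
            this.targetMime = targetMime;
        }
    }

    // Hypothetical registry contents, used only for this illustration.
    static List<Unit> sampleRegistry() {
        return Arrays.asList(
                new Unit("tp-images", "1", "image/gif", "image/jpeg"),
                new Unit("tp-images", "2", "image/png", "image/jpeg"),
                new Unit("tp-docs", "1", "text/xml", "text/html"));
    }

    // Returns the units whose source and target mime types both match
    // the requested ones (compared case-insensitively).
    static List<Unit> findApplicable(List<Unit> registry, String sourceMime, String targetMime) {
        List<Unit> matches = new ArrayList<Unit>();
        for (Unit u : registry) {
            if (u.sourceMime.equalsIgnoreCase(sourceMime)
                    && u.targetMime.equalsIgnoreCase(targetMime)) {
                matches.add(u);
            }
        }
        return matches;
    }

    public static void main(String[] args) {
        // Same question as the client example above: which units turn gif into jpeg?
        for (Unit u : findApplicable(sampleRegistry(), "image/gif", "image/jpeg")) {
            System.out.println(u.programId + " " + u.unitId);
        }
    }
}
```

As in the client example, each match is reported as a pair of transformation program id and transformation unit id.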