Storage Manager

| (91 intermediate revisions by 2 users not shown) | |||

| Line 3: | Line 3: | ||

= Overview = | = Overview = | ||

| − | + | A service providing functions for standards-based and structured access and storage of files of arbitrary size is a fundamental requirement for a wide range of system processes, including indexing, transfer, transformation, and presentation. Equally, it is a main driver for clients that interface the resources managed by the system or accessible through facilities available within the system. | |

| − | + | This service abstracts over the phisical storage and is capable of mounting several different store implementations, (by default clients can make use of the MongoDB store) presenting a unified interface to the clients and allowing them to download, upload, remove, add and list files or unstructured bytestreams (binary objects). The binary objects must have owners and owners may define access rights to files, allowing private, public, or shared (group-based) access. | |

| + | |||

| + | All the operations of this service are provided through a standards-based, POSIX-like API which supports the organisation and operations normally associated with local file systems whilst offering scalable and fault-tolerant remote storage | ||

= Key features = | = Key features = | ||

| + | The core of StorageManager is a java library named storage-manager-core that offers a interface for performing CRUD operation through a remote backend over Binary Large Objects (BLOB): | ||

| − | + | * Create | |

| + | * Read | ||

| + | * Update | ||

| + | * Delete | ||

| + | * Move | ||

| + | * Copy | ||

| − | + | = Design and Architecture = | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

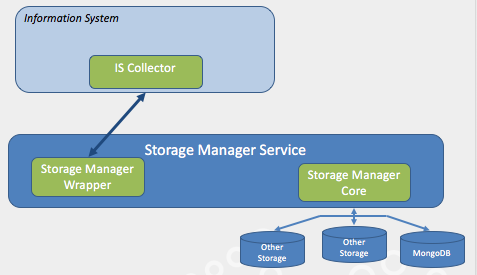

| − | + | [[File:Storage manager.png|thumb|caption|800 px |Figure 1. Storage Manager Architecture]] | |

| + | |||

| + | As shown in Figure 1 the core of the Storage Manager service is a software component named Storage Manager Core that offers APIs allowing to abstracts over the phisical storage. The Storage Manager Wrapper instead is a software component used to discover back-end information from the IS Collector service of the D4Science Infrastructure’s Information System. The separation between these 2 components is necessary to allow the usage of the service in different contexts other than the D4Science Infrastructure. | ||

| + | |||

| + | |||

| + | |||

| + | |||

| + | |||

| + | = Access Mode = | ||

There are 3 ways to access remote files: | There are 3 ways to access remote files: | ||

| Line 32: | Line 43: | ||

If the client is instantiated for example,with the private access mode, all the operations done with this client instance will be in private access mode. | If the client is instantiated for example,with the private access mode, all the operations done with this client instance will be in private access mode. | ||

| + | = Storage area types= | ||

| + | |||

| + | By default the files are stored on a persistent memory called "Persistent area" alternatively a client could choice the Volatile memory called "Volatile area" | ||

| + | |||

| + | ==Persistent area== | ||

| + | |||

| + | The files stored in the persistent area are permanents. This kind of area provide redundancy and high availability. | ||

| − | == | + | == Volatile area == |

| − | + | The binary objects added selecting this area are automatically '''removed after a period of 7 days''' from their first upload. | |

| − | + | ||

| − | The | + | |

The use of this area is intended for all clients that need to use storage for temporary data. | The use of this area is intended for all clients that need to use storage for temporary data. | ||

The use of VOLATILE area is very simple and very similar to the standard usage of storage-manager. | The use of VOLATILE area is very simple and very similar to the standard usage of storage-manager. | ||

| Line 45: | Line 61: | ||

* PERMANENT: for storing in the standard area: the files are deleting only manually | * PERMANENT: for storing in the standard area: the files are deleting only manually | ||

| − | The | + | The Volatile area is suggested to all clients that do not need to maintain data on the storage permanently. |

Through the use of this area the client doesn't need to perform the delete operation manually. | Through the use of this area the client doesn't need to perform the delete operation manually. | ||

It is strongly discouraged the usage of the VOLATILE storage area for storing data that will need to last more than seven days. | It is strongly discouraged the usage of the VOLATILE storage area for storing data that will need to last more than seven days. | ||

| + | = Supported Operations = | ||

| − | + | Inspired by Build Pattern, the StorageManger library implements many operations on remote files. The methods LFile and RFile refer to, respectively, Local File (if needed) and Remote File. | |

| − | + | The remote file could be identified by path or by id. The id is returned when the file is created. In the call's signature, these methods are preceded by a method that identify an operation, see examples below: | |

| − | + | ||

;put(boolean replace) | ;put(boolean replace) | ||

:put a local file in a remote directory. If replace is true then the remote file, if exists, will be replaced. | :put a local file in a remote directory. If replace is true then the remote file, if exists, will be replaced. | ||

: If the remote directory does not exist it will be automatically | : If the remote directory does not exist it will be automatically | ||

| − | : | + | :<code>put(true).LFile(“localPath”).RFile(“remotePath”);</code> |

| − | : | + | :<code>put(true).LFile(“localPath”).RFile(id);</code> |

| − | + | ||

;get() | ;get() | ||

:downloads a file from a remote path to a local directory | :downloads a file from a remote path to a local directory | ||

| − | : | + | :<code>get().LFile(“localPath”).RFile(“remotePath”);</code> |

| − | : | + | :<code>get().LFile(“localPath”).RFile(id);</code> |

;remove() | ;remove() | ||

:removes a remote file | :removes a remote file | ||

| − | : | + | :<code>remove().RFile(“remotePath”);</code> |

| − | + | :<code>remove().RFile(id);</code> | |

;removeDir() | ;removeDir() | ||

:removes a remote directory and all files in it. This operation is recursive. | :removes a remote directory and all files in it. This operation is recursive. | ||

| − | : | + | :<code>removeDir().RDir(“remoteDirPath”);</code> |

;showDir() | ;showDir() | ||

:shows the content of a directory | :shows the content of a directory | ||

| − | : | + | :<code>showDir.RFile(“remoteDirPath”);</code> |

;lock() | ;lock() | ||

:locks a remote file to prevent multiple access. It returns an id string for unlock the file. Remember that every lock has a TTL associated (Default: 180000 ms). | :locks a remote file to prevent multiple access. It returns an id string for unlock the file. Remember that every lock has a TTL associated (Default: 180000 ms). | ||

| − | : | + | :<code>lock().RFile(“remotePath”);</code> |

| − | : Otherwise: Download the file in "localFilePath" and Lock the remote file stored on backend server | + | :Otherwise: Download the file in "localFilePath" and Lock the remote file stored on backend server |

| − | : | + | :<code>lock().LFile("localFilePath").RFile("remotePath")</code> |

;unlock(String key4Unlock) | ;unlock(String key4Unlock) | ||

:if the file is locked, unlocks the remote file. | :if the file is locked, unlocks the remote file. | ||

| − | : | + | :<code>unlock(“key4Unlock”).RFile(“remotePath”);</code> |

:Otherwise: Upload the new File stored in the local FileSystem at: "localFilePath" and unlock the file on the backend server | :Otherwise: Upload the new File stored in the local FileSystem at: "localFilePath" and unlock the file on the backend server | ||

| − | : | + | :<code>unlock("key4Unlock").LFile("localFilePath").RFile("remotePath")</code> |

;getTTL() | ;getTTL() | ||

:returns the TimeToLive associated to a remote file if the file is currently locked | :returns the TimeToLive associated to a remote file if the file is currently locked | ||

| − | : | + | :<code>getTTL().RFile("remoteDirPath");</code> |

;setTTL(int milliseconds) | ;setTTL(int milliseconds) | ||

:resets the TimeToLive to a remote file that is currently locked. This operation is consented max 5 times for any file. | :resets the TimeToLive to a remote file that is currently locked. This operation is consented max 5 times for any file. | ||

| − | : | + | :<code>setTTL(180000).RFile("remoteDirPath");</code> |

;getUrl() | ;getUrl() | ||

:returns the smp url of a file stored in the repository | :returns the smp url of a file stored in the repository | ||

:this method is not compatible with private access mode | :this method is not compatible with private access mode | ||

| − | : | + | :<code>getUrl().RFile(“remotePath”);</code> |

| − | : | + | :<code>getUrl().RFile(id);</code> |

;getHttpUrl() | ;getHttpUrl() | ||

:returns the http url of a file stored in the repository | :returns the http url of a file stored in the repository | ||

:this method is not compatible with private access mode | :this method is not compatible with private access mode | ||

| − | : | + | :<code>getUrl().RFile(“remotePath”);</code> |

| − | + | :getUrl().RFile(id);</code> | |

| − | + | ||

;copy() | ;copy() | ||

| Line 116: | Line 130: | ||

:return the id of the new file stored in the repository | :return the id of the new file stored in the repository | ||

:this method is available only in version 2.0.0 | :this method is available only in version 2.0.0 | ||

| − | : | + | :<code>copy().RFile(“remotePath1”).Rfile(“remotePath2”);</code> |

;moveFile() | ;moveFile() | ||

| Line 122: | Line 136: | ||

:return the id of the file stored in the repository | :return the id of the file stored in the repository | ||

:this method is available only in version 2.0.0 or later | :this method is available only in version 2.0.0 or later | ||

| − | : | + | :<code>moveFile().from(“remoteFilePath1”).to(“remoteFilePath2”);</code> |

;moveDir() | ;moveDir() | ||

:moves a remote directory from a remote directory path to another remote directory path | :moves a remote directory from a remote directory path to another remote directory path | ||

:this method is available only in version 2.0.0 or later | :this method is available only in version 2.0.0 or later | ||

| − | : | + | :<code>moveDir().from(“remoteDirPath1”).to(“remoteDirPath2”);</code> |

;link() | ;link() | ||

| Line 133: | Line 147: | ||

:return the id of the new file linked in the repository | :return the id of the new file linked in the repository | ||

:this method is available only in version 2.0.0 | :this method is available only in version 2.0.0 | ||

| − | : | + | :<code>link().RFile(“remotePath1”).Rfile(“remotePath2”);</code> |

| + | = Getting-Started = | ||

| + | |||

| + | |||

| + | == Maven artifacts == | ||

| + | |||

| + | If a client want to use storage-manager libraries, it should import in the pom the following dependencies: | ||

| + | |||

| + | <source lang="xml" highlight="14-18"> | ||

| + | <dependency> | ||

| + | <groupId>org.gcube.contentmanagement</groupId> | ||

| + | <artifactId>storage-manager-core</artifactId> | ||

| + | <version>[2.0.0, 3.0.0)</version> | ||

| + | </dependency> | ||

| + | <dependency> | ||

| + | <groupId>org.gcube.contentmanagement</groupId> | ||

| + | <artifactId>storage-manager-wrapper</artifactId> | ||

| + | <version>[2.0.0, 3.0.0)</version> | ||

| + | </dependency> | ||

| + | </source> | ||

| + | |||

| + | Alternatively, if a client want to use the storage-manager out of the D4Science infrastructure, only the storage-manager-core dependency is needed: | ||

| + | |||

| + | <source lang="xml" highlight="14-18"> | ||

| + | <dependency> | ||

| + | <groupId>org.gcube.contentmanagement</groupId> | ||

| + | <artifactId>storage-manager-core</artifactId> | ||

| + | <version>[2.0.0, 3.0.0)</version> | ||

| + | </dependency> | ||

| + | </source> | ||

| + | |||

| + | |||

| + | ==Usage examples == | ||

| + | |||

| + | === Initialising Storage Manager === | ||

| + | |||

| + | The following code explains how to instatiate a client for performing remote operations on the storage repository: | ||

| + | <source lang="java"> | ||

| + | //the context in which you need to work | ||

| + | GCUBEScope scope = GCUBEScope.getScope("/gcube"); | ||

| + | |||

| + | // The Access type can be private | shared | public | ||

| + | IClient client=new StorageClient("ServiceClass", "ServiceName", "owner", AccessType.SHARED, scope).getClient(); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | Upload a new file using the storage manager: | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | //upload a new file in the storage: | ||

| + | String id=client.put(true).LFile("/home/rcirillo/FilePerTest/pippo.jpg").RFile("/img/pippo.jpg"); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | Download a file using the storage manager: | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | client.get().LFile("/home/rcirillo/FilePerTest/pippo.jpg").RFile("/img/pippo.jpg");</code> | ||

| + | </source> | ||

| + | |||

| + | |||

| + | Download a file using the storage manager by id | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | client.get().LFile("/home/rcirillo/FilePerTest/pippo.jpg").RFile(id); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | Download a Stream using the storage manager: | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | InputStream is = client.get().RFileAStream("/img/pippo.jpg"); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | List the contents of a remote directory on the storage repository: | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | List<StorageObject> list=client.showDir().RDir("img"); | ||

| + | </source> | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

The following code explains how to lock a file on the storage repository: | The following code explains how to lock a file on the storage repository: | ||

| − | < | + | |

| − | + | ||

| − | </ | + | <source lang="java"> |

| + | String id=client.lock().RFile("/img/pluto.jpg"); | ||

| + | </source> | ||

| + | |||

| + | |||

The following code explains how to unlock a file on the storage repository: | The following code explains how to unlock a file on the storage repository: | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | + | <source lang="java"> | |

| − | This is an example of | + | client.unlock(id).RFile("/img/pluto.jpg"); |

| + | </source> | ||

| + | |||

| + | |||

| + | Remove a file on the storage repository: | ||

| + | |||

| + | <source lang="java"> | ||

| + | client.remove().RFile("/img/pluto.jpg"); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | Remove a file by Id: | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | client.remove()..RFile(id); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | Remove a dir on the storage repository: | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | client.removeDir().RDir("/img"); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | Move a directory on the storage repository from a remote path to another remote path: | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | client.moveDir().from("/img/test1").to("/img/test2"); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | Move a file from a remote path to another remote path: | ||

| + | |||

| + | <source lang="java"> | ||

| + | client.moveFile().from("/img/test1.xml").to("/img/test2.xml"); | ||

| + | </source> | ||

| + | |||

| + | === Scenario examples === | ||

| + | |||

| + | ;Scenario 1 | ||

| + | In this example a GCUBE service s1 (identify by: ServiceClass: sc, ServiceName: sn), running in the sScope /gcube upload the file: /home/xxx/FilePerTest/test.jpg in shared mode in the VOLATILE area. | ||

| + | Another instance of the same service, s2 (identify by: ServiceClass: sc2, ServiceName: sn2) running in the same scope but on another node, download it. | ||

| + | |||

| + | The following code shows an upload operation example: | ||

| + | |||

| + | <source lang="java"> | ||

| + | String scope="/gcube"; | ||

| + | ScopeProvider.instance.set(scope); | ||

| + | IClient client=new StorageClient("sc", "sn", "s1", AccessType.SHARED, MemoryType.VOLATILE).getClient(); | ||

| + | String id=client.put(true).LFile("/home/xxx/FilePerTest/test.jpg").RFile("/img/test.jpg"); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | The following code shows the download operation: | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | String scope="/gcube"; | ||

| + | ScopeProvider.instance.set(scope); | ||

| + | IClient client=new StorageClient("sc2", "sn2", "s2", AccessType.SHARED, MemoryType.VOLATILE).getClient(); | ||

| + | String id=client.get().LFile("/home/yyy/FilePerTest/test.jpg").RFile("/img/test.jpg"); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | The file is downloaded in the local directory: /home/yyy/FilePerTest/ where s2 is running and the file name is test.jpg | ||

| + | |||

| + | |||

| + | |||

| + | ;Scenario 2 | ||

| + | |||

| + | In this example a GCUBE service s1 (identify by: ServiceClass: sc, ServiceName: sn), running in the scope /gcube uploads the file: /home/xxx/FilePerTest/test1.jpg in shared mode in VOLATILE area. | ||

| + | It then retrieves the url of the file uploaded by the method getUrl and passes it to another service s2 that is in running on another node. The s2 service retrieves the inputStream of the file that the service s1 had uploaded by the url. Remember, getUrl() method is not compatible with private access mode: | ||

| + | |||

| + | <source lang="java"> | ||

| + | String scope="/gcube"; | ||

| + | ScopeProvider.instance.set(scope); | ||

| + | IClient client=new StorageClient("sc", "sn", "s1", AccessType.SHARED, MemoryType.VOLATILE).getClient(); | ||

| + | String id=client.put(true).LFile("/home/xxx/FilePerTest/test1.jpg").RFile("/img/test1.jpg"); | ||

| + | String url=client.getUrl().RFile("/img/test1.jpg"); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | The s2 service retrieves the inputStream of the file by the url stored in the "url" variable: | ||

| + | |||

| + | |||

| + | <source lang="java"> | ||

| + | Handler.activateProtocol(); | ||

| + | URL smsHome = new URL("smp://img/test1.jpg5ezvFfBOLqbElsh5xYVgBTxt8lwt4fl"); | ||

| + | URLConnection uc = null; | ||

| + | uc = ( URLConnection ) smsHome.openConnection(); | ||

| + | InputStream is=uc.getInputStream(); | ||

| + | </source> | ||

| + | |||

| + | |||

| + | ;Scenario 3 | ||

| + | |||

| + | In this example a GCUBE service s1 (identify by: ServiceClass: sc, ServiceName: sn), running in the scope /gcube uploads the file: /home/xxx/FilePerTest/test2.jpg in shared mode in VOLATILE area. | ||

| + | It then retrieves the url of the file uploaded by the method getHttpUrl and passes it to another service s2 that is in running on another node. The s2 service retrieves the inputStream of the file that the service s1 had uploaded by the url. | ||

| + | |||

| + | <source lang="java"> | ||

| + | String scope="/gcube"; | ||

| + | ScopeProvider.instance.set(scope); | ||

| + | IClient client=new StorageClient("sc", "sn", "s1", AccessType.SHARED, MemoryType.VOLATILE).getClient(); | ||

| + | String id=client.put(true).LFile("/home/xxx/FilePerTest/test2.jpg").RFile("/img/test2.jpg"); | ||

| + | String httpUrl=client.getHttpUrl().RFile("/img/test2.jpg"); | ||

| + | </source> | ||

| + | |||

| + | The s2 service retrieves the inputStream of the file by the url stored in the "url" variable: | ||

| + | |||

| + | <source lang="java"> | ||

| + | URL smsHome = new URL(httpUrl); | ||

| + | URLConnection uc = null; | ||

| + | uc = ( URLConnection ) smsHome.openConnection(); | ||

| + | InputStream is=uc.getInputStream(); | ||

| + | </source> | ||

| + | |||

| + | Alternatively, the httpUrl could be also resolved by a browser. This is an example of the httpUrl generated by getHttpUrl method: | ||

| + | |||

| + | http://data-d.d4science.org/T1VWSC9Kc0Q2ZlJzbHA0d1kvWjZFdmRjbkE1M1pLT09HbWJQNStIS0N6Yz0 | ||

| + | |||

| + | = Deployment = | ||

| + | In order to use the storage manager on a given VRE a particular a service endpoint specifying the address of the server used for storing data is needed. | ||

| + | |||

| + | This is an example of service endpoint used. | ||

<source lang="xml" highlight="14-18"> | <source lang="xml" highlight="14-18"> | ||

| Line 279: | Line 457: | ||

The values of entryName attributes of endpoint tag, in this case, are: server1, server2, server3. | The values of entryName attributes of endpoint tag, in this case, are: server1, server2, server3. | ||

The property named "type", specifies what kind of backend, in this case is a MongoDB server. | The property named "type", specifies what kind of backend, in this case is a MongoDB server. | ||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

| − | |||

[[Media:Example.ogg]] | [[Media:Example.ogg]] | ||

Latest revision as of 15:07, 6 July 2023


Overview

A service providing standards-based, structured access to and storage of files of arbitrary size is a fundamental requirement for a wide range of system processes, including indexing, transfer, transformation, and presentation. Equally, it is a main driver for clients that interface with the resources managed by the system or accessible through facilities available within the system.

This service abstracts over the physical storage and can mount several different store implementations (by default, clients can use the MongoDB store), presenting a unified interface that allows clients to upload, download, add, remove, and list files or unstructured bytestreams (binary objects). Every binary object must have an owner, and owners may define access rights to their files, allowing private, public, or shared (group-based) access.

All the operations of this service are provided through a standards-based, POSIX-like API that supports the organisation and operations normally associated with local file systems, whilst offering scalable and fault-tolerant remote storage.

Key features

The core of the Storage Manager is a Java library named storage-manager-core, which offers an interface for performing CRUD operations, plus move and copy, on Binary Large Objects (BLOBs) through a remote backend:

- Create

- Read

- Update

- Delete

- Move

- Copy

Design and Architecture

As shown in Figure 1 the core of the Storage Manager service is a software component named Storage Manager Core that offers APIs allowing to abstracts over the phisical storage. The Storage Manager Wrapper instead is a software component used to discover back-end information from the IS Collector service of the D4Science Infrastructure’s Information System. The separation between these 2 components is necessary to allow the usage of the service in different contexts other than the D4Science Infrastructure.

Access Mode

There are three ways to access remote files:

- Private: accessible only to the owner of the file

- Public: accessible to all users of the community

- Shared: accessible to all users in the same group as the owner

The access mode is specified when the client is instantiated and applies to the whole client instance: for example, if the client is instantiated with the private access mode, all the operations performed with that instance are in private access mode.
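To make the three access modes concrete, the read rules listed above can be sketched as a small, self-contained Java model. This is a hypothetical illustration only: the enum Mode, the records User and StoredFile, and the method canRead are invented for this sketch and are not part of storage-manager (which exposes its own AccessType enum). It also assumes, for simplicity, that each user belongs to exactly one group. Records and switch expressions require Java 16 or later.

```java
// Hypothetical model of the three access modes; NOT the storage-manager API.
public class AccessModeDemo {

    // Stand-in for the access mode chosen when the client is instantiated.
    enum Mode { PRIVATE, SHARED, PUBLIC }

    // Assumption for this sketch: every user belongs to exactly one group.
    record User(String name, String group) {}

    // A stored file remembers its owner and the mode of the client that wrote it.
    record StoredFile(User owner, Mode mode) {}

    // Returns true if the requester may read the file under the file's access mode.
    static boolean canRead(StoredFile file, User requester) {
        return switch (file.mode()) {
            case PRIVATE -> file.owner().name().equals(requester.name());  // owner only
            case SHARED -> file.owner().group().equals(requester.group()); // owner's group
            case PUBLIC -> true;                                           // whole community
        };
    }

    public static void main(String[] args) {
        User alice = new User("alice", "groupA");
        User bob = new User("bob", "groupA");
        User carol = new User("carol", "groupB");

        StoredFile shared = new StoredFile(alice, Mode.SHARED);
        System.out.println(canRead(shared, bob));   // true: same group as the owner
        System.out.println(canRead(shared, carol)); // false: different group
    }
}
```

Because the mode is fixed when the client is created, in this model it travels with every file written by that client instead of being chosen per call.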

Storage area types

By default, files are stored in persistent memory, called the "Persistent area"; alternatively, a client can choose volatile memory, called the "Volatile area".

Persistent area

Files stored in the persistent area are permanent. This kind of area provides redundancy and high availability.

Volatile area

Binary objects stored in this area are automatically removed 7 days after their first upload. This area is intended for clients that need storage for temporary data. Using the VOLATILE area is very simple and very similar to the standard usage of storage-manager: the only difference is that the client must pass an additional input parameter to the storage-manager client constructor. This parameter is an enumerated type called MemoryType, and it can take only two values:

- VOLATILE: stores files in the VOLATILE area; the files are deleted automatically after 7 days

- PERMANENT: stores files in the standard area; the files are deleted only manually

The Volatile area is recommended for all clients that do not need to keep data on the storage permanently: with this area, the client does not need to perform the delete operation manually. Using the VOLATILE area for data that must last more than seven days is strongly discouraged.
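The retention rule for the two areas boils down to a small date computation. The following Java sketch is hypothetical: Area mirrors the MemoryType values described above but is not the library's enum, and treating an object as expired at exactly the 7-day mark is an assumption, since the text does not say when the backend actually purges it.

```java
import java.time.Duration;
import java.time.Instant;
import java.util.Optional;

// Hypothetical model of the two storage areas; NOT the library's MemoryType enum.
public class RetentionDemo {

    enum Area {
        PERMANENT(null),              // kept until the client deletes it
        VOLATILE(Duration.ofDays(7)); // removed automatically 7 days after the first upload

        private final Duration retention;

        Area(Duration retention) {
            this.retention = retention;
        }

        Optional<Duration> retention() {
            return Optional.ofNullable(retention);
        }
    }

    // Instant from which the object is treated as expired, or empty if it never expires.
    static Optional<Instant> expiresAt(Area area, Instant firstUpload) {
        return area.retention().map(firstUpload::plus);
    }

    // Assumption: an object counts as expired at or after the 7-day mark.
    static boolean isExpired(Area area, Instant firstUpload, Instant now) {
        return expiresAt(area, firstUpload)
                .map(deadline -> !now.isBefore(deadline))
                .orElse(false);
    }

    public static void main(String[] args) {
        Instant upload = Instant.parse("2023-07-01T10:00:00Z");
        System.out.println(expiresAt(Area.VOLATILE, upload));  // Optional[2023-07-08T10:00:00Z]
        System.out.println(expiresAt(Area.PERMANENT, upload)); // Optional.empty
    }
}
```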

Supported Operations

Inspired by the Builder pattern, the Storage Manager library implements many operations on remote files. The methods LFile and RFile refer, respectively, to the Local File (if needed) and the Remote File. The remote file can be identified by path or by id; the id is returned when the file is created. In each call chain, these methods are preceded by a method that identifies the operation, as in the examples below:

- put(boolean replace)

- puts a local file into a remote directory. If replace is true and the remote file already exists, it is replaced.

- If the remote directory does not exist, it is created automatically.

put(true).LFile("localPath").RFile("remotePath");
put(true).LFile("localPath").RFile(id);

- get()

- downloads a file from a remote path to a local directory

get().LFile("localPath").RFile("remotePath");
get().LFile("localPath").RFile(id);

- remove()

- removes a remote file

remove().RFile("remotePath");
remove().RFile(id);

- removeDir()

- removes a remote directory and all files in it. This operation is recursive.

removeDir().RDir("remoteDirPath");

- showDir()

- shows the content of a directory

showDir().RDir("remoteDirPath");

- lock()

- locks a remote file to prevent concurrent access. It returns an id string (the key) used to unlock the file. Note that every lock has an associated TTL (default: 180000 ms).

lock().RFile("remotePath");

- Alternatively, to download the file to "localFilePath" and lock the remote file stored on the backend server:

lock().LFile("localFilePath").RFile("remotePath");

- unlock(String key4Unlock)

- if the file is locked, unlocks the remote file.

unlock("key4Unlock").RFile("remotePath");

- Alternatively, to upload the new file stored in the local file system at "localFilePath" and unlock the file on the backend server:

unlock("key4Unlock").LFile("localFilePath").RFile("remotePath");

- getTTL()

- returns the TimeToLive associated with a remote file, if the file is currently locked

getTTL().RFile("remotePath");

- setTTL(int milliseconds)

- resets the TimeToLive of a remote file that is currently locked. This operation is allowed at most 5 times per file.

setTTL(180000).RFile("remotePath");

- getUrl()

- returns the smp url of a file stored in the repository

- this method is not compatible with private access mode

getUrl().RFile("remotePath");
getUrl().RFile(id);

- getHttpUrl()

- returns the http url of a file stored in the repository

- this method is not compatible with private access mode

getHttpUrl().RFile("remotePath");
getHttpUrl().RFile(id);

- copy()

- copies a remote file from a remote path to another remote path

- returns the id of the new file stored in the repository

- this method is available only in version 2.0.0

copy().RFile("remotePath1").RFile("remotePath2");

- moveFile()

- moves a remote file from a remote path to another remote path

- returns the id of the file stored in the repository

- this method is available only in version 2.0.0 or later

moveFile().from("remoteFilePath1").to("remoteFilePath2");

- moveDir()

- moves a remote directory from a remote directory path to another remote directory path

- this method is available only in version 2.0.0 or later

moveDir().from("remoteDirPath1").to("remoteDirPath2");

- link()

- links a remote file from a remote path to another remote path, like a hard link on a Unix system

- returns the id of the new linked file in the repository

- this method is available only in version 2.0.0

link().RFile("remotePath1").RFile("remotePath2");
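The Builder-inspired call shape used throughout this list (an operation method, then LFile, then RFile) can be illustrated with a toy in-memory client. This is a sketch under stated assumptions, not the storage-manager implementation: FluentSketch, PutStage, GetStage, and the maps standing in for the local file system and the remote store are all hypothetical, and rejecting an overwrite when replace is false is a choice made for the toy, since the text does not say what the library does in that case.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

// Toy, in-memory illustration of the operation(...).LFile(...).RFile(...) call shape.
// Hypothetical: this is NOT the storage-manager implementation.
public class FluentSketch {

    final Map<String, String> localFiles;                            // stand-in for the local file system
    private final Map<String, String> remoteFiles = new HashMap<>(); // remote path -> content
    private final Map<String, String> pathById = new HashMap<>();    // id -> remote path

    FluentSketch(Map<String, String> localFiles) {
        this.localFiles = localFiles;
    }

    // Step 1: the operation method only records what should happen.
    PutStage put(boolean replace) {
        return new PutStage(replace);
    }

    GetStage get() {
        return new GetStage();
    }

    // RFile accepts either an id returned by put or a remote path.
    private String resolve(String pathOrId) {
        return pathById.getOrDefault(pathOrId, pathOrId);
    }

    final class PutStage {
        private final boolean replace;
        private String localPath;

        private PutStage(boolean replace) {
            this.replace = replace;
        }

        // Step 2: LFile names the local side of the transfer.
        PutStage LFile(String localPath) {
            this.localPath = localPath;
            return this;
        }

        // Step 3: RFile names the remote side, executes the upload and returns an id.
        String RFile(String remotePath) {
            String content = localFiles.get(localPath);
            if (content == null) {
                throw new IllegalStateException("no local file " + localPath);
            }
            if (!replace && remoteFiles.containsKey(remotePath)) {
                // Toy choice: refuse to overwrite when replace is false.
                throw new IllegalStateException(remotePath + " already exists");
            }
            remoteFiles.put(remotePath, content);
            String id = UUID.randomUUID().toString();
            pathById.put(id, remotePath);
            return id;
        }
    }

    final class GetStage {
        private String localPath;

        GetStage LFile(String localPath) {
            this.localPath = localPath;
            return this;
        }

        // Terminal step: copies the remote content into the local stand-in.
        void RFile(String pathOrId) {
            String content = remoteFiles.get(resolve(pathOrId));
            if (content == null) {
                throw new IllegalStateException("no remote file " + pathOrId);
            }
            localFiles.put(localPath, content);
        }
    }

    public static void main(String[] args) {
        Map<String, String> local = new HashMap<>();
        local.put("/tmp/a.txt", "hello");
        FluentSketch client = new FluentSketch(local);
        String id = client.put(true).LFile("/tmp/a.txt").RFile("/img/a.txt");
        client.get().LFile("/tmp/b.txt").RFile(id);
        System.out.println(local.get("/tmp/b.txt")); // hello
    }
}
```

In this sketch the operation method only records intent and the terminal RFile call does the work, which is what lets the same LFile/RFile vocabulary serve both put and get.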

Getting-Started

Maven artifacts

If a client wants to use the storage-manager libraries, it should import the following dependencies in its pom:

<dependency>
  <groupId>org.gcube.contentmanagement</groupId>
  <artifactId>storage-manager-core</artifactId>
  <version>[2.0.0, 3.0.0)</version>
</dependency>
<dependency>
  <groupId>org.gcube.contentmanagement</groupId>
  <artifactId>storage-manager-wrapper</artifactId>
  <version>[2.0.0, 3.0.0)</version>
</dependency>

Alternatively, if a client wants to use the storage-manager outside the D4Science infrastructure, only the storage-manager-core dependency is needed:

<dependency>
  <groupId>org.gcube.contentmanagement</groupId>
  <artifactId>storage-manager-core</artifactId>
  <version>[2.0.0, 3.0.0)</version>
</dependency>

Usage examples

Initialising Storage Manager

The following code shows how to instantiate a client for performing remote operations on the storage repository:

// the context in which you need to work
GCUBEScope scope = GCUBEScope.getScope("/gcube");
// the access type can be private | shared | public
IClient client = new StorageClient("ServiceClass", "ServiceName", "owner", AccessType.SHARED, scope).getClient();

Upload a new file using the storage manager:

// upload a new file to the storage:
String id = client.put(true).LFile("/home/rcirillo/FilePerTest/pippo.jpg").RFile("/img/pippo.jpg");

Download a file using the storage manager:

client.get().LFile("/home/rcirillo/FilePerTest/pippo.jpg").RFile("/img/pippo.jpg");

Download a file by id using the storage manager:

client.get().LFile("/home/rcirillo/FilePerTest/pippo.jpg").RFile(id);

Download a Stream using the storage manager:

InputStream is = client.get().RFileAStream("/img/pippo.jpg");
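An InputStream obtained this way is an open resource, so it is presumably up to the caller to close it once consumed. This minimal Java sketch shows one way to do that with try-with-resources and Files.copy; the class StreamToFile and the method saveStream are hypothetical helpers, and a ByteArrayInputStream stands in for the stream returned by RFileAStream so that the example runs without a backend.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.io.InputStream;
import java.nio.charset.StandardCharsets;
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.StandardCopyOption;

// Hypothetical helper: consumes a remote-file stream and always closes it.
public class StreamToFile {

    // Copies the whole stream to target (overwriting it) and returns the number of bytes copied.
    static long saveStream(InputStream in, Path target) throws IOException {
        try (InputStream is = in) {
            return Files.copy(is, target, StandardCopyOption.REPLACE_EXISTING);
        }
    }

    public static void main(String[] args) throws IOException {
        // Stand-in for: InputStream is = client.get().RFileAStream("/img/pippo.jpg");
        InputStream is = new ByteArrayInputStream("remote bytes".getBytes(StandardCharsets.UTF_8));
        Path target = Files.createTempFile("pippo", ".jpg");
        long copied = saveStream(is, target);
        System.out.println(copied + " bytes written to " + target);
    }
}
```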

List the contents of a remote directory on the storage repository:

List<StorageObject> list=client.showDir().RDir("img");

The following code shows how to lock a file on the storage repository; the returned id is needed to unlock it:

String id=client.lock().RFile("/img/pluto.jpg");

The following code shows how to unlock a file on the storage repository, passing the id returned by lock():

client.unlock(id).RFile("/img/pluto.jpg");
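Because `lock()` returns an id that must be passed back to `unlock(id)`, the id behaves like a lock token: only the holder of the token can release the lock. The in-memory sketch below illustrates that token pattern. The class `LockTable` and its behaviour (refusing a second lock on the same path, refusing an unlock with the wrong token) are assumptions made for illustration, not documented Storage Manager semantics.

```java
import java.util.HashMap;
import java.util.Map;
import java.util.UUID;

/** Hypothetical lock table illustrating a lock-token pattern. */
final class LockTable {
    private final Map<String, String> tokenByPath = new HashMap<>();

    /** Locks remotePath and returns a fresh token; fails if it is already locked. */
    synchronized String lock(String remotePath) {
        if (tokenByPath.containsKey(remotePath)) {
            throw new IllegalStateException("already locked: " + remotePath);
        }
        String token = UUID.randomUUID().toString();
        tokenByPath.put(remotePath, token);
        return token;
    }

    /** Releases the lock only if the caller presents the token returned by lock(). */
    synchronized void unlock(String token, String remotePath) {
        String current = tokenByPath.get(remotePath);
        if (current == null || !current.equals(token)) {
            throw new IllegalStateException("wrong or missing lock token for " + remotePath);
        }
        tokenByPath.remove(remotePath);
    }

    synchronized boolean isLocked(String remotePath) {
        return tokenByPath.containsKey(remotePath);
    }

    public static void main(String[] args) {
        LockTable locks = new LockTable();
        String id = locks.lock("/img/pluto.jpg");    // compare: client.lock().RFile(...)
        System.out.println("locked: " + locks.isLocked("/img/pluto.jpg"));
        locks.unlock(id, "/img/pluto.jpg");          // compare: client.unlock(id).RFile(...)
        System.out.println("locked: " + locks.isLocked("/img/pluto.jpg"));
    }
}
```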

Remove a file from the storage repository:

client.remove().RFile("/img/pluto.jpg");

Remove a file by Id:

client.remove().RFile(id);

Remove a directory from the storage repository:

client.removeDir().RDir("/img");

Move a directory on the storage repository from a remote path to another remote path:

client.moveDir().from("/img/test1").to("/img/test2");

Move a file from a remote path to another remote path:

client.moveFile().from("/img/test1.xml").to("/img/test2.xml");
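On a flat key-value backend, moving a directory amounts to rewriting the path prefix of every object stored under it, while moving a file rewrites a single key. The sketch below shows that idea over an in-memory map; it is an assumption about how such moves could work, not a description of how storage-manager-core implements `moveDir` or `moveFile`, and `PathStore` is a hypothetical name. The separator check keeps `/img/test10/...` from being caught by a move of `/img/test1`.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;

/** Hypothetical flat store keyed by full remote path. */
final class PathStore {
    private final Map<String, byte[]> objects = new TreeMap<>();

    void put(String path, byte[] data) { objects.put(path, data); }

    boolean exists(String path) { return objects.containsKey(path); }

    /** Moves a single object, like moveFile().from(src).to(dst). */
    void moveFile(String from, String to) {
        byte[] data = objects.remove(from);
        if (data == null) {
            throw new IllegalArgumentException("no such file: " + from);
        }
        objects.put(to, data);
    }

    /** Moves every object under fromDir, like moveDir().from(fromDir).to(toDir). */
    void moveDir(String fromDir, String toDir) {
        String prefix = fromDir.endsWith("/") ? fromDir : fromDir + "/";
        String target = toDir.endsWith("/") ? toDir : toDir + "/";
        // Collect the keys first: modifying the map while iterating it would fail.
        List<String> toMove = new ArrayList<>();
        for (String path : objects.keySet()) {
            if (path.startsWith(prefix)) {
                toMove.add(path);
            }
        }
        for (String path : toMove) {
            byte[] data = objects.remove(path);
            objects.put(target + path.substring(prefix.length()), data);
        }
    }

    public static void main(String[] args) {
        PathStore store = new PathStore();
        store.put("/img/test1/a.jpg", new byte[] {1});
        store.put("/img/test10/b.jpg", new byte[] {2});
        store.moveDir("/img/test1", "/img/test2");
        System.out.println(store.objects.keySet());
    }
}
```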

Scenario examples

- Scenario 1

In this example a GCUBE service s1 (identified by ServiceClass: sc, ServiceName: sn), running in the scope /gcube, uploads the file /home/xxx/FilePerTest/test.jpg in shared mode to the VOLATILE area. A second service, s2 (identified by ServiceClass: sc2, ServiceName: sn2), running in the same scope but on another node, downloads it.

The following code shows an upload operation example:

String scope = "/gcube";
ScopeProvider.instance.set(scope);
IClient client = new StorageClient("sc", "sn", "s1", AccessType.SHARED, MemoryType.VOLATILE).getClient();
String id = client.put(true).LFile("/home/xxx/FilePerTest/test.jpg").RFile("/img/test.jpg");

The following code shows the download operation:

String scope = "/gcube";
ScopeProvider.instance.set(scope);
IClient client = new StorageClient("sc2", "sn2", "s2", AccessType.SHARED, MemoryType.VOLATILE).getClient();
String id = client.get().LFile("/home/yyy/FilePerTest/test.jpg").RFile("/img/test.jpg");

The file is downloaded as test.jpg into the local directory /home/yyy/FilePerTest/ on the node where s2 is running.

- Scenario 2

In this example a GCUBE service s1 (identified by ServiceClass: sc, ServiceName: sn), running in the scope /gcube, uploads the file /home/xxx/FilePerTest/test1.jpg in shared mode to the VOLATILE area. It then retrieves the URL of the uploaded file with the getUrl method and passes it to another service, s2, running on another node. Using that URL, s2 retrieves an InputStream of the file uploaded by s1. Note that the getUrl() method is not compatible with the private access mode:

String scope = "/gcube";
ScopeProvider.instance.set(scope);
IClient client = new StorageClient("sc", "sn", "s1", AccessType.SHARED, MemoryType.VOLATILE).getClient();
String id = client.put(true).LFile("/home/xxx/FilePerTest/test1.jpg").RFile("/img/test1.jpg");
String url = client.getUrl().RFile("/img/test1.jpg");

The s2 service retrieves the InputStream of the file using the URL returned by getUrl(), i.e. the value of the "url" variable (the smp URL below is an example of such a value):

// enable the smp:// URL protocol
Handler.activateProtocol();
// example of a URL returned by getUrl()
URL smsHome = new URL("smp://img/test1.jpg5ezvFfBOLqbElsh5xYVgBTxt8lwt4fl");
URLConnection uc = smsHome.openConnection();
InputStream is = uc.getInputStream();
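`Handler.activateProtocol()` is needed because the JDK does not know the `smp` scheme out of the box: constructing a `URL` for an unknown protocol throws a `MalformedURLException` unless a `URLStreamHandler` for that scheme is available. Assuming that is what `activateProtocol()` arranges, the sketch below shows the standard JDK mechanism with a toy handler that serves bytes from an in-memory map instead of contacting the storage backend. `ToySmpHandler` and the map contents are hypothetical.

```java
import java.io.ByteArrayInputStream;
import java.io.FileNotFoundException;
import java.io.IOException;
import java.io.InputStream;
import java.net.URL;
import java.net.URLConnection;
import java.net.URLStreamHandler;
import java.nio.charset.StandardCharsets;
import java.util.Map;

/** Toy handler for the "smp" scheme that serves files from an in-memory map. */
final class ToySmpHandler extends URLStreamHandler {
    private final Map<String, byte[]> files;

    ToySmpHandler(Map<String, byte[]> files) { this.files = files; }

    @Override
    protected URLConnection openConnection(URL u) {
        // For "smp://img/test1.jpg" the host is "img" and the path is "/test1.jpg".
        String key = u.getHost() + u.getPath();
        return new URLConnection(u) {
            @Override
            public void connect() { connected = true; }

            @Override
            public InputStream getInputStream() throws IOException {
                byte[] data = files.get(key);
                if (data == null) {
                    throw new FileNotFoundException(key);
                }
                return new ByteArrayInputStream(data);
            }
        };
    }

    public static void main(String[] args) throws IOException {
        ToySmpHandler handler = new ToySmpHandler(
                Map.of("img/test1.jpg", "hello".getBytes(StandardCharsets.UTF_8)));
        // Passing the handler explicitly avoids registering a JVM-wide handler factory.
        URL url = new URL(null, "smp://img/test1.jpg", handler);
        try (InputStream is = url.openConnection().getInputStream()) {
            System.out.println(new String(is.readAllBytes(), StandardCharsets.UTF_8));
        }
    }
}
```

Passing the handler to the `URL` constructor keeps the example local; a library that wants plain `new URL("smp://...")` to work everywhere would instead register the handler globally.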

- Scenario 3

In this example a GCUBE service s1 (identified by ServiceClass: sc, ServiceName: sn), running in the scope /gcube, uploads the file /home/xxx/FilePerTest/test2.jpg in shared mode to the VOLATILE area. It then retrieves the HTTP URL of the uploaded file with the getHttpUrl method and passes it to another service, s2, running on another node. Using that URL, s2 retrieves an InputStream of the file uploaded by s1.

String scope = "/gcube";
ScopeProvider.instance.set(scope);
IClient client = new StorageClient("sc", "sn", "s1", AccessType.SHARED, MemoryType.VOLATILE).getClient();
String id = client.put(true).LFile("/home/xxx/FilePerTest/test2.jpg").RFile("/img/test2.jpg");
String httpUrl = client.getHttpUrl().RFile("/img/test2.jpg");

The s2 service retrieves the InputStream of the file using the URL stored in the "httpUrl" variable:

URL smsHome = new URL(httpUrl);
URLConnection uc = smsHome.openConnection();
InputStream is = uc.getInputStream();
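Because `getHttpUrl()` yields an ordinary HTTP URL, s2 needs nothing beyond the JDK to read it. So that the example runs offline, the sketch below starts a throwaway local server with the JDK's `com.sun.net.httpserver.HttpServer` as a stand-in for the storage HTTP gateway (an assumption for illustration only), then reads the body through `URLConnection` as in the snippet above, closing the stream with try-with-resources. The class `HttpUrlDownload` and its methods are hypothetical.

```java
import com.sun.net.httpserver.HttpServer;
import java.io.IOException;
import java.io.InputStream;
import java.io.OutputStream;
import java.net.InetSocketAddress;
import java.net.URI;
import java.net.URLConnection;
import java.nio.charset.StandardCharsets;

final class HttpUrlDownload {

    /** Reads the whole body behind httpUrl, as s2 would with the value from getHttpUrl(). */
    static byte[] fetch(String httpUrl) throws IOException {
        URLConnection uc = URI.create(httpUrl).toURL().openConnection();
        try (InputStream is = uc.getInputStream()) {
            return is.readAllBytes();
        }
    }

    /** Starts a throwaway local server standing in for the storage HTTP gateway. */
    static HttpServer startStubServer(byte[] body) throws IOException {
        HttpServer server = HttpServer.create(new InetSocketAddress("127.0.0.1", 0), 0);
        server.createContext("/", exchange -> {
            exchange.sendResponseHeaders(200, body.length);
            try (OutputStream os = exchange.getResponseBody()) {
                os.write(body);
            }
        });
        server.start();
        return server;
    }

    public static void main(String[] args) throws IOException {
        byte[] body = "test2.jpg contents".getBytes(StandardCharsets.UTF_8);
        HttpServer server = startStubServer(body);
        try {
            String httpUrl = "http://127.0.0.1:" + server.getAddress().getPort() + "/some-id";
            System.out.println(fetch(httpUrl).length + " bytes downloaded");
        } finally {
            server.stop(0);
        }
    }
}
```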

Alternatively, the httpUrl can also be opened in a web browser. This is an example of a URL generated by the getHttpUrl method:

http://data-d.d4science.org/T1VWSC9Kc0Q2ZlJzbHA0d1kvWjZFdmRjbkE1M1pLT09HbWJQNStIS0N6Yz0

Deployment

To use the storage manager in a given VRE, a service endpoint resource specifying the address of the server(s) used for storing data is needed.

The following is an example of such a service endpoint:

<Resource version="0.4.x">
  <ID>46083b40-a5e3-11e2-a210-9a433747c17a</ID>
  <Type>RuntimeResource</Type>
  <Scopes>
    <Scope>/gcube/devsec</Scope>
    <Scope>/gcube</Scope>
    <Scope>/gcube/devsec/devVRE</Scope>
  </Scopes>
  <Profile>
    <Category>DataStorage</Category>
    <Name>StorageManager</Name>
    <Description>Backend description for StorageManager library</Description>
    <Platform>
      <Name>storage-manager</Name>
      <Version>2</Version>
      <MinorVersion>0</MinorVersion>
      <RevisionVersion>0</RevisionVersion>
      <BuildVersion>0</BuildVersion>
    </Platform>
    <RunTime>
      <HostedOn>d4science.org</HostedOn>
      <GHN UniqueID="" />
      <Status>READY</Status>
    </RunTime>
    <AccessPoint>
      <Description>MongoDB server</Description>
      <Interface>
        <Endpoint EntryName="server1">146.48.123.01</Endpoint>
      </Interface>
      <AccessData>
        <Username />
        <Password>6vW1u92cpdgHzYAgIurn9w==</Password>
      </AccessData>
      <Properties>
        <Property>
          <Name>host</Name>
          <Value encrypted="false">nodeXX.d.d4science.research-infrastructures.eu</Value>
        </Property>
        <Property>
          <Name>priority</Name>
          <Value encrypted="false">default</Value>
        </Property>
        <Property>
          <Name>type</Name>
          <Value encrypted="false">MongoDB</Value>
        </Property>
      </Properties>
    </AccessPoint>
    <AccessPoint>
      <Description>MongoDB server</Description>
      <Interface>
        <Endpoint EntryName="server2">146.48.123.02</Endpoint>
      </Interface>
      <AccessData>
        <Username />
        <Password>6vW1u92cpdgHzYAgIurn9w==</Password>
      </AccessData>
      <Properties>
        <Property>
          <Name>host</Name>
          <Value encrypted="false">nodeYY.d.d4science.research-infrastructures.eu</Value>
        </Property>
        <Property>
          <Name>priority</Name>
          <Value encrypted="false">default</Value>
        </Property>
        <Property>
          <Name>type</Name>
          <Value encrypted="false">MongoDB</Value>
        </Property>
      </Properties>
    </AccessPoint>
  </Profile>
</Resource>

The "Endpoint" tag specifies the IP address of a server. A resource can list one or more servers, each in its own AccessPoint; in this example there are two. The EntryName attribute of each Endpoint identifies the server: the first is "server1", the second "server2", and so on. The property named "type" specifies the kind of backend, in this case a MongoDB server.
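Within the D4Science infrastructure this resource is discovered by the Storage Manager Wrapper through the Information System, so clients do not normally read it themselves. Purely to illustrate how the fields above fit together, the sketch below parses a trimmed-down resource of the same shape with the JDK's DOM parser and collects, for each AccessPoint, the EntryName, the endpoint address and the "type" property. The class `EndpointReader` is hypothetical, and the sample uses placeholder addresses.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

final class EndpointReader {

    /** One backend server as described by an AccessPoint element. */
    static final class Backend {
        final String entryName;
        final String address;
        final String type;

        Backend(String entryName, String address, String type) {
            this.entryName = entryName;
            this.address = address;
            this.type = type;
        }

        @Override
        public String toString() {
            return entryName + " -> " + address + " (" + type + ")";
        }
    }

    static List<Backend> read(String resourceXml) throws Exception {
        Document doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                .parse(new ByteArrayInputStream(resourceXml.getBytes(StandardCharsets.UTF_8)));
        List<Backend> result = new ArrayList<>();
        NodeList accessPoints = doc.getElementsByTagName("AccessPoint");
        for (int i = 0; i < accessPoints.getLength(); i++) {
            Element ap = (Element) accessPoints.item(i);
            Element endpoint = (Element) ap.getElementsByTagName("Endpoint").item(0);
            result.add(new Backend(endpoint.getAttribute("EntryName"),
                    endpoint.getTextContent().trim(), property(ap, "type")));
        }
        return result;
    }

    /** Returns the Value of the Property whose Name matches, or null if absent. */
    private static String property(Element accessPoint, String name) {
        NodeList props = accessPoint.getElementsByTagName("Property");
        for (int i = 0; i < props.getLength(); i++) {
            Element p = (Element) props.item(i);
            String n = p.getElementsByTagName("Name").item(0).getTextContent().trim();
            if (n.equals(name)) {
                return p.getElementsByTagName("Value").item(0).getTextContent().trim();
            }
        }
        return null;
    }

    public static void main(String[] args) throws Exception {
        String xml = "<Resource><Profile>"
                + "<AccessPoint><Interface><Endpoint EntryName=\"server1\">10.0.0.1</Endpoint></Interface>"
                + "<Properties><Property><Name>type</Name><Value>MongoDB</Value></Property></Properties>"
                + "</AccessPoint></Profile></Resource>";
        for (Backend b : read(xml)) {
            System.out.println(b);
        }
    }
}
```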