Difference between revisions of "Archive Import Service"

Revision as of 17:55, 26 May 2009

Aim and Scope of Component

The Archive Import Service (AIS) is dedicated to the "batch" import of resources which are external to the gCube infrastructure into the infrastructure itself. The term "importing" refers to the description of such resources and their logical relationships (e.g. the association between an image and a file containing metadata referring to it) inside the Information Organization stack of services. While the AIS is not strictly necessary for the creation and management of collections of resources in gCube, it makes the creation of large collections, and their automated maintenance, possible in practice.

Logical Architecture

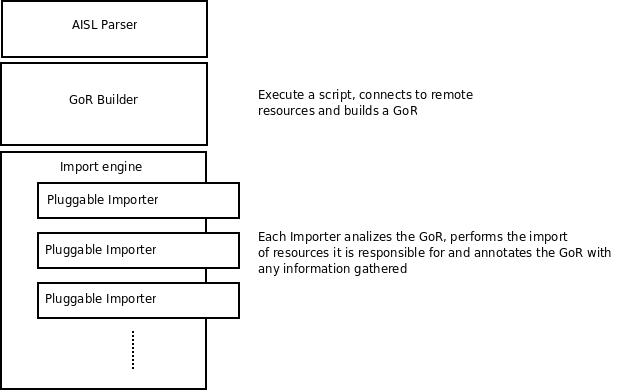

The task of importing a set of external resources is performed by building a description of the resources to import, in the form of a graph labelled on nodes and arcs with key-value pairs, called a graph of resources (GoR), and then processing this description accomplishing all steps needed to import the resources represented therein. The GoR is based on a custom data model, similar to the Information Object Model, and is built following a procedural description of how to build it expressed in a scripting language, called the Archive Import Service Language (AISL)'. Full details about this language and how to write an import script are given in the Administrator's guide. The actual import task is performed by a chain of pluggable software modules, called "importers", that in turn process the graph and can manipulate it, annotating it with additional knowledge produced during the import. Each importer is dedicated to import resources interacting with a specific subpart of the Information Organization set of Services. For instance, the metadata importer is responsible for the import of metadata, and it handles their import by interacting with the Metadata Management Service. The precise way in which importers handle the import task, and in particular how they define a specific description of the resources they need to consume inside a GoR is left to the single importers. This, and the pluggable nature of importers, makes possible to enable the import of new kind of content resources which might be defined in the infrastructure in the future.

The logical architecture of the AIS can be then depicted as follows:

Import state and incremental import

During the import of some resources, the corresponding GoR is kept updated with information regarding the actual resources created, such as their OIDs. The Graph of Resources is stored persistently by the service, so that a subsequent execution of the same import script is aware of the status of the import and can perform only the differential operations needed to keep the resources up to date. While this solution involves a partial duplication of information inside the infrastructure, it has been chosen because it completely decouples the AIS from other gCube services, which are thus not forced to expose in their interfaces the additional information needed for incremental import.
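The differential behaviour described above can be illustrated with a minimal sketch. Everything in it is an assumption made for illustration: the names IncrementalImportSketch, StoredNode and Action, the use of a content hash to detect changes, and the in-memory map standing in for the persisted GoR are hypothetical and are not taken from the AIS code base.

```java
import java.util.LinkedHashMap;
import java.util.Map;

// Hypothetical sketch: none of these names come from the AIS code base.
class IncrementalImportSketch {

    enum Action { CREATE, UPDATE, SKIP }

    // What a stored GoR node might remember about an already imported resource.
    static final class StoredNode {
        final String oid;          // identifier recorded at import time
        final String contentHash;  // assumed change-detection key

        StoredNode(String oid, String contentHash) {
            this.oid = oid;
            this.contentHash = contentHash;
        }
    }

    // Compares the freshly built graph (resource key -> content hash)
    // with the stored state and decides what each resource needs.
    static Map<String, Action> plan(Map<String, StoredNode> stored,
                                    Map<String, String> fresh) {
        Map<String, Action> actions = new LinkedHashMap<>();
        for (Map.Entry<String, String> e : fresh.entrySet()) {
            StoredNode previous = stored.get(e.getKey());
            if (previous == null) {
                actions.put(e.getKey(), Action.CREATE);   // never imported
            } else if (!previous.contentHash.equals(e.getValue())) {
                actions.put(e.getKey(), Action.UPDATE);   // changed since last run
            } else {
                actions.put(e.getKey(), Action.SKIP);     // already up to date
            }
        }
        return actions;
    }

    public static void main(String[] args) {
        Map<String, StoredNode> stored = new LinkedHashMap<>();
        stored.put("img-1", new StoredNode("oid-17", "aaa"));
        stored.put("img-2", new StoredNode("oid-18", "bbb"));

        Map<String, String> fresh = new LinkedHashMap<>();
        fresh.put("img-1", "aaa");
        fresh.put("img-2", "ccc");
        fresh.put("img-3", "ddd");

        // prints {img-1=SKIP, img-2=UPDATE, img-3=CREATE}
        System.out.println(plan(stored, fresh));
    }
}
```

Because the decision only needs the stored graph and the freshly built one, no other service has to be queried, which is the decoupling the paragraph above refers to.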

Service Implementation

Note: this section refers to the architecture of the AIS as a stateful gCube service. The component is, however, currently available only as a standalone tool. Please see the section Current Limitations and Known Issues for further details.

The AIS is a stateful gCube service that follows the factory approach. A stateless factory porttype, the ArchiveImportService porttype, creates stateful instances of the ArchiveImportTask porttype. Each import task is responsible for the execution of a single import script. It performs the import, internally maintains the status of the import (in the form of an annotated graph of resources), and provides notifications about the status of the task. The resources it is responsible for are kept up to date by re-executing the import script at suitable time intervals. Besides acting as a factory for import tasks, the ArchiveImportService porttype also offers functionality for managing import scripts, which are generic resources inside the infrastructure: it allows publishing new scripts, listing and editing existing scripts, and validating them syntactically. The semantics of the methods offered by the two porttypes are described below.

Archive Import Service Porttype

- listImportScripts(): this method returns a list of all import scripts currently available in the VRE (as generic resources);

- getImportScript(String importScriptIdentifier): this method returns the import script corresponding to the given identifier;

- saveImportScript(String importScriptIdentifier, String script, boolean isTemplate): this method saves the given import script under the given identifier, marking it as a template if isTemplate is true;

- validateImportScript(String importScriptIdentifier): this method performs syntactic validation of the given import script;

- getTask(String importScriptIdentifier, ImportParameters pars): this method gets an instance of the ArchiveImportTask service dedicated to a given script. If the script is a template, the parameters given in the signature are the actual parameters to substitute for its formal parameters.

Archive Import Task Porttype

- start(ImportOptions options): this method starts the import task with the given options. Options include a run mode (validate, build, simulation import, import);

- stop(ImportOptions options): this method stops the import task;

- getErrorDetails(): this method returns detailed information about errors that occurred during the execution of the import task. Notice that this is in addition to the plain error notification given by the service.
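The factory approach behind the two porttypes can be sketched in a few lines of plain Java. This is an in-memory illustration only, not the gCube API: the class names, the simplified method signatures (no ImportParameters or ImportOptions types), the local script store standing in for generic resources, and in particular the ${name} syntax for formal parameters are all assumptions.

```java
import java.util.HashMap;
import java.util.Map;

// Hypothetical, in-memory illustration of the factory approach; not the gCube API.
class FactorySketch {

    // Stateful side: one instance per import script execution.
    static final class ImportTask {
        private final String script;
        private boolean running;

        ImportTask(String script) { this.script = script; }

        void start() { running = true; }
        void stop() { running = false; }
        boolean isRunning() { return running; }
        String getScript() { return script; }
    }

    // Factory side: manages scripts and hands out task instances.
    // The map stands in for the generic resources of the infrastructure.
    static final class ImportServiceFactory {
        private final Map<String, String> scripts = new HashMap<>();

        void saveImportScript(String id, String script) { scripts.put(id, script); }

        String getImportScript(String id) { return scripts.get(id); }

        // Substitutes actual parameters for formal ones written as ${name}
        // (assumed syntax), then creates a task bound to the resulting script.
        ImportTask getTask(String id, Map<String, String> pars) {
            String script = scripts.get(id);
            if (script == null) {
                throw new IllegalArgumentException("unknown script: " + id);
            }
            for (Map.Entry<String, String> p : pars.entrySet()) {
                script = script.replace("${" + p.getKey() + "}", p.getValue());
            }
            return new ImportTask(script);
        }
    }

    public static void main(String[] args) {
        ImportServiceFactory factory = new ImportServiceFactory();
        factory.saveImportScript("photos", "import from ${source}");

        Map<String, String> pars = new HashMap<>();
        pars.put("source", "ftp://example.org/photos");

        ImportTask task = factory.getTask("photos", pars);
        task.start();
        System.out.println(task.getScript() + " running=" + task.isRunning());
    }
}
```

Each call to getTask yields an independent task object, so several imports of the same template with different parameters can coexist without sharing state.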

Import Task Status Notification

The ArchiveImportTask service maintains part of its state as a WS-resource. The properties of this resource are used to notify interested services about:

- the current phase of the import (e.g. graph building, importing etc.);

- the current number of objects created in the GoR;

- the current number of objects imported successfully;

- the current number of objects whose import failed.
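As a rough illustration of how such properties could drive notification, the sketch below replaces the WS-resource machinery with a plain in-process observer. The class name TaskStatusSketch and the property names (phase, objectsCreated, objectsImported, objectsFailed) are hypothetical; the real resource property names are not given in this page.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.function.BiConsumer;

// Hypothetical in-process stand-in for the task's notifying properties.
class TaskStatusSketch {

    private String phase = "idle";
    private int created;
    private int imported;
    private int failed;

    // Each listener receives (property name, new value).
    private final List<BiConsumer<String, Object>> listeners = new ArrayList<>();

    void subscribe(BiConsumer<String, Object> listener) {
        listeners.add(listener);
    }

    private void publish(String property, Object value) {
        for (BiConsumer<String, Object> listener : listeners) {
            listener.accept(property, value);
        }
    }

    void setPhase(String newPhase) {
        phase = newPhase;
        publish("phase", phase);
    }

    void objectCreated()  { created++;  publish("objectsCreated", created); }
    void objectImported() { imported++; publish("objectsImported", imported); }
    void objectFailed()   { failed++;   publish("objectsFailed", failed); }

    public static void main(String[] args) {
        TaskStatusSketch status = new TaskStatusSketch();
        status.subscribe((name, value) -> System.out.println(name + " -> " + value));

        status.setPhase("graph building");
        status.objectCreated();
        status.setPhase("importing");
        status.objectImported();
        status.objectFailed();
    }
}
```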

Extensibility Features

The functionality of the Archive Import Service can be extended in three main ways. It is possible to define new functions for the AISL, to plug in software modules that access external resources through additional network protocols, and to plug in importers that interact with new gCube content-related components.

Defining Functions for the AISL

AISL is a scripting language intended to create graphs of resources for subsequent import. Its type system and grammar, together with usage examples, are fully described in the Administrator's guide. Its main features are a tight integration with the AIS, in the sense that the creation of model objects is a first-class construct of the language, and the ability to handle frequent import tasks, such as accessing files at remote locations, in a way that is as transparent to the user as possible. Besides avoiding the complexity of full-fledged programming languages like Java, its limited expressivity, tailored to import tasks only, prevents security issues related to the execution of code in remote systems. The language does not allow the definition of new functions or object types inside a program, but it can easily be extended with new functionality by defining new functions as plugin modules. Adding a new function takes two steps:

- Creating a new Java class implementing the AISLFunction interface. This is most easily done by subclassing the AbstractAISLFunction class. See below for further details.

- Registering the function in the "Functions" class. This step will be removed in later releases, which will implement automatic plugin-like registration.

The AISLFunction interface provides a way to specify a number of signatures (number and type of arguments and return type) for an AISL function. It is designed to allow for overloaded functions. The number and types of the parameters are used to perform static checks on invocations of the function. The evaluate method provides the main code to be evaluated when the function is invoked in an AISL script. In the case of overloaded functions, this method should redirect to the appropriate methods based on the number and types of the arguments.

    public interface AISLFunction {

        public String getName();

        public void setFunctionDefinitions(FunctionDefinition... defs);

        public FunctionDefinition[] getFunctionDefinitions();

        public Object evaluate(Object[] args) throws Exception;

        public interface FunctionDefinition {
            Class<?>[] getArgTypes();
            Class<?> getReturnType();
        }
    }

A partial implementation of the AISLFunction interface is provided by the AbstractAISLFunction class. A developer can simply extend this class, provide an appropriate constructor and implement the appropriate evaluate method. An example is given below. The function match returns a boolean value indicating whether a string value matches a given regular expression pattern. Its signature is thus:

    boolean match(string str, string pattern)

The class Match below is an implementation of this function. In the constructor, the function declares its name and its parameters. The method evaluate(Object[] args), which must be implemented to comply with the AISLFunction interface, casts the parameters and then redirects the evaluation to another evaluate method. (Note that in this case, as the function is not overloaded, there is no actual need for a separate evaluate method; it has been added here for clarity.)

    public class Match extends AbstractAISLFunction {

        public Match() {
            setName("match");
            setFunctionDefinitions(
                new FunctionDefinitionImpl(Boolean.class, String.class, String.class)
            );
        }

        public Object evaluate(Object[] args) throws Exception {
            return evaluate((String) args[0], (String) args[1]);
        }

        private Boolean evaluate(String str, String pattern) {
            return str.matches(pattern);
        }
    }
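For an overloaded function, the redirection inside evaluate(Object[] args) might look like the sketch below. To keep the example compilable on its own, it declares minimal stand-ins for FunctionDefinition, FunctionDefinitionImpl and AbstractAISLFunction; the real AIS classes may differ. The substring function itself, the mapping of AISL integers to java.lang.Integer, and the constructor order of FunctionDefinitionImpl (return type first, as the Match example suggests) are assumptions.

```java
// Minimal stand-ins so the sketch compiles on its own; the real AIS classes may differ.
class OverloadSketch {

    interface FunctionDefinition {
        Class<?>[] getArgTypes();
        Class<?> getReturnType();
    }

    // Assumed argument order: return type first, then the argument types.
    static final class FunctionDefinitionImpl implements FunctionDefinition {
        private final Class<?> returnType;
        private final Class<?>[] argTypes;

        FunctionDefinitionImpl(Class<?> returnType, Class<?>... argTypes) {
            this.returnType = returnType;
            this.argTypes = argTypes;
        }

        public Class<?>[] getArgTypes() { return argTypes; }
        public Class<?> getReturnType() { return returnType; }
    }

    abstract static class AbstractAISLFunction {
        private String name;
        private FunctionDefinition[] definitions = new FunctionDefinition[0];

        protected void setName(String name) { this.name = name; }
        public String getName() { return name; }
        public void setFunctionDefinitions(FunctionDefinition... defs) { definitions = defs; }
        public FunctionDefinition[] getFunctionDefinitions() { return definitions; }
        public abstract Object evaluate(Object[] args) throws Exception;
    }

    // Hypothetical overloaded function:
    //   string substring(string str, integer begin)
    //   string substring(string str, integer begin, integer end)
    static final class Substring extends AbstractAISLFunction {

        Substring() {
            setName("substring");
            setFunctionDefinitions(
                new FunctionDefinitionImpl(String.class, String.class, Integer.class),
                new FunctionDefinitionImpl(String.class, String.class, Integer.class, Integer.class));
        }

        // Dispatches on the number of arguments to the matching typed method.
        @Override
        public Object evaluate(Object[] args) {
            switch (args.length) {
                case 2:
                    return evaluate((String) args[0], (Integer) args[1]);
                case 3:
                    return evaluate((String) args[0], (Integer) args[1], (Integer) args[2]);
                default:
                    throw new IllegalArgumentException("substring expects 2 or 3 arguments");
            }
        }

        private String evaluate(String str, int begin) {
            return str.substring(begin);
        }

        private String evaluate(String str, int begin, int end) {
            return str.substring(begin, end);
        }
    }

    public static void main(String[] args) {
        Substring f = new Substring();
        System.out.println(f.evaluate(new Object[] {"archive", 2}));     // prints "chive"
        System.out.println(f.evaluate(new Object[] {"archive", 0, 4}));  // prints "arch"
    }
}
```

Here the two signatures differ in the number of arguments, so dispatching on args.length is enough; overloads with the same arity would need instanceof checks on the individual arguments instead.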

Writing RemoteFile Adapters

When writing AISL scripts, the details of the interaction with remote resources available on the network are hidden from the user and encapsulated in the facilities of a native data type of the language, the file type. The intention is to shield the user (almost) completely from such details, and to present resources available through heterogeneous protocols via a homogeneous access mechanism.

A network resource is made available as a file by invoking the getFile() function of the language. The function takes as argument a locator, which is a string (and optionally some parameters needed for authentication), and determines, based on the form of the locator, which protocol to use and how to access the resource. To avoid excessive resource consumption, remote resources are not downloaded straight away. Instead, a file object acts as a placeholder, and content is made available on demand. Other properties of the resource, such as its length, last modification date or hash signature, are instead gathered (and possibly cached to limit network usage). Of course, the availability of this information depends on the capabilities offered by the network protocol at hand. Once downloaded, content is also cached.

To make a new protocol available to the AIS, it is sufficient to implement the RemoteFile interface and register the class with the RemoteFileFactory class. Some network protocols allow for a hierarchical, directory-style structuring of resources, meaning that it is possible to get the list of children of a given resource. For such hierarchical resources, it is possible to implement the HierarchicalRemoteFile interface. If basic caching capabilities are acceptable, it is possible (but not mandatory) to extend the AbstractRemoteFile and AbstractHierarchicalRemoteFile classes instead. These classes already provide a standard implementation for a number of the methods defined by the corresponding interfaces.
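The placeholder-plus-cache behaviour can be sketched as follows. The methods of the actual RemoteFile interface are not listed in this page, so the interface below is a hypothetical shape invented for the example, as are the class LazyRemoteFile, the demo:// locator and the choice to pass the length in at construction time.

```java
import java.nio.charset.StandardCharsets;
import java.util.function.Supplier;

// Hypothetical sketch of on-demand download with caching; not the AIS RemoteFile API.
class RemoteFileSketch {

    // Assumed shape of a remote file abstraction.
    interface RemoteFile {
        String getLocator();
        long getLength();
        byte[] getContent();
    }

    // Placeholder object: content is fetched on first access and then cached.
    static final class LazyRemoteFile implements RemoteFile {
        private final String locator;
        private final long length;
        private final Supplier<byte[]> downloader; // protocol-specific transfer
        private byte[] cachedContent;

        LazyRemoteFile(String locator, long length, Supplier<byte[]> downloader) {
            this.locator = locator;
            this.length = length;
            this.downloader = downloader;
        }

        public String getLocator() { return locator; }

        public long getLength() { return length; }

        public synchronized byte[] getContent() {
            if (cachedContent == null) {
                cachedContent = downloader.get(); // only the first call hits the network
            }
            return cachedContent;
        }
    }

    public static void main(String[] args) {
        int[] downloads = {0};
        RemoteFile file = new LazyRemoteFile("demo://host/readme.txt", 5, () -> {
            downloads[0]++;
            return "hello".getBytes(StandardCharsets.UTF_8);
        });

        System.out.println("downloads before access: " + downloads[0]);
        file.getContent();
        file.getContent();
        System.out.println("downloads after two accesses: " + downloads[0]);
    }
}
```

A protocol adapter written this way only has to supply the downloader; the caching logic is the kind of shared behaviour that an abstract base class can provide once for all protocols.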

Writing Importers

Importers are software modules that process the graph of resources and decide about import actions, interfacing with some gCube component for content management, such as the Collection Management Service, the Content Management Service and the Metadata Management Service. Each importer is responsible for treating a specific kind of resource (e.g. metadata), and is essentially the bridge between the Archive Import Service and the services of the Information Organization stack responsible for managing that kind of resource. The precise way in which the importer performs the import thus depends on the specific subsystem the importer interacts with. Similarly, different importers need different information about the resources to import. For instance, to import a document it is necessary to have its content or a URL at which the content can be accessed. To create a metadata collection, it is necessary to specify some properties, such as the location of the schema of the objects contained in the collection. The Archive Import Service already includes importers dedicated to the creation of content and metadata collections and to the creation of complex documents and metadata objects. Thus, creating a new importer is only needed if a new kind of content is defined over the InfoObjectModel and facilities for its manipulation are offered by some new gCube component.

Defining Importer-Specific Types

Writing a new importer requires knowing how to interact with that component, and how to manipulate a Graph of Resources. The data model handled by AISL features three main types of constructs:

- Resource

- Relationship

- Collection

A graph of resources is a graph composed of nodes (resources) and edges (relationships). Furthermore, nodes (resources) can be organized into sets (collections), which can in turn be connected using relationships. All constructs of the model can be annotated with properties, which are name/value pairs. The constructs above correspond internally to the three classes Resource, ResourceRelationship and ResourceCollection. In order to constrain the kind of properties that the model objects it manipulates must have, an importer must define a set of subtypes of the model object types. This can be done by subclassing the above-mentioned classes. Which subtypes to implement, and the precise semantics of their properties, depend on the specific importer. For instance, the MetadataCollection importer declares only one new type, the collection::metadata type, which specializes the type collection to allow for specific metadata collection-related properties. Notice that importers can also manipulate objects belonging to subclasses defined by other importers. For instance, the MetadataCollection importer needs to access properties of the ContentCollection subtype, defined by the ContentCollection importer, in order to be able to create metadata collections.

In order to define their own subtypes, importers must:

- Subclass the basic types as needed.

- Register the classes in the GraphOfResources class. This automatically extends the language with the new types.

- Publish the properties allowed for the new subtypes.

Regarding the last point, notice that the types defined by an importer and their properties must be publicly available, as AISL script developers must know which properties are available to them and what their semantics are. Furthermore, subsequent importers in the chain may also need to access some properties. For example, an importer for metadata also needs to access model objects representing content to get their object id (internal identifier). Notice that in general an AISL script will not necessarily assign all properties defined by a subtype. Some of these properties may be conditionally needed, while some will only be written by an importer at import time. For example, when a new content object is imported, the importer must record the OID of the newly created object in the GoR object. For this reason, the specification of the subtypes defined by importers must also state which properties are mandatory (i.e. they must be assigned during the creation of a GoR) and which properties are private (i.e. they should NOT be assigned during the creation of the GoR).
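The distinction between mandatory and private properties can be made concrete with a small sketch. It does not use the real Resource, ResourceCollection or GraphOfResources classes, whose members are not shown in this page: ModelObject is a hypothetical stand-in for a base type, and the property names schemaLocation and oid, as well as the validation method, are assumptions chosen for illustration.

```java
import java.util.ArrayList;
import java.util.Collections;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical sketch of an importer-specific subtype; not the AIS model classes.
class SubtypeSketch {

    // Stand-in for a base model type carrying name/value properties.
    static class ModelObject {
        private final Map<String, String> properties = new HashMap<>();

        void setProperty(String name, String value) { properties.put(name, value); }
        String getProperty(String name) { return properties.get(name); }

        // Subtypes override these to publish their constraints.
        Set<String> mandatoryProperties() { return Collections.emptySet(); }
        Set<String> privateProperties() { return Collections.emptySet(); }

        // Checks an object as built by a script, before any importer has run.
        List<String> validateAfterGraphBuild() {
            List<String> problems = new ArrayList<>();
            for (String name : mandatoryProperties()) {
                if (!properties.containsKey(name)) {
                    problems.add("missing mandatory property: " + name);
                }
            }
            for (String name : privateProperties()) {
                if (properties.containsKey(name)) {
                    problems.add("private property assigned by script: " + name);
                }
            }
            return problems;
        }
    }

    // Subtype in the spirit of collection::metadata (property names are assumed).
    static final class MetadataCollection extends ModelObject {
        @Override
        Set<String> mandatoryProperties() { return Collections.singleton("schemaLocation"); }

        @Override
        Set<String> privateProperties() { return Collections.singleton("oid"); }
    }

    public static void main(String[] args) {
        MetadataCollection collection = new MetadataCollection();
        System.out.println(collection.validateAfterGraphBuild());

        collection.setProperty("schemaLocation", "http://example.org/schema.xsd");
        System.out.println(collection.validateAfterGraphBuild());
    }
}
```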

Defining Importer Logics

The actual logic of the import for a new importer is contained in a class that must simply implement the Importer interface, which is as follows:

public interface Importer{

public String getName();

public void importRepresentationGraph(GraphOfResources graph) throws RemoteException, ExecutionInterruptedException;

}

The first method must provide a human-readable name for the importer (for logging and status notification purposes). The second method is passed a GraphOfResources object during operation, and must contain the logic needed for manipulating the objects in the graph, selecting the ones of interest and performing the actual import tasks.
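A skeleton of such a class is sketched below under several assumptions. GraphOfResources and Resource are reduced to hypothetical stand-ins (the real classes are not shown here), the Importer interface is simplified by dropping the RemoteException and ExecutionInterruptedException declarations, and the type name document, the property name oid and the generated identifiers are invented for the example. A real importer would call the relevant gCube service where the comment indicates.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical sketch of an importer and an importer chain; not the AIS classes.
class ImporterSketch {

    static final class Resource {
        final String type;
        final Map<String, String> properties = new HashMap<>();

        Resource(String type) { this.type = type; }
    }

    // Stand-in for the graph: only the node list is modelled here.
    static final class GraphOfResources {
        final List<Resource> resources = new ArrayList<>();
    }

    // Simplified version of the interface above (checked exceptions omitted).
    interface Importer {
        String getName();
        void importRepresentationGraph(GraphOfResources graph);
    }

    static final class DocumentImporter implements Importer {
        private int nextId = 1;

        public String getName() { return "document importer (sketch)"; }

        public void importRepresentationGraph(GraphOfResources graph) {
            for (Resource resource : graph.resources) {
                if (!"document".equals(resource.type)) {
                    continue; // not a resource this importer is interested in
                }
                if (resource.properties.containsKey("oid")) {
                    continue; // already imported in a previous run
                }
                // A real importer would create the object through a gCube service here.
                resource.properties.put("oid", "oid-" + nextId++);
            }
        }
    }

    // Runs the importers one after the other on the same graph.
    static void runChain(GraphOfResources graph, List<Importer> chain) {
        for (Importer importer : chain) {
            importer.importRepresentationGraph(graph);
        }
    }

    public static void main(String[] args) {
        GraphOfResources graph = new GraphOfResources();
        graph.resources.add(new Resource("document"));
        graph.resources.add(new Resource("metadata"));

        List<Importer> chain = new ArrayList<>();
        chain.add(new DocumentImporter());
        runChain(graph, chain);

        for (Resource resource : graph.resources) {
            System.out.println(resource.type + " " + resource.properties);
        }
    }
}
```

Writing the assigned identifier back into the graph is what allows later importers in the chain, and later runs of the same script, to see which resources already exist.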

Current Limitations and Known Issues

The AIS is currently released as a standalone client. The class org.gcube.contentmanagement.contentlayer.archiveimportservice.impl.AISLClient contains a client that performs the steps needed for the import: parsing and execution of the script, generation of the graph of resources, and import of the graph of resources. It accepts one or two arguments. The first is the location (on the local file system) of a file containing an AISL script. The second, optional argument is a boolean value: if it is set to true, the client creates the graph of resources but does not start the import. This eases debugging.

After the graph of resources is created, the client dumps the graph to a file named resourcegraph.dump. The graph is serialized in an XML-like format. This is only for visualization and debugging purposes, and the format is not currently guaranteed to be valid (or even well-formed) XML.