Difference between revisions of "Full Text Index"

(→Create a Updater Resource and start feeding) |

|||

| (62 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

| + | [[Image:Alert_icon2.gif]] ''THIS SECTION OF GCUBE DOCUMENTATION IS CURRENTLY UNDER UPDATE.'' | ||

| + | |||

==Introduction== | ==Introduction== | ||

The Full Text Index is responsible for providing quick full text data retrieval capabilities in the DILIGENT environment. | The Full Text Index is responsible for providing quick full text data retrieval capabilities in the DILIGENT environment. | ||

| Line 9: | Line 11: | ||

*The '''FullTextIndexLookup Service''' is responsible for creating a local copy of an index, and exposing interfaces for querying and creating statistics for the index. One FullTextIndexLookup Service resource can only replicate and lookup a single instance, but one Index can be replicated by any number of FullTextIndexLookup Service resources. Updates to the Index will be propagated to all FullTextIndexLookup Service resources replicating that Index. | *The '''FullTextIndexLookup Service''' is responsible for creating a local copy of an index, and exposing interfaces for querying and creating statistics for the index. One FullTextIndexLookup Service resource can only replicate and lookup a single instance, but one Index can be replicated by any number of FullTextIndexLookup Service resources. Updates to the Index will be propagated to all FullTextIndexLookup Service resources replicating that Index. | ||

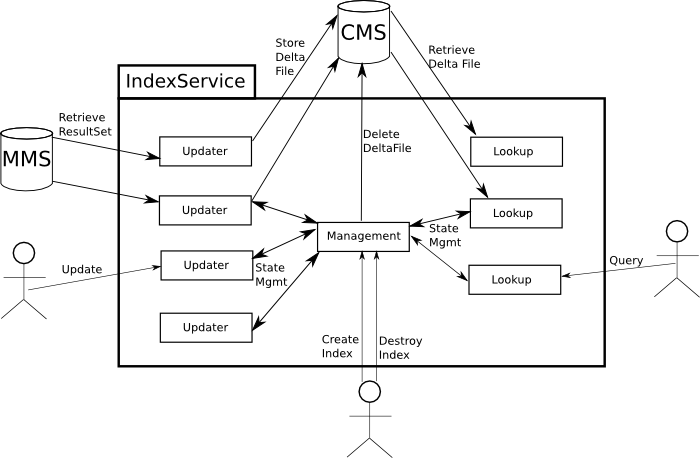

| − | It is important to note that none of the three services have to reside on the same | + | It is important to note that none of the three services have to reside on the same node; they are only connected through WebService calls and the DILIGENT CMS. The following illustration shows the information flow and responsibilities for the different services used to implement the Full Text Index: |

| − | + | [[Image:GeneralIndexDesign.png|frame|none|Generic Editor]] | |


===RowSet=== | ===RowSet=== | ||

| Line 75: | Line 64: | ||

</pre> | </pre> | ||

| − | Fields present in the ROWSET but not in the IndexType will be skipped. The elements under each "field" element are used to define that field should be handled, and they should contain either "yes" or "no". The meaning of each of them is explained below: | + | Fields present in the ROWSET but not in the IndexType will be skipped. The elements under each "field" element are used to define how that field should be handled, and they should contain either "yes" or "no". The meaning of each of them is explained below: |

*'''index''' | *'''index''' | ||

| Line 92: | Line 81: | ||

For more complex content types, one can also specify sub-fields as in the following example: | For more complex content types, one can also specify sub-fields as in the following example: | ||

| − | < | + | <index-type> |

| + | <field-list> | ||

| + | <field name="contents"> | ||

| + | <index>yes</index> | ||

| + | <store>no</store> | ||

| + | <return>no</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | |||

| + | <span style="color:green"><nowiki><!-- subfields of contents --></nowiki></span> | ||

| + | <field name="title"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | |||

| + | <span style="color:green"><nowiki><!-- subfields of title which itself is a subfield of contents --></nowiki></span> | ||

| + | <field name="bookTitle"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="chapterTitle"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | </field> | ||

| + | |||

| + | <field name="foreword"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="startChapter"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | <field name="endChapter"> | ||

| + | <index>yes</index> | ||

| + | <store>yes</store> | ||

| + | <return>yes</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | </field> | ||

| + | |||

| + | <span style="color:green"><nowiki><!-- not a subfield --></nowiki></span> | ||

| + | <field name="references"> | ||

| + | <index>yes</index> | ||

| + | <store>no</store> | ||

| + | <return>no</return> | ||

| + | <tokenize>yes</tokenize> | ||

| + | <sort>no</sort> | ||

| + | <boost>1.0</boost> | ||

| + | </field> | ||

| + | |||

| + | </field-list> | ||

| + | </index-type> | ||


Querying the field "contents" in an index using this IndexType would return hits in all its sub-fields, which is all fields except "references". Querying the field "title" would return hits in both "bookTitle" and "chapterTitle" in addition to hits in the "title" field. Querying the field "startChapter" would only return hits from "startChapter", since this field does not contain any sub-fields. Please be aware that using sub-fields adds extra fields to the index, and therefore uses more disk space. | Querying the field "contents" in an index using this IndexType would return hits in all its sub-fields, which is all fields except "references". Querying the field "title" would return hits in both "bookTitle" and "chapterTitle" in addition to hits in the "title" field. Querying the field "startChapter" would only return hits from "startChapter", since this field does not contain any sub-fields. Please be aware that using sub-fields adds extra fields to the index, and therefore uses more disk space. | ||
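The sub-field rule described above can be modelled as a small tree walk. The following is only an illustrative sketch: the class SubFieldSketch and its methods are hypothetical and are not part of the Full Text Index code; the field names are taken from the IndexType example.

```java
import java.util.ArrayList;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of the sub-field hierarchy; not the service's real data structure.
class SubFieldSketch {
    // Maps a parent field name to its direct sub-fields, in declaration order.
    private final Map<String, List<String>> children = new LinkedHashMap<>();

    void add(String parent, String child) {
        children.computeIfAbsent(parent, k -> new ArrayList<String>()).add(child);
    }

    // Returns the queried field followed by all of its direct and indirect sub-fields.
    List<String> searchedFields(String field) {
        List<String> result = new ArrayList<>();
        result.add(field);
        for (String child : children.getOrDefault(field, new ArrayList<String>())) {
            result.addAll(searchedFields(child));
        }
        return result;
    }

    // Builds the hierarchy used in the IndexType example ("references" is a top-level field).
    static SubFieldSketch example() {
        SubFieldSketch tree = new SubFieldSketch();
        tree.add("contents", "title");
        tree.add("title", "bookTitle");
        tree.add("title", "chapterTitle");
        tree.add("contents", "foreword");
        tree.add("contents", "startChapter");
        tree.add("contents", "endChapter");
        return tree;
    }

    public static void main(String[] args) {
        SubFieldSketch tree = example();
        System.out.println(tree.searchedFields("title"));        // [title, bookTitle, chapterTitle]
        System.out.println(tree.searchedFields("startChapter")); // [startChapter]
    }
}
```

Each sub-field appears once per ancestor in such a model, which is consistent with the note that sub-fields cost additional disk space.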

| Line 179: | Line 174: | ||

===Query language=== | ===Query language=== | ||

The Full Text Index uses the Lucene query language, but does not allow the use of fuzzy searches, proximity searches, range searches or boosting of a term. In addition, queries using wildcards will not return usable query statistics. | The Full Text Index uses the Lucene query language, but does not allow the use of fuzzy searches, proximity searches, range searches or boosting of a term. In addition, queries using wildcards will not return usable query statistics. | ||
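A client may want to reject unsupported constructs before sending a query. The sketch below is a deliberately naive check, assuming the standard Lucene syntax markers (~ for fuzzy and proximity searches, ^ for boosting, [a TO b] or {a TO b} for ranges, * and ? for wildcards). The class QuerySyntaxSketch is hypothetical, and the check ignores escaped characters.

```java
import java.util.regex.Pattern;

// Hypothetical client-side helper; the Full Text Index does not ship this class.
class QuerySyntaxSketch {
    // Matches inclusive [a TO b] and exclusive {a TO b} range expressions.
    private static final Pattern RANGE =
            Pattern.compile("[\\[{][^\\]}]*\\sTO\\s[^\\]}]*[\\]}]");

    // True if the query uses fuzzy, proximity, boost or range syntax.
    static boolean usesUnsupportedSyntax(String query) {
        return query.indexOf('~') >= 0
                || query.indexOf('^') >= 0
                || RANGE.matcher(query).find();
    }

    // True if the query uses wildcards, in which case query statistics are not usable.
    static boolean usesWildcards(String query) {
        return query.indexOf('*') >= 0 || query.indexOf('?') >= 0;
    }

    public static void main(String[] args) {
        System.out.println(usesUnsupportedSyntax("title:index"));           // false
        System.out.println(usesUnsupportedSyntax("title:roam~"));           // true
        System.out.println(usesUnsupportedSyntax("date:[2007 TO 2008]"));   // true
        System.out.println(usesWildcards("title:ind*"));                    // true
    }
}
```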

| + | |||

| + | ===Statistics=== | ||

===Linguistics=== | ===Linguistics=== | ||

| − | The language of | + | The linguistics component is used in the '''Full Text Index'''. |

| + | |||

| + | Two linguistics components are available; the '''language identifier module''', and the '''lemmatizer module'''. | ||

| + | |||

| + | The language identifier module is used during feeding in the FullTextBatchUpdater to identify the language in the documents. | ||

| + | The lemmatizer module is used by the FullTextLookup module during search operations to search for all possible forms (nouns and adjectives) of the search term. | ||

| + | |||

| + | The language identifier module has two real implementations (plugins) and a dummy plugin (which does nothing and always returns "nolang" when called). The lemmatizer module contains one real implementation (no suitable alternative was found for a second plugin) and a dummy plugin (which always returns an empty String ""). | ||

| + | |||

| + | Fast has provided proprietary technology for one of the language identifier modules (Fastlangid) and the lemmatizer module (Fastlemmatizer). The modules provided by Fast require a valid license to run (see later). The license is a 32 character long string. This string must be provided by Fast (contact Stefan Debald, stefan.debald@fast.no), and saved in the appropriate configuration file (see install a linguistics license). | ||

| + | |||

| + | The current license is valid until end of March 2008. | ||

| + | |||

| + | ====Plugin implementation==== | ||

| + | The classes implementing the plugin framework for the language identifier and the lemmatizer are in the SVN module common. The package is: | ||

| + | org/diligentproject/indexservice/common/linguistics/lemmatizerplugin | ||

| + | and | ||

| + | org/diligentproject/indexservice/common/linguistics/langidplugin | ||

| + | |||

| + | The class LanguageIdFactory loads an instance of the class LanguageIdPlugin. | ||

| + | The class LemmatizerFactory loads an instance of the class LemmatizerPlugin. | ||

| + | |||

| + | The language id plugins implement the class org.diligentproject.indexservice.common.linguistics.langidplugin.LanguageIdPlugin. | ||

| + | The lemmatizer plugins implement the class org.diligentproject.indexservice.common.linguistics.lemmatizerplugin.LemmatizerPlugin. | ||

| + | The factories use the method: | ||

| + | Class.forName(pluginName).newInstance(); | ||

| + | when loading the implementations. | ||

| + | The parameter pluginName is the fully qualified name (package and class name) of the plugin class to be loaded and instantiated. | ||
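The loading mechanism described above can be sketched as follows. This is a minimal illustration, not the actual factory code: the interface LanguageIdPluginSketch, the class DummyLangIdSketch and the method names are hypothetical stand-ins, since the real LanguageIdPlugin contract is not shown on this page. The sketch uses getDeclaredConstructor().newInstance(), because Class.newInstance() is deprecated since Java 9.

```java
// Hypothetical stand-in for the LanguageIdPlugin contract.
interface LanguageIdPluginSketch {
    String identify(String text);
}

// Mirrors the documented behaviour of the dummy plugin: it always answers "nolang".
class DummyLangIdSketch implements LanguageIdPluginSketch {
    public String identify(String text) {
        return "nolang";
    }
}

// Hypothetical factory that loads a plugin by its fully qualified class name.
class LanguageIdFactorySketch {
    static LanguageIdPluginSketch load(String pluginName) throws ReflectiveOperationException {
        // Look the class up by name and call its no-argument constructor.
        Class<?> pluginClass = Class.forName(pluginName);
        return (LanguageIdPluginSketch) pluginClass.getDeclaredConstructor().newInstance();
    }

    public static void main(String[] args) throws Exception {
        LanguageIdPluginSketch plugin = load(DummyLangIdSketch.class.getName());
        System.out.println(plugin.identify("Just read the WIKI")); // nolang
    }
}
```

A misspelled class name surfaces as a ClassNotFoundException at resource creation time, so it is worth checking the configured name early.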

| + | |||

| + | ====Language Identification==== | ||

| + | There are two real implementations of the language identification plugin available in addition to the dummy plugin that always returns "nolang". | ||

| + | |||

| + | The following plugin implementations can be selected when the FullTextBatchUpdaterResource is created: | ||

| + | |||

| + | org.diligentproject.indexservice.common.linguistics.jtextcat.JTextCatPlugin | ||

| + | |||

| + | org.diligentproject.indexservice.linguistics.fastplugin.FastLanguageIdPlugin | ||

| + | |||

| + | org.diligentproject.indexservice.common.linguistics.languageidplugin.DummyLangidPlugin | ||

| + | |||

| + | =====JTextCat===== | ||

| + | The JTextCat is maintained by http://textcat.sourceforge.net/. It is a lightweight text categorization language tool in Java. It implements the N-Gram-Based Text Categorization algorithm that is described here: | ||

| + | http://citeseer.ist.psu.edu/68861.html | ||

| + | It supports the languages: German, English, French, Spanish, Italian, Swedish, Polish, Dutch, Norwegian, Finnish, Albanian, Slovakian, Slovenian, Danish and Hungarian. | ||

| + | |||

| + | The JTextCat is loaded and accessed by the plugin: | ||

| + | org.diligentproject.indexservice.common.linguistics.jtextcat.JTextCatPlugin | ||

| + | |||

| + | The JTextCat contains no config or bigram files, since all the statistical data about the languages is contained in the package itself. | ||

| + | |||

| + | The JTextCat is delivered in the jar file: textcat-1.0.1.jar. | ||

| + | |||

| + | The license for the JTextCat: | ||

| + | http://www.gnu.org/copyleft/lesser.html | ||

| + | |||

| + | =====Fastlangid===== | ||

| + | The Fast language identification module is developed by Fast. It supports "all" languages used on the web. The tool is implemented in C++. The C++ code is loaded as a shared library object. | ||

| + | The Fast langid plugin interfaces a Java wrapper that loads the shared library objects and calls the native C++ code. | ||

| + | The shared library objects are compiled on Linux RHE3 and RHE4. | ||

| + | |||

| + | The Java native interface is generated using Swig. | ||

| + | |||

| + | The Fast langid module is loaded by the plugin (using the LanguageIdFactory) | ||

| + | |||

| + | org.diligentproject.indexservice.linguistics.fastplugin.FastLanguageIdPlugin | ||

| + | |||

| + | The plugin loads the shared object library and, when init is called, instantiates the native C++ objects that identify the languages. | ||

| + | |||

| + | The Fastlangid is in the SVN module: | ||

| + | trunk/linguistics/fastlinguistics/fastlangid | ||

| + | |||

| + | The lib catalog contains one catalog for RHE3 and one catalog for RHE4 shared objects (.so). The etc catalog contains the config files. The license string is contained in the config file config.txt. | ||

| + | |||

| + | The shared library object is called liblangid.so | ||

| + | |||

| + | The configuration files for the langid module are installed in $GLOBUS_LOCATION/etc/langid. | ||

| + | |||

| + | The org_diligentproject_indexservice_langid.jar contains the plugin FastLangidPlugin (that is loaded by the LanguageIdFactory) and the Java native interface to the shared library object. | ||

| + | |||

| + | The shared library object liblangid.so is deployed in the $GLOBUS_LOCATION/lib catalogue. | ||

| + | |||

| + | The license for the Fastlangid plugin: | ||

| + | |||

| + | =====Language Identifier Usage===== | ||

| + | |||

| + | The language identifier is used by the Full Text Updater in the Full Text Index. | ||

| + | The plugin to use for an updater is decided when the resource is created, as a part of the create resource call (see Full Text Updater). | ||

| + | The parameter is the package name of the implementation to be loaded and used to identify the language. | ||

| + | |||

| + | The language identification module and the lemmatizer module are loaded at runtime by using a factory that loads the implementation that is going to be used. | ||

| + | |||

| + | The fed documents may specify the language per field. If present, this specified language is used when indexing the document, and the language id module is not used. | ||

| + | If no language is specified in the document, and there is a language identification plugin loaded, the FullTextIndexBatchUpdater Service will try to identify the language of the field using the loaded plugin for language identification. | ||

| + | Since language is assigned at the Collections level in Diligent, all fields of all documents in a language aware collection should contain a "lang" attribute with the language of the collection. | ||
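The decision described above (a declared language wins, otherwise the loaded plugin is asked) can be sketched as a single function. The class FieldLanguageSketch is hypothetical, and returning null when neither a declared language nor a plugin is available is an assumption of this sketch; the page does not state what the service does in that case.

```java
import java.util.function.Function;

// Hypothetical helper illustrating the per-field language decision.
class FieldLanguageSketch {
    // declaredLang: value of the field's "lang" attribute, or null if absent.
    // identifier:   the loaded language identification plugin, or null if none is loaded.
    static String resolve(String declaredLang, Function<String, String> identifier, String text) {
        if (declaredLang != null && !declaredLang.isEmpty()) {
            return declaredLang;            // language given in the document: plugin is not used
        }
        if (identifier != null) {
            return identifier.apply(text);  // fall back to language identification
        }
        return null;                        // assumption: no language information available
    }

    public static void main(String[] args) {
        Function<String, String> alwaysGerman = text -> "de"; // toy identifier for the demo
        System.out.println(resolve("en", alwaysGerman, "Talk to the UN")); // en
        System.out.println(resolve(null, alwaysGerman, "Talk to the UN")); // de
    }
}
```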

A language aware query can be performed on a query or term basis: | A language aware query can be performed on a query or term basis: | ||

| Line 188: | Line 278: | ||

*Since language is specified at a collection level, language aware queries should only be used for language neutral collections. | *Since language is specified at a collection level, language aware queries should only be used for language neutral collections. | ||

| + | ==== Lemmatization ==== | ||

| + | There is one real implementation of the lemmatizer plugin available in addition to the dummy plugin that always returns "" (empty string). | ||

| + | |||

| + | The plugin implementation is selected when the FullTextLookupResource is created: | ||

| + | |||

| + | org.diligentproject.indexservice.linguistics.fastplugin.FastLemmatizerPlugin | ||

| + | |||

| + | org.diligentproject.indexservice.common.linguistics.languageidplugin.DummyLemmatizerPlugin | ||

| + | |||

| + | =====Fastlemmatizer===== | ||

| + | |||

| + | The Fast lemmatizer module is developed by Fast. The lemmatizer module depends on .aut files (config files) for the language to be lemmatized. Both expansion and reduction are supported, but only expansion is used: the terms (nouns and adjectives) in the query are expanded. | ||

| + | |||

| + | The lemmatizer is configured for the following languages: German, Italian, Portuguese, French, English, Spanish, Dutch, Norwegian. | ||

| + | To support more languages, additional .aut files must be loaded and the config file LemmatizationQueryExpansion.xml must be updated. | ||

| + | |||

| + | The lemmatizer is implemented in C++. The C++ code is loaded as a shared library object. The Fast lemmatizer plugin interfaces a Java wrapper that loads the shared library objects and calls the native C++ code. The shared library objects are compiled on Linux RHE3 and RHE4. | ||

| + | |||

| + | The Java native interface is generated using Swig. | ||

| + | |||

| + | The Fast lemmatizer module is loaded by the plugin (using the LemmatizerFactory) | ||

| + | |||

| + | org.diligentproject.indexservice.linguistics.fastplugin.FastLemmatizerPlugin | ||

| + | |||

| + | The plugin loads the shared object library and, when init is called, instantiates the native C++ objects. | ||

| + | |||

| + | The Fastlemmatizer is in the SVN module: trunk/linguistics/fastlinguistics/fastlemmatizer | ||

| + | |||

| + | The lib catalog contains one catalog for RHE3 and one catalog for RHE4 shared objects (.so). The etc catalog contains the config files. The license string is contained in the config file LemmatizerConfigQueryExpansion.xml | ||

| + | The shared library object is called liblemmatizer.so | ||

| + | |||

| + | The configuration files for the lemmatizer module are installed in $GLOBUS_LOCATION/etc/lemmatizer. | ||

| + | |||

| + | The org_diligentproject_indexservice_lemmatizer.jar contains the plugin FastLemmatizerPlugin (that is loaded by the LemmatizerFactory) and the Java native interface to the shared library. | ||

| + | |||

| + | The shared library liblemmatizer.so is deployed in the $GLOBUS_LOCATION/lib catalogue. | ||

| + | |||

| + | The '''$GLOBUS_LOCATION/lib''' directory must therefore be included in the '''LD_LIBRARY_PATH''' environment variable. | ||

| + | |||

| + | ===== Fast lemmatizer configuration ===== | ||

| + | The LemmatizerConfigQueryExpansion.xml contains the paths to the .aut files that are loaded when a lemmatizer is instantiated. | ||

| + | |||

| + | <lemmas active="yes" parts_of_speech="NA">etc/lemmatizer/resources/dictionaries/lemmatization/en_NA_exp.aut</lemmas> | ||

| + | |||

| + | The path is relative to the environment variable GLOBUS_LOCATION. If this path is wrong, the Java virtual machine will core dump. | ||
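Because a wrong path crashes the JVM rather than raising an exception, it can be useful to verify the configured .aut path from Java before the lemmatizer is instantiated. The following sketch shows one way to do that with java.nio.file; the class AutPathSketch and its method names are hypothetical and not part of the lemmatizer module.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical pre-flight check for the .aut paths listed in the lemmatizer configuration.
class AutPathSketch {
    // Resolves a path from the configuration file against the GLOBUS_LOCATION directory.
    static Path resolve(String globusLocation, String relativeAutPath) {
        return Paths.get(globusLocation).resolve(relativeAutPath).normalize();
    }

    // True only if the resolved path is an existing, readable regular file.
    static boolean isUsable(String globusLocation, String relativeAutPath) {
        Path autFile = resolve(globusLocation, relativeAutPath);
        return Files.isRegularFile(autFile) && Files.isReadable(autFile);
    }

    public static void main(String[] args) {
        String globusLocation = System.getenv("GLOBUS_LOCATION");
        String configured = "etc/lemmatizer/resources/dictionaries/lemmatization/en_NA_exp.aut";
        if (globusLocation == null) {
            System.out.println("GLOBUS_LOCATION is not set");
        } else if (!isUsable(globusLocation, configured)) {
            System.out.println("Missing or unreadable: " + resolve(globusLocation, configured));
        } else {
            System.out.println("OK: " + resolve(globusLocation, configured));
        }
    }
}
```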

| + | |||

| + | The license for the Fastlemmatizer plugin: | ||

| + | |||

| + | ===== Fast lemmatizer logging ===== | ||

| + | The lemmatizer logs info, debug and error messages to the file "lemmatizer.txt". | ||

| + | |||

| + | ===== Lemmatization Usage ===== | ||

The FullTextIndexLookup Service uses expansion during lemmatization; a word (of a query) is expanded into all known versions of the word. It is of course important to know the language of the query in order to know which words to expand the query with. Currently, the same methods used to specify language for a language aware query are also used to specify language for the lemmatization process. A way of separating these two specifications (such that lemmatization can be performed without performing a language aware query) will be made available shortly. | The FullTextIndexLookup Service uses expansion during lemmatization; a word (of a query) is expanded into all known versions of the word. It is of course important to know the language of the query in order to know which words to expand the query with. Currently, the same methods used to specify language for a language aware query are also used to specify language for the lemmatization process. A way of separating these two specifications (such that lemmatization can be performed without performing a language aware query) will be made available shortly. | ||
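To make the idea of query expansion concrete, the sketch below rewrites a single term into a disjunction of its known forms. It is only an illustration of the technique: the class QueryExpansionSketch, the toy dictionary and the OR-based output format are assumptions, and the Fast lemmatizer's actual dictionaries and rewriting are not documented here.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Hypothetical illustration of expansion; not the Fast lemmatizer's implementation.
class QueryExpansionSketch {
    // Toy dictionary mapping a term to its other known forms (for one language).
    private final Map<String, List<String>> forms = new HashMap<>();

    void addForms(String term, String... variants) {
        forms.put(term, Arrays.asList(variants));
    }

    // Expands a term into "(term OR variant1 OR ...)"; unknown terms are returned unchanged.
    String expand(String term) {
        List<String> variants = forms.get(term);
        if (variants == null || variants.isEmpty()) {
            return term;
        }
        return "(" + term + " OR " + String.join(" OR ", variants) + ")";
    }

    public static void main(String[] args) {
        QueryExpansionSketch english = new QueryExpansionSketch();
        english.addForms("index", "indexes", "indices");
        System.out.println(english.expand("index")); // (index OR indexes OR indices)
        System.out.println(english.expand("wiki"));  // wiki
    }
}
```

The dictionary is per language, which is why the language of the query has to be known before expansion.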

| + | |||

| + | ==== Linguistics Licenses ==== | ||

| + | The current license key for the fastlangid and fastlemmatizer is valid through March 2008. | ||

| + | |||

| + | If a new license is required please contact: Stefan.debald@fast.no to get a new license key. | ||

| + | |||

| + | The license must be installed both in the Fastlangid and the Fastlemmatizer module. | ||

| + | |||

| + | The fastlangid license is installed by updating the SVN text file: | ||

| + | '''linguistics/fastlinguistics/fastlangid/etc/langid/config.txt''' | ||

| + | |||

| + | Use a text editor and replace the 32 character license string with the new license string: | ||

| + | |||

| + | // The license key | ||

| + | // Contact stefand.debald@fast.no for new license key: | ||

| + | LICSTR=KILMDEPFKHNBNPCBAKONBCCBFLKPOEFG | ||

| + | |||

| + | A running system is updated by replacing the license string in the file: | ||

| + | '''$GLOBUS_LOCATION/etc/langid/config.txt''' | ||

| + | as described above. | ||

| + | |||

| + | The fastlemmatizer license is installed by updating the SVN text file: | ||

| + | |||

| + | '''linguistics/fastlinguistics/fastlemmatizer/etc/LemmatizationConfigQueryExpansion.xml''' | ||

| + | |||

| + | Use a text editor and replace the 32 character license string with the new license string: | ||

| + | <lemmatization default_mode="query_expansion" default_query_language="en" license="KILMDEPFKHNBNPCBAKONBCCBFLKPOEFG"> | ||

| + | |||

| + | The running system is updated by replacing the license string in the file: | ||

| + | '''$GLOBUS_LOCATION/etc/lemmatizer/LemmatizationConfigQueryExpansion.xml''' | ||

===Partitioning=== | ===Partitioning=== | ||

| Line 199: | Line 371: | ||

==Usage Example== | ==Usage Example== | ||

===Create a Management Resource=== | ===Create a Management Resource=== | ||


| − | //Get the factory portType | + | <span style="color:green">//Get the factory portType</span> |

| − | managementFactoryEPR = new EndpointReferenceType(); | + | <nowiki>String managementFactoryURI = "http://some.domain.no:8080/wsrf/services/diligentproject/index/FullTextIndexManagementFactoryService";</nowiki> |

| − | managementFactoryEPR.setAddress(new Address( | + | FullTextIndexManagementFactoryServiceAddressingLocator managementFactoryLocator = new FullTextIndexManagementFactoryServiceAddressingLocator(); |

| − | managementFactory = managementFactoryLocator | + | |

| − | + | managementFactoryEPR = new EndpointReferenceType(); | |

| + | managementFactoryEPR.setAddress(new Address(managementFactoryURI)); | ||

| + | managementFactory = managementFactoryLocator | ||

| + | .getFullTextIndexManagementFactoryPortTypePort(managementFactoryEPR); | ||

| + | |||

| + | <span style="color:green">//Create generator resource and get endpoint reference of WS-Resource.</span> | ||

| + | org.diligentproject.indexservice.fulltextindexmanagement.stubs.CreateResource managementCreateArguments = | ||

| + | new org.diligentproject.indexservice.fulltextindexmanagement.stubs.CreateResource(); | ||

| + | managementCreateArguments.setIndexTypeName(indexType);<span style="color:green">//Optional (only needed if not provided in RS)</span> | ||

| + | managementCreateArguments.setIndexID(indexID);<span style="color:green">//Optional (should usually not be set, and the service will create the ID)</span> | ||

| + | managementCreateArguments.setCollectionID("myCollectionID"); | ||

| + | managementCreateArguments.setContentType("MetaData"); | ||

| + | |||

| + | org.diligentproject.indexservice.fulltextindexmanagement.stubs.CreateResourceResponse managementCreateResponse = | ||

| + | managementFactory.createResource(managementCreateArguments); | ||

| + | |||

| + | managementInstanceEPR = managementCreateResponse.getEndpointReference(); | ||

| + | String indexID = managementCreateResponse.getIndexID(); | ||

| − | + | ===Create an Updater Resource and start feeding=== | |

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | + | ||

| − | ===Create | + | |

<span style="color:green">//Get the factory portType</span> | <span style="color:green">//Get the factory portType</span> | ||

| − | <nowiki>updaterFactoryURI = "http://some. | + | <nowiki>updaterFactoryURI = "http://some.domain.no:8080/wsrf/services/diligentproject/index/FullTextIndexBatchUpdaterFactoryService";</nowiki> <span style="color:green">//could be on any node</span> |

updaterFactoryEPR = new EndpointReferenceType(); | updaterFactoryEPR = new EndpointReferenceType(); | ||

updaterFactoryEPR.setAddress(new Address(updaterFactoryURI)); | updaterFactoryEPR.setAddress(new Address(updaterFactoryURI)); | ||

| Line 241: | Line 409: | ||

new org.diligentproject.indexservice.fulltextindexbatchupdater.stubs.CreateResource(); | new org.diligentproject.indexservice.fulltextindexbatchupdater.stubs.CreateResource(); | ||

| − | + | <span style="color:green">//Connect to the correct Index</span> | |

| − | updaterCreateArguments. | + | updaterCreateArguments.setMainIndexID(indexID); |

| − | + | ||

<span style="color:green">//Now let's insert some data into the index... Firstly, get the updater EPR.</span> | <span style="color:green">//Now let's insert some data into the index... Firstly, get the updater EPR.</span> | ||

| Line 264: | Line 431: | ||

<span style="color:green">//Tell the updater to start gathering data from the ResultSet</span> | <span style="color:green">//Tell the updater to start gathering data from the ResultSet</span> | ||

| − | updaterInstance. | + | updaterInstance.process(resultSetLocator); |

===Create a Lookup resource and perform a query=== | ===Create a Lookup resource and perform a query=== | ||

<span style="color:green">//Let's put it on another node for fun...</span> | <span style="color:green">//Let's put it on another node for fun...</span> | ||

| − | <nowiki>lookupFactoryURI = "http://another. | + | <nowiki>lookupFactoryURI = "http://another.domain.no:8080/wsrf/services/diligentproject/index/FullTextIndexLookupFactoryService";</nowiki> |

FullTextIndexLookupFactoryServiceAddressingLocator lookupFactoryLocator = new FullTextIndexLookupFactoryServiceAddressingLocator(); | FullTextIndexLookupFactoryServiceAddressingLocator lookupFactoryLocator = new FullTextIndexLookupFactoryServiceAddressingLocator(); | ||

EndpointReferenceType lookupFactoryEPR = null; | EndpointReferenceType lookupFactoryEPR = null; | ||

| Line 286: | Line 453: | ||

org.diligentproject.indexservice.fulltextindexlookup.stubs.CreateResourceResponse lookupCreateResponse = null; | org.diligentproject.indexservice.fulltextindexlookup.stubs.CreateResourceResponse lookupCreateResponse = null; | ||

| − | lookupCreateResourceArguments. | + | lookupCreateResourceArguments.setMainIndexID(indexID); |

lookupCreateResponse = lookupFactory.createResource( lookupCreateResourceArguments); | lookupCreateResponse = lookupFactory.createResource( lookupCreateResourceArguments); | ||

lookupEPR = lookupCreateResponse.getEndpointReference(); | lookupEPR = lookupCreateResponse.getEndpointReference(); | ||

| Line 305: | Line 472: | ||

reader = RSXMLReader.getRSXMLReader(new RSLocator(epr)); | reader = RSXMLReader.getRSXMLReader(new RSLocator(epr)); | ||

| + | <span style="color:green">//Print each part of the RS to std.out</span> | ||

System.out.println("<Results>"); | System.out.println("<Results>"); | ||

do{ | do{ | ||

| Line 325: | Line 493: | ||

e.printStackTrace(); | e.printStackTrace(); | ||

} | } | ||

| + | |||

| + | ===Getting statistics from a Lookup resource=== | ||

| + | |||

| + | String statsLocation = lookupInstance.createStatistics(new CreateStatistics()); | ||

| + | |||

| + | <span style="color:green">//Connect to a CMS Running Instance</span> | ||

| + | EndpointReferenceType cmsEPR = new EndpointReferenceType(); | ||

| + | <nowiki>cmsEPR.setAddress(new Address("http://swiss.domain.ch:8080/wsrf/services/diligentproject/contentmanagement/ContentManagementServiceService"));</nowiki> | ||

| + | ContentManagementServiceServiceAddressingLocator cmslocator = new ContentManagementServiceServiceAddressingLocator(); | ||

| + | cms = cmslocator.getContentManagementServicePortTypePort(cmsEPR); | ||

| + | |||

| + | <span style="color:green">//Retrieve the statistics file from CMS</span> | ||

| + | GetDocumentParameters getDocumentParams = new GetDocumentParameters(); | ||

| + | getDocumentParams.setDocumentID(statsLocation); | ||

| + | getDocumentParams.setTargetFileLocation(BasicInfoObjectDescription.RAW_CONTENT_IN_MESSAGE); | ||

| + | DocumentDescription description = cms.getDocument(getDocumentParams); | ||

| + | |||

| + | <span style="color:green">//Write the statistics file from memory to disk </span> | ||

| + | File downloadedFile = new File("Statistics.xml"); | ||

| + | DecompressingInputStream input = new DecompressingInputStream( | ||

| + | new BufferedInputStream(new ByteArrayInputStream(description.getRawContent()), 2048)); | ||

| + | BufferedOutputStream output = new BufferedOutputStream( new FileOutputStream(downloadedFile), 2048); | ||

| + | byte[] buffer = new byte[2048]; | ||

| + | int length; | ||

| + | while ( (length = input.read(buffer)) >= 0){ | ||

| + | output.write(buffer, 0, length); | ||

| + | } | ||

| + | input.close(); | ||

| + | output.close(); | ||

| + | |||

| + | ==Authors== | ||

| + | [http://ddwiki.di.uoa.gr/mediawiki/index.php/User:Msibeko Mads Sibeko] | ||

| + | |||

| + | [http://ddwiki.di.uoa.gr/mediawiki/index.php/User:Daarvag Dagfinn Aarvaag] | ||

| + | <br> | ||

| + | <br> | ||

| + | |||

| + | --[[User:Msibeko|Msibeko]] 14:36, 1 June 2007 (EEST) | ||

Latest revision as of 15:01, 14 July 2008

THIS SECTION OF GCUBE DOCUMENTATION IS CURRENTLY UNDER UPDATE.

Introduction

The Full Text Index is responsible for providing quick full text data retrieval capabilities in the DILIGENT environment.

Implementation Overview

Services

The full text index is implemented through three services. They are all implemented according to the Factory pattern:

- The FullTextIndexManagement Service represents an index manager. There is a one to one relationship between an Index and a Management instance, and their life-cycles are closely related; an Index is created by creating an instance (resource) of FullTextIndexManagement Service, and an index is removed by terminating the corresponding FullTextIndexManagement resource. The FullTextIndexManagement Service should be seen as an interface for managing the life-cycle and properties of an Index, but it is not responsible for feeding or querying its index. In addition, a FullTextIndexManagement Service resource does not store the content of its Index locally, but contains references to content stored in Content Management Service.

- The FullTextIndexBatchUpdater Service is responsible for feeding an Index. One FullTextIndexBatchUpdater Service resource can only update a single Index, but one Index can be updated by multiple FullTextIndexBatchUpdater Service resources. Feeding is accomplished by instantiating a FullTextIndexBatchUpdater Service resource with the EPR of the FullTextIndexManagement resource connected to the Index to update, and connecting the updater resource to a ResultSet containing the content to be fed to the Index.

- The FullTextIndexLookup Service is responsible for creating a local copy of an Index, and exposing interfaces for querying and creating statistics for the Index. One FullTextIndexLookup Service resource can only replicate and look up a single Index, but one Index can be replicated by any number of FullTextIndexLookup Service resources. Updates to the Index will be propagated to all FullTextIndexLookup Service resources replicating that Index.

It is important to note that none of the three services have to reside on the same node; they are only connected through WebService calls and the DILIGENT CMS. The following illustration shows the information flow and responsibilities for the different services used to implement the Full Text Index:

RowSet

The content to be fed into an Index must be served as a ResultSet containing XML documents conforming to the ROWSET schema. This is a very simple schema, declaring that a document (ROW element) should consist of any number of FIELD elements, each with a name attribute and the text to be indexed for that field. The following is a simple but valid ROWSET containing two documents:

<ROWSET>

<ROW id="doc1">

<FIELD name="title">How to create an Index</FIELD>

<FIELD name="contents">Just read the WIKI</FIELD>

</ROW>

<ROW id="doc2">

<FIELD name="title">How to create a Nation</FIELD>

<FIELD name="contents">Talk to the UN</FIELD>

<FIELD name="references">un.org</FIELD>

</ROW>

</ROWSET>
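A ROWSET like the one above could also be assembled programmatically. The following is only a minimal sketch using plain Java strings; the class RowSetBuilder and its methods are hypothetical helpers introduced for illustration and are not part of the index service API.

```java
import java.util.ArrayList;
import java.util.List;

// Hypothetical helper (not part of the index service): builds a ROWSET string.
class RowSetBuilder {
    private final List<String> rows = new ArrayList<>();
    private StringBuilder current;

    // Start a new document (ROW element) with the given id.
    RowSetBuilder row(String id) {
        closeRow();
        current = new StringBuilder("<ROW id=\"" + escape(id) + "\">");
        return this;
    }

    // Add a FIELD element to the current document.
    RowSetBuilder field(String name, String text) {
        if (current == null) {
            throw new IllegalStateException("call row() first");
        }
        current.append("<FIELD name=\"").append(escape(name)).append("\">")
               .append(escape(text)).append("</FIELD>");
        return this;
    }

    // Close the last ROW and wrap all rows in a ROWSET element.
    String build() {
        closeRow();
        return "<ROWSET>" + String.join("", rows) + "</ROWSET>";
    }

    private void closeRow() {
        if (current != null) {
            rows.add(current.append("</ROW>").toString());
            current = null;
        }
    }

    // Escape the characters that are special in XML text and attribute values.
    static String escape(String s) {
        return s.replace("&", "&amp;").replace("<", "&lt;")
                .replace(">", "&gt;").replace("\"", "&quot;");
    }

    public static void main(String[] args) {
        String rowset = new RowSetBuilder()
                .row("doc1").field("title", "How to create an Index")
                            .field("contents", "Just read the WIKI")
                .row("doc2").field("title", "How to create a Nation")
                            .field("contents", "Talk to the UN")
                .build();
        System.out.println(rowset);
    }
}
```

The resulting string would still have to be placed in a ResultSet before it can be fed to an updater; that step is not covered by this sketch.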

IndexType

How the different fields in the ROWSET should be handled by the Index, and how the different fields in an Index should be handled during a query, is specified through an IndexType; an XML document conforming to the IndexType schema. An IndexType contains a field list which contains all the fields which should be indexed and/or stored in order to be presented in the query results, along with a specification of how each of the fields should be handled. The following is a possible IndexType for the type of ROWSET shown above:

<index-type>

<field-list>

<field name="title" lang="en">

<index>yes</index>

<store>yes</store>

<return>yes</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

</field>

<field name="contents" lang="en">

<index>yes</index>

<store>no</store>

<return>no</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

</field>

<field name="references" lang="en">

<index>yes</index>

<store>no</store>

<return>no</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

</field>

</field-list>

</index-type>

Fields present in the ROWSET but not in the IndexType will be skipped. The elements under each "field" element define how that field should be handled; apart from "boost", they should contain either "yes" or "no". The meaning of each of them is explained below:

- index: specifies whether the field should be indexed (i.e. whether the index should look for hits within this field).

- store: specifies whether the field should be stored in its original format so that it can be returned in the results from a query.

- return: specifies whether a stored field should be returned in the results from a query. A field must have been stored to be returned. (This element is not available in the currently deployed indices.)

- tokenize: specifies whether the field should be tokenized. Should usually contain "yes".

- sort: not used.

- boost: not used.
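To make the skipping rule concrete, the sketch below reads the "index" and "store" flags from a flat IndexType (no sub-fields) with the standard Java DOM parser and drops ROW fields that the IndexType does not declare. IndexTypeReader, FieldSpec and keepDeclared are hypothetical names used only for this illustration; the service's own parsing code is not shown in this document.

```java
import java.io.StringReader;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.InputSource;

// Hypothetical reader for a flat IndexType (no sub-fields); illustration only.
class IndexTypeReader {
    // Per-field flags taken from the "index" and "store" elements.
    static final class FieldSpec {
        final boolean index;
        final boolean store;
        FieldSpec(boolean index, boolean store) {
            this.index = index;
            this.store = store;
        }
    }

    // Parses the IndexType and returns one FieldSpec per declared field name.
    static Map<String, FieldSpec> read(String indexTypeXml) {
        Document doc;
        try {
            doc = DocumentBuilderFactory.newInstance().newDocumentBuilder()
                    .parse(new InputSource(new StringReader(indexTypeXml)));
        } catch (Exception e) {
            throw new IllegalArgumentException("not a well-formed IndexType", e);
        }
        Map<String, FieldSpec> specs = new LinkedHashMap<>();
        NodeList fields = doc.getElementsByTagName("field");
        for (int i = 0; i < fields.getLength(); i++) {
            Element field = (Element) fields.item(i);
            specs.put(field.getAttribute("name"),
                      new FieldSpec(isYes(field, "index"), isYes(field, "store")));
        }
        return specs;
    }

    // True if the first <tag> element below the field contains "yes".
    private static boolean isYes(Element field, String tag) {
        NodeList nodes = field.getElementsByTagName(tag);
        return nodes.getLength() > 0
                && "yes".equals(nodes.item(0).getTextContent().trim());
    }

    // Keeps only the ROW fields that the IndexType declares; the rest are skipped.
    static Map<String, String> keepDeclared(Map<String, String> rowFields,
                                            Map<String, FieldSpec> specs) {
        Map<String, String> kept = new LinkedHashMap<>(rowFields);
        kept.keySet().retainAll(specs.keySet());
        return kept;
    }

    public static void main(String[] args) {
        String xml = "<index-type><field-list>"
                + "<field name=\"title\"><index>yes</index><store>yes</store></field>"
                + "</field-list></index-type>";
        Map<String, String> row = new LinkedHashMap<>();
        row.put("title", "How to create an Index");
        row.put("references", "un.org");
        // Only the declared field "title" survives the filter.
        System.out.println(keepDeclared(row, read(xml)).keySet());
    }
}
```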

For more complex content types, one can also specify sub-fields as in the following example:

<index-type>

<field-list>

<field name="contents">

<index>yes</index>

<store>no</store>

<return>no</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

<!-- subfields of contents -->

<field name="title">

<index>yes</index>

<store>yes</store>

<return>yes</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

<!-- subfields of title which itself is a subfield of contents -->

<field name="bookTitle">

<index>yes</index>

<store>yes</store>

<return>yes</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

</field>

<field name="chapterTitle">

<index>yes</index>

<store>yes</store>

<return>yes</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

</field>

</field>

<field name="foreword">

<index>yes</index>

<store>yes</store>

<return>yes</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

</field>

<field name="startChapter">

<index>yes</index>

<store>yes</store>

<return>yes</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

</field>

<field name="endChapter">

<index>yes</index>

<store>yes</store>

<return>yes</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

</field>

</field>

<!-- not a subfield -->

<field name="references">

<index>yes</index>

<store>no</store>

<return>no</return>

<tokenize>yes</tokenize>

<sort>no</sort>

<boost>1.0</boost>

</field>

</field-list>

</index-type>

Querying the field "contents" in an index using this IndexType would return hits in all its sub-fields, which is all fields except "references". Querying the field "title" would return hits in both "bookTitle" and "chapterTitle" in addition to hits in the "title" field. Querying the field "startChapter" would only return hits from "startChapter", since this field does not contain any sub-fields. Please be aware that using sub-fields adds extra fields to the index, and therefore uses more disk space.
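The sub-field behaviour can be pictured as a walk over the field hierarchy. The sketch below is a hypothetical model, not the service's implementation: it hard-codes the hierarchy of the IndexType above and lists which fields a query on a given field would cover.

```java
import java.util.ArrayList;
import java.util.Arrays;
import java.util.Collections;
import java.util.LinkedHashMap;
import java.util.List;
import java.util.Map;

// Hypothetical model of the sub-field hierarchy; not the service's own code.
class SubFieldExpansion {
    // Maps each field to its direct sub-fields, mirroring the IndexType above.
    static Map<String, List<String>> exampleHierarchy() {
        Map<String, List<String>> children = new LinkedHashMap<>();
        children.put("contents",
                Arrays.asList("title", "foreword", "startChapter", "endChapter"));
        children.put("title", Arrays.asList("bookTitle", "chapterTitle"));
        return children;
    }

    // Returns the field itself followed by all of its direct and indirect sub-fields.
    static List<String> searchedFields(String field, Map<String, List<String>> children) {
        List<String> result = new ArrayList<>();
        result.add(field);
        List<String> direct = children.getOrDefault(field, Collections.<String>emptyList());
        for (String child : direct) {
            result.addAll(searchedFields(child, children));
        }
        return result;
    }

    public static void main(String[] args) {
        Map<String, List<String>> hierarchy = exampleHierarchy();
        System.out.println(searchedFields("contents", hierarchy));
        System.out.println(searchedFields("title", hierarchy));
        System.out.println(searchedFields("startChapter", hierarchy));
    }
}
```

In this model "references" is never reached from "contents", which matches the description above.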

We currently have five standard index types, loosely based on the available metadata schemas. However, any data can be indexed using any of them, as long as the RowSet follows the IndexType:

- index-type-default-1.0 (DublinCore)

- index-type-TEI-2.0

- index-type-eiDB-1.0

- index-type-iso-1.0

- index-type-FT-1.0

The IndexType of a FullTextIndexManagement Service resource can be changed as long as no FullTextIndexBatchUpdater resources have connected to it. The reason for this limitation is that the processing of fields should be the same for all documents in an index; all documents in an index should be handled according to the same IndexType.

The IndexType of a FullTextIndexLookup Service resource is originally retrieved from the FullTextIndexManagement Service resource it is connected to. However, the "return" element can be changed at any time in order to change which fields are returned. Keep in mind that only fields whose "store" element is set to "yes" can have their "return" element altered to return content.

Query language

The Full Text Index uses the Lucene query language, but does not allow the use of fuzzy searches, proximity searches, range searches or boosting of a term. In addition, queries using wildcards will not return usable query statistics.
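A client could pre-check a query for the unsupported constructs before sending it. The sketch below is a coarse character scan based on the standard Lucene operators (`~` for fuzzy and proximity searches, `^` for boosting, `[`/`{` for ranges, `*`/`?` for wildcards); QuerySyntaxCheck is a hypothetical helper, and how the service itself detects or rejects such queries is not described here. Because it does not parse the query, it may also flag these characters inside quoted phrases.

```java
// Hypothetical pre-check; the real service may detect these constructs differently.
class QuerySyntaxCheck {
    // Returns a description of the first unsupported construct, or null if none is found.
    static String firstUnsupported(String query) {
        for (int i = 0; i < query.length(); i++) {
            char c = query.charAt(i);
            if (c == '\\') {
                i++; // skip the escaped character
                continue;
            }
            if (c == '~') return "fuzzy or proximity search (~)";
            if (c == '^') return "term boosting (^)";
            if (c == '[' || c == '{') return "range search";
        }
        return null;
    }

    // Wildcard queries are accepted, but their query statistics are not usable.
    static boolean usesWildcards(String query) {
        for (int i = 0; i < query.length(); i++) {
            char c = query.charAt(i);
            if (c == '\\') {
                i++; // skip the escaped character
                continue;
            }
            if (c == '*' || c == '?') return true;
        }
        return false;
    }

    public static void main(String[] args) {
        System.out.println(firstUnsupported("good OR evil"));
        System.out.println(firstUnsupported("title:[a TO b]"));
        System.out.println(usesWildcards("te?t"));
    }
}
```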

Statistics

Linguistics

The linguistics component is used in the Full Text Index.

Two linguistics components are available; the language identifier module, and the lemmatizer module.

The language identifier module is used during feeding in the FullTextBatchUpdater to identify the language in the documents. The lemmatizer module is used by the FullTextLookup module during search operations to search for all possible forms (nouns and adjectives) of the search term.

The language identifier module has two real implementations (plugins) and a dummy plugin that does nothing and always returns "nolang" when called. The lemmatizer module has one real implementation (no suitable alternative was found for a second plugin) and a dummy plugin that always returns an empty String ("").

Fast has provided proprietary technology for one of the language identifier modules (Fastlangid) and the lemmatizer module (Fastlemmatizer). The modules provided by Fast require a valid license to run. The license is a 32-character string. This string must be provided by Fast (contact Stefan Debald, stefan.debald@fast.no), and saved in the appropriate configuration file (see Linguistics Licenses).

The current license is valid until end of March 2008.

Plugin implementation

The classes implementing the plugin framework for the language identifier and the lemmatizer are in the SVN module common. The package is:

org/diligentproject/indexservice/common/linguistics/lemmatizerplugin and org/diligentproject/indexservice/common/linguistics/langidplugin

The class LanguageIdFactory loads an instance of the class LanguageIdPlugin. The class LemmatizerFactory loads an instance of the class LemmatizerPlugin.

The language id plugins implement the class org.diligentproject.indexservice.common.linguistics.langidplugin.LanguageIdPlugin. The lemmatizer plugins implement the class org.diligentproject.indexservice.common.linguistics.lemmatizerplugin.LemmatizerPlugin. The factories use the method:

Class.forName(pluginName).newInstance();

when loading the implementations. The parameter pluginName is the fully qualified class name (package and class) of the plugin to be loaded and instantiated.
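The loading mechanism can be sketched in a few lines. The interface and classes below are simplified, hypothetical stand-ins (the real LanguageIdPlugin signature is not given in this document), and the sketch uses getDeclaredConstructor().newInstance() because Class.newInstance() is deprecated in current Java versions.

```java
// Hypothetical stand-ins for the plugin framework; names are illustrative only.
class PluginLoadingSketch {
    // Minimal plugin contract assumed for this sketch.
    public interface LanguageIdPlugin {
        String identify(String text);
    }

    // Mirrors the dummy plugin described in the text: always answers "nolang".
    public static class DummyLangidPlugin implements LanguageIdPlugin {
        public DummyLangidPlugin() { }

        @Override
        public String identify(String text) {
            return "nolang";
        }
    }

    // Loads a plugin by its fully qualified class name using reflection.
    static LanguageIdPlugin load(String pluginName) {
        try {
            Object instance = Class.forName(pluginName)
                    .getDeclaredConstructor().newInstance();
            return (LanguageIdPlugin) instance;
        } catch (ReflectiveOperationException | ClassCastException e) {
            throw new IllegalArgumentException("Cannot load plugin " + pluginName, e);
        }
    }

    public static void main(String[] args) {
        LanguageIdPlugin plugin = load(DummyLangidPlugin.class.getName());
        System.out.println(plugin.identify("Just read the WIKI")); // prints "nolang"
    }
}
```

A plugin loaded this way needs a public no-argument constructor and must be on the classpath of the service that loads it.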

Language Identification

There are two real implementations of the language identification plugin available in addition to the dummy plugin that always returns "nolang".

The following plugin implementations can be selected when the FullTextBatchUpdaterResource is created:

org.diligentproject.indexservice.common.linguistics.jtextcat.JTextCatPlugin

org.diligentproject.indexservice.linguistics.fastplugin.FastLanguageIdPlugin

org.diligentproject.indexservice.common.linguistics.languageidplugin.DummyLangidPlugin

JTextCat

The JTextCat is maintained by http://textcat.sourceforge.net/. It is a lightweight text categorization language tool in Java. It implements the N-Gram-Based Text Categorization algorithm that is described here: http://citeseer.ist.psu.edu/68861.html. It supports the languages: German, English, French, Spanish, Italian, Swedish, Polish, Dutch, Norwegian, Finnish, Albanian, Slovakian, Slovenian, Danish and Hungarian.

The JTextCat is loaded and accessed by the plugin: org.diligentproject.indexservice.common.linguistics.jtextcat.JTextCatPlugin

The JTextCat contains no config or bigram files, since all the statistical data about the languages is contained in the package itself.

The JTextCat is delivered in the jar file: textcat-1.0.1.jar.

The license for the JTextCat: http://www.gnu.org/copyleft/lesser.html

Fastlangid

The Fast language identification module is developed by Fast. It supports "all" languages used on the web. The tool is implemented in C++. The C++ code is loaded as a shared library object. The Fast langid plugin interfaces with a Java wrapper that loads the shared library objects and calls the native C++ code. The shared library objects are compiled on Linux RHE3 and RHE4.

The Java native interface is generated using Swig.

The Fast langid module is loaded by the plugin (using the LanguageIdFactory)

org.diligentproject.indexservice.linguistics.fastplugin.FastLanguageIdPlugin

The plugin loads the shared object library and, when init is called, instantiates the native C++ objects that identify the languages.

The Fastlangid is in the SVN module: trunk/linguistics/fastlinguistics/fastlangid

The lib directory contains one subdirectory for RHE3 and one for RHE4 shared objects (.so). The etc directory contains the config files. The license string is contained in the config file config.txt.

The shared library object is called liblangid.so

The configuration files for the langid module are installed in $GLOBUS_LOCATION/etc/langid.

The org_diligentproject_indexservice_langid.jar contains the plugin FastLangidPlugin (that is loaded by the LanguageIdFactory) and the Java native interface to the shared library object.

The shared library object liblangid.so is deployed in the $GLOBUS_LOCATION/lib catalogue.

The license for the Fastlangid plugin:

Language Identifier Usage

The language identifier is used by the Full Text Updater in the Full Text Index. The plugin to use for an updater is decided when the resource is created, as a part of the create resource call (see Full Text Updater). The parameter is the fully qualified class name of the implementation to be loaded and used to identify the language.

The language identification module and the lemmatizer module are loaded at runtime by using a factory that loads the implementation that is going to be used.

The fed documents may specify the language per field. If present, this language is used when indexing the document, and the language id module is not used. If no language is specified in the document, and there is a language identification plugin loaded, the FullTextIndexBatchUpdater Service will try to identify the language of the field using the loaded plugin for language identification. Since language is assigned at the Collection level in DILIGENT, all fields of all documents in a language aware collection should contain a "lang" attribute with the language of the collection.

A language aware query can be performed at a query or term basis:

- the query "_querylang_en: car OR bus OR plane" will look for English occurrences of all the terms in the query.

- the queries "car OR _lang_en:bus OR plane" and "car OR _lang_en_title:bus OR plane" will only limit the terms "bus" and "title:bus" to English occurrences. (the version without a specified field will not work in the currently deployed indices)

- Since language is specified at a collection level, language aware queries should only be used for language neutral collections.
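The prefixes used in the queries above can be produced with simple string helpers. Only the prefix formats come from the examples in this section; the class LanguageAwareQuery and its method names are hypothetical.

```java
// Hypothetical string helpers; only the prefixes come from the text above.
class LanguageAwareQuery {
    // Restricts the whole query to one language: "_querylang_<lang>: <query>".
    static String wholeQuery(String lang, String query) {
        return "_querylang_" + lang + ": " + query;
    }

    // Restricts one term in a named field: "_lang_<lang>_<field>:<term>".
    static String term(String lang, String field, String term) {
        return "_lang_" + lang + "_" + field + ":" + term;
    }

    // Restricts one term without naming a field: "_lang_<lang>:<term>".
    static String term(String lang, String term) {
        return "_lang_" + lang + ":" + term;
    }

    public static void main(String[] args) {
        System.out.println(wholeQuery("en", "car OR bus OR plane"));
        System.out.println("car OR " + term("en", "title", "bus") + " OR plane");
    }
}
```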

Lemmatization

There is one real implementation of the lemmatizer plugin available in addition to the dummy plugin that always returns "" (empty string).

The plugin implementation is selected when the FullTextLookupResource is created:

org.diligentproject.indexservice.linguistics.fastplugin.FastLemmatizerPlugin

org.diligentproject.indexservice.common.linguistics.languageidplugin.DummyLemmatizerPlugin

Fastlemmatizer

The Fast lemmatizer module is developed by Fast. The lemmatizer module depends on .aut files (config files) for the language to be lemmatized. Both expansion and reduction are supported, but only expansion is used. The terms (nouns and adjectives) in the query are expanded.

The lemmatizer is configured for the following languages: German, Italian, Portuguese, French, English, Spanish, Dutch, Norwegian. To support more languages, additional .aut files must be loaded and the config file LemmatizationQueryExpansion.xml must be updated.

The lemmatizer is implemented in C++. The C++ code is loaded as a shared library object. The Fast lemmatizer plugin interfaces with a Java wrapper that loads the shared library objects and calls the native C++ code. The shared library objects are compiled on Linux RHE3 and RHE4.

The Java native interface is generated using Swig.

The Fast lemmatizer module is loaded by the plugin (using the LemmatizerFactory)

org.diligentproject.indexservice.linguistics.fastplugin.FastLemmatizerPlugin

The plugin loads the shared object library and, when init is called, instantiates the native C++ objects.

The Fastlemmatizer is in the SVN module: trunk/linguistics/fastlinguistics/fastlemmatizer

The lib directory contains one subdirectory for RHE3 and one for RHE4 shared objects (.so). The etc directory contains the config files. The license string is contained in the config file LemmatizerConfigQueryExpansion.xml. The shared library object is called liblemmatizer.so.

The configuration files for the lemmatizer module are installed in $GLOBUS_LOCATION/etc/lemmatizer.

The org_diligentproject_indexservice_lemmatizer.jar contains the plugin FastLemmatizerPlugin (that is loaded by the LemmatizerFactory) and the Java native interface to the shared library.

The shared library liblemmatizer.so is deployed in the $GLOBUS_LOCATION/lib catalogue.

The $GLOBUS_LOCATION/lib directory must therefore be included in the LD_LIBRARY_PATH environment variable.

Fast lemmatizer configuration

The LemmatizerConfigQueryExpansion.xml contains the paths to the .aut files that are loaded when a lemmatizer is instantiated.

<lemmas active="yes" parts_of_speech="NA">etc/lemmatizer/resources/dictionaries/lemmatization/en_NA_exp.aut</lemmas>

The path is relative to the environment variable GLOBUS_LOCATION. If this path is wrong, the Java virtual machine will core dump.
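Since a wrong path crashes the JVM rather than raising an exception, it can be worth verifying the configured paths before a lemmatizer is instantiated. The following is a hedged sketch of such a pre-flight check using java.nio.file; AutFileCheck is a hypothetical helper and not part of the Fast lemmatizer or the index service.

```java
import java.nio.file.Files;
import java.nio.file.Path;
import java.nio.file.Paths;

// Hypothetical pre-flight check; not part of the Fast lemmatizer.
class AutFileCheck {
    // Resolves a configured path against the GLOBUS_LOCATION directory.
    static Path resolve(String globusLocation, String relativePath) {
        return Paths.get(globusLocation).resolve(relativePath).normalize();
    }

    // True only if the resolved dictionary file exists and is readable.
    static boolean isUsable(String globusLocation, String relativePath) {
        Path p = resolve(globusLocation, relativePath);
        return Files.isRegularFile(p) && Files.isReadable(p);
    }

    public static void main(String[] args) {
        String globus = System.getenv("GLOBUS_LOCATION");
        String aut = "etc/lemmatizer/resources/dictionaries/lemmatization/en_NA_exp.aut";
        if (globus == null || !isUsable(globus, aut)) {
            System.err.println("Dictionary not found; do not start the lemmatizer: " + aut);
        } else {
            System.out.println("Dictionary found: " + resolve(globus, aut));
        }
    }
}
```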

The license for the Fastlemmatizer plugin:

Fast lemmatizer logging

The lemmatizer logs info, debug and error messages to the file "lemmatizer.txt".

Lemmatization Usage

The FullTextIndexLookup Service uses expansion during lemmatization; a word (of a query) is expanded into all known versions of the word. It is of course important to know the language of the query in order to know which words to expand the query with. Currently, the same methods used to specify the language for a language aware query are used to specify the language for the lemmatization process. A way of separating these two specifications (such that lemmatization can be performed without performing a language aware query) will be made available shortly.
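As a toy illustration of what expansion means, the sketch below replaces a term with an OR group of its known forms. The word forms are made up for the example and do not come from the Fast dictionaries, and rewriting into an OR group is an assumption about the shape of the expanded query, not a description of the Fast lemmatizer's output.

```java
import java.util.Arrays;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

// Toy illustration of query expansion; the word forms here are made up for the
// example and do not come from the Fast dictionaries.
class ExpansionSketch {
    static final Map<String, List<String>> FORMS = new HashMap<>();
    static {
        FORMS.put("car", Arrays.asList("car", "cars"));
        FORMS.put("mouse", Arrays.asList("mouse", "mice"));
    }

    // Expands a single term into an OR group of its known forms.
    // Terms without known forms are returned unchanged.
    static String expand(String term) {
        List<String> forms = FORMS.get(term.toLowerCase());
        if (forms == null || forms.size() < 2) {
            return term;
        }
        return "(" + String.join(" OR ", forms) + ")";
    }

    public static void main(String[] args) {
        System.out.println(expand("car"));
        System.out.println(expand("plane"));
    }
}
```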

Linguistics Licenses

The current license key for the fastlangid and fastlemmatizer is valid through March 2008.

If a new license is required please contact: Stefan.debald@fast.no to get a new license key.

The license must be installed both in the Fastlangid and the Fastlemmatizer module.

The fastlangid license is installed by updating the SVN text file: linguistics/fastlinguistics/fastlangid/etc/langid/config.txt

Use a text editor and replace the 32 character license string with the new license string:

// The license key
// Contact stefand.debald@fast.no for new license key:
LICSTR=KILMDEPFKHNBNPCBAKONBCCBFLKPOEFG

A running system is updated by replacing the license string in the file: $GLOBUS_LOCATION/etc/langid/config.txt as described above.
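The manual edit of config.txt could also be scripted. The sketch below works on the file content as a string and replaces only the LICSTR line; LicenseStringUpdate is a hypothetical helper, it assumes Unix line endings, and it checks nothing about the license beyond the 32-character length mentioned above.

```java
// Hypothetical helper for editing config.txt content; illustration only.
class LicenseStringUpdate {
    // Replaces the value of the LICSTR line; all other lines are left untouched.
    static String replaceLicense(String configText, String newLicense) {
        if (newLicense.length() != 32) {
            throw new IllegalArgumentException("license must be 32 characters long");
        }
        // The -1 limit keeps trailing empty strings, so a final newline survives.
        String[] lines = configText.split("\n", -1);
        boolean replaced = false;
        for (int i = 0; i < lines.length; i++) {
            if (lines[i].startsWith("LICSTR=")) {
                lines[i] = "LICSTR=" + newLicense;
                replaced = true;
            }
        }
        if (!replaced) {
            throw new IllegalArgumentException("no LICSTR line found");
        }
        return String.join("\n", lines);
    }

    public static void main(String[] args) {
        String config = "// The license key\nLICSTR=KILMDEPFKHNBNPCBAKONBCCBFLKPOEFG\n";
        // The new value is a placeholder, not a real license key.
        System.out.print(replaceLicense(config, "ABCDEFGHIJKLMNOPQRSTUVWXYZABCDEF"));
    }
}
```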

The fastlemmatizer license is installed by updating the SVN text file:

linguistics/fastlinguistics/fastlemmatizer/etc/LemmatizationConfigQueryExpansion.xml

Use a text editor and replace the 32 character license string with the new license string:

<lemmatization default_mode="query_expansion" default_query_language="en" license="KILMDEPFKHNBNPCBAKONBCCBFLKPOEFG">

The running system is updated by replacing the license string in the file: $GLOBUS_LOCATION/etc/lemmatizer/LemmatizationConfigQueryExpansion.xml

Partitioning

In order to handle situations where an Index replication does not fit on a single node, partitioning has been implemented for the FullTextIndexLookup Service; in cases where there is not enough space to perform an update/addition on the FullTextIndexLookup Service resource, a new resource will be created to handle all the content which didn't fit on the first resource. The partitioning is handled automatically and is transparent when performing a query; the possibility of enabling/disabling partitioning will be added in the future. In the deployed Indices, partitioning has been disabled due to problems with the creation of statistics. This will be fixed shortly.

Dependencies

Will be filled out shortly

Usage Example

Create a Management Resource

//Get the factory portType
String managementFactoryURI = "http://some.domain.no:8080/wsrf/services/diligentproject/index/FullTextIndexManagementFactoryService";
FullTextIndexManagementFactoryServiceAddressingLocator managementFactoryLocator = new FullTextIndexManagementFactoryServiceAddressingLocator();
managementFactoryEPR = new EndpointReferenceType();
managementFactoryEPR.setAddress(new Address(managementFactoryURI));
managementFactory = managementFactoryLocator.getFullTextIndexManagementFactoryPortTypePort(managementFactoryEPR);

//Create the management resource and get the endpoint reference of the WS-Resource.
org.diligentproject.indexservice.fulltextindexmanagement.stubs.CreateResource managementCreateArguments =
    new org.diligentproject.indexservice.fulltextindexmanagement.stubs.CreateResource();
managementCreateArguments.setIndexTypeName(indexType); //Optional (only needed if not provided in RS)
managementCreateArguments.setIndexID(indexID); //Optional (should usually not be set, and the service will create the ID)
managementCreateArguments.setCollectionID("myCollectionID");
managementCreateArguments.setContentType("MetaData");
org.diligentproject.indexservice.fulltextindexmanagement.stubs.CreateResourceResponse managementCreateResponse =
    managementFactory.createResource(managementCreateArguments);
managementInstanceEPR = managementCreateResponse.getEndpointReference();

//Keep the ID assigned by the service; it is needed to connect updaters and lookups
indexID = managementCreateResponse.getIndexID();

Create an Updater Resource and start feeding

//Get the factory portType
updaterFactoryURI = "http://some.domain.no:8080/wsrf/services/diligentproject/index/FullTextIndexBatchUpdaterFactoryService"; //could be on any node
updaterFactoryEPR = new EndpointReferenceType();
updaterFactoryEPR.setAddress(new Address(updaterFactoryURI));
updaterFactory = updaterFactoryLocator.getFullTextIndexBatchUpdaterFactoryPortTypePort(updaterFactoryEPR);

//Create updater resource and get endpoint reference of WS-Resource
org.diligentproject.indexservice.fulltextindexbatchupdater.stubs.CreateResource updaterCreateArguments =
    new org.diligentproject.indexservice.fulltextindexbatchupdater.stubs.CreateResource();

//Connect to the correct Index
updaterCreateArguments.setMainIndexID(indexID);

//Now let's insert some data into the index... Firstly, get the updater EPR.
org.diligentproject.indexservice.fulltextindexbatchupdater.stubs.CreateResourceResponse updaterCreateResponse =
    updaterFactory.createResource(updaterCreateArguments);
updaterInstanceEPR = updaterCreateResponse.getEndpointReference();

//Get updater instance PortType
updaterInstance = updaterInstanceLocator.getFullTextIndexBatchUpdaterPortTypePort(updaterInstanceEPR);

//Read the EPR of the ResultSet containing the ROWSETs to feed into the index
BufferedReader in = new BufferedReader(new FileReader(eprFile));
String line;
resultSetLocator = "";
while ((line = in.readLine()) != null) {
    resultSetLocator += line;
}
in.close();

//Tell the updater to start gathering data from the ResultSet
updaterInstance.process(resultSetLocator);

Create a Lookup resource and perform a query

//Let's put it on another node for fun...
lookupFactoryURI = "http://another.domain.no:8080/wsrf/services/diligentproject/index/FullTextIndexLookupFactoryService";
FullTextIndexLookupFactoryServiceAddressingLocator lookupFactoryLocator = new FullTextIndexLookupFactoryServiceAddressingLocator();
EndpointReferenceType lookupFactoryEPR = null;
EndpointReferenceType lookupEPR = null;
FullTextIndexLookupFactoryPortType lookupFactory = null;
FullTextIndexLookupPortType lookupInstance = null;

//Get factory portType
lookupFactoryEPR = new EndpointReferenceType();
lookupFactoryEPR.setAddress(new Address(lookupFactoryURI));
lookupFactory = lookupFactoryLocator.getFullTextIndexLookupFactoryPortTypePort(lookupFactoryEPR);

//Create resource and get endpoint reference of WS-Resource
org.diligentproject.indexservice.fulltextindexlookup.stubs.CreateResource lookupCreateResourceArguments =
    new org.diligentproject.indexservice.fulltextindexlookup.stubs.CreateResource();
org.diligentproject.indexservice.fulltextindexlookup.stubs.CreateResourceResponse lookupCreateResponse = null;
lookupCreateResourceArguments.setMainIndexID(indexID);
lookupCreateResponse = lookupFactory.createResource(lookupCreateResourceArguments);
lookupEPR = lookupCreateResponse.getEndpointReference();

//Get instance PortType (instanceLocator is the locator of the Lookup service; its declaration is not shown)
lookupInstance = instanceLocator.getFullTextIndexLookupPortTypePort(lookupEPR);

//Perform a query
String query = "good OR evil";
String epr = lookupInstance.query(query);

//Print the results to screen (refer to the ResultSet Framework page for a more detailed explanation)
RSXMLReader reader = null;
ResultElementBase[] results;
try {
    //Create a reader for the ResultSet we created
    reader = RSXMLReader.getRSXMLReader(new RSLocator(epr));

    //Print each part of the RS to std.out
    System.out.println("<Results>");
    do {
        System.out.println(" <Part>");
        if (reader.getNumberOfResults() > 0) {
            results = reader.getResults(ResultElementGeneric.class);
            for (int i = 0; i < results.length; i++) {
                System.out.println(" " + results[i].toXML());
            }
        }
        System.out.println(" </Part>");
        if (!reader.getNextPart()) {
            break;
        }
    } while (true);
    System.out.println("</Results>");
} catch (Exception e) {
    e.printStackTrace();
}

Getting statistics from a Lookup resource

String statsLocation = lookupInstance.createStatistics(new CreateStatistics());

//Connect to a CMS Running Instance
EndpointReferenceType cmsEPR = new EndpointReferenceType();
cmsEPR.setAddress(new Address("http://swiss.domain.ch:8080/wsrf/services/diligentproject/contentmanagement/ContentManagementServiceService"));
ContentManagementServiceServiceAddressingLocator cmslocator = new ContentManagementServiceServiceAddressingLocator();
cms = cmslocator.getContentManagementServicePortTypePort(cmsEPR);

//Retrieve the statistics file from CMS
GetDocumentParameters getDocumentParams = new GetDocumentParameters();
getDocumentParams.setDocumentID(statsLocation);
getDocumentParams.setTargetFileLocation(BasicInfoObjectDescription.RAW_CONTENT_IN_MESSAGE);
DocumentDescription description = cms.getDocument(getDocumentParams);

//Write the statistics file from memory to disk
File downloadedFile = new File("Statistics.xml");
DecompressingInputStream input = new DecompressingInputStream(
    new BufferedInputStream(new ByteArrayInputStream(description.getRawContent()), 2048));
BufferedOutputStream output = new BufferedOutputStream(
    new FileOutputStream(downloadedFile), 2048);
byte[] buffer = new byte[2048];
int length;
while ((length = input.read(buffer)) >= 0) {
    output.write(buffer, 0, length);
}
input.close();
output.close();

Authors

--Msibeko 14:36, 1 June 2007 (EEST)