Difference between revisions of "Statistical Algorithms Importer: Docker Support"

Revision as of 14:33, 24 September 2020

A Docker image is an easy way to deliver software in packages called containers. Containers are isolated from one another and bundle their own software, libraries and configuration files, so they can help simplify the configuration of the D4Science infrastructure. D4Science delivers a solution that makes it possible to exploit Docker while preserving the main features of the D4Science infrastructure: replicability, reusability, sharing and accounting of the execution are all preserved by using the Docker Image Executor algorithm.

This page explains how to create and run Docker Images in the D4Science infrastructure through the DataMiner Manager service and the algorithms developed with the Statistical Algorithms Importer (SAI). More information on Docker can be found at https://www.docker.com/.

The Docker Image Executor Algorithm

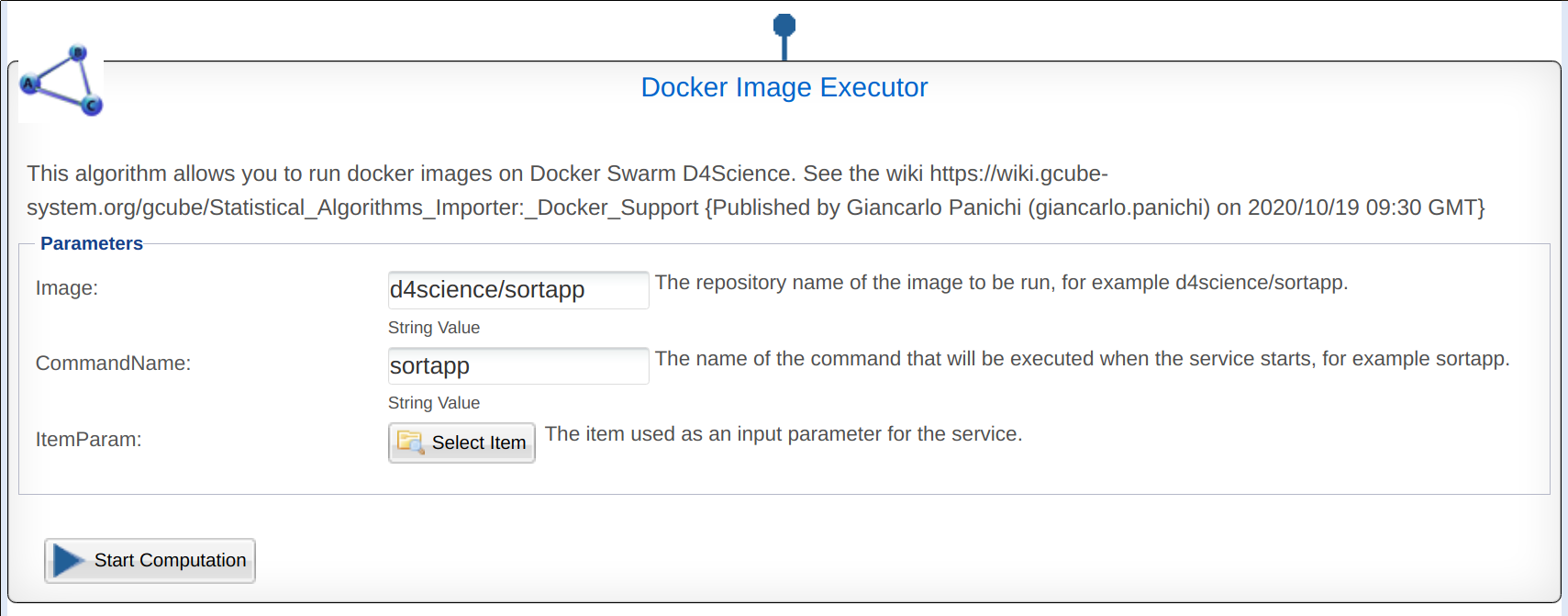

The Docker Image Executor algorithm allows its users to retrieve an image from a Docker Hub repository and run it in the D4Science Swarm cluster. Only public repositories are supported. The algorithm is already published and made available by the D4Science infrastructure.

To run the algorithm the user must enter:

- Image, the name of the repository (e.g. d4science/sortapp)

- CommandName, the name of the command to invoke when the service is started (e.g. sortapp)

- FileParam, a file stored in the user's workspace to be passed as an input parameter along with the run command (e.g. sortableelements.txt)

- There is no specific constraint on the file format or content; every app developer is free to use the most suitable one.

This algorithm will take care of retrieving the user token and passing the parameters to the Docker Service in this format:

<command-name> <token> <file-item-id> <temp-dir-item-id>

The algorithm delivers the token and the input file to the service created from the chosen image. It also delivers the id of a temporary folder created on the StorageHub service, which is meant to hold the results of the computation. The service created from the chosen image is responsible for saving its output data in that folder by interacting with the StorageHub service. When the execution of the Docker Service completes, the Docker Image Executor algorithm returns the content of the folder as a zip file. It is therefore important that the Docker Image is written with these constraints in mind.
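As an illustration of the parameter format above, the following is a minimal sketch of how a Python application packed in an image might read the three values that follow the command name. The names `ExecutorArgs` and `parse_executor_args` are hypothetical and not part of any D4Science library, and the sample values are placeholders.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ExecutorArgs:
    """Values passed after <command-name> (class name is hypothetical)."""
    token: str
    file_item_id: str
    temp_dir_item_id: str


def parse_executor_args(argv):
    """Parse the arguments that follow the command name.

    `argv` is expected to hold exactly three items, in this order:
    <token> <file-item-id> <temp-dir-item-id>.
    """
    if len(argv) != 3:
        raise ValueError("expected: <token> <file-item-id> <temp-dir-item-id>")
    token, file_item_id, temp_dir_item_id = argv
    return ExecutorArgs(token, file_item_id, temp_dir_item_id)


# Demonstration with placeholder values; a real entry point would pass
# sys.argv[1:] instead of a literal list.
args = parse_executor_args(["placeholder-token", "file-item-1", "temp-dir-9"])
print(args.file_item_id, args.temp_dir_item_id)
```

Failing early on a wrong argument count makes a misconfigured CommandName or image visible immediately. The sketch deliberately avoids printing the token.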

How to Create a Docker Image

This section describes an example of how to create a Docker Image suitable for running via the Docker Image Executor algorithm. The image is built from the base python:3.6-alpine image and installs the sortapp application, written in Python 3.6 (see Dockerfile).

- The image is available on Docker Hub: d4science/sortapp

The sortapp application built in this example simply sorts strings. The strings are contained in the file indicated by the FileParam parameter.
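The core of such an application can be sketched in a few lines. This is not the actual sortapp source; it assumes, purely for illustration, that the input file holds one string per line (the page does not fix any file format), and the function name `sort_lines` is hypothetical.

```python
def sort_lines(text):
    """Return the non-empty lines of `text`, stripped and sorted.

    Assumption: one string per line. Any other input layout would need
    a different parser.
    """
    lines = [line.strip() for line in text.splitlines()]
    return sorted(line for line in lines if line)


sample = "pear\napple\n\nbanana\n"
print(sort_lines(sample))  # ['apple', 'banana', 'pear']
```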

This is just an example: the image could also be built using other languages and different base images. What is mandatory is that the software packed in the image accepts the parameters as passed by the Docker Image Executor and saves its results in the temporary folder on StorageHub, as described above.
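The two mandatory constraints can be summarised as a small skeleton. The sketch below is language-agnostic in spirit and does not call any real StorageHub API: `download` and `upload` are hypothetical callables standing in for whatever StorageHub client the image uses, and the in-memory dictionaries and the output name `result.txt` exist only to make the example runnable.

```python
def run_job(token, file_item_id, temp_dir_item_id, download, upload, compute):
    """Fetch the input, compute, and store the result in the temp folder.

    `download(token, item_id)` and `upload(token, folder_id, name, content)`
    are hypothetical stand-ins for StorageHub interactions.
    """
    data = download(token, file_item_id)
    result = compute(data)
    # "result.txt" is an arbitrary output name chosen for this sketch.
    upload(token, temp_dir_item_id, "result.txt", result)
    return result


# In-memory stand-ins for the workspace file and the temporary folder.
files = {"file-item-1": "b\nc\na\n"}
folders = {"temp-dir-9": {}}


def fake_download(token, item_id):
    return files[item_id]


def fake_upload(token, folder_id, name, content):
    folders[folder_id][name] = content


def sort_text(text):
    return "\n".join(sorted(text.split()))


run_job("placeholder-token", "file-item-1", "temp-dir-9",
        fake_download, fake_upload, sort_text)
print(folders["temp-dir-9"])
```

Passing the storage functions in as parameters keeps the computation testable without access to the infrastructure; a real image would replace the fakes with calls to the StorageHub service.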