Legacy applications integration

Latest revision as of 16:59, 6 July 2016

Context

The Geospatial Cluster aims to provide:

- Data discovery across internal and external geospatial data repositories

- Data access to the discovered data

- Data processing of discovered or accessed data

- Data visualization of discovered, accessed or processed data

This page focuses on the geospatial data processing of discovered/accessed data with Legacy Applications.

Objectives

The main objectives for the geospatial data processing are:

- Defining the needs for enriching bio-ecological or activity occurrences with environmental data: OBIS Ocean Physics, VTI, VME

- Designing and planning the implementation of the enrichment capability

- Providing advanced geospatial analytical and modelling features (e.g. R geospatial, reallocation, aggregation)

- Defining the advanced geospatial processes required for reallocation, aggregation and interpolation

- Designing and planning the implementation of the geospatial processing capability

What are Legacy Applications

Legacy Applications are existing software applications written in third-party languages such as R, IDL, MatLab or Python. Legacy Applications cannot be rewritten in Java because:

- they come from very specific knowledge domains that are hard to transfer to developers

- rewriting them is time- and resource-consuming

- converted applications would be hard to maintain

- the time-to-market would be too long

- it would limit the number of applications that can be supported

Typical examples are applications written in R, IDL and MatLab, which are common software packages for developing scientific applications.

The computing resources and interfaces for Legacy Applications

The OGC Web Processing Service (WPS) allows exposing processing services over geospatial data. 52North has implemented a WPS Java framework in which processing algorithms (e.g. spatial resampling, temporal aggregation, etc.) are implemented as WPS processes that can be invoked by clients. This implementation provides no computing resources beyond the server hosting the WPS implementation, so scalability is not ensured and QoS/SLA cannot be guaranteed.

The Hadoop Map/Reduce model is used to provide the processing resources, where:

- Processes can be pure Map/Reduce implementations using Java libraries that are packaged and deployed by Hadoop Map/Reduce at runtime. This approach is not applicable to Legacy Applications.

- Processes can be written in third-party or other languages (bash, Python, etc.) and run through Map/Reduce Streaming (pipes).

Coupling the two makes it possible to expose geospatial processing services through the OGC WPS interface while exploiting scalable processing resources.

Legacy Applications therefore rely on Hadoop Map/Reduce Streaming, a utility that allows users to create and run jobs with any executable (e.g. shell utilities) as the mapper and/or the reducer.
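Hadoop Streaming exchanges records with the executable as lines of text on standard input and standard output. By default, the part of an output line up to the first tab character is taken as the key and the rest as the value; a line without a tab becomes a key with a null value. The Java sketch below only mimics that convention to make it concrete; the class `StreamingRecord` is hypothetical and not part of the Hadoop API.

```java
import java.util.AbstractMap.SimpleEntry;
import java.util.Map;

/** Hypothetical helper mimicking Hadoop Streaming's default key/value split of an output line. */
class StreamingRecord {
    /**
     * Text before the first tab is the key, the remainder is the value.
     * A line without any tab is an all-key record with a null value.
     */
    static Map.Entry<String, String> parse(String line) {
        int tab = line.indexOf('\t');
        if (tab < 0) {
            return new SimpleEntry<>(line, null);
        }
        return new SimpleEntry<>(line.substring(0, tab), line.substring(tab + 1));
    }
}
```

The R Hello World! output lines contain no tab, so each whole line would be treated as a key.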

Types of input parameters in WPS-Hadoop

In order to exploit the parallel computing model offered by Hadoop Map/Reduce, we define two types of parameters:

- Fixed input parameters - parameters whose values are the same for every value being processed (e.g. PI=3.14, a=2, b=3). They are passed as environment variables at processing runtime.

- Mapped input parameters - parameters used as input to the Hadoop Map/Reduce mapper. When a mapped input parameter contains more than one value, Hadoop Map/Reduce will try to spread the work over more task trackers at runtime.
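How the mapped values are spread over map tasks depends on how the mapper input is split, which this page does not specify. One option, assumed here rather than prescribed, is to write one mapped value per line and use Hadoop's `NLineInputFormat`, which gives each map task a fixed number of lines. The hypothetical `MappedInputSplitter` below simulates that grouping only; fixed parameters play no part in it because every task receives the same values through its environment.

```java
import java.util.ArrayList;
import java.util.List;

/**
 * Hypothetical simulation: mapped values (one per line of mapper input) are grouped
 * into map tasks of at most linesPerMap lines, in the style of an NLineInputFormat split.
 */
class MappedInputSplitter {
    static List<List<String>> split(List<String> mappedValues, int linesPerMap) {
        if (linesPerMap < 1) {
            throw new IllegalArgumentException("linesPerMap must be >= 1");
        }
        List<List<String>> tasks = new ArrayList<>();
        for (int i = 0; i < mappedValues.size(); i += linesPerMap) {
            int end = Math.min(i + linesPerMap, mappedValues.size());
            // Copy the slice so each simulated task owns its own list.
            tasks.add(new ArrayList<>(mappedValues.subList(i, end)));
        }
        return tasks;
    }
}
```

With the default text input format, a small file of a few words usually fits in a single split and therefore runs in a single mapper, which is why the splitting strategy matters for parallelism.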

Architectural Design

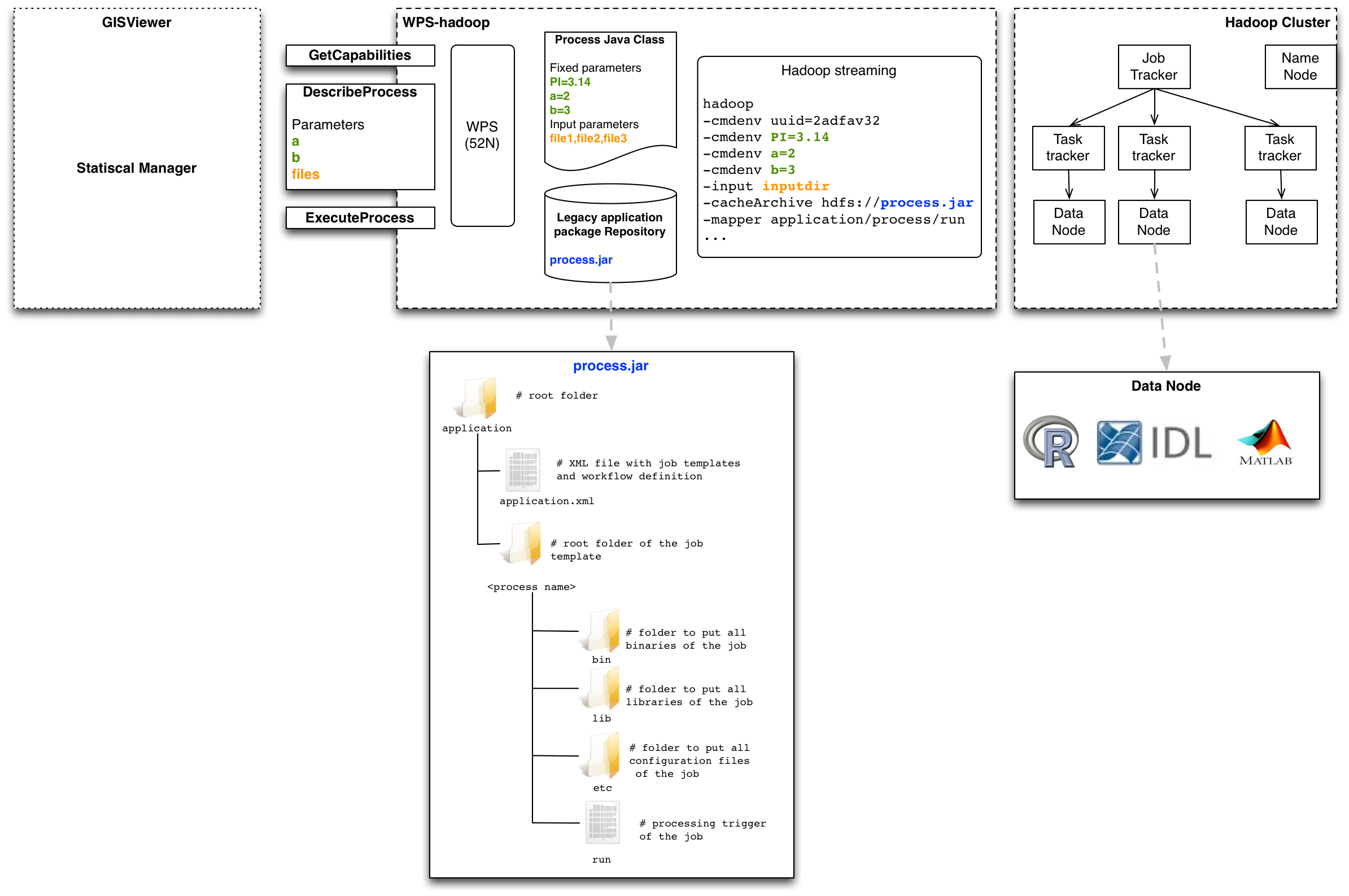

The figure below depicts the overall approach to support Legacy Applications with WPS-Hadoop.

The sub-sections below describe the architectural design elements bottom-up.

The Legacy Application: R Hello World!

We use a very simple R application that takes two fixed parameters, a=2 and b=3, and a few words as the mapped parameter.

The R Hello World! code is provided below:

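```r
#!/usr/bin/Rscript --vanilla --slave
# Arguments: word (mapped value), then a and b (fixed parameters)
args <- commandArgs(trailingOnly = TRUE)
word <- args[1]
a <- as.numeric(args[2])
b <- as.numeric(args[3])
total <- a + b  # named 'total' rather than 'c' to avoid confusion with R's c()
cat('Hello world, ', word, '! ', sep = '')
cat('The sum of ', a, ' and ', b, ' is ', total, '\n', sep = '')
```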

The first line (the shebang) tells the OS which interpreter to use. Note that the R script must be made executable (chmod +x).

The Legacy Application processing trigger script

The Legacy Application processing trigger script is a bash script (any other scripting language would do) that manages the inputs and outputs:

- Deal with the inputs that come from Hadoop Map/Reduce as:
    - environment variables for the fixed input parameters
    - lines piped on standard input for the mapped parameter values
- Publish the outputs generated by the Legacy Application

The R Hello World! processing trigger script is provided below:

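```bash
#!/bin/bash
# Fixed parameters a and b arrive as environment variables (-cmdenv);
# each mapped value (a word) arrives as one line on standard input.
while read -r word
do
    # test.r is the R Hello World! script, assumed executable and on the PATH
    test.r "$word" "$a" "$b"
done
```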
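Before deploying, the trigger script can be exercised locally in the way described above: fixed parameters in the environment, mapped values piped one per line. The hypothetical `MapperHarness` class below does this with the JDK's `ProcessBuilder`; it is a test aid under those assumptions, not part of WPS-Hadoop or Hadoop.

```java
import java.io.BufferedReader;
import java.io.IOException;
import java.io.InputStreamReader;
import java.io.OutputStreamWriter;
import java.io.UncheckedIOException;
import java.io.Writer;
import java.nio.charset.StandardCharsets;
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical local harness imitating how a streaming mapper is driven. */
class MapperHarness {
    static List<String> run(List<String> command, Map<String, String> fixedParams,
                            List<String> mappedValues) {
        ProcessBuilder pb = new ProcessBuilder(command);
        pb.environment().putAll(fixedParams);  // plays the role of -cmdenv name=value
        pb.redirectError(ProcessBuilder.Redirect.INHERIT);
        try {
            Process p = pb.start();
            // Writing all input before reading is fine for small examples;
            // large outputs would need a separate reader thread to avoid blocking.
            try (Writer stdin = new OutputStreamWriter(p.getOutputStream(), StandardCharsets.UTF_8)) {
                for (String value : mappedValues) {
                    stdin.write(value);
                    stdin.write('\n');
                }
            }
            List<String> output = new ArrayList<>();
            try (BufferedReader stdout = new BufferedReader(
                    new InputStreamReader(p.getInputStream(), StandardCharsets.UTF_8))) {
                String line;
                while ((line = stdout.readLine()) != null) {
                    output.add(line);
                }
            }
            int exit = p.waitFor();
            if (exit != 0) {
                throw new IllegalStateException("mapper exited with status " + exit);
            }
            return output;
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        } catch (InterruptedException e) {
            Thread.currentThread().interrupt();
            throw new IllegalStateException(e);
        }
    }
}
```

For the R Hello World! example, the command could be something like `List.of("bash", "run")`, assuming the trigger script is saved as `run` (the name used in the job template) and `test.r` can be found on the PATH, with `Map.of("a", "2", "b", "3")` as fixed parameters.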

The Legacy Application WPS-Hadoop process

The Legacy Application WPS-Hadoop process is a Java class that transforms the WPS process inputs (see next sub-section) into Hadoop Map/Reduce streaming arguments where:

- Fixed input parameters are passed as environment variables using the -cmdenv name=value option, which can be repeated to pass several parameters. In our simple example, the Java class defines:

```shell
hadoop ... -cmdenv a=2 -cmdenv b=3
```

- Mapped input parameter values are passed to the mapper through pipes (standard input).
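The transformation performed by the Java class can be sketched as building the argument list of the streaming job. In this sketch, assumed rather than taken from WPS-Hadoop, each fixed parameter becomes a repeated `-cmdenv name=value` pair and the mapped values are read by the mapper from the `-input` path. Hadoop requires generic options such as `-archives` (used for the jar package) to precede streaming options such as `-input` and `-cmdenv`, so the optional archive is placed first. The class name, paths and mapper name are hypothetical.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;

/** Hypothetical builder for the Hadoop Streaming arguments of a Legacy Application job. */
class StreamingArgs {
    /** archive is the optional HDFS URI of the application jar (may be null). */
    static List<String> build(String archive, String inputPath, String outputPath,
                              String mapper, Map<String, String> fixedParams) {
        List<String> args = new ArrayList<>();
        // Generic options must come before the streaming options.
        if (archive != null) {
            args.add("-archives");
            args.add(archive);
        }
        args.add("-input");
        args.add(inputPath);   // file holding the mapped values, one per line
        args.add("-output");
        args.add(outputPath);
        args.add("-mapper");
        args.add(mapper);      // the trigger script
        // One -cmdenv name=value pair per fixed input parameter.
        for (Map.Entry<String, String> p : fixedParams.entrySet()) {
            args.add("-cmdenv");
            args.add(p.getKey() + "=" + p.getValue());
        }
        return args;
    }
}
```

The resulting list would follow `hadoop jar <path to the streaming jar>` on the command line; the streaming jar location depends on the Hadoop installation. Passing a `LinkedHashMap` keeps the `-cmdenv` pairs in a predictable order.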

The Legacy Application files (R code) and the processing trigger script are packaged as a jar on the WPS-Hadoop server and deployed on the Hadoop Map/Reduce cluster at runtime, which eases maintenance of the Legacy Application. The Java class then has to:

- copy the jar package to the HDFS file system of the target Hadoop Map/Reduce cluster

- set the Hadoop Streaming option -archives to the HDFS path of the jar copied in the previous step:

```shell
-archives hdfs://<path to RHelloWorld.jar>
```
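The packaging step can be sketched with the JDK's `java.util.jar` classes: every regular file under an application directory is added to the jar with a path relative to that directory. The class `AppPackager` and any directory layout used with it are assumptions for illustration; the structure actually proposed for the package is the one given in the jar package figure.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.io.UncheckedIOException;
import java.nio.file.Files;
import java.nio.file.Path;
import java.util.List;
import java.util.jar.JarEntry;
import java.util.jar.JarOutputStream;
import java.util.stream.Collectors;
import java.util.stream.Stream;

/** Hypothetical packager: R code, trigger script, etc. under appDir go into one jar. */
class AppPackager {
    static void pack(Path appDir, Path jarFile) {
        try (Stream<Path> walk = Files.walk(appDir);
             OutputStream fileOut = Files.newOutputStream(jarFile);
             JarOutputStream jar = new JarOutputStream(fileOut)) {
            List<Path> files = walk.filter(Files::isRegularFile).sorted().collect(Collectors.toList());
            for (Path file : files) {
                // Jar entry names are relative and always use '/' as separator.
                String name = appDir.relativize(file).toString().replace('\\', '/');
                jar.putNextEntry(new JarEntry(name));
                Files.copy(file, jar);
                jar.closeEntry();
            }
        } catch (IOException e) {
            throw new UncheckedIOException(e);
        }
    }
}
```

Entries written this way do not record Unix permission bits, so it is worth checking on the cluster whether `test.r` is still executable once the archive is unpacked; calling it as `Rscript test.r` from the trigger script avoids depending on the executable bit.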

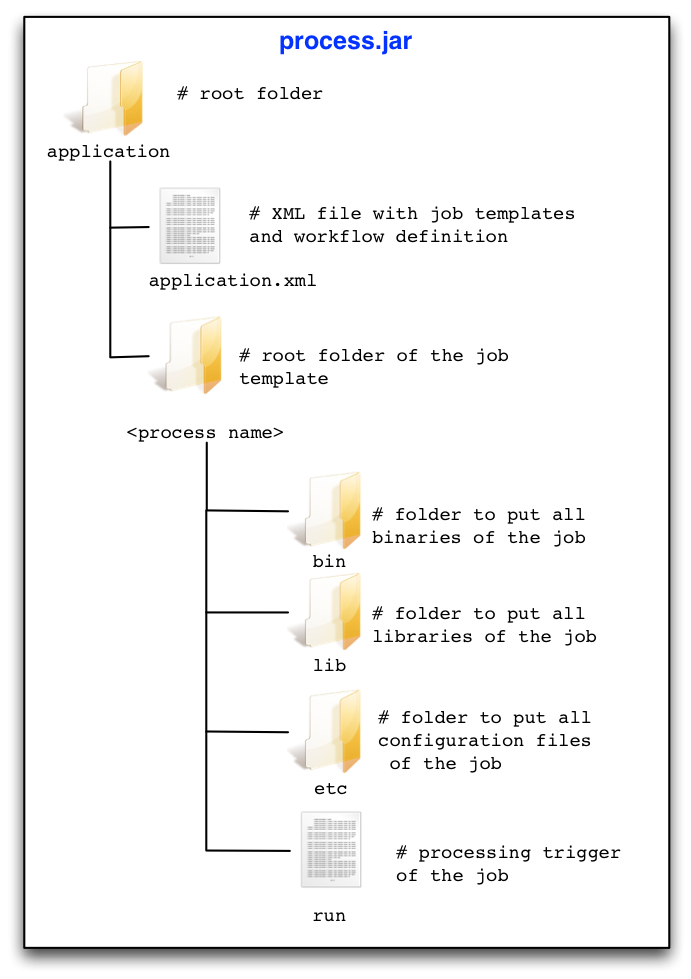

The structure proposed for the contents of the jar package is:

Although the contents include an application.xml file, it is not required at this stage. It nevertheless paves the way for defining job workflows with Oozie.

For that purpose, the application.xml file would have two main blocks:

- the job(s) template section

- the workflow template section.

The first part defines the job templates in the XML application definition file.

At this stage, the workflow has a single processing block, which needs one job template.

A proposed example contains the XML lines below:

```xml
<jobTemplate id="jobname">
  <streamingExecutable>/application/RHelloWorld/run</streamingExecutable>
  <defaultParameters>
    <parameter id="a">2</parameter>
    <parameter id="b">3</parameter>
  </defaultParameters>
  <defaultJobconf>
    <property id="app.job.max.tasks">4</property>
  </defaultJobconf>
</jobTemplate>
```
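Since application.xml is not required yet, nothing reads the job template today. Assuming a future reader, the sketch below loads the proposed `jobTemplate` with the JDK's DOM parser so that the default parameters could later feed the `-cmdenv` pairs. The class and field names are hypothetical, and `app.job.max.tasks` is only read back, not mapped to any Hadoop setting.

```java
import java.io.ByteArrayInputStream;
import java.nio.charset.StandardCharsets;
import java.util.LinkedHashMap;
import java.util.Map;
import javax.xml.parsers.DocumentBuilderFactory;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;

/** Hypothetical reader for the proposed jobTemplate element of application.xml. */
class JobTemplate {
    final String id;
    final String streamingExecutable;
    final Map<String, String> defaultParameters = new LinkedHashMap<>();
    final Map<String, String> defaultJobconf = new LinkedHashMap<>();

    private JobTemplate(String id, String streamingExecutable) {
        this.id = id;
        this.streamingExecutable = streamingExecutable;
    }

    static JobTemplate parse(String xml) {
        try {
            Document doc = DocumentBuilderFactory.newInstance()
                    .newDocumentBuilder()
                    .parse(new ByteArrayInputStream(xml.getBytes(StandardCharsets.UTF_8)));
            Element root = doc.getDocumentElement();
            String exe = root.getElementsByTagName("streamingExecutable").item(0).getTextContent().trim();
            JobTemplate t = new JobTemplate(root.getAttribute("id"), exe);
            collect(root, "parameter", t.defaultParameters); // entries of <defaultParameters>
            collect(root, "property", t.defaultJobconf);     // entries of <defaultJobconf>
            return t;
        } catch (Exception e) {
            throw new IllegalArgumentException("not a valid jobTemplate", e);
        }
    }

    /** Copies every <tag id="...">value</tag> element into target as id -> value. */
    private static void collect(Element root, String tag, Map<String, String> target) {
        NodeList nodes = root.getElementsByTagName(tag);
        for (int i = 0; i < nodes.getLength(); i++) {
            Element el = (Element) nodes.item(i);
            target.put(el.getAttribute("id"), el.getTextContent().trim());
        }
    }
}
```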

The Legacy Application WPS interface

The Legacy Application WPS interface is how the client sees the integrated Legacy Application: as an OGC WPS process.

The OGC WPS specification defines three operations:

- GetCapabilities - this operation allows clients to retrieve the processes metadata. The response is an XML document listing all exposed processes (services) by identifier (not by URL).

- DescribeProcess - this operation allows clients to retrieve the full description of a process using its identifier (returned by the GetCapabilities operation). The response includes information about parameters, input data and formats.

- Execute - this operation allows WPS clients to request the execution of a given WPS process. The request includes the service, request, version, identifier, data inputs, response form and language.
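For a quick check of a deployed process, the three operations can be called with WPS 1.0.0 key-value-pair (HTTP GET) requests. The sketch below builds such URLs; the endpoint URL and the process identifier `RHelloWorld` are hypothetical, and XML (HTTP POST) requests, response forms and language selection are not covered. Only the input names and values are URL-encoded, so the `=` and `;` separators of `DataInputs` stay readable.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.StringJoiner;

/** Hypothetical builder for WPS 1.0.0 KVP (HTTP GET) request URLs. */
class WpsRequests {
    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    static String getCapabilities(String endpoint) {
        return endpoint + "?service=WPS&request=GetCapabilities";
    }

    static String describeProcess(String endpoint, String identifier) {
        return endpoint + "?service=WPS&version=1.0.0&request=DescribeProcess&identifier=" + enc(identifier);
    }

    static String execute(String endpoint, String identifier, Map<String, String> inputs) {
        // DataInputs: name=value pairs separated by ';'
        StringJoiner dataInputs = new StringJoiner(";");
        for (Map.Entry<String, String> e : inputs.entrySet()) {
            dataInputs.add(enc(e.getKey()) + "=" + enc(e.getValue()));
        }
        return endpoint + "?service=WPS&version=1.0.0&request=Execute&identifier=" + enc(identifier)
                + "&DataInputs=" + dataInputs;
    }
}
```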

The Legacy Application DescribeProcess WPS response is partly defined by an XML file stored on the WPS-Hadoop server.

The R Hello World! file is shown below:

TBW

Hadoop Map/Reduce cluster requirements

The Hadoop Map/Reduce clusters that serve Legacy Applications (only where required):

- Must have the legacy software installed (including licenses where applicable):
    - R with all required packages. Additional packages could be deployed at runtime in the user space, but this slows down the processing and is therefore not recommended. Baseline change requests can be submitted to include new R packages in the Hadoop Map/Reduce clusters.
    - MatLab (requires a commercial license)
    - Octave (runs some MatLab code, no license required)
    - IDL (requires a commercial license)
- Do not need the Legacy Applications themselves to be deployed, since these are managed by WPS-Hadoop at runtime

Roles

The following roles have been identified:

- Legacy application provider - this actor provides the Legacy Application code. He/she does not need any knowledge of WPS-Hadoop, but must understand the WPS specification in order to define the WPS process interface.

- Legacy application integrator - this actor implements all aspects of the Legacy Application integration in WPS-Hadoop. He/she should not change the Legacy Application code.

- Legacy application client integrator - this actor implements the WPS client in the VREs for process exploitation.

Information the Legacy Application provider has to gather

The Legacy Application provider provides:

- WPS process interface (XML file) with:
    - Title
    - Abstract
    - Data Inputs
    - Data Outputs

- Pseudo-code of the process logic, so that the integrator can implement the Java process class and the wrapping script

- Legacy Application package following the agreed structure (possibly delivered via a software repository)
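The hand-over can be treated as a checklist. The hypothetical `ProcessInterfaceSpec` below records the items listed above and, as an assumption beyond this page, tags each data input as fixed or mapped so that the integrator knows whether it becomes a `-cmdenv` variable or a line of mapper input.

```java
import java.util.ArrayList;
import java.util.List;

/** Hypothetical checklist of what the Legacy Application provider hands over. */
class ProcessInterfaceSpec {
    /** How an input reaches the Legacy Application at runtime (assumed classification). */
    enum InputKind { FIXED, MAPPED }

    static final class Input {
        final String identifier;
        final InputKind kind;

        Input(String identifier, InputKind kind) {
            this.identifier = identifier;
            this.kind = kind;
        }
    }

    final String title;
    final String abstractText;  // "abstract" is a reserved word in Java
    final List<Input> dataInputs;
    final List<String> dataOutputs;

    ProcessInterfaceSpec(String title, String abstractText,
                         List<Input> dataInputs, List<String> dataOutputs) {
        this.title = title;
        this.abstractText = abstractText;
        this.dataInputs = dataInputs == null ? List.of() : dataInputs;
        this.dataOutputs = dataOutputs == null ? List.of() : dataOutputs;
    }

    /** Names of the required items that are still missing; empty when complete. */
    List<String> missingItems() {
        List<String> missing = new ArrayList<>();
        if (title == null || title.isBlank()) missing.add("Title");
        if (abstractText == null || abstractText.isBlank()) missing.add("Abstract");
        if (dataInputs.isEmpty()) missing.add("Data Inputs");
        if (dataOutputs.isEmpty()) missing.add("Data Outputs");
        return missing;
    }
}
```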