Spatial Data Processing

Revision as of 08:04, 1 August 2012

Overview

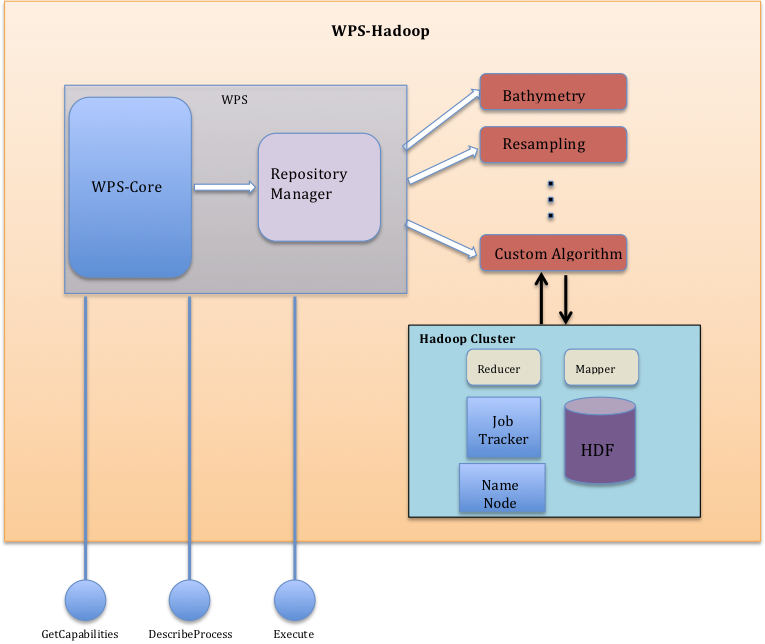

Geospatial Data Processing uses the OGC Web Processing Service (WPS) as a web interface that allows the dynamic deployment of user processes. The WPS chosen here is the 52°North WPS, which supports the development and deployment of user “algorithms”. It has been demonstrated that such “algorithms” can be developed to run on the powerful, distributed framework offered by Apache™ Hadoop™ MapReduce.

Thus was born WPS-hadoop.

Key Features

WPS-hadoop offers a web interface for accessing the algorithms from external HTTP clients. It does so through three different kinds of requests, made available by the 52°North WPS:

- The GetCapabilities operation provides access to general information about a live WPS implementation, and lists the operations and access methods supported by that implementation. 52N WPS supports the GetCapabilities operation via HTTP GET and POST.

- The DescribeProcess operation allows WPS clients to request a full description of one or more processes that can be executed by the service. This description includes the input and output parameters and formats and can be used to automatically build a user interface to capture the parameter values to be used to execute a process.

- The Execute operation allows WPS clients to run a specified process implemented by the server, using the input parameter values provided and returning the output values produced. Inputs can be included directly in the Execute request, or reference web accessible resources.
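Over HTTP GET, the first two operations are plain key-value-pair (KVP) URLs. The following is a minimal Java sketch that builds them for a given endpoint. It is not part of WPS-hadoop or 52°North. The class name WpsKvpRequests is hypothetical, and the parameter names follow the WPS 1.0.0 KVP encoding used elsewhere on this page.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.StringJoiner;

// Hypothetical helper (not part of WPS-hadoop or 52°North): builds WPS 1.0.0
// KVP (HTTP GET) URLs for the GetCapabilities and DescribeProcess operations.
final class WpsKvpRequests {

    private WpsKvpRequests() {}

    // URL-encodes one parameter value as UTF-8 (requires Java 10+).
    private static String enc(String value) {
        return URLEncoder.encode(value, StandardCharsets.UTF_8);
    }

    // GetCapabilities: service metadata plus the list of offered processes.
    static String getCapabilities(String endpoint) {
        return endpoint + "?Request=GetCapabilities&Service=WPS";
    }

    // DescribeProcess: inputs, outputs and formats of one or more processes.
    // Several identifiers go into one comma-separated Identifier parameter.
    static String describeProcess(String endpoint, String... identifiers) {
        if (identifiers.length == 0) {
            throw new IllegalArgumentException("at least one process identifier is required");
        }
        StringJoiner ids = new StringJoiner(",");
        for (String id : identifiers) {
            ids.add(enc(id));
        }
        return endpoint + "?Request=DescribeProcess&Service=WPS&Version=1.0.0&Identifier=" + ids;
    }
}
```

For example, `describeProcess("http://localhost:8080/wps/WebProcessingService", "com.terradue.wps.BathymetryAlgorithm")` yields `http://localhost:8080/wps/WebProcessingService?Request=DescribeProcess&Service=WPS&Version=1.0.0&Identifier=com.terradue.wps.BathymetryAlgorithm`.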

Design

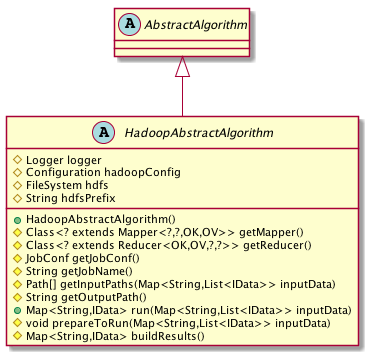

By extending the AbstractAlgorithm class (provided by 52°North), we created a new abstract class called HadoopAbstractAlgorithm. Its business logic, hidden from the developer, executes the process by creating a job for the Hadoop framework.

Develop a custom process

The custom process class must extend HadoopAbstractAlgorithm. This class lets you specify:

- the Hadoop configuration parameters (e.g. from XML files);
- the Mapper and Reducer classes;
- the input paths and the output path;
- all the operations needed before running the process;
- the way to retrieve the results.

A subclass of HadoopAbstractAlgorithm only needs to implement the following methods:

- protected Class<? extends Mapper<?, ?, LongWritable, Text>> getMapper()

This method returns the class to be used as Mapper;

- protected Class<? extends Reducer<LongWritable, Text, ?, ?>> getReducer()

This method returns the class to be used as Reducer (if any);

- protected Path[] getInputPaths(Map<String, List<IData>> inputData)

This method tells the business logic the exact input path(s) to pass to the Hadoop framework;

- protected String getOutputPath()

This method tells the business logic the exact output path to pass to the Hadoop framework;

- protected Map buildResults()

This method is called by the business logic to build the output that the WPS expects;

- public void prepareToRun(Map<String, List<IData>> inputData)

This method must contain all the operations to perform before running the Hadoop job (e.g. WPS input validation);

- protected JobConf getJobConf()

This method lets the user specify all the configuration resources for the Hadoop framework (e.g. XML configuration files).
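This division of labour is the template-method pattern: the abstract class owns the workflow, and a concrete process only fills in the hooks. The Java sketch below illustrates the idea without any Hadoop or 52°North dependency. It is hypothetical throughout:

- SketchHadoopAlgorithm, getInputLines, map and reduce are illustrative stand-ins.
- An in-memory loop replaces the Hadoop job.
- A real process instead extends HadoopAbstractAlgorithm and returns Mapper/Reducer classes, Path[] inputs and a JobConf.

```java
import java.util.ArrayList;
import java.util.List;
import java.util.Map;
import java.util.TreeMap;
import java.util.function.BiConsumer;

// Hypothetical sketch of the template-method idea: run() is the fixed
// "business logic" and subclasses only fill in the hooks. An in-memory loop
// stands in for the Hadoop job; these are NOT the real WPS-hadoop signatures.
abstract class SketchHadoopAlgorithm {

    // Plays the role of prepareToRun(): validate WPS inputs before the job starts.
    public abstract void prepareToRun(Map<String, List<String>> inputData);

    // Plays the role of getInputPaths(): here the "input" is just a list of lines.
    protected abstract List<String> getInputLines(Map<String, List<String>> inputData);

    // Plays the role of getMapper(): emit (key, value) pairs for one input line.
    protected abstract void map(String line, BiConsumer<String, String> emit);

    // Plays the role of getReducer(): fold all values of one key into one result.
    protected abstract String reduce(String key, List<String> values);

    // Plays the role of buildResults(): shape the reduced data for the WPS response.
    protected abstract Map<String, String> buildResults(Map<String, String> reduced);

    // The workflow the process developer never writes (final: cannot be overridden).
    public final Map<String, String> run(Map<String, List<String>> inputData) {
        prepareToRun(inputData);

        // "Map" phase, grouping values by key; TreeMap keeps keys sorted like the shuffle.
        Map<String, List<String>> grouped = new TreeMap<>();
        for (String line : getInputLines(inputData)) {
            map(line, (key, value) -> grouped.computeIfAbsent(key, k -> new ArrayList<>()).add(value));
        }

        // "Reduce" phase: one call per distinct key.
        Map<String, String> reduced = new TreeMap<>();
        for (Map.Entry<String, List<String>> entry : grouped.entrySet()) {
            reduced.put(entry.getKey(), reduce(entry.getKey(), entry.getValue()));
        }
        return buildResults(reduced);
    }
}

// Hypothetical example process: the classic word count.
class WordCountSketch extends SketchHadoopAlgorithm {

    @Override
    public void prepareToRun(Map<String, List<String>> inputData) {
        if (!inputData.containsKey("lines")) {
            throw new IllegalArgumentException("missing WPS input 'lines'");
        }
    }

    @Override
    protected List<String> getInputLines(Map<String, List<String>> inputData) {
        return inputData.get("lines");
    }

    @Override
    protected void map(String line, BiConsumer<String, String> emit) {
        for (String word : line.trim().split("\\s+")) {
            if (!word.isEmpty()) {
                emit.accept(word, "1");
            }
        }
    }

    @Override
    protected String reduce(String key, List<String> values) {
        return Integer.toString(values.size());
    }

    @Override
    protected Map<String, String> buildResults(Map<String, String> reduced) {
        return reduced;
    }
}
```

With this sketch, `new WordCountSketch().run(Map.of("lines", List.of("a b a", "b c")))` returns `{a=2, b=2, c=1}`. A missing `lines` input is rejected in prepareToRun before any work starts, mirroring the input-validation role described above.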

Deploy custom process

WPS-hadoop is deployed in a Tomcat container.

In order to deploy the recently developed process, you need to:

- Export the process as a jar file.

- Copy the exported jar into the WEB-INF/lib directory.

- Restart Tomcat.

- Next, we need to register the newly created algorithm:

- Go to http://localhost:yourport/wps/, e.g. http://localhost:8080/wps/.

- Click on the 52n WPSAdmin console.

- Login with:

- Username: wps

- Password: wps

- The Web Admin Console lets you change the basic configuration of the WPS and upload processes.

- Click on Algorithm Repository --> Properties (the '+' sign).

- Click on the green '+' to register your process. Type Algorithm in the left field, and in the right field type the fully qualified class name of your class (i.e. package + class name, e.g. org.n52.wps.demo.ConvexHullDemo).

- Click on the save icon (the 'disk').

- Next, click 'Save and Activate configuration' at the top left.

- Your new process is now available. Test it under http://localhost:yourport/wps/WebProcessingService?Request=GetCapabilities&Service=WPS or directly at http://localhost:yourport/wps/test.html.

N.B. As an alternative to step 1, you can first follow steps 4, 5 and 6, then click on Upload Process and pick the .java file of your newly developed process. Then continue from step 2 onwards.
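The final check can also be scripted. The Java sketch below (Java 11+, JDK only) fetches the GetCapabilities document and looks for the registered class name among the wps:Process entries. CapabilitiesCheck and its methods are hypothetical names. The element names assume the WPS 1.0.0 Capabilities schema (wps:ProcessOfferings/wps:Process/ows:Identifier).

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Node;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

// Hypothetical helper: checks whether a WPS 1.0.0 GetCapabilities response
// lists a process, i.e. whether registration in the admin console worked.
final class CapabilitiesCheck {
    static final String WPS_NS = "http://www.opengis.net/wps/1.0.0";
    static final String OWS_NS = "http://www.opengis.net/ows/1.1";

    private CapabilitiesCheck() {}

    // Fetches a URL with HTTP GET (Java 11+ java.net.http) and returns the body.
    static String fetch(String url) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(url)).GET().build();
        return HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
    }

    // True if some wps:Process has a direct ows:Identifier child equal to identifier.
    static boolean offersProcess(String capabilitiesXml, String identifier) {
        Document doc;
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true); // needed for the *NS lookups below
            doc = factory.newDocumentBuilder().parse(
                    new ByteArrayInputStream(capabilitiesXml.getBytes(StandardCharsets.UTF_8)));
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("not a parsable XML document", e);
        }
        NodeList processes = doc.getElementsByTagNameNS(WPS_NS, "Process");
        for (int i = 0; i < processes.getLength(); i++) {
            for (Node child = processes.item(i).getFirstChild(); child != null; child = child.getNextSibling()) {
                if (child.getNodeType() == Node.ELEMENT_NODE
                        && OWS_NS.equals(child.getNamespaceURI())
                        && "Identifier".equals(child.getLocalName())
                        && identifier.equals(child.getTextContent().trim())) {
                    return true;
                }
            }
        }
        return false;
    }
}
```

For example, consider `offersProcess(fetch("http://localhost:8080/wps/WebProcessingService?Request=GetCapabilities&Service=WPS"), "org.n52.wps.demo.ConvexHullDemo")`. It should return true once that process has been registered and the configuration activated.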

Some complete examples

The Bathymetry Algorithm

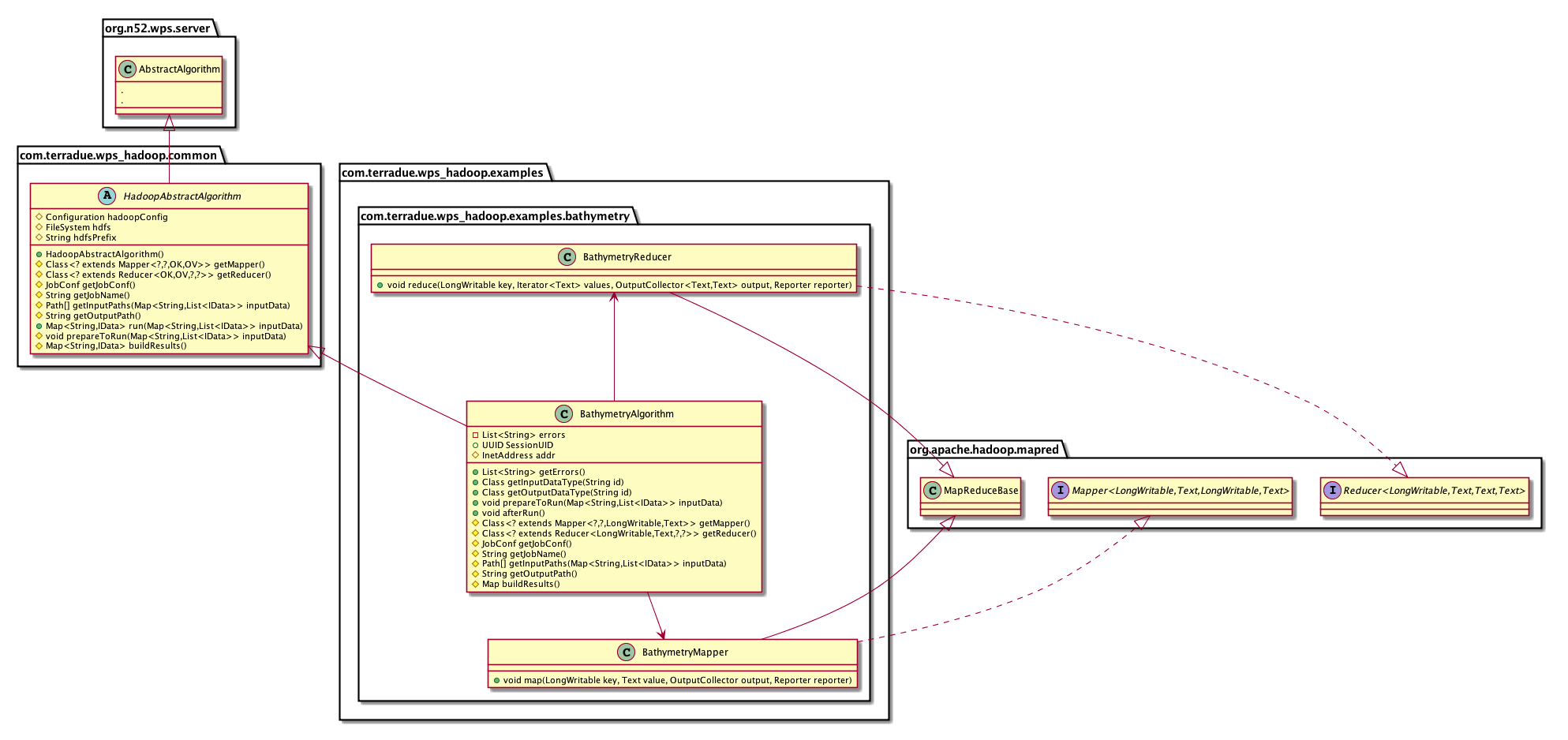

Here is the complete description of the use of WPS-hadoop library, through the example of Bathymetry retrieving from a netCDF file.

Class Diagram

BathymetryAlgorithm.xml

This file must have exactly the same name as the .java file (apart from the extension).

    <?xml version="1.0" encoding="UTF-8"?>
    <wps:ProcessDescriptions xmlns:wps="http://www.opengis.net/wps/1.0.0"
        xmlns:ows="http://www.opengis.net/ows/1.1"
        xmlns:xlink="http://www.w3.org/1999/xlink"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://www.opengis.net/wps/1.0.0 http://geoserver.itc.nl:8080/wps/schemas/wps/1.0.0/wpsDescribeProcess_response.xsd"
        xml:lang="en-US" service="WPS" version="1.0.0">
      <ProcessDescription wps:processVersion="1.0.0" storeSupported="true" statusSupported="false">
        <ows:Identifier>com.terradue.wps.BathymetryAlgorithm</ows:Identifier>
        <ows:Title>Bathymetry Algorithm</ows:Title>
        <ows:Abstract>by Hadoop</ows:Abstract>
        <ows:Metadata xlink:title="Bathymetry" />
        <DataInputs>
          <Input minOccurs="1" maxOccurs="1">
            <ows:Identifier>InputFile</ows:Identifier>
            <ows:Title>InputFile</ows:Title>
            <ows:Abstract>URL to a file containing x,y parameters</ows:Abstract>
            <LiteralData>
              <ows:DataType ows:reference="xs:string"></ows:DataType>
              <ows:AnyValue/>
            </LiteralData>
          </Input>
        </DataInputs>
        <ProcessOutputs>
          <Output>
            <ows:Identifier>result</ows:Identifier>
            <ows:Title>result</ows:Title>
            <ows:Abstract>result</ows:Abstract>
            <LiteralOutput>
              <ows:DataType ows:reference="xs:string"/>
            </LiteralOutput>
          </Output>
        </ProcessOutputs>
      </ProcessDescription>
    </wps:ProcessDescriptions>
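The naming rule matters because the description is located from the class name. Below is a minimal Java sketch of that lookup. It assumes the WPS resolves the descriptor as a classpath resource next to the compiled class, so check this against your 52°North version. DescriptorLookup is a hypothetical helper. Under this assumption, BathymetryAlgorithm.xml also has to be packaged in the exported jar, in the same package directory as BathymetryAlgorithm.class.

```java
import java.io.InputStream;

// Hypothetical helper illustrating why the .xml must share the class's name:
// the description is ASSUMED to be looked up as a classpath resource derived
// from the fully qualified class name.
final class DescriptorLookup {

    private DescriptorLookup() {}

    // e.g. com.terradue.wps.BathymetryAlgorithm -> /com/terradue/wps/BathymetryAlgorithm.xml
    static String descriptorResourceName(Class<?> algorithmClass) {
        return "/" + algorithmClass.getName().replace('.', '/') + ".xml";
    }

    // Opens the bundled description, or returns null when no such resource is on the classpath.
    static InputStream openDescriptor(Class<?> algorithmClass) {
        return algorithmClass.getResourceAsStream(descriptorResourceName(algorithmClass));
    }
}
```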

Requests examples

This is an example of how to request the execution of the BathymetryAlgorithm.

XML request example

    <?xml version="1.0" encoding="UTF-8" standalone="yes"?>
    <wps:Execute service="WPS" version="1.0.0"
        xmlns:wps="http://www.opengis.net/wps/1.0.0"
        xmlns:ows="http://www.opengis.net/ows/1.1"
        xmlns:xlink="http://www.w3.org/1999/xlink"
        xmlns:xsi="http://www.w3.org/2001/XMLSchema-instance"
        xsi:schemaLocation="http://www.opengis.net/wps/1.0.0 http://schemas.opengis.net/wps/1.0.0/wpsExecute_request.xsd">
      <ows:Identifier>com.terradue.wps.BathymetryAlgorithm</ows:Identifier>
      <wps:DataInputs>
        <wps:Input>
          <ows:Identifier>InputFile</ows:Identifier>
          <ows:Title>Input file for Bathymetry</ows:Title>
          <wps:Data>
            <wps:LiteralData>http://t2-10-11-30-97.play.terradue.int:8888/wps/maps/coordinates</wps:LiteralData>
          </wps:Data>
        </wps:Input>
      </wps:DataInputs>
      <wps:ResponseForm>
        <wps:ResponseDocument storeExecuteResponse="false">
          <wps:Output asReference="false">
            <ows:Identifier>result</ows:Identifier>
          </wps:Output>
        </wps:ResponseDocument>
      </wps:ResponseForm>
    </wps:Execute>
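This request document is sent with HTTP POST. It asks for storeExecuteResponse="false" and asReference="false", so the WPS 1.0.0 ExecuteResponse should carry the result inline as wps:Data/wps:LiteralData. The Java sketch below (Java 11+, JDK only) posts the document and extracts that literal. ExecutePost and its methods are hypothetical names. The endpoint is whatever WebProcessingService URL your deployment exposes.

```java
import java.io.ByteArrayInputStream;
import java.io.IOException;
import java.net.URI;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;
import javax.xml.parsers.DocumentBuilderFactory;
import javax.xml.parsers.ParserConfigurationException;
import org.w3c.dom.Document;
import org.w3c.dom.Element;
import org.w3c.dom.NodeList;
import org.xml.sax.SAXException;

// Hypothetical client for the XML (HTTP POST) form of Execute.
final class ExecutePost {
    static final String WPS_NS = "http://www.opengis.net/wps/1.0.0";
    static final String OWS_NS = "http://www.opengis.net/ows/1.1";

    private ExecutePost() {}

    // POSTs an Execute request document to the WPS endpoint and returns the response body.
    static String post(String endpoint, String executeXml) throws IOException, InterruptedException {
        HttpRequest request = HttpRequest.newBuilder(URI.create(endpoint))
                .header("Content-Type", "text/xml; charset=UTF-8")
                .POST(HttpRequest.BodyPublishers.ofString(executeXml, StandardCharsets.UTF_8))
                .build();
        return HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString())
                .body();
    }

    // Returns the wps:LiteralData text of the wps:Output with the given ows:Identifier,
    // or null if the ExecuteResponse carries no such literal output.
    static String literalOutput(String executeResponseXml, String outputId) {
        Document doc;
        try {
            DocumentBuilderFactory factory = DocumentBuilderFactory.newInstance();
            factory.setNamespaceAware(true);
            doc = factory.newDocumentBuilder().parse(
                    new ByteArrayInputStream(executeResponseXml.getBytes(StandardCharsets.UTF_8)));
        } catch (ParserConfigurationException | SAXException | IOException e) {
            throw new IllegalArgumentException("not a parsable ExecuteResponse", e);
        }
        NodeList outputs = doc.getElementsByTagNameNS(WPS_NS, "Output");
        for (int i = 0; i < outputs.getLength(); i++) {
            Element output = (Element) outputs.item(i);
            NodeList ids = output.getElementsByTagNameNS(OWS_NS, "Identifier");
            NodeList literals = output.getElementsByTagNameNS(WPS_NS, "LiteralData");
            // Skip entries without data (e.g. output definitions that only repeat the identifier).
            if (ids.getLength() > 0 && literals.getLength() > 0
                    && outputId.equals(ids.item(0).getTextContent().trim())) {
                return literals.item(0).getTextContent();
            }
        }
        return null;
    }
}
```

Typical use is `literalOutput(post(endpoint, executeXml), "result")`, where executeXml holds the request document above as a String.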

KVP (Key Value Pairs) request example

http://t2-10-11-30-97.play.terradue.int:8888/wps/WebProcessingService?Request=Execute&service=WPS&version=1.0.0&language=en-CA&Identifier=com.terradue.wps_hadoop.examples.bathymetry.BathymetryAlgorithm&DataInputs=InputFile=http://t2-10-11-30-97.play.terradue.int:8888/wps/maps/coordinates
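The KVP form can be generated rather than typed by hand. Below is a minimal Java sketch (Java 10+). ExecuteKvp is hypothetical, and it assumes the server URL-decodes the query string once before splitting DataInputs on ';' and '='. The Identifier must be the fully qualified class name registered in the Algorithm Repository.

```java
import java.net.URLEncoder;
import java.nio.charset.StandardCharsets;
import java.util.Map;
import java.util.StringJoiner;

// Hypothetical helper: builds a WPS 1.0.0 Execute request in KVP (HTTP GET) form.
final class ExecuteKvp {

    private ExecuteKvp() {}

    // URL-encodes one name or value as UTF-8 (requires Java 10+).
    private static String enc(String s) {
        return URLEncoder.encode(s, StandardCharsets.UTF_8);
    }

    // Inputs become DataInputs=name1=value1;name2=value2. Each name and value is
    // URL-encoded so that characters such as '&' or '#' inside an input URL cannot
    // end the query parameter early; the server is assumed to decode it once.
    static String build(String endpoint, String identifier, Map<String, String> inputs) {
        StringJoiner dataInputs = new StringJoiner(";");
        for (Map.Entry<String, String> input : inputs.entrySet()) {
            dataInputs.add(enc(input.getKey()) + "=" + enc(input.getValue()));
        }
        return endpoint + "?Request=Execute&service=WPS&version=1.0.0"
                + "&Identifier=" + enc(identifier)
                + "&DataInputs=" + dataInputs;
    }
}
```

Encoding the value means an input URL such as http://example.org/wps/maps/coordinates is sent as http%3A%2F%2Fexample.org%2Fwps%2Fmaps%2Fcoordinates.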