Monitoring a gCube infrastructure With Nagios

Overview

Nagios [1] is a popular open source application for computer system, network and infrastructure monitoring. Nagios offers complete monitoring and alerting for servers, switches, applications, and services, and is widely considered the de facto industry standard in IT infrastructure monitoring.

Nagios components

Nagios is composed of two main components: the Nagios Server and the Nagios plugins.

Nagios Server

A Nagios server is an application that runs tests against the monitored hosts distributed across the infrastructure. It offers a powerful web interface that administrators can use to visualize and configure test executions.

The installation instructions for Ubuntu, Fedora and openSUSE can be found at [2].

Nagios Plugins

Nagios plugins are applications that can be executed by the Nagios server or directly on the monitored host. When plugins are executed on the monitored host, the Nagios server can use several methods to retrieve the monitoring test results. This capability is available through three different Nagios addons:

- NRPE, which allows Nagios plugins to be executed remotely on other Linux/Unix machines. This makes it possible to monitor remote machine metrics (disk usage, CPU load, etc.).

- NRDP, a flexible data transport mechanism and processor for Nagios. It is designed with a simple and powerful architecture that allows it to be easily extended and customized to fit individual users' needs. It uses standard ports and protocols (HTTP(S) and XML).

- NSCA, which allows passive alerts and checks from remote machines and applications to be integrated with Nagios. It is useful for processing security alerts, as well as for deploying redundant and distributed Nagios setups.

At the moment the Nagios monitoring plugins in gCube are executed directly by the Nagios server, so none of the methods described above is currently exploited. The usage of an NRPE daemon on each node of the infrastructure is currently under investigation.

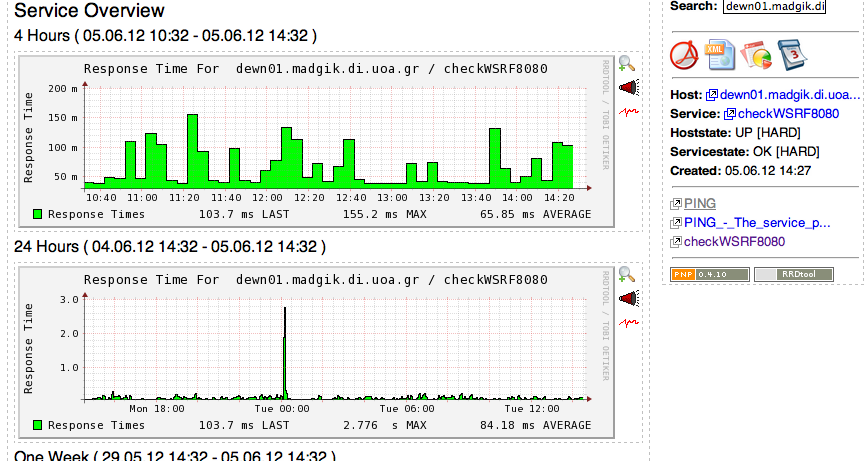

PNP4Nagios

PNP is an extension for Nagios that plots the performance data provided by the probes as long as they follow the Nagios plug-in development guidelines.
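
As an illustration of the performance data format that PNP relies on, the following minimal Python sketch parses the part of a plugin output line that follows the `|` separator, using the `label=value[UOM];warn;crit;min;max` layout described in the Nagios plug-in development guidelines. The function name `parse_perfdata` and the sample output line are assumptions made for this example; they are not part of pnp4nagios.

```python
import re

# One perfdata item: label=value[UOM];[warn];[crit];[min];[max]
# Labels containing spaces are wrapped in single quotes.
_ITEM = re.compile(r"(?:'([^']+)'|([^\s=']+))=(\S+)")
_VALUE = re.compile(r"^(-?[0-9.]+)([^0-9;]*)$")


def parse_perfdata(plugin_output):
    """Return a list of dicts, one per perfdata item found in the output line."""
    if "|" not in plugin_output:
        return []  # the plugin did not emit any performance data
    perfdata = plugin_output.split("|", 1)[1]
    items = []
    for quoted, bare, rest in _ITEM.findall(perfdata):
        fields = rest.split(";")
        match = _VALUE.match(fields[0])
        if match is None:
            continue  # non-numeric value (e.g. "U"), skipped in this sketch
        # Pad so that warn/crit/min/max are always present (possibly empty).
        fields += [""] * (5 - len(fields))
        items.append({
            "label": quoted or bare,
            "value": float(match.group(1)),
            "uom": match.group(2),
            "warn": fields[1],
            "crit": fields[2],
            "min": fields[3],
            "max": fields[4],
        })
    return items


if __name__ == "__main__":
    # Hypothetical plugin output, used only to exercise the parser.
    sample = "SWAP OK - 98% free | swap=2000MB;400;200;0;2047 'load 1'=0.10;5;10;0"
    for item in parse_perfdata(sample):
        print(item)
```

A probe whose output does not follow this layout produces no usable items, which is why such probes are not plotted.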

Installation and configuration

In this document the version available in the EPEL repositories (0.4) is used. pnp4nagios already provides some documentation for version 0.4, but since it is not entirely clear, all steps are detailed here. Manual installation of the pnp4nagios and php packages is needed as well.

Configuring RRD

In nagios.cfg, you have to set the following parameters:

process_performance_data=1

enable_environment_macros=1

service_perfdata_command=process-service-perfdata

These settings, respectively:

- Enable the processing of performance data

- Enable the passing of environment variables (only for Nagios 3.x)

- Specify the command used to process the service performance data

process-service-perfdata is already defined under /etc/nagios/objects/commands.cfg (or a similarly named file), but the default definition has to be changed as follows:

define command {

command_name process-service-perfdata

command_line /usr/bin/perl /usr/libexec/pnp4nagios/process_perfdata.pl

}

Once these modifications are done, restart Nagios.

# service nagios restart

Link between Nagios and pnp4nagios

Make sure that this line

action_url /nagios/html/pnp4nagios/index.php?host=$HOSTNAME$&srv=$SERVICEDESC$

is present in the generic-service.cfg definition. Reload Nagios and you will see a small star linking to the graph next to each service, and in the detailed view as well.

Hosts & Services Monitored in gCube

The services and hosts monitored in a gCube infrastructure fall mainly into three categories:

- GHN nodes - The nodes hosting a gCore container

- UMD nodes - The nodes hosting UMD services

- gCube Runtime Resources - The nodes hosting third party services needed at runtime by other gCube Services.

TO BE COMPLETED

GHN monitoring

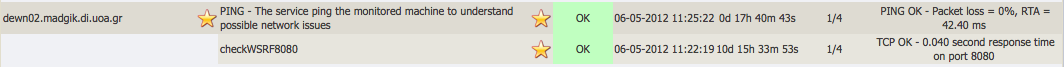

GHN nodes and the gCore containers running on them are monitored by two services:

- PING service

- checkWSRF<port>

The PING service simply pings the monitored host to detect possible network issues or host outages. The checkWSRF<port> service, on the other hand, tries to open a socket connection to the container port (which must be publicly accessible) to check whether the gCore container is up and running.
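
The socket test performed by the checkWSRF<port> service can be sketched in a few lines of Python. This is only an illustration of the idea (open a TCP connection and report a Nagios-style state), not the check_tcp plugin actually used by the gCube configuration; the function name `check_tcp_port` and the command-line usage are assumptions of this example.

```python
import socket
import sys

OK, CRITICAL = 0, 2  # standard Nagios plugin exit codes


def check_tcp_port(host, port, timeout=5.0):
    """Try to open a TCP connection; return (exit_code, message)."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return OK, "TCP OK - port %d on %s is reachable" % (port, host)
    except OSError as exc:
        # Covers refused connections, timeouts and name resolution errors.
        return CRITICAL, "TCP CRITICAL - port %d on %s: %s" % (port, host, exc)


if __name__ == "__main__" and len(sys.argv) == 3:
    # Hypothetical usage: python check_port.py nodexx.domain <port>
    code, message = check_tcp_port(sys.argv[1], int(sys.argv[2]))
    print(message)
    sys.exit(code)
```

A successful connection only shows that something is listening on the port; it does not prove that the services deployed in the container are healthy.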

NRPE plugin installation and configuration

Installing NRPE on each infrastructure node, together with nagios-plugins, allows the Nagios server to retrieve monitoring information. At the moment the following monitoring plugins are going to be configured for each infrastructure node:

- nrpe-disk - checking the disk usage of the partition where GHNs/Runtime Resources are installed

- nrpe-load - checking load of the node

- nrpe-totalproc - checking the total number of processes of the node

- nrpe-swap - checking the node swap partition.

Installation

The NRPE daemon running on each infrastructure node needs to be contacted by the Nagios server. For this reason the following port needs to be open on each node where the NRPE daemon is going to be installed:

- 5666/tcp

SL4

The installation of the Nagios NRPE plugin on this version of the OS is available through the DAG repository, which comes preinstalled.

If it is not enabled by default on the system, please enable it before starting the installation.

The installation can be performed as follows:

yum install nagios-nrpe

To start the plugin:

- service nrpe start

SL5 64 bit

The installation of the Nagios NRPE plugin on this version of the OS is available through the EPEL repository.

In order to configure the EPEL repository on the node, please check the EPEL web site [3].

Once the EPEL repo has been properly configured, the installation can be performed as follows:

yum install nagios-nrpe nagios-plugins-all

To start the plugin:

- service nrpe start

CentOS 64 bit

The installation of the Nagios NRPE plugin on this version of the OS is available through the DAG repository.

- rpm -Uhv http://apt.sw.be/redhat/el5/en/x86_64/rpmforge/RPMS/rpmforge-release-0.3.6-1.el5.rf.x86_64.rpm

Once the repo files are installed, the installation is as follows:

- sudo yum install nagios-nrpe.x86_64 nagios-plugins-nrpe.x86_64

- chkconfig nrpe on

To start the plugin:

- service nrpe restart

Ubuntu/Debian

The installation can be performed as follows:

- sudo apt-get install nagios-nrpe-server

N.B. On older versions of these OSs the nagios-plugins package should also be installed, since it is not a dependency of nagios-nrpe-server.

The nagios-nrpe-server service can then be started:

- service nagios-nrpe-server start

xinetd configuration

The NRPE daemon can also be configured through xinetd:

- yum install xinetd / apt-get install xinetd

- adduser nagios

- Configure /etc/xinetd.d/nrpe :

# description: NRPE (Nagios Remote Plugin Executor)

service nrpe

{

flags = REUSE

type = UNLISTED

port = 5666

socket_type = stream

wait = no

user = nagios

group = nagios

server = /usr/sbin/nrpe

server_args = -c /etc/nagios/nrpe.cfg --inetd

log_on_failure += USERID

disable = no

only_from = 127.0.0.1 128.142.164.84

}

- chkconfig xinetd on

- service xinetd restart

- add the nrpe entry to /etc/services:

# Local services

nrpe 5666/tcp # NRPE

- chkconfig nrpe on

- service nrpe restart

Base plugins Configuration

The configuration of the plugin needs to be performed as follows (for SL5, CentOS, Ubuntu, Debian):

- edit the file /etc/nagios/nrpe.cfg

- allowed_hosts=128.142.164.84

- to modify: command[check_hda1]=/usr/lib[64]/nagios/plugins/check_disk -w 10% -c 5% -p </dev/sda1> ( you need to configure it with the partition where GHN is installed)

- to add: command[check_swap]=/usr/lib[64]/nagios/plugins/check_swap -w 20% -c 10%

Please note that depending on the architecture the plugins are installed either under /usr/lib or /usr/lib64 folder.

for SL4 instead:

- edit the file /etc/nagios/nrpe.cfg

- allowed_hosts=128.142.164.84

- to modify: command[check_hda1]=/usr/lib/nagios/plugins/check_disk -w 10% -c 5% -p </dev/sda1> ( you need to configure it with the partition where GHN is installed)

- to add: command[check_swap]=/usr/lib/nagios/plugins/check_swap -w 20% -c 10%

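To apply the changes, reload the daemon (the service is named nrpe on SL and CentOS, nagios-nrpe-server on Ubuntu/Debian):

- service nrpe reload

- service nagios-nrpe-server reload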
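
To make the meaning of the `-w 10% -c 5%` thresholds in the check_disk command concrete, here is a minimal Python sketch that maps the percentage of free space on a partition to a Nagios state. It is an approximation for illustration only: the real check_disk plugin computes free space in its own way, and the function names used here are assumptions of this example.

```python
import shutil

OK, WARNING, CRITICAL = 0, 1, 2  # standard Nagios plugin exit codes


def classify_free_space(free_percent, warn_percent=10.0, crit_percent=5.0):
    """Map a free-space percentage to a state, in the spirit of -w 10% -c 5%."""
    if free_percent < crit_percent:
        return CRITICAL
    if free_percent < warn_percent:
        return WARNING
    return OK


def check_partition(path):
    """Return (state, free_percent) for the filesystem that contains path."""
    usage = shutil.disk_usage(path)
    free_percent = 100.0 * usage.free / usage.total
    return classify_free_space(free_percent), free_percent


if __name__ == "__main__":
    # "/" is only a placeholder; use the partition where the GHN is installed.
    state, free_percent = check_partition("/")
    print("state=%d free=%.1f%%" % (state, free_percent))
```

With these defaults the state becomes WARNING when less than 10% of the partition is free and CRITICAL when less than 5% is free.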

Nagios Configuration for gCube

As already mentioned, the current Nagios monitoring architecture in gCube does not require the installation of plugins on the monitored machines. The tests are executed only by the Nagios server, with some configuration to be applied for each monitored service/host.

Base Configuration

Nagios configuration is stored in the so-called 'object configuration files'. These files contain the definitions of hosts, host groups, contacts, services, etc. Object definitions can be split across several config files, which have to be declared inside /etc/nagios/nagios.cfg as follows:

cfg_file=/etc/nagios/objects/myobjects.cfg

If configuration files are stored in a dedicated folder, the whole folder can be declared in the configuration:

cfg_dir=/etc/nagios/objects/myobjectsfolder

Given that, we prepared a base configuration for a gCube infrastructure that can be checked out from [4].

In detail, the following two configuration files, which group specific hosts and services, need to be installed under /etc/nagios/objects:

servicegroups.cfg

define servicegroup{

servicegroup_name mysql

alias MYSQL Database Services

}

define servicegroup{

servicegroup_name psql

alias PSQL Database Services

}

define servicegroup{

servicegroup_name ghn

alias ghn hosting node

}

define servicegroup{

servicegroup_name message broker

alias message broker

}

define servicegroup{

servicegroup_name umd service

alias umd service

}

and hostgroups.cfg

define hostgroup{

hostgroup_name GHN

alias gCube Hosting Node

}

define hostgroup{

hostgroup_name gCube Runtime Resource

alias gCube Runtime Resource

}

define hostgroup{

hostgroup_name UMD node

alias UMD node

}

Both files need to be included in the Nagios configuration (/etc/nagios/nagios.cfg) as follows:

cfg_file=/etc/nagios/objects/hostgroups.cfg

cfg_file=/etc/nagios/objects/servicegroups.cfg

GHN monitoring plugins

From the same svn location [5], the base configuration files for GHNs monitoring are available.

For each monitored GHN the following host object needs to be created inside the /etc/nagios/objects/gcube-hosts folder:

define host {

use linux-server

host_name nodexx.domain

alias nodexx.domain

address xx.xx.xx.xx

hostgroups GHN

}

For each monitored GHN, a service object also needs to be configured inside the /etc/nagios/objects/gcube-services folder, corresponding to the container running on the host and its <port> parameter (multiple containers can run on a single host, in which case multiple services need to be configured):

define service{

use local-service

host_name nodexx.domain

service_description checkWSRF<port>

check_command check_tcp!<port>

servicegroups ghn

notifications_enabled 1

}

Both folders have to be included in the Nagios configuration as follows:

cfg_dir=/etc/nagios/objects/gcube-hosts

cfg_dir=/etc/nagios/objects/gcube-services
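
Since one host object and one service object per container port have to be written for every GHN, the definitions can be generated rather than typed by hand. The following Python sketch renders them from templates that mirror the host and checkWSRF<port> objects shown above. The function name `render_ghn`, the sample host name, the address and the ports are hypothetical and serve only as an example.

```python
# Templates mirroring the host and service objects shown above.
HOST_TEMPLATE = """define host {{
    use        linux-server
    host_name  {name}
    alias      {name}
    address    {address}
    hostgroups GHN
}}
"""

SERVICE_TEMPLATE = """define service {{
    use                   local-service
    host_name             {name}
    service_description   checkWSRF{port}
    check_command         check_tcp!{port}
    servicegroups         ghn
    notifications_enabled 1
}}
"""


def render_ghn(name, address, ports):
    """Return (host_cfg, services_cfg) for one GHN and its container ports."""
    host_cfg = HOST_TEMPLATE.format(name=name, address=address)
    services_cfg = "".join(
        SERVICE_TEMPLATE.format(name=name, port=port) for port in ports
    )
    return host_cfg, services_cfg


if __name__ == "__main__":
    # Hypothetical node running two containers.
    host_cfg, services_cfg = render_ghn("node01.example.org", "192.0.2.10", [8080, 8081])
    print(host_cfg)
    print(services_cfg)
```

The two returned strings would be written to files under the gcube-hosts and gcube-services folders respectively.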

In addition, the following PING service is defined and applied to every type of node:

define service{

use local-service

hostgroup_name GHN, UMD node, gCube Runtime Resource

service_description PING - The service ping the monitored machine to understand possible network issues

check_command check_ping!100.0,20%!500.0,60%

}

Once configured, the Nagios configuration needs to be reloaded by typing:

sudo service nagios reload
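
The two arguments passed to check_ping in the PING service definition (`100.0,20%` and `500.0,60%`) are the warning and critical thresholds, each expressed as a round trip average in milliseconds followed by a packet loss percentage. The Python sketch below shows how such a pair of thresholds can be turned into a state. It only illustrates the threshold logic, under the assumption that a threshold is breached when either value is reached or exceeded; it does not send any packets, and the function names are made up for this example.

```python
OK, WARNING, CRITICAL = 0, 1, 2  # standard Nagios plugin exit codes


def parse_threshold(text):
    """Parse '<rta>,<pl>%' (for example '100.0,20%') into (rta_ms, loss_percent)."""
    rta_text, loss_text = text.split(",")
    return float(rta_text), float(loss_text.rstrip("%"))


def classify_ping(rta_ms, loss_percent, warning="100.0,20%", critical="500.0,60%"):
    """Return the state for a measured round trip average and packet loss."""
    warn_rta, warn_loss = parse_threshold(warning)
    crit_rta, crit_loss = parse_threshold(critical)
    if rta_ms >= crit_rta or loss_percent >= crit_loss:
        return CRITICAL
    if rta_ms >= warn_rta or loss_percent >= warn_loss:
        return WARNING
    return OK


if __name__ == "__main__":
    # Hypothetical measurements: (round trip average in ms, packet loss in %).
    for rta_ms, loss in [(12.5, 0.0), (150.0, 0.0), (30.0, 80.0)]:
        print(rta_ms, loss, classify_ping(rta_ms, loss))
```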

Other services monitoring plugins

Tomcat plugin

The following plugin is used in order to monitor Tomcat availability, memory and threads:

The plugin is installed on the Nagios server node and requires the Tomcat manager to be configured on the Tomcat side.

The following command definition needs to be added to the commands.cfg file:

define command {

command_name check_tomcat

command_line /bin/bash -c "/usr/lib64/nagios/plugins/check_tomcat.pl -H $HOSTADDRESS$ -p $ARG1$ -l $ARG2$ -a $ARG3$ -t 5 -n ."

}

Mongo DB plugin

The following plugin is used in order to monitor MongoDB services:

https://github.com/mzupan/nagios-plugin-mongodb

On Nagios server side the following command definitions need to be included into the commands.cfg file:

define command {

command_name check_mongodb

command_line $USER1$/nagios-plugin-mongodb/check_mongodb.py -H $HOSTADDRESS$ -A $ARG1$ -P $ARG2$ -W $ARG3$ -C $ARG4$

}

define command {

command_name check_mongodb_database

command_line $USER1$/nagios-plugin-mongodb/check_mongodb.py -H $HOSTADDRESS$ -A $ARG1$ -P $ARG2$ -W $ARG3$ -C $ARG4$ -d $ARG5$

}

define command {

command_name check_mongodb_replicaset

command_line $USER1$/nagios-plugin-mongodb/check_mongodb.py -H $HOSTADDRESS$ -A $ARG1$ -P $ARG2$ -W $ARG3$ -C $ARG4$ -r $ARG5$

}

define command {

command_name check_mongodb_query

command_line $USER1$/nagios-plugin-mongodb/check_mongodb.py -H $HOSTADDRESS$ -A $ARG1$ -P $ARG2$ -W $ARG3$ -C $ARG4$ -q $ARG5$

}

Cassandra plugin

Cassandra monitoring is implemented through the check_jmx plugin.

Download

wget http://downloads.sourceforge.net/project/nagioscheckjmx/nagioscheckjmx/1.0/check_jmx.tar.gz

Untar:

tar -xvzf check_jmx.tar.gz

Move:

mv check_jmx /usr/local/libexec/nagios

Add this to your commands.cfg file:

define command {

command_name check_jmx

command_line $USER1$/check_jmx -U service:jmx:rmi:///jndi/rmi://$HOSTADDRESS$:8080/jmxrmi -O java.lang:type=Memory -A HeapMemoryUsage -K used -I HeapMemoryUsage -J used -vvvv -w 19327352832 -c 20401094656

}

Add this to your services.cfg file:

define service{

use local-service

hostgroup_name cas-servers

service_description JMX

check_command check_jmx

contact_groups admins

}

Couchbase plugin

The plugin is available from https://github.com/YakindanEgitim/nagios-plugin-couchbase/.

It enables plenty of checks over a couchbase service instance.

The installation is as follows:

Clone the repo under /usr/lib/nagios/plugins.

Add the following command definitions (more can be added if more in-depth monitoring needs to be implemented):

define command{

command_name cb_item_count

command_line $USER1$/nagios-plugin-couchbase/src/check_couchbase.py -u $ARG1$ -p $ARG2$ -I $HOSTADDRESS$ -P $ARG3$ -b $ARG4$ --item-count -W $ARG5$ -C $ARG6$

}

define command{

command_name cb_low_watermark

command_line $USER1$/nagios-plugin-couchbase/src/check_couchbase.py -u $ARG1$ -p $ARG2$ -I $HOSTADDRESS$ -P $ARG3$ -b $ARG4$ --low-watermark -W $ARG5$ -C $ARG6$

}

define command{

command_name cb_mem_used

command_line $USER1$/nagios-plugin-couchbase/src/check_couchbase.py -u $ARG1$ -p $ARG2$ -I $HOSTADDRESS$ -P $ARG3$ -b $ARG4$ --memory-used -W $ARG5$ -C $ARG6$

}

and the following service definition (to be repeated for each defined command):

define service {

hostgroup_name couchbase

service_description item_count

check_command cb_item_count!<username>!<pass>!<port>!<bucket name>!100000!1000000 ; warning at 100,000 items, critical at 1,000,000 items

}

ElasticSearch plugin

The Elasticsearch plugin can be found at https://github.com/saj/nagios-plugin-elasticsearch. It also requires the installation of a Python framework for Nagios, https://github.com/saj/pynagioscheck.

The installation can be performed as follows:

Clone both the nagios-plugin-elasticsearch and pynagioscheck repositories.

Copy the nagios-plugin-elasticsearch folder under /usr/lib/nagios/plugins and, into the same folder, the files pynagioscheck/nagioscheck.py and pynagioscheck/nagioscheck.pyc.

The following command definition should be added:

define command{

command_name check_elasticsearch_cluster

command_line $USER1$/nagios-plugin-elasticsearch/check_elasticsearch -H $HOSTADDRESS$ -p $ARG1$ -m 1

}

as well as the service definition

define service {

service_description check_elasticsearch_cluster

check_command check_elasticsearch_cluster!<port>

}

DB monitoring plugins

The plugins currently exploited in the infrastructure are the MySQL [6] and PostgreSQL [7] plugins. They are installed together with the Nagios server.

In order to properly configure the execution of these plugins, the following commands have to be defined in the configuration file /etc/nagios/objects/commands.cfg:

################################################################################

# MYSQL Commands

################################################################################

# command 'check_mysql_health'

define command{

command_name check_mysql_health

command_line <PATH>/check_mysql_health -H $HOSTADDRESS$ --user $ARG1$ --password $ARG2$ --mode $ARG3$

}

# command 'check_mysql_health_tresholds'

define command{

command_name check_mysql_health_tresholds

command_line <PATH>/check_mysql_health -H $HOSTADDRESS$ --user $ARG1$ --password $ARG2$ --mode $ARG3$ --warning $ARG4$ --critical $ARG5$

}

################################################################################

# PostgreSQL Commands

################################################################################

define command {

command_name check_postgres_size

command_line <PATH>/check_postgres.pl -H $HOSTADDRESS$ -u $ARG1$ -db $ARG2$ --action database_size -w $ARG3$ -c $ARG4$

}

define command {

command_name check_postgres_locks

command_line <PATH>/check_postgres.pl -H $HOSTADDRESS$ -u $ARG1$ -db $ARG2$ --action locks -w $ARG3$ -c $ARG4$

}

TO COMPLETE

Integration with gCube Information System

The configuration steps described above can be performed automatically by relying on the information published in the gCube Information System. The gCube Information System in fact stores information about:

- GHN hosts

- UMD hosts ( if BDII wrapper is deployed)

- Runtime Resources.

Therefore a first version of a client that generates the Nagios configuration by contacting the IS has been developed; it is available at [8].

LDAP configuration

The Nagios web interface can be configured in order to give access to Infrastructure Managers and Site admins by contacting an LDAP server.

The Apache configuration files need to be modified as follows:

/etc/httpd/conf.d/nagios.conf:

<Directory "/usr/lib64/nagios/cgi">

....

AuthBasicProvider ldap

AuthType Basic

AuthName "LDAP Authentication"

AuthzLDAPAuthoritative on

AuthLDAPURL "ldap://<your ldap url>" NONE

Require valid-user

...

</Directory>

<Directory "/usr/share/nagios">

....

AuthBasicProvider ldap

AuthType Basic

AuthName "LDAP Authentication"

AuthzLDAPAuthoritative on

AuthLDAPURL "ldap://<your ldap url>" NONE

Require valid-user

...

</Directory>

/etc/httpd/conf.d/pnp4nagios.conf:

<Directory "/usr/share/nagios/html/pnp4nagios">

.....

AuthBasicProvider ldap

AuthType Basic

AuthName "LDAP Authentication"

AuthzLDAPAuthoritative on

AuthLDAPURL "<your ldap url>" NONE

</Directory>